Reposted from a WeChat official account

Original link: https://mp.weixin.qq.com/s?__biz=Mzg4MjgxMjgyMg==&mid=2247486049&idx=1&sn=1d98375dcbb9d0d68e8733f2dd0a2d40&chksm=cf51b898f826318ead24e414144235cfd516af4abb71190aeca42b1082bd606df6973eb963f0#rd

OpenAI Self-Supervised Learning Notes

Video: https://www.youtube.com/watch?v=7l6fttRJzeU

Slides: https://nips.cc/media/neurips-2021/Slides/21895.pdf

Self-Supervised Learning: Self-Prediction and Contrastive Learning

- Self-Supervised Learning

  - a popular paradigm of representation learning

Outline

- Introduction: motivation, basic concepts, examples

- Early Work: looking into connections with older methods

- Methods

  - Self-prediction

  - Contrastive learning

  - (for each subsection, present the framework and categorization)

- Pretext tasks: a wide-ranging literature review

- Techniques: improving training efficiency

Introduction

What is self-supervised learning and why do we need it?

What is self-supervised learning?

- Self-supervised learning (SSL):

  - a special type of representation learning that enables learning good data representations from unlabelled datasets

- Motivation:

  - the idea of constructing supervised learning tasks out of unsupervised datasets

  - Why?

    ✅ Data labeling is expensive, so high-quality labeled datasets are limited

    ✅ Learning good representations makes it easier to transfer useful information to a variety of downstream tasks

    ⇒ e.g. few-shot learning / zero-shot transfer to new tasks

Self-supervised learning tasks are also known as pretext tasks

What’s Possible with Self-Supervised Learning?

Early Work

Precursors to recent self-supervised approaches

Early Work: Connecting the Dots

Some ideas:

Restricted Boltzmann Machines

- RBM:

  - a special case of a Markov random field

  - consists of visible units and hidden units

  - has a connection between every visible-hidden pair, but none within each group
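The bipartite structure described above can be made concrete with a small sketch. It assumes binary units and the standard RBM energy E(v, h) = -a·v - b·h - vᵀWh; the names `W`, `a`, `b`, `energy`, and `hidden_probs` are illustrative choices, not something taken from the slides. Because there are no hidden-hidden connections, each hidden unit's probability given the visible units can be computed independently.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def energy(v, h, W, a, b):
    """E(v, h) = -a.v - b.h - v^T W h, where W[i][j] links visible i to hidden j."""
    visible_term = sum(a_i * v_i for a_i, v_i in zip(a, v))
    hidden_term = sum(b_j * h_j for b_j, h_j in zip(b, h))
    # Only visible-hidden pairs contribute: no v-v or h-h terms exist.
    pair_term = sum(v[i] * W[i][j] * h[j]
                    for i in range(len(v)) for j in range(len(h)))
    return -(visible_term + hidden_term + pair_term)

def hidden_probs(v, W, b):
    """p(h_j = 1 | v) for each hidden unit; these factorize across j."""
    return [sigmoid(b[j] + sum(v[i] * W[i][j] for i in range(len(v))))
            for j in range(len(b))]

# Toy parameters (assumed values): 3 visible units, 2 hidden units.
W = [[0.5, -1.0], [0.0, 2.0], [1.5, 0.0]]
a = [0.0, 0.0, 0.0]
b = [0.0, -1.0]
v = [1, 0, 1]
probs = hidden_probs(v, W, b)
```

Flipping a single hidden unit changes the energy by exactly the pre-activation of that unit, which is why the conditional takes the sigmoid form used in `hidden_probs`.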

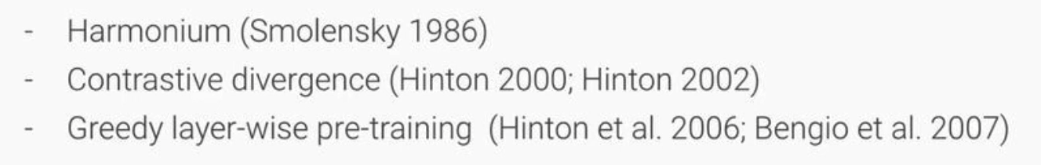

Autoencoder: Self-Supervised Learning for Vision in Early Days

- Autoencoder: a precursor to modern self-supervised approaches

  - such as the Denoising Autoencoder

- Has inspired many self-supervised approaches in later years

  - such as masked language models (e.g. BERT) and MAE
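What links the denoising autoencoder to later masked models is how the training pair is built: the input is a corrupted copy of the sample and the target is the clean sample itself. The sketch below shows only that data construction and the reconstruction loss. The masking noise, the `drop_prob` parameter, and the function names are assumptions for illustration, and no actual encoder or decoder is trained here.

```python
import random

def corrupt(x, drop_prob, rng):
    """Zero out each element independently with probability drop_prob."""
    return [0.0 if rng.random() < drop_prob else x_i for x_i in x]

def reconstruction_loss(x_hat, x):
    """Mean squared error between a reconstruction and the clean sample."""
    return sum((p - t) ** 2 for p, t in zip(x_hat, x)) / len(x)

rng = random.Random(0)
x = [0.2, 0.9, 0.4, 0.7]            # clean sample: this is the training target
x_noisy = corrupt(x, 0.5, rng)      # corrupted copy: this is the model input

# A real model would map x_noisy to a reconstruction; as a baseline,
# score the trivial "model" that returns its input unchanged.
loss_identity = reconstruction_loss(x_noisy, x)
```

No labels are needed at any point: the supervision signal is the sample itself.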

Word2Vec: Self-Supervised Learning for Language

- Word embeddings map words to vectors

  - extracting features of words

- idea:

  - the sum of the neighboring word embeddings is predictive of the word in the middle

- An interesting phenomenon resulting from Word2Vec:
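Returning to the core idea listed above (the summed neighbor embeddings predict the middle word), here is a minimal sketch of that forward pass. It assumes a CBOW-style setup with separate input and output embedding tables and a full softmax; the tiny vocabulary, the embedding values, and the name `cbow_probs` are made up for illustration.

```python
import math

def cbow_probs(context_ids, in_emb, out_emb):
    """Probability of each vocabulary word being the middle word."""
    dim = len(in_emb[0])
    # Sum the input embeddings of the neighboring words.
    summed = [sum(in_emb[c][k] for c in context_ids) for k in range(dim)]
    # Score every candidate middle word by a dot product with its output embedding.
    scores = [sum(s * w for s, w in zip(summed, out_vec)) for out_vec in out_emb]
    # Numerically stable softmax over the vocabulary.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

# Toy tables (assumed values): vocabulary of 4 words, 2-dimensional embeddings.
in_emb = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [-1.0, 0.0]]
out_emb = [[0.5, 0.5], [1.0, -1.0], [2.0, 2.0], [0.0, 0.0]]
probs = cbow_probs([0, 1], in_emb, out_emb)
predicted = probs.index(max(probs))
```

Training would adjust both tables so that the true middle word receives the highest probability; only the prediction step is shown here.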

Autoregressive Modeling

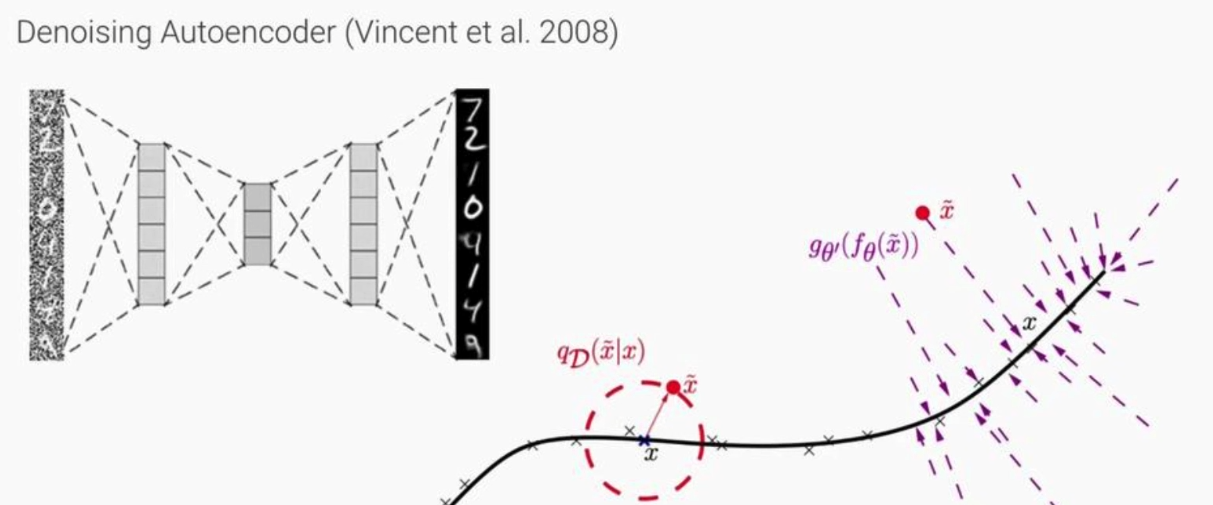
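For reference, autoregressive modeling factorizes the joint distribution of a sequence with the chain rule and trains each factor to predict the next element from the ones before it. The notation below (sequence length T, parameters θ) is introduced here for illustration and is not taken from the slides.

```latex
p_\theta(x_1, \dots, x_T) = \prod_{t=1}^{T} p_\theta(x_t \mid x_1, \dots, x_{t-1}),
\qquad
\mathcal{L}(\theta) = -\sum_{t=1}^{T} \log p_\theta(x_t \mid x_1, \dots, x_{t-1})
```

The prediction targets are simply later elements of the same sequence, so no external labels are required.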

Siamese Networks

Many contrastive self-supervised learning methods use a pair of neural networks and learn from the difference between their outputs; this idea can be traced back to Siamese Networks

- Self-organizing neural networks

  - where two neural networks take separate but related parts of the input and learn to maximize the agreement between their outputs

- Siamese Networks

  - if you believe that a network $f$ encodes $x$ well and yields a good representation $f(x)$

  - then, for two different inputs $x_1$ and $x_2$, their distance can be defined as $d(x_1, x_2) = L(f(x_1), f(x_2))$

  - the idea of running two identical CNNs on two different inputs and then comparing their outputs is a Siamese network

  - Train by:

    ✅ If $x_i$ and $x_j$ are the same person, $\|f(x_i) - f(x_j)\|$ is small

    ✅ If $x_i$ and $x_j$ are different people, $\|f(x_i) - f(x_j)\|$ is large
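One common way to turn the "small if same, large if different" rule into a loss is a margin-based contrastive loss. The notes do not say which loss was used, so the squared-distance and hinge form below, the `margin` parameter, and the function names are assumptions; the embeddings stand in for the outputs of the shared network f.

```python
import math

def distance(e1, e2):
    """Euclidean distance between two embeddings f(x_i) and f(x_j)."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(e1, e2)))

def pair_loss(e1, e2, same, margin=1.0):
    """Pull matching pairs together; push non-matching pairs beyond the margin."""
    d = distance(e1, e2)
    if same:
        return d ** 2                      # penalize any distance between matches
    return max(0.0, margin - d) ** 2       # penalize non-matches closer than margin

loss_same_far = pair_loss([0.0, 0.0], [3.0, 4.0], same=True)     # matches far apart
loss_diff_far = pair_loss([0.0, 0.0], [3.0, 4.0], same=False)    # already separated
loss_diff_near = pair_loss([0.0, 0.0], [0.0, 0.5], same=False)   # too close
```

The margin keeps the "different" term bounded: once two non-matching inputs are far enough apart, they stop contributing to the loss.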

Multiple Instance Learning & Metric Learning

Predecessors of the predecessors of recent contrastive learning techniques: multiple instance learning and metric learning

Methods

- Self-prediction

- Contrastive learning

Methods for Framing Self-Supervised Learning Tasks

- Self-prediction: given an individual data sample, the task is to predict one part of the sample given the other part

  - i.e. "intra-sample" prediction

  - We pretend that the part to be predicted is missing

- Contrastive learning: given multiple data samples, the task is to predict the relationship among them

  - relationship: can be based on the inner logic of the data

    ✅ such as different camera views of the same scene

    ✅ or multiple augmented versions of the same sample

  - The multiple samples can be selected from the dataset based on some known logic (e.g., the order of words / sentences), or fabricated by altering the original version

  - i.e. we know the true relationship between samples but pretend not to know it
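The two framings differ mainly in how training examples are manufactured from unlabeled data, which the following sketch tries to show side by side. Everything here is hypothetical scaffolding: the `[MASK]` placeholder, the `jitter` augmentation, and the pairing rule (next sample in the list as the negative, so at least two samples are assumed) are illustrative choices, not a method from the slides.

```python
import random

def self_prediction_example(tokens, hidden_positions, mask="[MASK]"):
    """Intra-sample task: hide part of one sample and keep it as the target."""
    inputs = [mask if i in hidden_positions else t for i, t in enumerate(tokens)]
    targets = {i: tokens[i] for i in hidden_positions}
    return inputs, targets

def jitter(x, rng, scale=0.01):
    """Hypothetical augmentation: add small uniform noise to each value."""
    return [v + rng.uniform(-scale, scale) for v in x]

def contrastive_pairs(samples, rng):
    """Inter-sample task: label 1 for two views of one sample, 0 otherwise."""
    pairs = []
    for i, x in enumerate(samples):
        pairs.append((jitter(x, rng), jitter(x, rng), 1))
        other = samples[(i + 1) % len(samples)]   # assumes len(samples) >= 2
        pairs.append((jitter(x, rng), jitter(other, rng), 0))
    return pairs

inputs, targets = self_prediction_example(["the", "cat", "sat"], {1})
pairs = contrastive_pairs([[0.0, 0.0], [1.0, 1.0]], random.Random(0))
labels = [label for _, _, label in pairs]
```

In both cases the "label" is known for free from how the example was built, which is exactly the sense in which the relationship is known but treated as unknown.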

Self-Prediction

Self-prediction: Autoregressive Generation

Self-Prediction: Masked Generation

Self-Prediction: Innate Relationship Prediction

Self-Prediction: Hybrid Self-Prediction Models

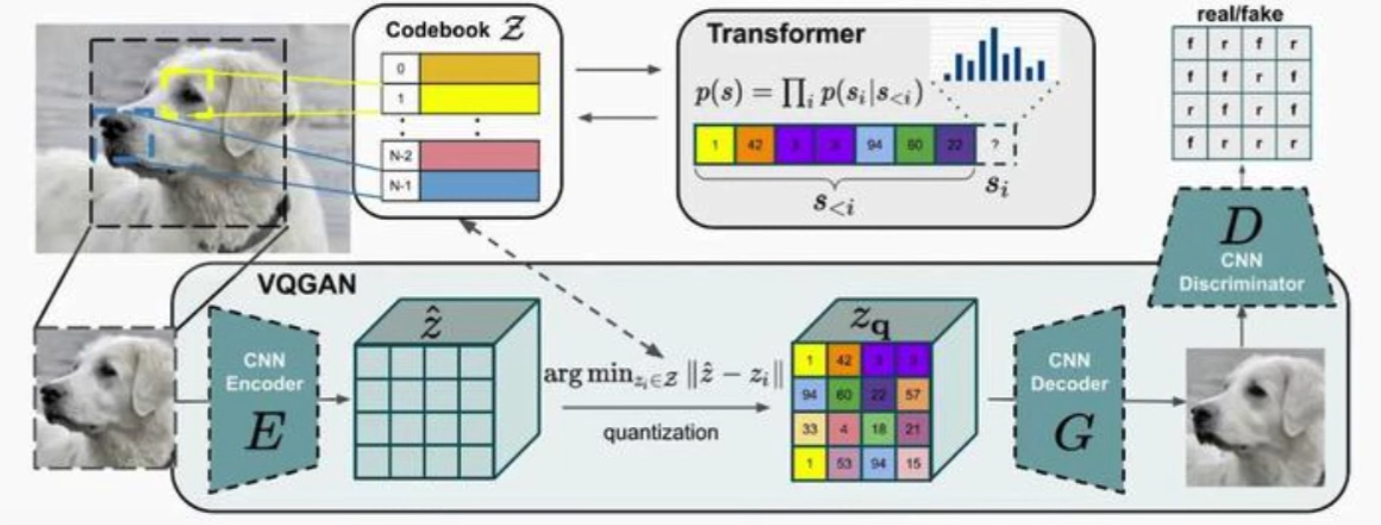

Hybrid Self-Prediction Models: combine different types of generative modeling

- VQ-VAE + AR

  - Jukebox (Dhariwal et al. 2020), DALL-E (Ramesh et al. 2021)

- VQ-VAE + AR + Adversarial

  - VQGAN (Esser & Rombach et al. 2021)

    - VQ-VAE: learns a discrete codebook of context-rich visual parts

    - A transformer model: trained to autoregressively model the combination of codes from this codebook
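The step that connects the two stages is vector quantization: each continuous encoder output is replaced by the index of its nearest codebook entry, and the resulting index sequence is what the autoregressive model is trained on. The sketch below shows only that lookup, assuming squared Euclidean distance; the codebook values, the encoder outputs, and the name `quantize` are made up for illustration, and codebook learning is not shown.

```python
def quantize(vectors, codebook):
    """Map each vector to the index of its nearest codebook entry."""
    def sq_dist(u, w):
        return sum((a - b) ** 2 for a, b in zip(u, w))
    return [min(range(len(codebook)), key=lambda k: sq_dist(v, codebook[k]))
            for v in vectors]

# Toy codebook with 3 entries and 3 encoder outputs (assumed values).
codebook = [[0.0, 0.0], [1.0, 1.0], [0.0, 1.0]]
encoder_outputs = [[0.1, -0.1], [0.9, 1.2], [0.2, 0.8]]

codes = quantize(encoder_outputs, codebook)
# The decoder would receive the selected codebook vectors, while the
# autoregressive prior is trained on the discrete sequence `codes`.
quantized = [codebook[k] for k in codes]
```

Working on discrete indices is what lets a sequence model such as a transformer treat image or audio patches the same way it treats tokens.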

Contrastive Learning
