| Hostname | IP address | Cluster role | Components |
|---|---|---|---|
| m1 | 192.168.11.187 | k8s1: master, k8s2: master | k8s1: etcd, apiserver, controller-manager, scheduler; k8s2: etcd, apiserver, controller-manager, scheduler |
| n1 | 192.168.11.188 | k8s1: node | k8s1: docker, kubelet, proxy |
| n2 | 192.168.11.189 | k8s2: node | k8s2: docker, kubelet, proxy |

Kubernetes version: v1.21.10, run as binaries

etcd version: 3.4.17, run as binaries

Docker version: 19.03.9, run as binaries

Calico version: 3.20.1, run as pods

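1. Deploy by domain name: components reach each other through host names, and the certificates are issued for those names.

2. Deploy two Kubernetes clusters: m1 and n1 form one cluster (k8s1), and m1 and n2 form the other (k8s2).

3. On this setup, test whether giving both clusters the same service cluster IP, 11.254.0.1, causes a conflict.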

systemctl stop firewalld

systemctl disable firewalld

sed -i 's/enforcing/disabled/' /etc/selinux/config # permanent

setenforce 0 # temporary

swapoff -a # temporary

sed -ri 's/.*swap.*/#&/' /etc/fstab # permanent

# systemctl disable NetworkManager

hostnamectl set-hostname <hostname>

# Show the hostname

hostname

# m1

cat >> /etc/hosts << EOF

192.168.11.187 m1.etcd

192.168.11.187 m1.apiserver

EOF

# n1

cat >> /etc/hosts << EOF

192.168.11.187 m1.etcd # calico-node connects to etcd

192.168.11.187 m1.apiserver # kubelet and kube-proxy connect to the apiserver

EOF

# n2

cat >> /etc/hosts << EOF

192.168.11.187 m1.etcd

192.168.11.187 m1.apiserver

EOF
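Because the certificates are issued for host names rather than IP addresses, every node has to resolve m1.etcd and m1.apiserver. A minimal sketch of a check, assuming bash and awk; check_hosts and the HOSTS_FILE override are hypothetical names introduced here, not part of any tool:

```bash
#!/usr/bin/env bash
# check_hosts IP NAME... : succeed only if every NAME is mapped to IP in the hosts file.
# HOSTS_FILE is a hypothetical override; it defaults to /etc/hosts.
check_hosts() {
  local ip=$1
  shift
  local file=${HOSTS_FILE:-/etc/hosts}
  local name rc=0
  for name in "$@"; do
    # Strip comments, then look for a line whose first field is IP and that lists NAME.
    if ! sed 's/#.*//' "$file" | awk -v ip="$ip" -v n="$name" '
        $1 == ip { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
        END { exit !found }'; then
      echo "missing: $ip $name" >&2
      rc=1
    fi
  done
  return "$rc"
}

# Usage on m1, n1 and n2:
#   check_hosts 192.168.11.187 m1.etcd m1.apiserver
```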

yum install -y chrony

vim /etc/chrony.conf

# Point chrony at the Aliyun NTP server by adding:

server time1.aliyun.com iburst

Start chronyd and synchronize the time:

systemctl enable chronyd && systemctl restart chronyd && sleep 5s && chronyc sources

# Install bash completion

yum install -y bash-completion

vim ~/.bashrc

# Add the following lines

export PATH=/usr/local/bin:$PATH

source <(kubectl completion bash)

# Reload the environment

source ~/.bashrc

modprobe overlay

modprobe br_netfilter

cat << EOF > /etc/sysctl.d/k8s.conf

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_forward = 1

user.max_user_namespaces=28633

EOF

Apply the settings:

sysctl --system # apply
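To confirm the parameters actually took effect, the live values can be read back from /proc/sys, where a key such as net.ipv4.ip_forward maps to /proc/sys/net/ipv4/ip_forward. The net.bridge.* keys only exist once br_netfilter is loaded. A sketch; check_sysctl and the SYSCTL_ROOT override are hypothetical:

```bash
#!/usr/bin/env bash
# check_sysctl KEY=VALUE... : compare expected kernel parameters with their live values.
# SYSCTL_ROOT is a hypothetical override (default /proc/sys) so the check can be tried elsewhere.
check_sysctl() {
  local root=${SYSCTL_ROOT:-/proc/sys}
  local kv key want have rc=0
  for kv in "$@"; do
    key=${kv%%=*}
    want=${kv#*=}
    # net.ipv4.ip_forward -> $root/net/ipv4/ip_forward
    have=$(cat "$root/${key//.//}" 2>/dev/null) || have="<unset>"
    if [[ "$have" != "$want" ]]; then
      echo "$key: want $want, have $have" >&2
      rc=1
    fi
  done
  return "$rc"
}

# Usage:
#   check_sysctl net.bridge.bridge-nf-call-iptables=1 net.bridge.bridge-nf-call-ip6tables=1 net.ipv4.ip_forward=1
```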

cat > /etc/sysconfig/modules/ipvs.modules <<EOF

#!/bin/bash

modprobe -- ip_vs

modprobe -- ip_vs_rr

modprobe -- ip_vs_wrr

modprobe -- ip_vs_sh

modprobe -- nf_conntrack_ipv4

EOF

Load the IPVS modules:

chmod 755 /etc/sysconfig/modules/ipvs.modules && bash /etc/sysconfig/modules/ipvs.modules && lsmod | grep -e ip_vs -e nf_conntrack_ipv4
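The grep above only prints what is loaded. The sketch below instead names the modules that are missing, reading lsmod output on stdin (missing_modules is a hypothetical helper). On kernels 4.19 and later nf_conntrack_ipv4 has been merged into nf_conntrack, so check nf_conntrack there instead:

```bash
#!/usr/bin/env bash
# missing_modules MODULE... : read `lsmod` output on stdin and print each MODULE
# that is not loaded. Exit status is 1 if anything is missing.
missing_modules() {
  local loaded mod rc=0
  # The first column of lsmod is the module name; skip the "Module" header line.
  loaded=$(awk 'NR > 1 { print $1 }')
  for mod in "$@"; do
    if ! grep -qxF -- "$mod" <<< "$loaded"; then
      echo "$mod"
      rc=1
    fi
  done
  return "$rc"
}

# Usage:
#   lsmod | missing_modules ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh nf_conntrack_ipv4
```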

# Download these binaries in advance:

cfssl cfssl-certinfo cfssljson

etcd

docker

k8s

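Note: first create the certificates for both k8s1 and k8s2 on m1, then copy the k8s1 certificates to n1 and the k8s2 certificates to n2. Wherever the steps below show cd /root/k8s1 followed by cd /root/k8s2, run the commands that follow once in each of the two directories.

Create the directories: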

mkdir -p /root/k8s1/{certs,cfg,manifests,etcdCerts}

mkdir -p /root/k8s2/{certs,cfg,manifests,etcdCerts}
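The certificate steps that follow are each run once in /root/k8s1 and once in /root/k8s2. One way to express that without repeating every block is a loop that runs a command inside each cluster directory in a subshell. A sketch; make_cluster_dirs, for_each_cluster and the BASE override are hypothetical names:

```bash
#!/usr/bin/env bash
# Hypothetical helpers for the two-cluster layout on m1 (BASE defaults to /root).

# Create certs/, cfg/, manifests/ and etcdCerts/ under k8s1 and k8s2.
make_cluster_dirs() {
  local base=${BASE:-/root} c
  for c in k8s1 k8s2; do
    mkdir -p "$base/$c"/{certs,cfg,manifests,etcdCerts} || return
  done
}

# Run one command inside each cluster directory, in a subshell so the
# caller's working directory is left unchanged. Stops at the first failure.
for_each_cluster() {
  local base=${BASE:-/root} c
  for c in k8s1 k8s2; do
    (cd "$base/$c" && "$@") || return
  done
}
```

For example, for_each_cluster bash -c 'cfssl gencert -initca ./certs/ca-csr.json | cfssljson -bare ./certs/ca' would create the Kubernetes CA in both directories; the pipeline has to be wrapped in bash -c because the helper runs a single command.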

cd /root/k8s1

cd /root/k8s2

cat <<EOF > ./etcdCerts/ca-config.json

{

"signing":{

"default":{

"expiry":"87600h"

},

"profiles":{

"etcd":{

"expiry":"87600h",

"usages":[

"signing",

"key encipherment",

"server auth",

"client auth"

]

}

}

}

}

EOF

cat<<EOF > ./etcdCerts/ca-csr.json

{

"CN":"etcd",

"key":{

"algo":"rsa",

"size":2048

},

"names":[

{

"C":"CN",

"L":"BeiJing",

"ST":"BeiJing",

"O":"k8s",

"OU":"System"

}

]

}

EOF

cfssl gencert -initca ./etcdCerts/ca-csr.json | cfssljson -bare ./etcdCerts/ca

cd /root/k8s1

cd /root/k8s2

cat<<EOF > ./certs/ca-config.json

{

"signing":{

"default":{

"expiry":"87600h"

},

"profiles":{

"kubernetes":{

"expiry":"87600h",

"usages":[

"signing",

"key encipherment",

"server auth",

"client auth"

]

}

}

}

}

EOF

cat<<EOF > ./certs/ca-csr.json

{

"CN":"kubernetes",

"key":{

"algo":"rsa",

"size":2048

},

"names":[

{

"C":"CN",

"L":"BeiJing",

"ST":"BeiJing",

"O":"k8s",

"OU":"System"

}

]

}

EOF

cfssl gencert -initca ./certs/ca-csr.json | cfssljson -bare ./certs/ca

cd /root/k8s1

cd /root/k8s2

cat<<EOF >./etcdCerts/etcd-csr.json

{

"CN":"etcd",

"hosts":[

"127.0.0.1",

"*.etcd"

],

"key":{

"algo":"rsa",

"size":2048

},

"names":[

{

"C":"CN",

"L":"BeiJing",

"ST":"BeiJing",

"O":"k8s",

"OU":"System"

}

]

}

EOF

cfssl gencert -ca=./etcdCerts/ca.pem -ca-key=./etcdCerts/ca-key.pem -config=./etcdCerts/ca-config.json -profile=etcd ./etcdCerts/etcd-csr.json | cfssljson -bare ./etcdCerts/etcd

cp ./etcdCerts/etcd-csr.json ./etcdCerts/peer-etcd-csr.json

cfssl gencert -ca=./etcdCerts/ca.pem -ca-key=./etcdCerts/ca-key.pem -config=./etcdCerts/ca-config.json -profile=etcd ./etcdCerts/peer-etcd-csr.json | cfssljson -bare ./etcdCerts/peer-etcd

cd /root/k8s1

cd /root/k8s2

cat<<EOF > ./certs/apiserver-csr.json

{

"CN":"kubernetes",

"hosts":[

"127.0.0.1",

"*.apiserver",

"11.254.0.1",

"kubernetes",

"kubernetes.default",

"kubernetes.default.svc",

"kubernetes.default.svc.cluster",

"kubernetes.default.svc.cluster.local"

],

"key":{

"algo":"rsa",

"size":2048

},

"names":[

{

"C":"CN",

"L":"BeiJing",

"ST":"BeiJing",

"O":"k8s",

"OU":"System"

}

]

}

EOF

cfssl gencert -ca=./certs/ca.pem -ca-key=./certs/ca-key.pem -config=./certs/ca-config.json -profile=kubernetes ./certs/apiserver-csr.json | cfssljson -bare ./certs/apiserver
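The etcd and apiserver certificates rely on wildcard SANs (*.etcd, *.apiserver) instead of listing m1.etcd and m1.apiserver. Under the usual TLS host-name rule (RFC 6125), a wildcard stands for exactly one left-most label, so *.apiserver covers m1.apiserver but neither apiserver nor a.b.apiserver. A small sketch of that rule, not the exact matching code of any TLS library; wildcard_matches is a hypothetical name:

```bash
#!/usr/bin/env bash
# wildcard_matches PATTERN HOST : succeed if a certificate SAN PATTERN covers HOST.
# "*" may only be the whole left-most label and matches exactly one label.
wildcard_matches() {
  local pattern=${1,,} host=${2,,}   # DNS names compare case-insensitively
  if [[ "$pattern" != '*.'* ]]; then
    [[ "$pattern" == "$host" ]]      # plain name: exact match
    return
  fi
  local suffix=${pattern#\*}         # "*.apiserver" -> ".apiserver"
  [[ "$host" == *"$suffix" ]] || return 1
  local label=${host%"$suffix"}      # "m1.apiserver" -> "m1"
  [[ -n "$label" && "$label" != *.* ]]
}
```

To see which names a generated certificate actually carries: openssl x509 -in ./certs/apiserver.pem -noout -text | grep -A1 'Subject Alternative Name'.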

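# kubectl certificate: kubectl acts as the cluster administrator, so the certificate is named admin

# admin certificate request: kubectl only needs a client certificate, so the hosts field can be empty; O=system:masters places it in the group that is bound to cluster-admin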

cd /root/k8s1

cd /root/k8s2

cat<<EOF > ./certs/admin-csr.json

{

"CN":"admin",

"hosts":[],

"key":{

"algo":"rsa",

"size":2048

},

"names":[

{

"C":"CN",

"L":"BeiJing",

"ST":"BeiJing",

"O":"system:masters",

"OU":"System"

}

]

}

EOF

# Sign the admin certificate with the root CA (ca.pem)

cfssl gencert -ca=./certs/ca.pem -ca-key=./certs/ca-key.pem -config=./certs/ca-config.json -profile=kubernetes ./certs/admin-csr.json | cfssljson -bare ./certs/admin

cd /root/k8s1

cd /root/k8s2

cat<<EOF > ./certs/apiserver-kubelet-client-csr.json

{

"CN":"kube-apiserver-kubelet-client",

"hosts":[],

"key":{

"algo":"rsa",

"size":2048

},

"names":[

{

"C":"CN",

"L":"BeiJing",

"ST":"BeiJing",

"O":"system:masters",

"OU":"System"

}

]

}

EOF

cfssl gencert -ca=./certs/ca.pem -ca-key=./certs/ca-key.pem -config=./certs/ca-config.json -profile=kubernetes ./certs/apiserver-kubelet-client-csr.json | cfssljson -bare ./certs/apiserver-kubelet-client

cd /root/k8s1

cd /root/k8s2

cat<<EOF > ./certs/kube-proxy-csr.json

{

"CN":"system:kube-proxy",

"hosts":[],

"key":{

"algo":"rsa",

"size":2048

},

"names":[

{

"C":"CN",

"L":"BeiJing",

"ST":"BeiJing",

"O":"k8s",

"OU":"System"

}

]

}

EOF

cfssl gencert -ca=./certs/ca.pem -ca-key=./certs/ca-key.pem -config=./certs/ca-config.json -profile=kubernetes ./certs/kube-proxy-csr.json | cfssljson -bare ./certs/kube-proxy

cd /root/k8s1

cd /root/k8s2

cat<<EOF > ./certs/proxy-client-ca-csr.json

{

"CN":"front-proxy",

"key":{

"algo":"rsa",

"size":2048

},

"names":[

{

"C":"CN",

"L":"BeiJing",

"ST":"BeiJing",

"O":"k8s",

"OU":"System"

}

]

}

EOF

cfssl gencert -initca ./certs/proxy-client-ca-csr.json | cfssljson -bare ./certs/proxy-client-ca -

cd /root/k8s1

cd /root/k8s2

cat<<EOF > ./certs/proxy-client-csr.json

{

"CN":"front-proxy",

"hosts":[],

"key":{

"algo":"rsa",

"size":2048

},

"names":[

{

"C":"CN",

"L":"BeiJing",

"ST":"BeiJing",

"O":"k8s",

"OU":"System"

}

]

}

EOF

cfssl gencert -ca=./certs/proxy-client-ca.pem -ca-key=./certs/proxy-client-ca-key.pem -config=./certs/ca-config.json -profile=kubernetes ./certs/proxy-client-csr.json | cfssljson -bare ./certs/proxy-client

cd /root/k8s1

cd /root/k8s2

cat<<EOF > ./certs/kube-controller-manager-csr.json

{

"CN":"system:kube-controller-manager",

"hosts":[

"127.0.0.1",

"*.controller",

"11.254.0.1"

],

"key":{

"algo":"rsa",

"size":2048

},

"names":[

{

"C":"CN",

"L":"BeiJing",

"ST":"BeiJing",

"O":"k8s",

"OU":"System"

}

]

}

EOF

cfssl gencert -ca=./certs/ca.pem -ca-key=./certs/ca-key.pem -config=./certs/ca-config.json -profile=kubernetes ./certs/kube-controller-manager-csr.json | cfssljson -bare ./certs/kube-controller-manager

cd /root/k8s1

cd /root/k8s2

cat<<EOF > ./certs/kube-scheduler-csr.json

{

"CN":"system:kube-scheduler",

"hosts":[

"127.0.0.1",

"*.scheduler",

"11.254.0.1"

],

"key":{

"algo":"rsa",

"size":2048

},

"names":[

{

"C":"CN",

"L":"BeiJing",

"ST":"BeiJing",

"O":"k8s",

"OU":"System"

}

]

}

EOF

cfssl gencert -ca=./certs/ca.pem -ca-key=./certs/ca-key.pem -config=./certs/ca-config.json -profile=kubernetes ./certs/kube-scheduler-csr.json | cfssljson -bare ./certs/kube-scheduler

cd /root/k8s1

cd /root/k8s2

cat<<EOF > ./certs/sa-csr.json

{

"CN":"sa",

"key":{

"algo":"rsa",

"size":2048

},

"names":[

{

"C":"CN",

"L":"BeiJing",

"ST":"BeiJing",

"O":"k8s",

"OU":"System"

}

]

}

EOF

cfssl gencert -initca ./certs/sa-csr.json | cfssljson -bare ./certs/sa -

openssl x509 -in ./certs/sa.pem -pubkey -noout > ./certs/sa.pub

# Distribute the certificates to the other nodes (the target directories must already exist on n1 and n2)

scp -r /root/k8s1/certs root@192.168.11.188:/root/k8s1/

scp -r /root/k8s1/etcdCerts root@192.168.11.188:/root/k8s1/

scp -r /root/k8s2/certs root@192.168.11.189:/root/k8s2/

scp -r /root/k8s2/etcdCerts root@192.168.11.189:/root/k8s2/
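After copying, it is worth checking that each private key still belongs to its certificate. A sketch that compares the public key inside the certificate (openssl x509 -pubkey, as already used for sa.pub) with the one derived from the key (openssl pkey -pubout); key_matches_cert and check_cert_dir are hypothetical, and the name.pem / name-key.pem pairing follows the cfssljson output names used here:

```bash
#!/usr/bin/env bash
# key_matches_cert CERT KEY : succeed if private key KEY belongs to certificate CERT.
key_matches_cert() {
  local cert_pub key_pub
  cert_pub=$(openssl x509 -in "$1" -noout -pubkey) || return 2
  key_pub=$(openssl pkey -in "$2" -pubout) || return 2
  [[ "$cert_pub" == "$key_pub" ]]
}

# check_cert_dir [DIR] : check every NAME.pem / NAME-key.pem pair in DIR (default ./certs).
check_cert_dir() {
  local dir=${1:-./certs} cert key rc=0
  for key in "$dir"/*-key.pem; do
    [[ -e "$key" ]] || continue
    cert=${key%-key.pem}.pem
    [[ -f "$cert" ]] || continue
    if key_matches_cert "$cert" "$key"; then
      echo "ok       ${cert##*/}"
    else
      echo "MISMATCH ${cert##*/}"
      rc=1
    fi
  done
  return "$rc"
}

# Usage on n1:  check_cert_dir /root/k8s1/certs && check_cert_dir /root/k8s1/etcdCerts
```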

cat<<EOF > /usr/lib/systemd/system/etcd1.service

[Unit]

Description=Kubernetes etcd

[Service]

EnvironmentFile=/root/k8s1/cfg/etcd

ExecStart=/usr/local/bin/etcd \$ETCD_OPTS

Type=notify

Restart=on-failure

StartLimitInterval=0

RestartSec=10

KillMode=process

[Install]

WantedBy=multi-user.target

EOF

# For a multi-member cluster, set initial-cluster to the full member list and change the name and addresses on each member

cat<<EOF > /root/k8s1/cfg/etcd

ETCD_OPTS="--name=etcd-1 \\

--listen-peer-urls=https://192.168.11.187:2380 \\

--initial-advertise-peer-urls=https://m1.etcd:2380 \\

--listen-client-urls=https://192.168.11.187:2379 \\

--advertise-client-urls=https://192.168.11.187:2379 \\

--initial-cluster=etcd-1=https://m1.etcd:2380 \\

--cert-file=/root/k8s1/etcdCerts/etcd.pem \\

--key-file=/root/k8s1/etcdCerts/etcd-key.pem \\

--peer-cert-file=/root/k8s1/etcdCerts/peer-etcd.pem \\

--peer-key-file=/root/k8s1/etcdCerts/peer-etcd-key.pem \\

--trusted-ca-file=/root/k8s1/etcdCerts/ca.pem \\

--peer-trusted-ca-file=/root/k8s1/etcdCerts/ca.pem \\

--initial-cluster-token=etcd-cluster \\

--initial-cluster-state=new \\

--data-dir=/var/lib/etcd1/data/data \\

--wal-dir=/var/lib/etcd1/wal/wal \\

--max-wals=5 \\

--max-request-bytes=1572864 \\

--snapshot-count=100000 \\

--heartbeat-interval=100 \\

--election-timeout=500 \\

--max-snapshots=5 \\

--quota-backend-bytes=8589934592 \\

--auto-compaction-retention=5m \\

--enable-pprof=false \\

--metrics=extensive \\

--log-level=info"

EOF

systemctl stop etcd1 && systemctl daemon-reload && systemctl enable etcd1 && systemctl start etcd1 && systemctl status etcd1

# Common commands

ETCDCTL_API=3 /usr/local/bin/etcdctl --cacert=/root/k8s1/etcdCerts/ca.pem --cert=/root/k8s1/etcdCerts/etcd.pem --key=/root/k8s1/etcdCerts/etcd-key.pem --endpoints="https://m1.etcd:2379" endpoint health --write-out=table

ETCDCTL_API=3 /usr/local/bin/etcdctl --cacert=/root/k8s1/etcdCerts/ca.pem --cert=/root/k8s1/etcdCerts/etcd.pem --key=/root/k8s1/etcdCerts/etcd-key.pem --endpoints="https://m1.etcd:2379" member list -w table

ETCDCTL_API=3 /usr/local/bin/etcdctl --cacert=/root/k8s1/etcdCerts/ca.pem --cert=/root/k8s1/etcdCerts/etcd.pem --key=/root/k8s1/etcdCerts/etcd-key.pem --endpoints="https://m1.etcd:2379" get / --prefix --keys-only

# curl -k https://m1.etcd:2379/version --cacert /root/k8s1/etcdCerts/ca.pem --key /root/k8s1/etcdCerts/etcd-key.pem --cert /root/k8s1/etcdCerts/etcd.pem

{"etcdserver":"3.4.17","etcdcluster":"3.4.0"}

lsof -i:2379

lsof -i:2380

netstat -nltp | grep 2379

netstat -nltp | grep 2380

# Test a write and a read

ETCDCTL_API=3 /usr/local/bin/etcdctl --cacert=/root/k8s1/etcdCerts/ca.pem --cert=/root/k8s1/etcdCerts/etcd.pem --key=/root/k8s1/etcdCerts/etcd-key.pem --endpoints="https://m1.etcd:2379" put 11111 22222

ETCDCTL_API=3 /usr/local/bin/etcdctl --cacert=/root/k8s1/etcdCerts/ca.pem --cert=/root/k8s1/etcdCerts/etcd.pem --key=/root/k8s1/etcdCerts/etcd-key.pem --endpoints="https://m1.etcd:2379" get 11111
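The etcdctl commands above repeat the same TLS flags, and the k8s2 etcd differs only in its directory and client port (23799). A thin wrapper, assuming the paths and ports of this document; etcdctl_for and the ETCDCTL override are hypothetical:

```bash
#!/usr/bin/env bash
# etcdctl_for CLUSTER ARGS... : run etcdctl against the k8s1 (1) or k8s2 (2) etcd on m1.
# ETCDCTL is a hypothetical override for the binary path.
etcdctl_for() {
  local cluster=$1
  shift
  local port
  case "$cluster" in
    1) port=2379 ;;
    2) port=23799 ;;
    *) echo "unknown cluster: $cluster" >&2; return 2 ;;
  esac
  local dir="/root/k8s${cluster}/etcdCerts"
  ETCDCTL_API=3 "${ETCDCTL:-/usr/local/bin/etcdctl}" \
    --cacert="$dir/ca.pem" --cert="$dir/etcd.pem" --key="$dir/etcd-key.pem" \
    --endpoints="https://m1.etcd:${port}" "$@"
}
```

Usage: etcdctl_for 1 endpoint health -w table, etcdctl_for 2 member list -w table, or etcdctl_for 2 get 11111.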

cat<<EOF > /usr/lib/systemd/system/etcd2.service

[Unit]

Description=Kubernetes etcd

[Service]

EnvironmentFile=/root/k8s2/cfg/etcd

ExecStart=/usr/local/bin/etcd \$ETCD_OPTS

Type=notify

Restart=on-failure

StartLimitInterval=0

RestartSec=10

KillMode=process

[Install]

WantedBy=multi-user.target

EOF

# For a multi-member cluster, set initial-cluster to the full member list and change the name and addresses on each member

cat<<EOF > /root/k8s2/cfg/etcd

ETCD_OPTS="--name=etcd-2 \\

--listen-peer-urls=https://192.168.11.187:23800 \\

--initial-advertise-peer-urls=https://m1.etcd:23800 \\

--listen-client-urls=https://192.168.11.187:23799 \\

--advertise-client-urls=https://192.168.11.187:23799 \\

--initial-cluster=etcd-2=https://m1.etcd:23800 \\

--cert-file=/root/k8s2/etcdCerts/etcd.pem \\

--key-file=/root/k8s2/etcdCerts/etcd-key.pem \\

--peer-cert-file=/root/k8s2/etcdCerts/peer-etcd.pem \\

--peer-key-file=/root/k8s2/etcdCerts/peer-etcd-key.pem \\

--trusted-ca-file=/root/k8s2/etcdCerts/ca.pem \\

--peer-trusted-ca-file=/root/k8s2/etcdCerts/ca.pem \\

--initial-cluster-token=etcd-cluster \\

--initial-cluster-state=new \\

--data-dir=/var/lib/etcd2/data/data \\

--wal-dir=/var/lib/etcd2/wal/wal \\

--max-wals=5 \\

--max-request-bytes=1572864 \\

--snapshot-count=100000 \\

--heartbeat-interval=100 \\

--election-timeout=500 \\

--max-snapshots=5 \\

--quota-backend-bytes=8589934592 \\

--auto-compaction-retention=5m \\

--enable-pprof=false \\

--metrics=extensive \\

--log-level=info"

EOF

systemctl stop etcd2 && systemctl daemon-reload && systemctl enable etcd2 && systemctl start etcd2 && systemctl status etcd2

# Common commands

ETCDCTL_API=3 /usr/local/bin/etcdctl --cacert=/root/k8s2/etcdCerts/ca.pem --cert=/root/k8s2/etcdCerts/etcd.pem --key=/root/k8s2/etcdCerts/etcd-key.pem --endpoints="https://m1.etcd:23799" endpoint health --write-out=table
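Both etcd members run on m1, so their listen ports (2379/2380 for k8s1, 23799/23800 for k8s2) and data directories must not collide. A sketch that extracts the ports from the --listen-client-urls and --listen-peer-urls flags of two option files and prints any port they share; the function names are hypothetical:

```bash
#!/usr/bin/env bash
# etcd_listen_ports FILE : print the ports used in the --listen-client-urls and
# --listen-peer-urls flags of an etcd options file (sorted, one per line).
etcd_listen_ports() {
  grep -oE -- '--listen-(client|peer)-urls=[^ \\"]+' "$1" \
    | grep -oE ':[0-9]+' | tr -d ':' | sort -u
}

# shared_etcd_ports FILE1 FILE2 : print every port present in both files.
# Empty output means the two members do not clash.
shared_etcd_ports() {
  comm -12 <(etcd_listen_ports "$1") <(etcd_listen_ports "$2")
}
```

On m1, shared_etcd_ports /root/k8s1/cfg/etcd /root/k8s2/cfg/etcd should print nothing.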

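Run the following on all nodes. Docker is installed from the static binary package here; installing it with yum works just as well.

(1) Download the binary package

Download URL: https://download.docker.com/linux/static/stable/x86_64/docker-19.03.9.tgz

# Download from the Internet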

wget https://download.docker.com/linux/static/stable/x86_64/docker-19.03.9.tgz

tar zxvf docker-19.03.9.tgz

mv docker/* /usr/bin

(2) Manage docker with systemd

cat > /usr/lib/systemd/system/docker.service << EOF

[Unit]

Description=Docker Application Container Engine

Documentation=https://docs.docker.com

After=network-online.target firewalld.service

Wants=network-online.target

[Service]

Type=notify

ExecStart=/usr/bin/dockerd

ExecReload=/bin/kill -s HUP \$MAINPID

LimitNOFILE=infinity

LimitNPROC=infinity

LimitCORE=infinity

TimeoutStartSec=0

Delegate=yes

KillMode=process

Restart=on-failure

StartLimitBurst=3

StartLimitInterval=60s

[Install]

WantedBy=multi-user.target

EOF

(3) Create the configuration file

mkdir -p /etc/docker

cat > /etc/docker/daemon.json << EOF

{

"registry-mirrors": ["https://b9pmyelo.mirror.aliyuncs.com"]

}

EOF

registry-mirrors: the Aliyun registry mirror, used to speed up image pulls

(4) Start docker and enable it at boot

# Start docker

systemctl stop docker

systemctl daemon-reload

systemctl enable docker

systemctl start docker

systemctl status docker

# Install kube-apiserver for k8s1

APISERVER="https://m1.apiserver:6443"

# kubeconfig for kubectl

cat<<EOF > /root/k8s1/cfg/admin.kubeconfig

apiVersion: v1
clusters:
- cluster:
    certificate-authority: /root/k8s1/certs/ca.pem
    server: ${APISERVER}
  name: kubernetes
contexts:
- context:
    cluster: kubernetes
    user: system:admin
  name: system:admin
current-context: system:admin
kind: Config
preferences: {}
users:
- name: system:admin
  user:
    client-certificate: /root/k8s1/certs/admin.pem
    client-key: /root/k8s1/certs/admin-key.pem

EOF

mkdir -p ~/.kube && cp /root/k8s1/cfg/admin.kubeconfig ~/.kube/config
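The kubeconfig files in this document (admin, kube-controller-manager, kube-scheduler and kube-proxy, for both clusters) differ only in server, CA, user name and certificate paths. A hypothetical helper that writes the same layout from parameters; kubectl config set-cluster, set-credentials, set-context and use-context are the standard way to build such a file if you prefer not to template it by hand:

```bash
#!/usr/bin/env bash
# write_kubeconfig OUT SERVER CA USER CERT KEY
# Hypothetical helper: one cluster named "kubernetes", one context and one user named USER,
# authenticating with a client certificate, as in the kubeconfigs of this document.
write_kubeconfig() {
  local out=$1 server=$2 ca=$3 user=$4 cert=$5 key=$6
  cat > "$out" <<EOF
apiVersion: v1
kind: Config
clusters:
- cluster:
    certificate-authority: ${ca}
    server: ${server}
  name: kubernetes
contexts:
- context:
    cluster: kubernetes
    user: ${user}
  name: ${user}
current-context: ${user}
preferences: {}
users:
- name: ${user}
  user:
    client-certificate: ${cert}
    client-key: ${key}
EOF
}

# Usage (k8s2 scheduler):
#   write_kubeconfig /root/k8s2/cfg/kube-scheduler.kubeconfig https://m1.apiserver:16443 \
#     /root/k8s2/certs/ca.pem system:kube-scheduler \
#     /root/k8s2/certs/kube-scheduler.pem /root/k8s2/certs/kube-scheduler-key.pem
```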

cat<<EOF > /usr/lib/systemd/system/kube-apiserver1.service

[Unit]

Description=Kubernetes kube-apiserver

[Service]

EnvironmentFile=/root/k8s1/cfg/kube-apiserver

ExecStart=/usr/local/bin/kube-apiserver \$KUBE_APISERVER_OPTS

Restart=on-failure

StartLimitInterval=0

RestartSec=10

KillMode=process

[Install]

WantedBy=multi-user.target

EOF

cat<<EOF > /root/k8s1/cfg/kube-apiserver

KUBE_APISERVER_OPTS="--advertise-address=192.168.11.187 \\

--anonymous-auth=false \\

--insecure-port=0 \\

--secure-port=6443 \\

--service-cluster-ip-range=11.254.0.0/16 \\

--kubelet-https=true \\

--tls-cert-file=/root/k8s1/certs/apiserver.pem \\

--tls-private-key-file=/root/k8s1/certs/apiserver-key.pem \\

--client-ca-file=/root/k8s1/certs/ca.pem \\

--kubelet-client-certificate=/root/k8s1/certs/apiserver-kubelet-client.pem \\

--kubelet-client-key=/root/k8s1/certs/apiserver-kubelet-client-key.pem \\

--kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname \\

--etcd-cafile=/root/k8s1/etcdCerts/ca.pem \\

--etcd-certfile=/root/k8s1/etcdCerts/etcd.pem \\

--etcd-keyfile=/root/k8s1/etcdCerts/etcd-key.pem \\

--etcd-servers=https://m1.etcd:2379 \\

--apiserver-count=1 \\

--logtostderr=true \\

--v=5 \\

--allow-privileged=true \\

--enable-admission-plugins=NamespaceLifecycle,LimitRanger,ServiceAccount,ResourceQuota,NodeRestriction,DefaultStorageClass,DefaultTolerationSeconds,MutatingAdmissionWebhook,ValidatingAdmissionWebhook,Priority \\

--authorization-mode=RBAC,Node \\

--enable-bootstrap-token-auth \\

--service-node-port-range=443-50000 \\

--requestheader-client-ca-file=/root/k8s1/certs/proxy-client-ca.pem \\

--requestheader-allowed-names=front-proxy \\

--requestheader-extra-headers-prefix=X-Remote-Extra- \\

--requestheader-group-headers=X-Remote-Group \\

--requestheader-username-headers=X-Remote-User \\

--proxy-client-cert-file=/root/k8s1/certs/proxy-client.pem \\

--proxy-client-key-file=/root/k8s1/certs/proxy-client-key.pem \\

--service-account-key-file=/root/k8s1/certs/sa.pub \\

--service-account-signing-key-file=/root/k8s1/certs/sa-key.pem \\

--service-account-issuer=api \\

--enable-aggregator-routing=true"

EOF

systemctl stop kube-apiserver1 && systemctl daemon-reload && systemctl enable kube-apiserver1 && systemctl start kube-apiserver1 && systemctl status kube-apiserver1

# Install kube-apiserver for k8s2

APISERVER="https://m1.apiserver:16443"

# kubeconfig for kubectl

cat<<EOF > /root/k8s2/cfg/admin.kubeconfig

apiVersion: v1
clusters:
- cluster:
    certificate-authority: /root/k8s2/certs/ca.pem
    server: ${APISERVER}
  name: kubernetes
contexts:
- context:
    cluster: kubernetes
    user: system:admin
  name: system:admin
current-context: system:admin
kind: Config
preferences: {}
users:
- name: system:admin
  user:
    client-certificate: /root/k8s2/certs/admin.pem
    client-key: /root/k8s2/certs/admin-key.pem

EOF

cat<<EOF > /usr/lib/systemd/system/kube-apiserver2.service

[Unit]

Description=Kubernetes kube-apiserver

[Service]

EnvironmentFile=/root/k8s2/cfg/kube-apiserver

ExecStart=/usr/local/bin/kube-apiserver \$KUBE_APISERVER_OPTS

Restart=on-failure

StartLimitInterval=0

RestartSec=10

KillMode=process

[Install]

WantedBy=multi-user.target

EOF

cat<<EOF > /root/k8s2/cfg/kube-apiserver

KUBE_APISERVER_OPTS="--advertise-address=192.168.11.187 \\

--anonymous-auth=false \\

--insecure-port=0 \\

--secure-port=16443 \\

--service-cluster-ip-range=11.254.0.0/16 \\

--kubelet-https=true \\

--tls-cert-file=/root/k8s2/certs/apiserver.pem \\

--tls-private-key-file=/root/k8s2/certs/apiserver-key.pem \\

--client-ca-file=/root/k8s2/certs/ca.pem \\

--kubelet-client-certificate=/root/k8s2/certs/apiserver-kubelet-client.pem \\

--kubelet-client-key=/root/k8s2/certs/apiserver-kubelet-client-key.pem \\

--kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname \\

--etcd-cafile=/root/k8s2/etcdCerts/ca.pem \\

--etcd-certfile=/root/k8s2/etcdCerts/etcd.pem \\

--etcd-keyfile=/root/k8s2/etcdCerts/etcd-key.pem \\

--etcd-servers=https://m1.etcd:23799 \\

--apiserver-count=1 \\

--logtostderr=true \\

--v=5 \\

--allow-privileged=true \\

--enable-admission-plugins=NamespaceLifecycle,LimitRanger,ServiceAccount,ResourceQuota,NodeRestriction,DefaultStorageClass,DefaultTolerationSeconds,MutatingAdmissionWebhook,ValidatingAdmissionWebhook,Priority \\

--authorization-mode=RBAC,Node \\

--enable-bootstrap-token-auth \\

--service-node-port-range=443-50000 \\

--requestheader-client-ca-file=/root/k8s2/certs/proxy-client-ca.pem \\

--requestheader-allowed-names=front-proxy \\

--requestheader-extra-headers-prefix=X-Remote-Extra- \\

--requestheader-group-headers=X-Remote-Group \\

--requestheader-username-headers=X-Remote-User \\

--proxy-client-cert-file=/root/k8s2/certs/proxy-client.pem \\

--proxy-client-key-file=/root/k8s2/certs/proxy-client-key.pem \\

--service-account-key-file=/root/k8s2/certs/sa.pub \\

--service-account-signing-key-file=/root/k8s2/certs/sa-key.pem \\

--service-account-issuer=api \\

--enable-aggregator-routing=true"

EOF

systemctl stop kube-apiserver2 && systemctl daemon-reload && systemctl enable kube-apiserver2 && systemctl start kube-apiserver2 && systemctl status kube-apiserver2

APISERVER="https://m1.apiserver:6443"

cat<<EOF > /root/k8s1/cfg/kube-controller-manager.kubeconfig

apiVersion: v1
clusters:
- cluster:
    certificate-authority: /root/k8s1/certs/ca.pem
    server: ${APISERVER}
  name: kubernetes
contexts:
- context:
    cluster: kubernetes
    user: system:kube-controller-manager
  name: system:kube-controller-manager
current-context: system:kube-controller-manager
kind: Config
preferences: {}
users:
- name: system:kube-controller-manager
  user:
    client-certificate: /root/k8s1/certs/kube-controller-manager.pem
    client-key: /root/k8s1/certs/kube-controller-manager-key.pem

EOF

cat<<EOF > /usr/lib/systemd/system/kube-controller-manager1.service

[Unit]

Description=Kubernetes kube-controller-manager

[Service]

EnvironmentFile=/root/k8s1/cfg/kube-controller-manager

ExecStart=/usr/local/bin/kube-controller-manager \$KUBE_CONTROLLER_MANAGER_OPTS

Restart=on-failure

StartLimitInterval=0

RestartSec=10

KillMode=process

[Install]

WantedBy=multi-user.target

EOF

cat<<EOF > /root/k8s1/cfg/kube-controller-manager

KUBE_CONTROLLER_MANAGER_OPTS="--master=${APISERVER} \\

--port=10252 \\

--secure-port=10257 \\

--controllers=*,bootstrapsigner,tokencleaner \\

--use-service-account-credentials=true \\

--kubeconfig=/root/k8s1/cfg/kube-controller-manager.kubeconfig \\

--authentication-kubeconfig=/root/k8s1/cfg/kube-controller-manager.kubeconfig \\

--authorization-kubeconfig=/root/k8s1/cfg/kube-controller-manager.kubeconfig \\

--allocate-node-cidrs \\

--service-cluster-ip-range=11.254.0.0/16 \\

--cluster-cidr=172.248.0.0/16 \\

--cluster-signing-cert-file=/root/k8s1/certs/ca.pem \\

--cluster-signing-key-file=/root/k8s1/certs/ca-key.pem \\

--root-ca-file=/root/k8s1/certs/ca.pem \\

--requestheader-client-ca-file=/root/k8s1/certs/proxy-client-ca.pem \\

--service-account-private-key-file=/root/k8s1/certs/sa-key.pem \\

--logtostderr=true \\

--v=4 \\

--leader-elect=true \\

--address=127.0.0.1 \\

--cluster-name=kubernetes"

EOF

systemctl stop kube-controller-manager1 && systemctl daemon-reload && systemctl enable kube-controller-manager1 && systemctl start kube-controller-manager1 && systemctl status kube-controller-manager1

journalctl -xefu kube-controller-manager1

APISERVER="https://m1.apiserver:16443"

cat<<EOF > /root/k8s2/cfg/kube-controller-manager.kubeconfig

apiVersion: v1
clusters:
- cluster:
    certificate-authority: /root/k8s2/certs/ca.pem
    server: ${APISERVER}
  name: kubernetes
contexts:
- context:
    cluster: kubernetes
    user: system:kube-controller-manager
  name: system:kube-controller-manager
current-context: system:kube-controller-manager
kind: Config
preferences: {}
users:
- name: system:kube-controller-manager
  user:
    client-certificate: /root/k8s2/certs/kube-controller-manager.pem
    client-key: /root/k8s2/certs/kube-controller-manager-key.pem

EOF

cat<<EOF > /usr/lib/systemd/system/kube-controller-manager2.service

[Unit]

Description=Kubernetes kube-controller-manager

[Service]

EnvironmentFile=/root/k8s2/cfg/kube-controller-manager

ExecStart=/usr/local/bin/kube-controller-manager \$KUBE_CONTROLLER_MANAGER_OPTS

Restart=on-failure

StartLimitInterval=0

RestartSec=10

KillMode=process

[Install]

WantedBy=multi-user.target

EOF

cat<<EOF > /root/k8s2/cfg/kube-controller-manager

KUBE_CONTROLLER_MANAGER_OPTS="--master=${APISERVER} \\

--port=11252 \\

--secure-port=11257 \\

--controllers=*,bootstrapsigner,tokencleaner \\

--use-service-account-credentials=true \\

--kubeconfig=/root/k8s2/cfg/kube-controller-manager.kubeconfig \\

--authentication-kubeconfig=/root/k8s2/cfg/kube-controller-manager.kubeconfig \\

--authorization-kubeconfig=/root/k8s2/cfg/kube-controller-manager.kubeconfig \\

--allocate-node-cidrs \\

--service-cluster-ip-range=11.254.0.0/16 \\

--cluster-cidr=172.248.0.0/16 \\

--cluster-signing-cert-file=/root/k8s2/certs/ca.pem \\

--cluster-signing-key-file=/root/k8s2/certs/ca-key.pem \\

--root-ca-file=/root/k8s2/certs/ca.pem \\

--requestheader-client-ca-file=/root/k8s2/certs/proxy-client-ca.pem \\

--service-account-private-key-file=/root/k8s2/certs/sa-key.pem \\

--logtostderr=true \\

--v=4 \\

--leader-elect=true \\

--address=127.0.0.1 \\

--cluster-name=kubernetes"

EOF

systemctl stop kube-controller-manager2 && systemctl daemon-reload && systemctl enable kube-controller-manager2 && systemctl start kube-controller-manager2 && systemctl status kube-controller-manager2

journalctl -xefu kube-controller-manager2

APISERVER="https://m1.apiserver:6443"

cat<<EOF > /root/k8s1/cfg/kube-scheduler.kubeconfig

apiVersion: v1
clusters:
- cluster:
    certificate-authority: /root/k8s1/certs/ca.pem
    server: ${APISERVER}
  name: kubernetes
contexts:
- context:
    cluster: kubernetes
    user: system:kube-scheduler
  name: system:kube-scheduler
current-context: system:kube-scheduler
kind: Config
preferences: {}
users:
- name: system:kube-scheduler
  user:
    client-certificate: /root/k8s1/certs/kube-scheduler.pem
    client-key: /root/k8s1/certs/kube-scheduler-key.pem

EOF

cat<<EOF > /usr/lib/systemd/system/kube-scheduler1.service

[Unit]

Description=Kubernetes kube-scheduler

[Service]

EnvironmentFile=/root/k8s1/cfg/kube-scheduler

ExecStart=/usr/local/bin/kube-scheduler \$KUBE_SCHEDULER_OPTS

Restart=on-failure

StartLimitInterval=0

RestartSec=10

KillMode=process

[Install]

WantedBy=multi-user.target

EOF

cat<<EOF > /root/k8s1/cfg/kube-scheduler

KUBE_SCHEDULER_OPTS="--master=${APISERVER} \\

--port=10251 \\

--secure-port=10259 \\

--kubeconfig=/root/k8s1/cfg/kube-scheduler.kubeconfig \\

--authentication-kubeconfig=/root/k8s1/cfg/kube-scheduler.kubeconfig \\

--authorization-kubeconfig=/root/k8s1/cfg/kube-scheduler.kubeconfig \\

--logtostderr=true \\

--v=4 \\

--leader-elect"

EOF

systemctl stop kube-scheduler1 && systemctl daemon-reload && systemctl enable kube-scheduler1 && systemctl start kube-scheduler1 && systemctl status kube-scheduler1

APISERVER="https://m1.apiserver:16443"

cat<<EOF > /root/k8s2/cfg/kube-scheduler.kubeconfig

apiVersion: v1
clusters:
- cluster:
    certificate-authority: /root/k8s2/certs/ca.pem
    server: ${APISERVER}
  name: kubernetes
contexts:
- context:
    cluster: kubernetes
    user: system:kube-scheduler
  name: system:kube-scheduler
current-context: system:kube-scheduler
kind: Config
preferences: {}
users:
- name: system:kube-scheduler
  user:
    client-certificate: /root/k8s2/certs/kube-scheduler.pem
    client-key: /root/k8s2/certs/kube-scheduler-key.pem

EOF

cat<<EOF > /usr/lib/systemd/system/kube-scheduler2.service

[Unit]

Description=Kubernetes kube-scheduler

[Service]

EnvironmentFile=/root/k8s2/cfg/kube-scheduler

ExecStart=/usr/local/bin/kube-scheduler \$KUBE_SCHEDULER_OPTS

Restart=on-failure

StartLimitInterval=0

RestartSec=10

KillMode=process

[Install]

WantedBy=multi-user.target

EOF

cat<<EOF > /root/k8s2/cfg/kube-scheduler

KUBE_SCHEDULER_OPTS="--master=${APISERVER} \\

--port=11251 \\

--secure-port=11259 \\

--kubeconfig=/root/k8s2/cfg/kube-scheduler.kubeconfig \\

--authentication-kubeconfig=/root/k8s2/cfg/kube-scheduler.kubeconfig \\

--authorization-kubeconfig=/root/k8s2/cfg/kube-scheduler.kubeconfig \\

--logtostderr=true \\

--v=4 \\

--leader-elect"

EOF

systemctl stop kube-scheduler2 && systemctl daemon-reload && systemctl enable kube-scheduler2 && systemctl start kube-scheduler2 && systemctl status kube-scheduler2

cd /root/k8s1

cd /root/k8s2

cat<<EOF > ./cfg/secret

apiVersion: v1
kind: Secret
metadata:
  name: bootstrap-token-8edc64
  namespace: kube-system
type: bootstrap.kubernetes.io/token
stringData:
  description: "The default bootstrap token"
  token-id: 8edc64
  token-secret: cfc6c83dc955d6s3
  usage-bootstrap-authentication: "true"
  usage-bootstrap-signing: "true"
  auth-extra-groups: system:bootstrappers:worker,system:bootstrappers:ingress

EOF
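A bootstrap token has the form token-id.token-secret, where token-id matches [a-z0-9]{6} and token-secret matches [a-z0-9]{16}; the Secret must be named bootstrap-token-<token-id>, and the kubelet later presents the joined value (8edc64.cfc6c83dc955d6s3 in bootstrap.kubeconfig). A sketch for generating and checking such tokens instead of reusing the fixed one above; all function names are hypothetical:

```bash
#!/usr/bin/env bash
# is_bootstrap_token TOKEN : succeed if TOKEN looks like <6 chars>.<16 chars> from [a-z0-9].
is_bootstrap_token() {
  [[ "$1" =~ ^[a-z0-9]{6}\.[a-z0-9]{16}$ ]]
}

# gen_bootstrap_token : print a random token (6 characters for the id, 16 for the secret).
gen_bootstrap_token() {
  local chars
  chars=$(LC_ALL=C tr -dc 'a-z0-9' < /dev/urandom | head -c 22)
  printf '%s.%s\n' "${chars:0:6}" "${chars:6:16}"
}

# token_secret_fields TOKEN : print the Secret name and stringData fields derived from TOKEN.
token_secret_fields() {
  is_bootstrap_token "$1" || { echo "invalid token: $1" >&2; return 1; }
  printf 'name: bootstrap-token-%s\ntoken-id: %s\ntoken-secret: %s\n' \
    "${1%%.*}" "${1%%.*}" "${1#*.}"
}
```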

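# Reference: https://kubernetes.io/zh/docs/reference/command-line-tools-reference/kubelet-tls-bootstrapping/

### The bootstrapping node is now authenticated as a member of the system:bootstrappers group, and it needs to be authorized to create a certificate signing request (CSR) and to retrieve it once it has been signed. Fortunately, Kubernetes ships a ClusterRole, system:node-bootstrapper, that encapsulates exactly these permissions. To grant them, you only need to create a ClusterRoleBinding that binds the system:bootstrappers group to the cluster role system:node-bootstrapper.

# Allow bootstrapping nodes to create CSRs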

cd /root/k8s1

cd /root/k8s2

cat<<EOF > ./cfg/rb.yaml

apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: create-csrs-for-bootstrapping
subjects:
- kind: Group
  name: system:bootstrappers
  apiGroup: rbac.authorization.k8s.io
roleRef:
  kind: ClusterRole
  name: system:node-bootstrapper
  apiGroup: rbac.authorization.k8s.io

EOF

--------------------------------------------------------------------------------

# Approve all CSRs from the "system:bootstrappers" group

cat<<EOF > ./cfg/approve.yaml

apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: auto-approve-csrs-for-group
subjects:
- kind: Group
  name: system:bootstrappers
  apiGroup: rbac.authorization.k8s.io
roleRef:
  kind: ClusterRole
  name: system:certificates.k8s.io:certificatesigningrequests:nodeclient
  apiGroup: rbac.authorization.k8s.io

EOF

# Approve CSR renewal requests from the "system:nodes" group

cat <<EOF > ./cfg/approve-node.yaml

apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: auto-approve-renewals-for-nodes
subjects:
- kind: Group
  name: system:nodes
  apiGroup: rbac.authorization.k8s.io
roleRef:
  kind: ClusterRole
  name: system:certificates.k8s.io:certificatesigningrequests:selfnodeclient
  apiGroup: rbac.authorization.k8s.io

EOF

kubectl apply -f /root/k8s1/cfg/secret

kubectl apply -f /root/k8s1/cfg/rb.yaml

kubectl apply -f /root/k8s1/cfg/approve.yaml

kubectl apply -f /root/k8s1/cfg/approve-node.yaml

kubectl --kubeconfig /root/k8s2/cfg/admin.kubeconfig apply -f /root/k8s2/cfg/secret

kubectl --kubeconfig /root/k8s2/cfg/admin.kubeconfig apply -f /root/k8s2/cfg/rb.yaml

kubectl --kubeconfig /root/k8s2/cfg/admin.kubeconfig apply -f /root/k8s2/cfg/approve.yaml

kubectl --kubeconfig /root/k8s2/cfg/admin.kubeconfig apply -f /root/k8s2/cfg/approve-node.yaml

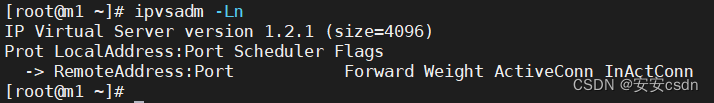

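# All three hosts show the same output

# ipvsadm shows no entries (the IPVS rules for Service IPs are created by kube-proxy, which has not been started at this point)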

[root@m1 certs]# ipvsadm

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

[root@n1 certs]# ipvsadm -Ln

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

# 11.254.0.1:443 is not reachable either

[root@m1 certs]# curl https://11.254.0.1:443 --cacert ca.pem --cert apiserver.pem --key apiserver-key.pem

curl: (7) Failed connect to 11.254.0.1:443; Connection refused

[root@n1 certs]# curl https://11.254.0.1:443 --cacert ca.pem --cert apiserver.pem --key apiserver-key.pem

curl: (7) Failed connect to 11.254.0.1:443; Connection refused
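Both apiservers use --service-cluster-ip-range=11.254.0.0/16, so each cluster's default kubernetes Service is expected to get 11.254.0.1, the first address of the range. Both apiservers advertise 192.168.11.187, so their kubernetes endpoints should differ only in port (6443 for k8s1, 16443 for k8s2); what 11.254.0.1 maps to on a given node then depends on the rules that node's kube-proxy programs from its own cluster. A sketch for recording both sides of the comparison, using only standard kubectl flags; show_kubernetes_svc is a hypothetical name:

```bash
#!/usr/bin/env bash
# show_kubernetes_svc KUBECONFIG... : for each cluster, print the kubeconfig, the ClusterIP
# of default/kubernetes and the port(s) its endpoints point at (tab-separated).
show_kubernetes_svc() {
  local kc ip ports
  for kc in "$@"; do
    ip=$(kubectl --kubeconfig "$kc" -n default get svc kubernetes \
           -o jsonpath='{.spec.clusterIP}') || return
    ports=$(kubectl --kubeconfig "$kc" -n default get endpoints kubernetes \
           -o jsonpath='{.subsets[*].ports[*].port}') || return
    printf '%s\t%s\t%s\n' "$kc" "$ip" "$ports"
  done
}

# Usage on m1:
#   show_kubernetes_svc /root/k8s1/cfg/admin.kubeconfig /root/k8s2/cfg/admin.kubeconfig
```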

cat<<EOF > /usr/lib/systemd/system/kubelet.service

[Unit]

Description=Kubernetes Kubelet

After=docker.service

Requires=docker.service

[Service]

EnvironmentFile=/root/k8s1/cfg/kubelet

ExecStart=/usr/local/bin/kubelet \$KUBELET_OPTS

Restart=on-failure

StartLimitInterval=0

RestartSec=10

KillMode=process

[Install]

WantedBy=multi-user.target

EOF

cat<<EOF > /root/k8s1/cfg/kubelet

KUBELET_OPTS="--hostname-override=n1 \\

--bootstrap-kubeconfig=/root/k8s1/cfg/bootstrap.kubeconfig \\

--kubeconfig=/root/k8s1/cfg/kubelet.kubeconfig \\

--cert-dir=/root/k8s1/certs \\

--network-plugin=cni \\

--config=/root/k8s1/cfg/kubelet.config \\

--fail-swap-on=false \\

--container-runtime=docker \\

--runtime-request-timeout=15m \\

--rotate-certificates \\

--container-runtime-endpoint=unix:///var/run/dockershim.sock \\

--v=4 \\

--root-dir=/vdata/kubelet \\

--pod-infra-container-image=registry.cn-hangzhou.aliyuncs.com/google-containers/pause-amd64:3.0"

EOF

# On each node, set address to that node's own IP (container runtime: docker)

cat<<EOF > /root/k8s1/cfg/kubelet.config

kind: KubeletConfiguration

apiVersion: kubelet.config.k8s.io/v1beta1

address: 192.168.11.188

port: 10250

readOnlyPort: 10255

healthzBindAddress: 127.0.0.1

healthzPort: 10248

cgroupDriver: cgroupfs

httpCheckFrequency: 0s

imageMinimumGCAge: 0s

nodeStatusReportFrequency: 0s

nodeStatusUpdateFrequency: 0s

rotateCertificates: true

runtimeRequestTimeout: 0s

staticPodPath: /root/k8s1/manifests

streamingConnectionIdleTimeout: 0s

syncFrequency: 0s

volumeStatsAggPeriod: 0s

clusterDNS: [11.254.0.10]

clusterDomain: cluster.local

failSwapOn: false

authentication:

anonymous:

enabled: false

webhook:

cacheTTL: 0s

enabled: true

x509:

clientCAFile: /root/k8s1/certs/ca.pem

authorization:

mode: Webhook

webhook:

cacheAuthorizedTTL: 0s

cacheUnauthorizedTTL: 0s

fileCheckFrequency: 0s

cpuManagerReconcilePeriod: 0s

evictionPressureTransitionPeriod: 0s

evictionHard:

memory.available: "10%"

EOF
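The kubelet.config files for n1 and n2 differ only in `address` and in the /root/k8s1 vs /root/k8s2 paths, so the per-node change can be scripted. Below is a minimal sketch. It assumes the file has exactly one top-level `address:` line. `set_kubelet_address` is a hypothetical helper, not part of any Kubernetes tooling, and it relies on GNU `sed -i` as found on CentOS 7.

```shell
#!/usr/bin/env bash
# Hypothetical helper: rewrite the top-level "address:" line of a
# KubeletConfiguration file in place (GNU sed -i).
# The ^ anchor means keys such as healthzBindAddress are left untouched.
set_kubelet_address() {
  local file="$1" ip="$2"
  if [ ! -f "$file" ]; then
    echo "no such file: $file" >&2
    return 1
  fi
  sed -i "s/^address: .*/address: ${ip}/" "$file"
}
```

For example, `set_kubelet_address /root/k8s2/cfg/kubelet.config 192.168.11.189` would produce the n2 variant used further down.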

APISERVER="https://m1.apiserver:6443"

cat<<EOF > /root/k8s1/cfg/bootstrap.kubeconfig
apiVersion: v1
kind: Config
clusters:
- cluster:
    certificate-authority: /root/k8s1/certs/ca.pem
    server: ${APISERVER}
  name: bootstrap
contexts:
- context:
    cluster: bootstrap
    user: kubelet-bootstrap
  name: bootstrap
current-context: bootstrap
preferences: {}
users:
- name: kubelet-bootstrap
  user:
    token: 8edc64.cfc6c83dc955d6s3
EOF
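The token in bootstrap.kubeconfig has to follow the Kubernetes bootstrap token format `[a-z0-9]{6}.[a-z0-9]{16}`: a 6-character token ID, a dot, and a 16-character secret. It also has to match the token the apiserver was configured with, which is outside this section. Below is a small format check as a sketch. The helper names are hypothetical, and `LC_ALL=C` is set so that `[a-z]` stays an ASCII range under a Chinese locale.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed if $1 has the shape of a bootstrap token,
# i.e. <6 lowercase alphanumerics>.<16 lowercase alphanumerics>.
is_bootstrap_token() {
  case "$1" in
    *$'\n'*) return 1 ;;   # reject multi-line input
  esac
  printf '%s\n' "$1" | LC_ALL=C grep -Eqx '[a-z0-9]{6}\.[a-z0-9]{16}'
}

# Hypothetical helper: print the token ID (the part before the dot).
token_id() {
  printf '%s\n' "${1%%.*}"
}
```

Running `is_bootstrap_token 8edc64.cfc6c83dc955d6s3 && token_id 8edc64.cfc6c83dc955d6s3` checks the token used above and prints its ID.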

systemctl stop kubelet && systemctl daemon-reload && systemctl enable kubelet && systemctl start kubelet && systemctl status kubelet

APISERVER="https://m1.apiserver:6443"

cat<<EOF > /root/k8s1/cfg/kube-proxy.kubeconfig
apiVersion: v1
clusters:
- cluster:
    certificate-authority: /root/k8s1/certs/ca.pem
    server: ${APISERVER}
  name: kubernetes
contexts:
- context:
    cluster: kubernetes
    user: system:kube-proxy
  name: system:kube-proxy
current-context: system:kube-proxy
kind: Config
preferences: {}
users:
- name: system:kube-proxy
  user:
    client-certificate: /root/k8s1/certs/kube-proxy.pem
    client-key: /root/k8s1/certs/kube-proxy-key.pem
EOF

cat<<EOF > /usr/lib/systemd/system/kube-proxy.service
[Unit]
Description=Kubernetes Proxy
After=network.target

[Service]
EnvironmentFile=/root/k8s1/cfg/kube-proxy
ExecStart=/usr/local/bin/kube-proxy \$KUBE_PROXY_OPTS
Restart=on-failure
StartLimitInterval=0
RestartSec=10

[Install]
WantedBy=multi-user.target
EOF

## Change --hostname-override on each node

cat<<EOF > /root/k8s1/cfg/kube-proxy
KUBE_PROXY_OPTS="--hostname-override=192.168.11.188 \\
--config=/root/k8s1/cfg/kube-proxy-bootstrap.config \\
--v=4"
EOF
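On n1 the kubelet registers as `n1`, while kube-proxy is started with `--hostname-override=192.168.11.188`. As far as we know, kube-proxy uses this name to look up its own Node object, for example to determine the node IP. When the names differ, kube-proxy typically logs that it cannot determine the node IP and falls back to 127.0.0.1. The results in this document were obtained with the files exactly as written.

Below is a small sketch that compares the two EnvironmentFiles. The helper names are hypothetical, and the sketch assumes the flag appears as `--hostname-override=VALUE` followed by a space or a quote.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the --hostname-override value from a systemd
# EnvironmentFile such as /root/k8s1/cfg/kubelet or /root/k8s1/cfg/kube-proxy.
hostname_override() {
  grep -o -- '--hostname-override=[^ "]*' "$1" | head -n 1 | cut -d= -f2
}

# Hypothetical helper: compare the kubelet file ($1) with the kube-proxy file ($2).
check_hostname_override() {
  local k p
  k=$(hostname_override "$1")
  p=$(hostname_override "$2")
  if [ "$k" = "$p" ]; then
    echo "ok: both use $k"
  else
    echo "mismatch: kubelet=$k kube-proxy=$p"
    return 1
  fi
}
```

With the files above, `check_hostname_override /root/k8s1/cfg/kubelet /root/k8s1/cfg/kube-proxy` reports `mismatch: kubelet=n1 kube-proxy=192.168.11.188`.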

cat<<EOF > /root/k8s1/cfg/kube-proxy-bootstrap.config
apiVersion: kubeproxy.config.k8s.io/v1alpha1
kind: KubeProxyConfiguration
bindAddress: 0.0.0.0
clientConnection:
  acceptContentTypes: ""
  burst: 0
  contentType: ""
  kubeconfig: /root/k8s1/cfg/kube-proxy.kubeconfig
  qps: 0
clusterCIDR: 172.248.0.0/16
configSyncPeriod: 0s
conntrack:
  maxPerCore: null
  min: null
  tcpCloseWaitTimeout: null
  tcpEstablishedTimeout: null
detectLocalMode: ""
enableProfiling: false
healthzBindAddress: ""
hostnameOverride: ""
iptables:
  masqueradeAll: false
  masqueradeBit: null
  minSyncPeriod: 0s
  syncPeriod: 0s
ipvs:
  excludeCIDRs: null
  minSyncPeriod: 0s
  scheduler: ""
  strictARP: false
  syncPeriod: 0s
  tcpFinTimeout: 0s
  tcpTimeout: 0s
  udpTimeout: 0s
metricsBindAddress: ""
mode: "ipvs"
nodePortAddresses: null
oomScoreAdj: null
portRange: ""
showHiddenMetricsForVersion: ""
udpIdleTimeout: 0s
winkernel:
  enableDSR: false
  networkName: ""
  sourceVip: ""
EOF

systemctl stop kube-proxy && systemctl daemon-reload && systemctl enable kube-proxy && systemctl start kube-proxy && systemctl status kube-proxy

cat<<EOF > /usr/lib/systemd/system/kubelet.service
[Unit]
Description=Kubernetes Kubelet
After=docker.service
Requires=docker.service

[Service]
EnvironmentFile=/root/k8s2/cfg/kubelet
ExecStart=/usr/local/bin/kubelet \$KUBELET_OPTS
Restart=on-failure
StartLimitInterval=0
RestartSec=10
KillMode=process

[Install]
WantedBy=multi-user.target
EOF

cat<<EOF > /root/k8s2/cfg/kubelet
KUBELET_OPTS="--hostname-override=n2 \\
--bootstrap-kubeconfig=/root/k8s2/cfg/bootstrap.kubeconfig \\
--kubeconfig=/root/k8s2/cfg/kubelet.kubeconfig \\
--cert-dir=/root/k8s2/certs \\
--network-plugin=cni \\
--config=/root/k8s2/cfg/kubelet.config \\
--fail-swap-on=false \\
--container-runtime=docker \\
--runtime-request-timeout=15m \\
--rotate-certificates \\
--container-runtime-endpoint=unix:///var/run/dockershim.sock \\
--v=4 \\
--root-dir=/vdata/kubelet \\
--pod-infra-container-image=registry.cn-hangzhou.aliyuncs.com/google-containers/pause-amd64:3.0"
EOF

# On each node, change address to that node's own IP (runtime: docker)

cat<<EOF > /root/k8s2/cfg/kubelet.config
kind: KubeletConfiguration
apiVersion: kubelet.config.k8s.io/v1beta1
address: 192.168.11.189
port: 10250
readOnlyPort: 10255
healthzBindAddress: 127.0.0.1
healthzPort: 10248
cgroupDriver: cgroupfs
httpCheckFrequency: 0s
imageMinimumGCAge: 0s
nodeStatusReportFrequency: 0s
nodeStatusUpdateFrequency: 0s
rotateCertificates: true
runtimeRequestTimeout: 0s
staticPodPath: /root/k8s2/manifests
streamingConnectionIdleTimeout: 0s
syncFrequency: 0s
volumeStatsAggPeriod: 0s
clusterDNS: [11.254.0.10]
clusterDomain: cluster.local
failSwapOn: false
authentication:
  anonymous:
    enabled: false
  webhook:
    cacheTTL: 0s
    enabled: true
  x509:
    clientCAFile: /root/k8s2/certs/ca.pem
authorization:
  mode: Webhook
  webhook:
    cacheAuthorizedTTL: 0s
    cacheUnauthorizedTTL: 0s
fileCheckFrequency: 0s
cpuManagerReconcilePeriod: 0s
evictionPressureTransitionPeriod: 0s
evictionHard:
  memory.available: "10%"
EOF

APISERVER="https://m1.apiserver:16443"

cat<<EOF > /root/k8s2/cfg/bootstrap.kubeconfig
apiVersion: v1
kind: Config
clusters:
- cluster:
    certificate-authority: /root/k8s2/certs/ca.pem
    server: ${APISERVER}
  name: bootstrap
contexts:
- context:
    cluster: bootstrap
    user: kubelet-bootstrap
  name: bootstrap
current-context: bootstrap
preferences: {}
users:
- name: kubelet-bootstrap
  user:
    token: 8edc64.cfc6c83dc955d6s3
EOF

systemctl stop kubelet && systemctl daemon-reload && systemctl enable kubelet && systemctl start kubelet && systemctl status kubelet

APISERVER="https://m1.apiserver:16443"

cat<<EOF > /root/k8s2/cfg/kube-proxy.kubeconfig
apiVersion: v1
clusters:
- cluster:
    certificate-authority: /root/k8s2/certs/ca.pem
    server: ${APISERVER}
  name: kubernetes
contexts:
- context:
    cluster: kubernetes
    user: system:kube-proxy
  name: system:kube-proxy
current-context: system:kube-proxy
kind: Config
preferences: {}
users:
- name: system:kube-proxy
  user:
    client-certificate: /root/k8s2/certs/kube-proxy.pem
    client-key: /root/k8s2/certs/kube-proxy-key.pem
EOF

cat<<EOF > /usr/lib/systemd/system/kube-proxy.service
[Unit]
Description=Kubernetes Proxy
After=network.target

[Service]
EnvironmentFile=/root/k8s2/cfg/kube-proxy
ExecStart=/usr/local/bin/kube-proxy \$KUBE_PROXY_OPTS
Restart=on-failure
StartLimitInterval=0
RestartSec=10

[Install]
WantedBy=multi-user.target
EOF

## Change --hostname-override on each node

cat<<EOF > /root/k8s2/cfg/kube-proxy
KUBE_PROXY_OPTS="--hostname-override=192.168.11.189 \\
--config=/root/k8s2/cfg/kube-proxy-bootstrap.config \\
--v=4"
EOF

cat<<EOF > /root/k8s2/cfg/kube-proxy-bootstrap.config
apiVersion: kubeproxy.config.k8s.io/v1alpha1
kind: KubeProxyConfiguration
bindAddress: 0.0.0.0
clientConnection:
  acceptContentTypes: ""
  burst: 0
  contentType: ""
  kubeconfig: /root/k8s2/cfg/kube-proxy.kubeconfig
  qps: 0
clusterCIDR: 172.248.0.0/16
configSyncPeriod: 0s
conntrack:
  maxPerCore: null
  min: null
  tcpCloseWaitTimeout: null
  tcpEstablishedTimeout: null
detectLocalMode: ""
enableProfiling: false
healthzBindAddress: ""
hostnameOverride: ""
iptables:
  masqueradeAll: false
  masqueradeBit: null
  minSyncPeriod: 0s
  syncPeriod: 0s
ipvs:
  excludeCIDRs: null
  minSyncPeriod: 0s
  scheduler: ""
  strictARP: false
  syncPeriod: 0s
  tcpFinTimeout: 0s
  tcpTimeout: 0s
  udpTimeout: 0s
metricsBindAddress: ""
mode: "ipvs"
nodePortAddresses: null
oomScoreAdj: null
portRange: ""
showHiddenMetricsForVersion: ""
udpIdleTimeout: 0s
winkernel:
  enableDSR: false
  networkName: ""
  sourceVip: ""
EOF

systemctl stop kube-proxy && systemctl daemon-reload && systemctl enable kube-proxy && systemctl start kube-proxy && systemctl status kube-proxy

# m1

[root@m1 certs]# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 11.254.0.1 <none> 443/TCP 125m

[root@m1 certs]# ipvsadm -Ln

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

[root@m1 certs]# curl https://11.254.0.1:443 --cacert ca.pem --cert apiserver.pem --key apiserver-key.pem

curl: (7) Failed connect to 11.254.0.1:443; Connection refused

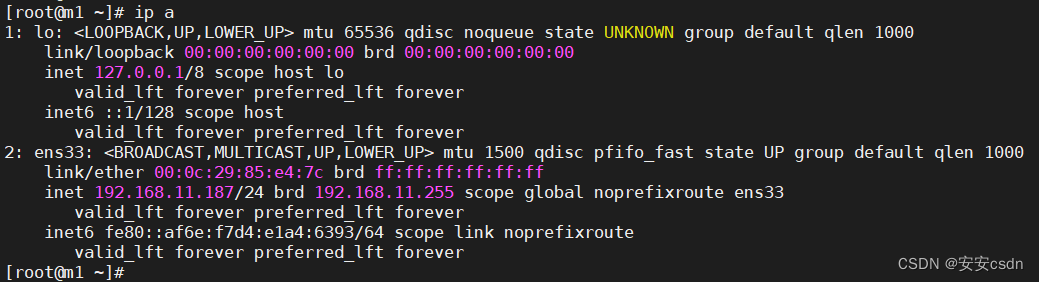

[root@m1 certs]# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:0c:29:85:e4:7c brd ff:ff:ff:ff:ff:ff

inet 192.168.11.187/24 brd 192.168.11.255 scope global noprefixroute ens33

valid_lft forever preferred_lft forever

inet6 fe80::af6e:f7d4:e1a4:6393/64 scope link noprefixroute

valid_lft forever preferred_lft forever

[root@m1 certs]#

[root@m1 ~]# kubectl get ep -owide -A

NAMESPACE NAME ENDPOINTS AGE

default kubernetes 192.168.11.187:6443 10h

[root@m1 ~]# kubectl --kubeconfig /root/k8s2/cfg/admin.kubeconfig get ep -owide -A

NAMESPACE NAME ENDPOINTS AGE

default kubernetes 192.168.11.187:16443 10h

######################################################

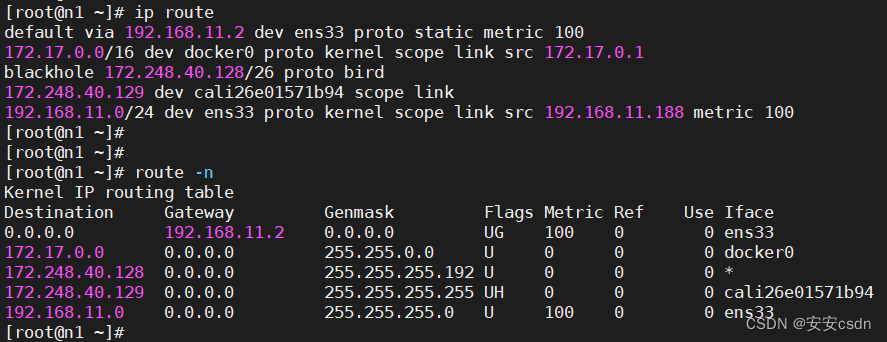

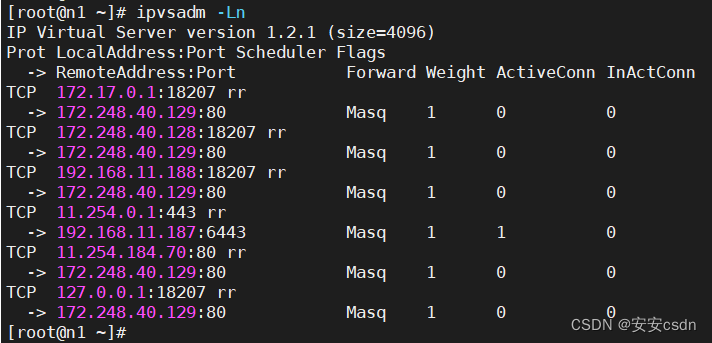

# n1

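# n1 now has an IPVS entry for 11.254.0.1, so curl to 11.254.0.1 gets through (the 401 below is returned by the apiserver itself, so the connection succeeded and only authentication failed)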

[root@n1 certs]# ipvsadm -Ln

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 11.254.0.1:443 rr

-> 192.168.11.187:6443 Masq 1 0 0

[root@n1 certs]#
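To compare the IPVS tables on n1 and n2 at a glance, the `ipvsadm -Ln` output can be reduced to one `VIP -> backend` line per real server. This is an awk sketch. It assumes the standard `ipvsadm -Ln` layout shown above: a `TCP`/`UDP`/`SCTP` virtual-service line followed by `->` real-server lines. `ipvs_map` is a hypothetical name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: summarize `ipvsadm -Ln` output read from stdin as
# "PROTO VIP:PORT -> BACKEND:PORT", one line per real server.
ipvs_map() {
  awk '
    # Virtual service line, e.g. "TCP  11.254.0.1:443 rr"
    $1 == "TCP" || $1 == "UDP" || $1 == "SCTP" { vip = $1 " " $2; next }
    # Real server line, e.g. "  -> 192.168.11.187:6443  Masq 1 0 0".
    # The column header also starts with "->" but appears before any VIP.
    $1 == "->" && vip != "" { print vip " -> " $2 }
  '
}
```

Run as `ipvsadm -Ln | ipvs_map`. For the n1 table above this gives `TCP 11.254.0.1:443 -> 192.168.11.187:6443`. On n2 the same VIP maps to `192.168.11.187:16443`, so the same ClusterIP points at a different apiserver in each cluster.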

[root@n1 certs]# curl https://11.254.0.1:443 --cacert ca.pem --cert apiserver.pem --key apiserver-key.pem

{

"kind": "Status",

"apiVersion": "v1",

"metadata": {

},

"status": "Failure",

"message": "Unauthorized",

"reason": "Unauthorized",

"code": 401

}[root@n1 certs]#

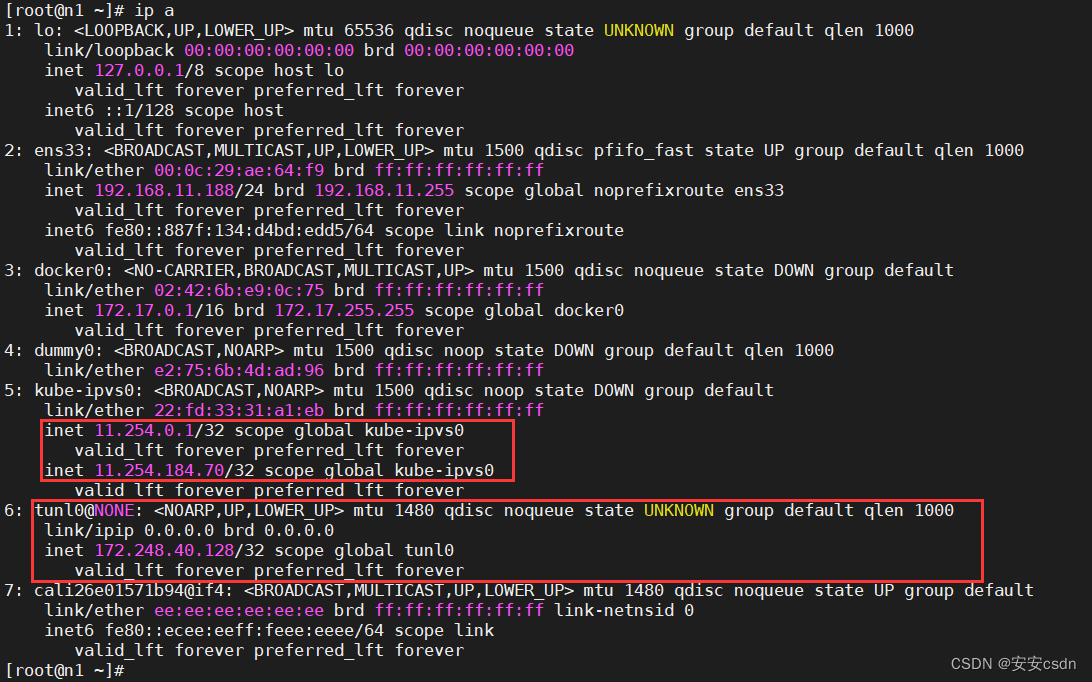

[root@n1 certs]# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:0c:29:ae:64:f9 brd ff:ff:ff:ff:ff:ff

inet 192.168.11.188/24 brd 192.168.11.255 scope global noprefixroute ens33

valid_lft forever preferred_lft forever

inet6 fe80::887f:134:d4bd:edd5/64 scope link noprefixroute

valid_lft forever preferred_lft forever

3: docker0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN group default

link/ether 02:42:6b:e9:0c:75 brd ff:ff:ff:ff:ff:ff

inet 172.17.0.1/16 brd 172.17.255.255 scope global docker0

valid_lft forever preferred_lft forever

4: dummy0: <BROADCAST,NOARP> mtu 1500 qdisc noop state DOWN group default qlen 1000

link/ether e2:75:6b:4d:ad:96 brd ff:ff:ff:ff:ff:ff

5: kube-ipvs0: <BROADCAST,NOARP> mtu 1500 qdisc noop state DOWN group default

link/ether 22:fd:33:31:a1:eb brd ff:ff:ff:ff:ff:ff

inet 11.254.0.1/32 scope global kube-ipvs0

valid_lft forever preferred_lft forever

# In IPVS mode, kube-proxy first creates a virtual interface on the host (named kube-ipvs0) and binds the Service ClusterIPs to it
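The same check can be done on the interface side, by pulling the ClusterIPs that kube-proxy bound to kube-ipvs0 out of `ip a` output. This awk sketch assumes the usual `N: ifname: <FLAGS>` header lines. `iface_ipv4` is a hypothetical name. On a live host, `ip -4 -o addr show dev kube-ipvs0` gives the same information directly.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `ip a` output on stdin and print the IPv4
# addresses (with prefix length) configured on interface $1.
iface_ipv4() {
  awk -v ifname="$1" '
    # Interface header lines look like "5: kube-ipvs0: <BROADCAST,NOARP> ..."
    /^[0-9]+: / {
      cur = $2
      sub(/:$/, "", cur)     # drop the trailing colon
      sub(/@.*/, "", cur)    # drop a peer suffix such as "@if3"
      next
    }
    cur == ifname && $1 == "inet" { print $2 }
  '
}
```

Fed the `ip a` output above, `iface_ipv4 kube-ipvs0` would print `11.254.0.1/32` on n1 and n2. It would print nothing on m1, which has no kube-ipvs0 interface.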

######################################################

# n2

[root@n2 certs]# ipvsadm -Ln

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 11.254.0.1:443 rr

-> 192.168.11.187:16443 Masq 1 0 0

[root@n2 certs]# curl https://11.254.0.1:443 --cacert ca.pem --cert apiserver.pem --key apiserver-key.pem

{

"kind": "Status",

"apiVersion": "v1",

"metadata": {

},

"status": "Failure",

"message": "Unauthorized",

"reason": "Unauthorized",

"code": 401

}

[root@n2 certs]# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:0c:29:26:f4:43 brd ff:ff:ff:ff:ff:ff

inet 192.168.11.189/24 brd 192.168.11.255 scope global noprefixroute ens33

valid_lft forever preferred_lft forever

inet6 fe80::9f70:ae2e:2bbc:d7c3/64 scope link noprefixroute

valid_lft forever preferred_lft forever

3: docker0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN group default

link/ether 02:42:e4:e6:9d:17 brd ff:ff:ff:ff:ff:ff

inet 172.17.0.1/16 brd 172.17.255.255 scope global docker0

valid_lft forever preferred_lft forever

4: dummy0: <BROADCAST,NOARP> mtu 1500 qdisc noop state DOWN group default qlen 1000

link/ether 82:0b:46:7e:31:12 brd ff:ff:ff:ff:ff:ff

5: kube-ipvs0: <BROADCAST,NOARP> mtu 1500 qdisc noop state DOWN group default

link/ether 12:c3:82:5f:4c:29 brd ff:ff:ff:ff:ff:ff

inet 11.254.0.1/32 scope global kube-ipvs0

valid_lft forever preferred_lft forever

# Deploy calico on k8s1

kubectl --kubeconfig /root/k8s1/cfg/admin.kubeconfig apply -f calico_zeng3.20.1_k8s1.yaml

# Deploy calico on k8s2

kubectl --kubeconfig /root/k8s2/cfg/admin.kubeconfig apply -f calico_zeng3.20.1_k8s2.yaml

# k8s1

[root@m1 ~]# kubectl get node -owide -A

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

n1 Ready <none> 8h v1.21.10 192.168.11.188 <none> CentOS Linux 7 (Core) 3.10.0-1160.el7.x86_64 docker://19.3.9

# k8s2

[root@m1 ~]# kubectl --kubeconfig /root/k8s2/cfg/admin.kubeconfig get node -owide

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

n2 Ready <none> 62m v1.21.10 192.168.11.189 <none> CentOS Linux 7 (Core) 3.10.0-1160.el7.x86_64 docker://19.3.9

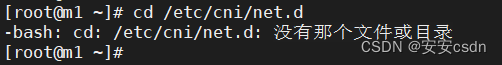

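# m1 has none of the network files that were just deployed (the calico-node DaemonSet only runs on registered nodes, and m1 is not one)

[root@m1 etcdCerts]# cd /etc/cni/net.d

-bash: cd: /etc/cni/net.d: No such file or directory

# Only n1 has them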

[root@n1 etcdCerts]# cd /etc/cni/net.d


[root@n1 net.d]# ll

total 8

-rw-r--r--. 1 root root 848 May 25 09:45 10-calico.conflist

-rw-------. 1 root root 3059 May 25 09:45 calico-kubeconfig

drwxr-xr-x. 2 root root 54 May 25 09:45 calico-tls

[root@n1 net.d]#
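Whether a host has received the Calico CNI files can be checked with a small test. This sketch assumes the default CNI config directory /etc/cni/net.d and the file names listed on n1. The first is `10-calico.conflist`, which comes from `CNI_CONF_NAME` in the manifest; the second is `calico-kubeconfig`. The helper names are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed if the Calico CNI config written by the
# install-cni init container is present in the given directory.
has_calico_cni() {
  local dir="${1:-/etc/cni/net.d}"
  [ -f "$dir/10-calico.conflist" ] && [ -f "$dir/calico-kubeconfig" ]
}

# Hypothetical helper: one-line report for the local host.
report_cni() {
  if has_calico_cni "$1"; then
    echo "$(hostname): calico CNI config present"
  else
    echo "$(hostname): calico CNI config missing"
  fi
}
```

Running `report_cni` on m1 and on n1 should reproduce the difference shown above.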

## Cluster 1

# Create an nginx deployment (pulls the nginx image from the internet)

kubectl create deployment nginx --image=nginx

# Check the status

kubectl get pod

# Expose the port

kubectl expose deployment nginx --port=80 --type=NodePort

# Check the externally exposed port

kubectl get pod,svc

## Cluster 2

# Create an nginx deployment (pulls the nginx image from the internet)

kubectl --kubeconfig /root/k8s2/cfg/admin.kubeconfig create deployment nginx --image=nginx

# Check the status

kubectl --kubeconfig /root/k8s2/cfg/admin.kubeconfig get pod

# Expose the port

kubectl --kubeconfig /root/k8s2/cfg/admin.kubeconfig expose deployment nginx --port=80 --type=NodePort

# Check the externally exposed port

kubectl --kubeconfig /root/k8s2/cfg/admin.kubeconfig get pod,svc

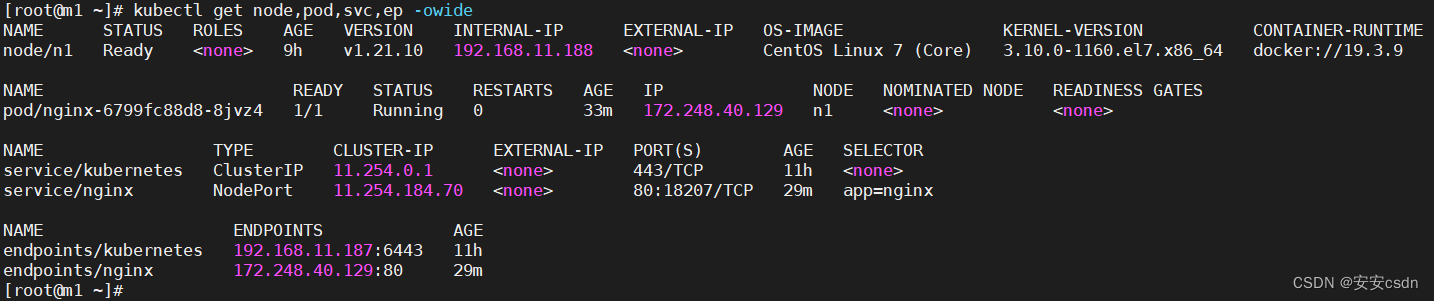

[root@m1 ~]# kubectl get pod,svc,ep -owide -A

NAMESPACE NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

default pod/nginx-6799fc88d8-8jvz4 1/1 Running 0 26m 172.248.40.129 n1 <none> <none>

kube-system pod/calico-kube-controllers-75b56fb6d7-rjzmf 1/1 Running 0 39m 192.168.11.188 n1 <none> <none>

kube-system pod/calico-node-s6vnm 1/1 Running 0 39m 192.168.11.188 n1 <none> <none>

NAMESPACE NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

default service/kubernetes ClusterIP 11.254.0.1 <none> 443/TCP 10h <none>

default service/nginx NodePort 11.254.184.70 <none> 80:18207/TCP 22m app=nginx

NAMESPACE NAME ENDPOINTS AGE

default endpoints/kubernetes 192.168.11.187:6443 10h

default endpoints/nginx 172.248.40.129:80 22m

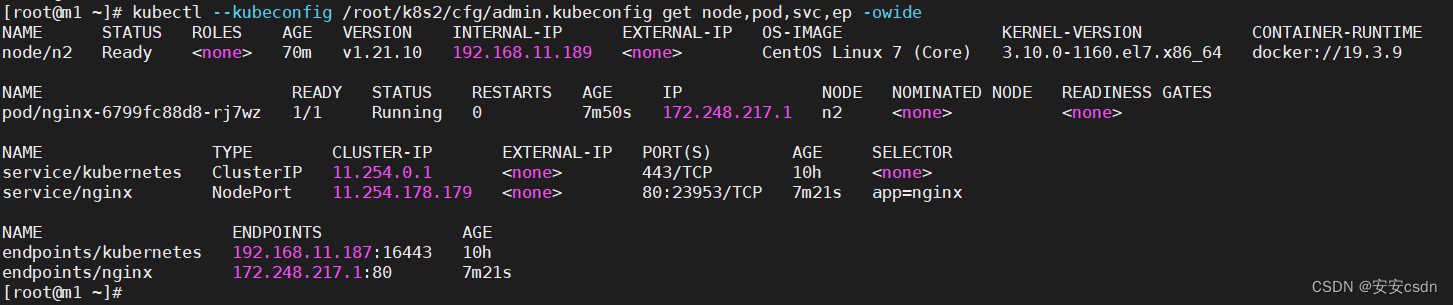

[root@m1 ~]# kubectl --kubeconfig /root/k8s2/cfg/admin.kubeconfig get pod,svc,ep -owide -A

NAMESPACE NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

default pod/nginx-6799fc88d8-rj7wz 1/1 Running 0 59s 172.248.217.1 n2 <none> <none>

kube-system pod/calico-kube-controllers-75b56fb6d7-g7nrl 1/1 Running 0 4m1s 192.168.11.189 n2 <none> <none>

kube-system pod/calico-node-c6htt 1/1 Running 0 4m1s 192.168.11.189 n2 <none> <none>

NAMESPACE NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

default service/kubernetes ClusterIP 11.254.0.1 <none> 443/TCP 10h <none>

default service/nginx NodePort 11.254.178.179 <none> 80:23953/TCP 30s app=nginx

NAMESPACE NAME ENDPOINTS AGE

default endpoints/kubernetes 192.168.11.187:16443 10h

default endpoints/nginx 172.248.217.1:80 30s
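Both clusters hand out pod IPs from the same range. The nginx pods got 172.248.40.129 (k8s1) and 172.248.217.1 (k8s2), and both kube-proxy configs set `clusterCIDR: 172.248.0.0/16`. Below is a pure-bash sketch that checks whether an IP falls inside a CIDR. `ip2int` and `ip_in_cidr` are hypothetical helpers, and only IPv4 dotted-quad input without leading zeros is handled.

```shell
#!/usr/bin/env bash
# Hypothetical helper: dotted-quad IPv4 -> 32-bit integer (bash uses 64-bit arithmetic).
ip2int() {
  local IFS=.
  local -a o
  read -r -a o <<< "$1"
  echo $(( (o[0] << 24) | (o[1] << 16) | (o[2] << 8) | o[3] ))
}

# Hypothetical helper: succeed if IPv4 address $1 lies inside CIDR $2.
ip_in_cidr() {
  local net="${2%/*}" bits="${2#*/}"
  # Keep the top $bits bits; masking with 0xFFFFFFFF stays within 32 bits.
  local mask=$(( (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
  (( ($(ip2int "$1") & mask) == ($(ip2int "$net") & mask) ))
}
```

For example, `ip_in_cidr 172.248.40.129 172.248.0.0/16 && echo inside` confirms the k8s1 pod IP against the configured clusterCIDR.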

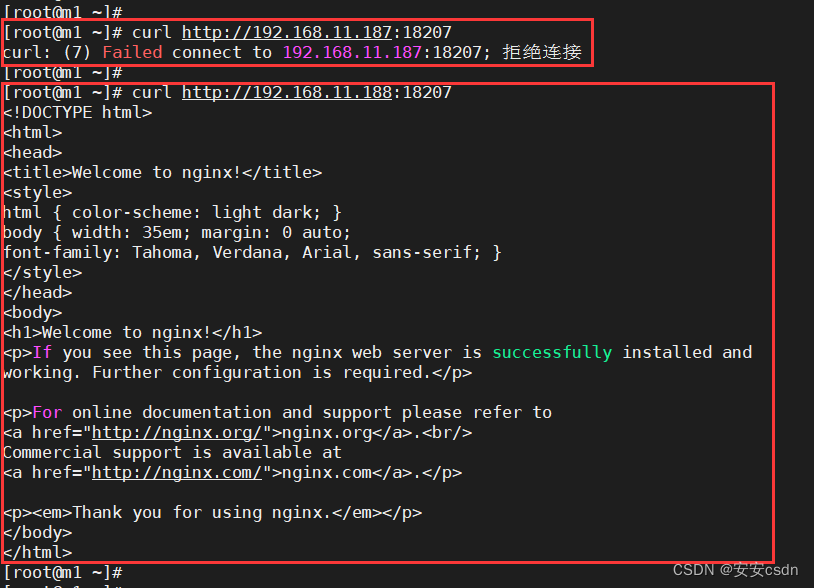

# As shown, from m1 the k8s1 service at http://192.168.11.187:18207 is unreachable: m1 is not a node and runs only the master components, so nothing on m1 handles the NodePort

# From m1, the k8s1 service at http://192.168.11.188:18207 is reachable: n1 is a node running kube-proxy, which has programmed the IPVS rules for the NodePort

[root@m1 etcdCerts]# curl http://192.168.11.187:18207

curl: (7) Failed connect to 192.168.11.187:18207; Connection refused

[root@m1 etcdCerts]# curl http://192.168.11.188:18207

<!DOCTYPE html>

<html>

<head>

<title>Welcome to nginx!</title>

<style>

html { color-scheme: light dark; }

body { width: 35em; margin: 0 auto;

font-family: Tahoma, Verdana, Arial, sans-serif; }

</style>

</head>

<body>

<h1>Welcome to nginx!</h1>

<p>If you see this page, the nginx web server is successfully installed and

working. Further configuration is required.</p>

<p>For online documentation and support please refer to

<a href="http://nginx.org/">nginx.org</a>.<br/>

Commercial support is available at

<a href="http://nginx.com/">nginx.com</a>.</p>

<p><em>Thank you for using nginx.</em></p>

</body>

</html>
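The NodePort in the `PORT(S)` column (`80:18207/TCP`) is served by kube-proxy on each node of that cluster, which is why only the node IP answers. Below is a sketch that extracts the NodePort from that column and builds one URL per host to probe. The helper names are hypothetical, and for multi-port Services only the first port is taken. On a live cluster, `kubectl get svc nginx -o jsonpath='{.spec.ports[0].nodePort}'` returns the same number without text parsing.

```shell
#!/usr/bin/env bash
# Hypothetical helper: NodePort from a PORT(S) value such as "80:18207/TCP".
# Only the first entry of a multi-port value ("80:1/TCP,443:2/TCP") is used.
nodeport_of() {
  local first="${1%%,*}"   # "80:18207/TCP"
  first="${first%%/*}"     # "80:18207"
  case "$first" in
    *:*) printf '%s\n' "${first#*:}" ;;
    *)   return 1 ;;       # no NodePort, e.g. ClusterIP "443/TCP"
  esac
}

# Hypothetical helper: one URL per host IP for the given port.
nodeport_urls() {
  local port="$1" ip
  shift
  for ip in "$@"; do
    printf 'http://%s:%s\n' "$ip" "$port"
  done
}
```

A probe loop could then be `for u in $(nodeport_urls 18207 192.168.11.187 192.168.11.188); do curl -s -o /dev/null -w "%{http_code} $u\n" "$u"; done`.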

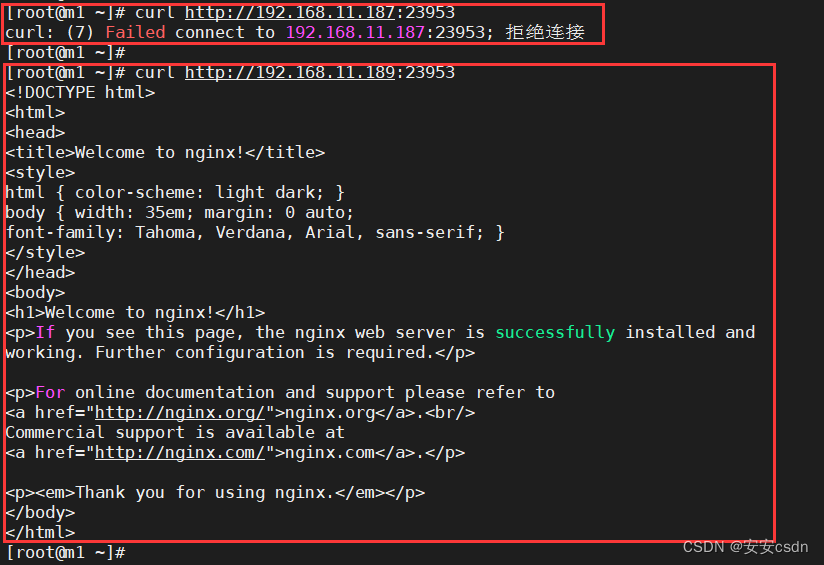

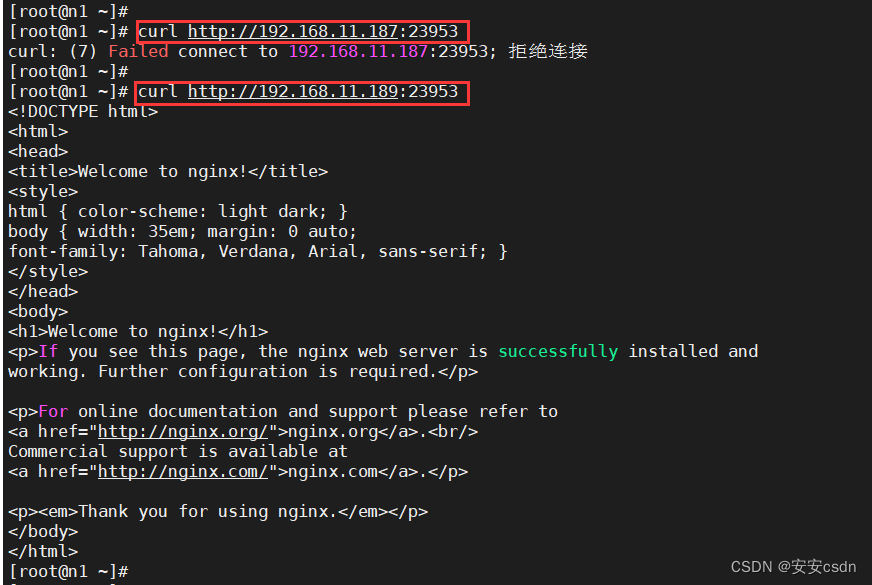

# Likewise, from m1 the k8s2 service at http://192.168.11.187:23953 is unreachable, because m1 runs only the master components and no kube-proxy

# From m1, the k8s2 service at http://192.168.11.189:23953 is reachable: n2 is a node running kube-proxy, which has programmed the IPVS rules for the NodePort

[root@m1 ~]# curl http://192.168.11.187:23953

curl: (7) Failed connect to 192.168.11.187:23953; Connection refused

[root@m1 ~]# curl http://192.168.11.189:23953

<!DOCTYPE html>

<html>

<head>

<title>Welcome to nginx!</title>

<style>

html { color-scheme: light dark; }

body { width: 35em; margin: 0 auto;

font-family: Tahoma, Verdana, Arial, sans-serif; }

</style>

</head>

<body>

<h1>Welcome to nginx!</h1>

<p>If you see this page, the nginx web server is successfully installed and

working. Further configuration is required.</p>

<p>For online documentation and support please refer to

<a href="http://nginx.org/">nginx.org</a>.<br/>

Commercial support is available at

<a href="http://nginx.com/">nginx.com</a>.</p>

<p><em>Thank you for using nginx.</em></p>

</body>

</html>

[root@m1 ~]#

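So in this setup, using 11.254.0.1 as the service ClusterIP in both clusters does not cause a conflict. Each cluster's kube-proxy programs 11.254.0.1 only on that cluster's own node: n1 forwards it to 192.168.11.187:6443 and n2 to 192.168.11.187:16443. m1, which hosts both control planes, runs no kube-proxy at all.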
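Both clusters report 11.254.0.1 for the default `kubernetes` Service because the apiserver reserves the first address of its service CIDR for that Service, and the two clusters presumably use the same range. Below is a bash sketch of that computation. The range 11.254.0.0/16 is an assumption, since the actual `--service-cluster-ip-range` value is not shown in this section, and the helper names are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: dotted-quad IPv4 -> 32-bit integer.
ip2int() {
  local IFS=.
  local -a o
  read -r -a o <<< "$1"
  echo $(( (o[0] << 24) | (o[1] << 16) | (o[2] << 8) | o[3] ))
}

# Hypothetical helper: 32-bit integer -> dotted-quad IPv4.
int2ip() {
  local n="$1"
  echo "$(( (n >> 24) & 255 )).$(( (n >> 16) & 255 )).$(( (n >> 8) & 255 )).$(( n & 255 ))"
}

# Hypothetical helper: first usable address of a CIDR (network address + 1),
# i.e. the ClusterIP the apiserver gives the "kubernetes" Service.
first_service_ip() {
  local net="${1%/*}" bits="${1#*/}"
  local mask=$(( (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
  int2ip $(( ($(ip2int "$net") & mask) + 1 ))
}
```

Under the assumed range, `first_service_ip 11.254.0.0/16` yields 11.254.0.1 for both clusters.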

Information is similar to that on node n1.
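The Secret in the Calico manifest that follows stores each etcd PEM file base64-encoded on a single line, as its own comment suggests (`cat <file> | base64 -w 0`). Below is a sketch that prints those `data:` entries from the files in one directory. It assumes the file names used in the manifest's CNI config (`ca.pem`, `etcd.pem`, `etcd-key.pem`) and GNU coreutils `base64`. `etcd_secret_data` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the data: entries of calico-etcd-secrets, each
# PEM file base64-encoded on one line (-w 0 disables line wrapping).
etcd_secret_data() {
  local dir="$1" pair key file
  for pair in etcd-ca:ca.pem etcd-cert:etcd.pem etcd-key:etcd-key.pem; do
    key="${pair%%:*}"
    file="$dir/${pair#*:}"
    [ -r "$file" ] || { echo "cannot read $file" >&2; return 1; }
    printf '  %s: %s\n' "$key" "$(base64 -w 0 < "$file")"
  done
}
```

For example, `etcd_secret_data /root/k8s1/etcdCerts` prints the three lines to paste under `data:`. An alternative is `kubectl create secret generic calico-etcd-secrets -n kube-system --from-file=etcd-ca=/root/k8s1/etcdCerts/ca.pem --from-file=etcd-cert=/root/k8s1/etcdCerts/etcd.pem --from-file=etcd-key=/root/k8s1/etcdCerts/etcd-key.pem --dry-run=client -o yaml`.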

---

# Source: calico/templates/calico-etcd-secrets.yaml
# The following contains k8s Secrets for use with a TLS enabled etcd cluster.
# For information on populating Secrets, see http://kubernetes.io/docs/user-guide/secrets/
apiVersion: v1
kind: Secret
type: Opaque
metadata:
  name: calico-etcd-secrets
  namespace: kube-system
data:
  # Populate the following with etcd TLS configuration if desired, but leave blank if
  # not using TLS for etcd.
  # The keys below should be uncommented and the values populated with the base64
  # encoded contents of each file that would be associated with the TLS data.
  # Example command for encoding a file contents: cat <file> | base64 -w 0
  etcd-ca: LS0tLS1CRUdJTiBDRVJUSUZJQ0FURS0tLS0tCk1JSURzakNDQXBxZ0F3SUJBZ0lVUUwxZmJkWis1bnZKSEdWVnpzbkl5SVBWa05Jd0RRWUpLb1pJaHZjTkFRRUwKQlFBd1h6RUxNQWtHQTFVRUJoTUNRMDR4RURBT0JnTlZCQWdUQjBKbGFVcHBibWN4RURBT0JnTlZCQWNUQjBKbAphVXBwYm1jeEREQUtCZ05WQkFvVEEyczRjekVQTUEwR0ExVUVDeE1HVTNsemRHVnRNUTB3Q3dZRFZRUURFd1JsCmRHTmtNQjRYRFRJeU1EVXlOREV6TXpjd01Gb1hEVEkzTURVeU16RXpNemN3TUZvd1h6RUxNQWtHQTFVRUJoTUMKUTA0eEVEQU9CZ05WQkFnVEIwSmxhVXBwYm1jeEVEQU9CZ05WQkFjVEIwSmxhVXBwYm1jeEREQUtCZ05WQkFvVApBMnM0Y3pFUE1BMEdBMVVFQ3hNR1UzbHpkR1Z0TVEwd0N3WURWUVFERXdSbGRHTmtNSUlCSWpBTkJna3Foa2lHCjl3MEJBUUVGQUFPQ0FROEFNSUlCQ2dLQ0FRRUF5ZzRIMGhCamZ1WkorcDUxOUxxREw2cExqdjJkOGlFWWdJcG8KMUFoTU9VMVJOSFlsOXEwUk5zZ290U0tBVkNueHBoaTRwb3QzZjdDWkwrUkN4VmhLak9NaVBNZWhKZHR4SHNENApIaGpyVGtTd0sxU29nTXhjRDFxb0tIVWZibnlhdTIxeXRCcS9kTExZWHcwWU54czNtTjI3b05qa0Fkd0phQjd2Ck5GZlJlbVFGWjEyak9PL0xHSjZmZ0FUWWh6VVdRSmJNdFF4azJSemQ5b1RlRkVJTjc3ZnJPT1Fib25kTDVBeEQKVFNpOTVVUU9TVFpVWEhKbHNWNW1KVWNENjRUd1FDS2o0TnNpaFlXY1Z1RGQ1UndhZXRsVU9jTjVRRzlRM0xyUAo3cG5NTDVFUU9BYVUxRVdpTmZVdTVsRXRtVlJiekpNQWhodS9qNHg1S2J3b2tLRHc4d0lEQVFBQm8yWXdaREFPCkJnTlZIUThCQWY4RUJBTUNBUVl3RWdZRFZSMFRBUUgvQkFnd0JnRUIvd0lCQWpBZEJnTlZIUTRFRmdRVVF0ckUKQnE1WFB4RS9wcnJyNUlXbEtJRFJkWFF3SHdZRFZSMGpCQmd3Rm9BVVF0ckVCcTVYUHhFL3BycnI1SVdsS0lEUgpkWFF3RFFZSktvWklodmNOQVFFTEJRQURnZ0VCQUgycTBKcmQ2cjlsWnNleW0rZjZ6SG0vUzJNTFpDbjJsQmNoCnVUK3cwcmdZd1FlTVB0bklUNHFHS3F0TlA3U3M3bnUvQmNpRFg3VVNrTXdlOU55VytScjl2VGEwWDZEREhObEMKb2owUTUySnNQcy84aWoyb3MrZTBlNU5saEp5L3NJQlhZUDUwazJPQlVXN1ZDRFkyUUsxUGt1Z2M5N1lEalNacApiaGxZZ1c2RndyTm1oZ29EV1FHbUw1Z29SWm1NV04wbXQ4N3A1R3I1TG9VV0RNZ2Z4SUROemdJV1YrNCsxMi9qCjJ3K0dVWm14VXUybnM1ZE5xUDE1VDhTeE5YR1pCWHMxbnU3bkxCUnMvMWxoTTNYQlRqQ2xVT0U2aHpTOEN6WWIKU1FZZSt1UDE3RG1aTkMreU56aU95bGZxQWsrN3pRTllsdU84L0RPdmFGWVVFUzMzMitjPQotLS0tLUVORCBDRVJUSUZJQ0FURS0tLS0tCg==
  etcd-cert: LS0tLS1CRUdJTiBDRVJUSUZJQ0FURS0tLS0tCk1JSUQ1akNDQXM2Z0F3SUJBZ0lVVFk3N1VDTldsbHBXdnVNSGZnMG9IZjBHbmdrd0RRWUpLb1pJaHZjTkFRRUwKQlFBd1h6RUxNQWtHQTFVRUJoTUNRMDR4RURBT0JnTlZCQWdUQjBKbGFVcHBibWN4RURBT0JnTlZCQWNUQjBKbAphVXBwYm1jeEREQUtCZ05WQkFvVEEyczRjekVQTUEwR0ExVUVDeE1HVTNsemRHVnRNUTB3Q3dZRFZRUURFd1JsCmRHTmtNQjRYRFRJeU1EVXlOREV6TXpnd01Gb1hEVE15TURVeU1URXpNemd3TUZvd1h6RUxNQWtHQTFVRUJoTUMKUTA0eEVEQU9CZ05WQkFnVEIwSmxhVXBwYm1jeEVEQU9CZ05WQkFjVEIwSmxhVXBwYm1jeEREQUtCZ05WQkFvVApBMnM0Y3pFUE1BMEdBMVVFQ3hNR1UzbHpkR1Z0TVEwd0N3WURWUVFERXdSbGRHTmtNSUlCSWpBTkJna3Foa2lHCjl3MEJBUUVGQUFPQ0FROEFNSUlCQ2dLQ0FRRUF1bUR4Mk80c3hEZHZ4d1VPQlZlSjlkaDA1bnhUM2RaaFpVeEEKZlNFZWVwUTVaZ3hzaUtNREpadUhCVjI5UHEwa0xRR0czQ0tTWnpzRGpMbzFPZm5XZ3NCdkVIY0dIQTV5RmdNTApiREdHalVQY2VBdEpCeTRyK1NEUWxKU1lsZWdxNzhyUG9tZHF4WXd0TkxvMGU3cXYrT2cvWi91REJSa2FURnRyCnNOSnhQTWE3TzJ6ckxmWlBtRGhCOXVINTNqc1dYOVZrckdRLzl0QkVEUnJFSnFYSGN4NFdET1NNTW1WeUE5VTkKYkJ6eDgvMWFyZFpuaFpoaGFYZWllTURUdE5pM0x1MmtaakI3RDhwZDQyREZCbG10VnpDcmNkejlvN3Z2b0FrQgpJVUNZYUNidDMyaGViRTJnUDF6MDIzbnVsekN0N2UzcEZTb0Fycy9ZQWdqYkNYNDBFUUlEQVFBQm80R1pNSUdXCk1BNEdBMVVkRHdFQi93UUVBd0lGb0RBZEJnTlZIU1VFRmpBVUJnZ3JCZ0VGQlFjREFRWUlLd1lCQlFVSEF3SXcKREFZRFZSMFRBUUgvQkFJd0FEQWRCZ05WSFE0RUZnUVVvT0IxSFh6dnVKY0xaekZ3Q2FHOG1JQkhubmt3SHdZRApWUjBqQkJnd0ZvQVVRdHJFQnE1WFB4RS9wcnJyNUlXbEtJRFJkWFF3RndZRFZSMFJCQkF3RG9JR0tpNWxkR05rCmh3Ui9BQUFCTUEwR0NTcUdTSWIzRFFFQkN3VUFBNElCQVFBem1zL3pIbFY4emROREpTV0hUc0xXQjNDaisvOTEKM2lZQ1FBRCtHZzR3bUN1N1RzSTMwaVFJUDk1VU10VlhkZUZkN1FMaWQxbm9LcytFSTNqbkJIQkNlNDNVTzR0bgpFTkFESlNRNXcrUG92Wi9OQ2VRN3MvQlZrQmVPVGU0SFR4SE5YVGlOcDVoaUdPYVRGRGQrQVJDWTFyWVUxYWFnCkpraVZtRDhJeDJFck10dlpaUnBwejEvNEVMSTFsM1dzZlFVWXdENGNjNk5NRHJJcC9VdkpiUDFOM1k4bzhmTnQKWS9HUUhGdEVLcm1DL2MwZnFLOHpWd2RISnU5ejV6Sjd6ODhsUXo5eGJlTWUzanV5MzBmQlpySVFuNGxVamppSgpqOE4rZFlaNzBVZDN2V2g4cm9YaXdxUHQvcGpkVmljbzNDYzVWSURZSkttS0ZrdVVkcGdKZzZzVQotLS0tLUVORCBDRVJUSUZJQ0FURS0tLS0tCg==
  etcd-key: LS0tLS1CRUdJTiBSU0EgUFJJVkFURSBLRVktLS0tLQpNSUlFb3dJQkFBS0NBUUVBdW1EeDJPNHN4RGR2eHdVT0JWZUo5ZGgwNW54VDNkWmhaVXhBZlNFZWVwUTVaZ3hzCmlLTURKWnVIQlYyOVBxMGtMUUdHM0NLU1p6c0RqTG8xT2ZuV2dzQnZFSGNHSEE1eUZnTUxiREdHalVQY2VBdEoKQnk0citTRFFsSlNZbGVncTc4clBvbWRxeFl3dE5MbzBlN3F2K09nL1ovdURCUmthVEZ0cnNOSnhQTWE3TzJ6cgpMZlpQbURoQjl1SDUzanNXWDlWa3JHUS85dEJFRFJyRUpxWEhjeDRXRE9TTU1tVnlBOVU5YkJ6eDgvMWFyZFpuCmhaaGhhWGVpZU1EVHROaTNMdTJrWmpCN0Q4cGQ0MkRGQmxtdFZ6Q3JjZHo5bzd2dm9Ba0JJVUNZYUNidDMyaGUKYkUyZ1AxejAyM251bHpDdDdlM3BGU29BcnMvWUFnamJDWDQwRVFJREFRQUJBb0lCQUU1UlBHOU9yaXNKcklPeAo0UkZubG9aakhjUllqQmxVNDNwZ2oxekZWUHhuV3JOQ0Z6MVhXcFFzQlpIQXNTd3NMMTVtSE5oV0FyVTBQQ3FmCmVJeFRLc3VvdDBMdzhQVkxNSytGT2dDTjB4OTdXNkpxQTVicjFQaUx6SG9TOHdkVEZ1L0tobk9WQjIzWm1JbG0Ka1hWSW5uZDJpR1pXYnRqdWtubHhsUkFwYmdIK1BKa3pZVVlFdWc3a3dlVTh1K1llL2F3NllXY2QyaE9yWVZWSgpaMzNLL2Z6YXJjYnU0c1JyODhGbERtUFBsQXdaNG9aOWRFTHNWUzhhMkYvOENGYWk1S2dUb0EyZmlnNVhJcDRhCld6a1h0cXU1M1lrTUtHamR0aVVKZlh1d2dLUW5nNjMyYW94VUdIeDRZanFJOUxwZUNUcjdNbXZ0N3FCam1hd1EKTDd6bXJzRUNnWUVBNVA3UENxYXhIWXljaEtYTFhlckxiZzdqeTBNV1dYaFQzUXlvSFcxRlVmR2tEOEcySDJVbgpVbjlvZzRZMEVwWklFQ0F1d3N3ZmJ0RXRLMCtXY1RuUjZ1Q1JTeVNBdlQwUW9hbGhsSCtyaWk5M2JqVWtGRTk5CkgwVGp4Y3FQajU3blpYUU1wcmx4S2ZrZW05N3k0VWFPYXZzdTh1OVZoaVV4WW1FL2N5b0JKb2tDZ1lFQTBGdVMKZ1BaM3l0cmhoRDFyNzErdHdZMXpSOVhMWWsrTDhmRUU0eUxyQkR5UFZaQkF5WWFLWlBhNm0vbk5ua1VRdXErMwpHdGdXY0JxcWJEUFh3QmZydFFMRjZXcExlREtxdEVSY3JPdDFMdm00OHRmRmgvSG5JQ0JtRU4rT0tZeFA0c3lPCkxpaW5KdFhwdXRFOEd5QXRMSXVBYWpjZCtlcVo0RU82Q3AyYXYwa0NnWUExakRRai9IaThQUzQ0Z29COHRMUGYKUjVJUzdOd2tEZFFtZzVnb1VXMzlUSEVkMGlGaFZBa083SVluQTFIWDZ0WnRGdWw0V2IwYjc4UU5OYTRyU2VjQgpjb1BuTzJEelgzMHRJR3VXQ3dpMDVvYVorY2szQ3FOcWxYUmh3dzB3KzJxR3VjMWZpMHVnRWdZTFV1WWVzWjRKCi9EU3RVRFFDaTEzeDhHV2k2M2FiYVFLQmdCU3Y4dVRoRTlYTlU3VFlrRjN0QndoL3JlR0ZCYk9XS0ZQMlZVRGsKRjZlTkI4SThGMktxL0JTNE5xRUQ5WGx3YkEvTklJWUd6SHVHK2tMU0J1cm90UnQ4MGYreDhScFhGWUhlZzhFYgpnOEFOUldLL0w3cW10d2NHa0h1K1pwUVRmVjhNWmxXSTdjZTZWNEdZQXJyQ3dCbDdKRjNuYVErR1RvN295cEVCClF2c1pBb0dCQU5ycTk2cU1MK3IyaXdSV00yckRyZy9JL21IOGZ4SlZHWFpqemFKMkhTSit6Umd6NmtJcHhhdkkKODd2eFNXdWxFSDNqYktsTFhvNVdQamxsL3pZVDFjWWNzbFBFc1JQN2IxUjJUL002MkFVU2J0M0ZCRUx5Tlh1WQp0M0xSREZYYUpxTDVnUGlPQmRJa0w2UEVMUzNFekY5ODB6SER4SHFib3lCdE0vVHJTRzRzCi0tLS0tRU5EIFJTQSBQUklWQVRFIEtFWS0tLS0tCg==

---

# Source: calico/templates/calico-config.yaml
# This ConfigMap is used to configure a self-hosted Calico installation.
kind: ConfigMap
apiVersion: v1
metadata:
  name: calico-config
  namespace: kube-system
data:
  # Configure this with the location of your etcd cluster.
  # If you're using TLS enabled etcd uncomment the following.
  # You must also populate the Secret below with these files.
  etcd_ca: /calico-secrets/etcd-ca
  etcd_cert: /calico-secrets/etcd-cert
  etcd_endpoints: "https://m1.etcd:2379"
  etcd_key: /calico-secrets/etcd-key
  # Typha is disabled.
  typha_service_name: "none"
  # Configure the backend to use.
  calico_backend: "bird"
  # Configure the MTU to use for workload interfaces and tunnels.
  # By default, MTU is auto-detected, and explicitly setting this field should not be required.
  # You can override auto-detection by providing a non-zero value.
  veth_mtu: "0"
  # The CNI network configuration to install on each node. The special
  # values in this config will be automatically populated.
  cni_network_config: |-
    {
      "name": "k8s-pod-network",
      "cniVersion": "0.3.1",
      "plugins": [
        {
          "type": "calico",
          "log_level": "info",
          "log_file_path": "/var/log/calico/cni/cni.log",
          "datastore_type": "etcdv3",
          "etcd_endpoints": "https://m1.etcd:2379",
          "etcd_key_file": "/root/k8s1/etcdCerts/etcd-key.pem",
          "etcd_cert_file": "/root/k8s1/etcdCerts/etcd.pem",
          "etcd_ca_cert_file": "/root/k8s1/etcdCerts/ca.pem",
          "mtu": __CNI_MTU__,
          "ipam": {
              "type": "calico-ipam"
          },
          "policy": {
              "type": "k8s"
          },
          "kubernetes": {
              "kubeconfig": "/etc/cni/net.d/calico-kubeconfig"
          }
        },
        {
          "type": "portmap",
          "snat": true,
          "capabilities": {"portMappings": true}
        },
        {
          "type": "bandwidth",
          "capabilities": {"bandwidth": true}
        }
      ]
    }

---

# Source: calico/templates/calico-kube-controllers-rbac.yaml
# Include a clusterrole for the kube-controllers component,
# and bind it to the calico-kube-controllers serviceaccount.
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: calico-kube-controllers
rules:
  # Pods are monitored for changing labels.
  # The node controller monitors Kubernetes nodes.
  # Namespace and serviceaccount labels are used for policy.
  - apiGroups: [""]
    resources:
      - pods
      - nodes
      - namespaces
      - serviceaccounts
    verbs:
      - watch
      - list
      - get
  # Watch for changes to Kubernetes NetworkPolicies.
  - apiGroups: ["networking.k8s.io"]
    resources:
      - networkpolicies
    verbs:
      - watch
      - list

---

kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: calico-kube-controllers
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: calico-kube-controllers
subjects:
- kind: ServiceAccount
  name: calico-kube-controllers
  namespace: kube-system

---

---

# Source: calico/templates/calico-node-rbac.yaml
# Include a clusterrole for the calico-node DaemonSet,
# and bind it to the calico-node serviceaccount.
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: calico-node
rules:
  # The CNI plugin needs to get pods, nodes, and namespaces.
  - apiGroups: [""]
    resources:
      - pods
      - nodes
      - namespaces
    verbs:
      - get
  # EndpointSlices are used for Service-based network policy rule
  # enforcement.
  - apiGroups: ["discovery.k8s.io"]
    resources:
      - endpointslices
    verbs:
      - watch
      - list
  - apiGroups: [""]
    resources:
      - endpoints
      - services
    verbs:
      # Used to discover service IPs for advertisement.
      - watch
      - list
  # Pod CIDR auto-detection on kubeadm needs access to config maps.
  - apiGroups: [""]
    resources:
      - configmaps
    verbs:
      - get
  - apiGroups: [""]
    resources:
      - nodes/status
    verbs:
      # Needed for clearing NodeNetworkUnavailable flag.
      - patch

---

apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: calico-node
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: calico-node
subjects:
- kind: ServiceAccount
  name: calico-node
  namespace: kube-system

---

# Source: calico/templates/calico-node.yaml
# This manifest installs the calico-node container, as well
# as the CNI plugins and network config on
# each master and worker node in a Kubernetes cluster.
kind: DaemonSet
apiVersion: apps/v1
metadata:
  name: calico-node
  namespace: kube-system
  labels:
    k8s-app: calico-node
spec:
  selector:
    matchLabels:
      k8s-app: calico-node
  updateStrategy:
    type: RollingUpdate
    rollingUpdate:
      maxUnavailable: 1
  template:
    metadata:
      labels:
        k8s-app: calico-node
    spec:
      nodeSelector:
        kubernetes.io/os: linux
      hostNetwork: true
      tolerations:
        # Make sure calico-node gets scheduled on all nodes.
        - effect: NoSchedule
          operator: Exists
        # Mark the pod as a critical add-on for rescheduling.
        - key: CriticalAddonsOnly
          operator: Exists
        - effect: NoExecute
          operator: Exists
      serviceAccountName: calico-node
      # Minimize downtime during a rolling upgrade or deletion; tell Kubernetes to do a "force
      # deletion": https://kubernetes.io/docs/concepts/workloads/pods/pod/#termination-of-pods.
      terminationGracePeriodSeconds: 0
      priorityClassName: system-node-critical
      initContainers:
        # This container installs the CNI binaries
        # and CNI network config file on each node.
        - name: install-cni
          image: docker.io/calico/cni:v3.20.1
          command: ["/opt/cni/bin/install"]
          envFrom:
            - configMapRef:
                # Allow KUBERNETES_SERVICE_HOST and KUBERNETES_SERVICE_PORT to be overridden for eBPF mode.
                name: kubernetes-services-endpoint
                optional: true
          env:
            # Name of the CNI config file to create.
            - name: CNI_CONF_NAME
              value: "10-calico.conflist"
            # The CNI network config to install on each node.
            - name: CNI_NETWORK_CONFIG
              valueFrom:
                configMapKeyRef:
                  name: calico-config
                  key: cni_network_config
            # The location of the etcd cluster.
            - name: ETCD_ENDPOINTS
              valueFrom:
                configMapKeyRef:
                  name: calico-config
                  key: etcd_endpoints
            # CNI MTU Config variable
            - name: CNI_MTU
              valueFrom:
                configMapKeyRef:
                  name: calico-config
                  key: veth_mtu
            # Prevents the container from sleeping forever.
            - name: SLEEP
              value: "false"
          volumeMounts:
            - mountPath: /host/opt/cni/bin
              name: cni-bin-dir
            - mountPath: /host/etc/cni/net.d
              name: cni-net-dir
            - mountPath: /calico-secrets
              name: etcd-certs
          securityContext:
            privileged: true
        # Adds a Flex Volume Driver that creates a per-pod Unix Domain Socket to allow Dikastes
        # to communicate with Felix over the Policy Sync API.
        - name: flexvol-driver
          image: docker.io/calico/pod2daemon-flexvol:v3.20.1
          volumeMounts:
            - name: flexvol-driver-host
              mountPath: /host/driver
          securityContext:
            privileged: true
      containers:
        # Runs calico-node container on each Kubernetes node. This
        # container programs network policy and routes on each
        # host.
        - name: calico-node
          image: docker.io/calico/node:v3.20.1
          envFrom:
            - configMapRef:
                # Allow KUBERNETES_SERVICE_HOST and KUBERNETES_SERVICE_PORT to be overridden for eBPF mode.
                name: kubernetes-services-endpoint
                optional: true
          env:
            # The location of the etcd cluster.
            - name: ETCD_ENDPOINTS
              valueFrom:
                configMapKeyRef:
                  name: calico-config
                  key: etcd_endpoints
            # Location of the CA certificate for etcd.
            - name: ETCD_CA_CERT_FILE
              valueFrom:
                configMapKeyRef:
                  name: calico-config
                  key: etcd_ca
            # Location of the client key for etcd.
            - name: ETCD_KEY_FILE
              valueFrom:
                configMapKeyRef:
                  name: calico-config
                  key: etcd_key
            # Location of the client certificate for etcd.
            - name: ETCD_CERT_FILE
              valueFrom:
                configMapKeyRef:
                  name: calico-config
                  key: etcd_cert
            # Set noderef for node controller.
            - name: CALICO_K8S_NODE_REF
              valueFrom:
                fieldRef:
                  fieldPath: spec.nodeName
            # Choose the backend to use.
            - name: CALICO_NETWORKING_BACKEND
              valueFrom:
                configMapKeyRef:
                  name: calico-config
                  key: calico_backend
            # Cluster type to identify the deployment type
            - name: CLUSTER_TYPE
              value: "k8s,bgp"
            # Auto-detect the BGP IP address.
            - name: IP
              value: "autodetect"
            - name: IP_AUTODETECTION_METHOD
              value: "interface=ens33"
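            # Sketch, not part of the original setup: if the NIC is not named ens33 on
            # every host (m1, n1, n2), IP_AUTODETECTION_METHOD also accepts an interface
            # regex or a reachability check instead of a fixed name, for example:
            #   value: "interface=ens.*"
            #   value: "can-reach=192.168.11.187"
            # The regex and the target IP (m1's address from the host table) are
            # assumptions; use one form only and adjust it to your hosts.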

            # Enable IPIP
            - name: CALICO_IPV4POOL_IPIP
              value: "Always"
            # Enable or Disable VXLAN on the default IP pool.
            - name: CALICO_IPV4POOL_VXLAN
              value: "Never"
            # Set MTU for tunnel device used if ipip is enabled
            - name: FELIX_IPINIPMTU
              valueFrom:
                configMapKeyRef:
                  name: calico-config
                  key: veth_mtu
            # Set MTU for the VXLAN tunnel device.
            - name: FELIX_VXLANMTU
              valueFrom:
                configMapKeyRef:
                  name: calico-config
                  key: veth_mtu
            # Set MTU for the Wireguard tunnel device.
            - name: FELIX_WIREGUARDMTU
              valueFrom:
                configMapKeyRef:
                  name: calico-config
                  key: veth_mtu
            # The default IPv4 pool to create on startup if none exists. Pod IPs will be
            # chosen from this range. Changing this value after installation will have
            # no effect. This should fall within `--cluster-cidr`.
            - name: CALICO_IPV4POOL_CIDR
              value: "172.248.0.0/16"
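            # Sketch, assuming the binary-started components of this guide take the pod
            # range from command-line flags (check the actual systemd unit files): the
            # same CIDR is normally passed to
            #   kube-controller-manager --cluster-cidr=172.248.0.0/16
            #   kube-proxy --cluster-cidr=172.248.0.0/16
            # Note that 172.248.0.0/16 lies outside the RFC 1918 block 172.16.0.0/12,
            # so it overlaps public address space.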

            # Disable file logging so `kubectl logs` works.
            - name: CALICO_DISABLE_FILE_LOGGING
              value: "true"
            # Set Felix endpoint to host default action to ACCEPT.
            - name: FELIX_DEFAULTENDPOINTTOHOSTACTION
              value: "ACCEPT"
            # Disable IPv6 on Kubernetes.
            - name: FELIX_IPV6SUPPORT
              value: "false"
            - name: FELIX_HEALTHENABLED
              value: "true"
          securityContext:
            privileged: true
          resources:
            requests:
              cpu: 250m
          livenessProbe:
            exec:
              command:
                - /bin/calico-node
                - -felix-live
                - -bird-live
            periodSeconds: 10
            initialDelaySeconds: 10
            failureThreshold: 6
            timeoutSeconds: 10
          readinessProbe:
            exec:
              command:
                - /bin/calico-node
                - -felix-ready
                - -bird-ready
            periodSeconds: 10
            timeoutSeconds: 10
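          # Troubleshooting sketch, not part of the original manifest: the readiness
          # check above can be run by hand inside a running pod. <calico-node-pod> is a
          # placeholder for a pod name listed by
          #   kubectl -n kube-system get pods -l k8s-app=calico-node -o wide
          # and the check itself is
          #   kubectl -n kube-system exec <calico-node-pod> -c calico-node -- /bin/calico-node -felix-ready -bird-ready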

          volumeMounts:
            # For maintaining CNI plugin API credentials.
            - mountPath: /host/etc/cni/net.d
              name: cni-net-dir
              readOnly: false
            - mountPath: /lib/modules
              name: lib-modules
              readOnly: true
            - mountPath: /run/xtables.lock
              name: xtables-lock
              readOnly: false
            - mountPath: /var/run/calico
              name: var-run-calico
              readOnly: false
            - mountPath: /var/lib/calico
              name: var-lib-calico
              readOnly: false
            - mountPath: /calico-secrets
              name: etcd-certs
            - name: policysync
              mountPath: /var/run/nodeagent
            # For eBPF mode, we need to be able to mount the BPF filesystem at /sys/fs/bpf so we mount in the
            # parent directory.
            - name: sysfs
              mountPath: /sys/fs/
              # Bidirectional means that, if we mount the BPF filesystem at /sys/fs/bpf it will propagate to the host.
              # If the host is known to mount that filesystem already then Bidirectional can be omitted.
              mountPropagation: Bidirectional
            - name: cni-log-dir
              mountPath: /var/log/calico/cni
              readOnly: true
      volumes:
        # Used by calico-node.
        - name: lib-modules
          hostPath:
            path: /lib/modules
        - name: var-run-calico
          hostPath:
            path: /var/run/calico
        - name: var-lib-calico
          hostPath:
            path: /var/lib/calico
        - name: xtables-lock
          hostPath:
            path: /run/xtables.lock
            type: FileOrCreate
        - name: sysfs
          hostPath:
            path: /sys/fs/
            type: DirectoryOrCreate
        # Used to install CNI.
        - name: cni-bin-dir
          hostPath:
            path: /opt/cni/bin
        - name: cni-net-dir
          hostPath:
            path: /etc/cni/net.d