Note 1: Download the Octavia source code:

cd /home

git clone https://github.com/openstack/octavia.git -b stable/ussuri # provides bin/openssl.cnf for the certificate step

git clone https://github.com/openstack/octavia-dashboard.git -b stable/ussuri # adds the Load Balancers panel to the dashboard

Note: after a node reboots, the IP and MAC address of its o-hm0 interface must be configured again.

Basic configuration of the primary node (controller1)

1. Create the database

mysql -uroot -p123456

CREATE DATABASE octavia;

GRANT ALL PRIVILEGES ON octavia.* TO 'octavia'@'localhost' IDENTIFIED BY '123456';

GRANT ALL PRIVILEGES ON octavia.* TO 'octavia'@'%' IDENTIFIED BY '123456';

flush privileges;

exit;


2. Install the packages

yum -y install \
  openstack-octavia-api.noarch \
  openstack-octavia-common.noarch \
  openstack-octavia-health-manager.noarch \
  openstack-octavia-housekeeping.noarch \
  openstack-octavia-worker.noarch \
  python3-octaviaclient.noarch

3. Create the Keystone identities (user, role, endpoints)

openstack user create --domain default --password 123456 octavia

openstack role add --project service --user octavia admin

openstack service create load-balancer --name octavia

openstack endpoint create octavia public http://controller1:9876 --region RegionOne

openstack endpoint create octavia admin http://controller1:9876 --region RegionOne

openstack endpoint create octavia internal http://controller1:9876 --region RegionOne


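4. Import the Amphora image and create a flavor as needed

# Download the Ussuri amphora image first

wget https://tarballs.opendev.org/openstack/octavia/test-images/test-only-amphora-x64-haproxy-centos-8.qcow2

# Set the environment variables for the octavia user in the service project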

export OS_USERNAME=octavia

export OS_PASSWORD=123456

export OS_PROJECT_NAME=service

export OS_USER_DOMAIN_NAME=Default

export OS_PROJECT_DOMAIN_NAME=Default

export OS_AUTH_URL=http://controller1:5000/v3

export OS_IDENTITY_API_VERSION=3

# Upload the image

openstack image create amphora-x64-haproxy --public --container-format=bare \
  --disk-format qcow2 --file test-only-amphora-x64-haproxy-centos-8.qcow2 --tag amphora

# Create the flavor

openstack flavor create --ram 4096 --disk 50 --vcpus 2 flavor


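5. Create the security groups

# Used by the Amphora VMs for traffic between the LB network and the Amphorae; add the rules to the security group in the service project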

openstack security group create lb-mgmt-sec-grp --project service

openstack security group rule create --protocol udp --dst-port 5555 lb-mgmt-sec-grp

openstack security group rule create --protocol tcp --dst-port 22 lb-mgmt-sec-grp

openstack security group rule create --protocol tcp --dst-port 9443 lb-mgmt-sec-grp

openstack security group rule create --protocol icmp lb-mgmt-sec-grp

# Used by the Health Manager listen port for traffic between the Health Manager and the Amphorae; add the rules to the security group in the service project

openstack security group create lb-health-mgr-sec-grp --project service

openstack security group rule create --protocol udp --dst-port 5555 lb-health-mgr-sec-grp

openstack security group rule create --protocol tcp --dst-port 22 lb-health-mgr-sec-grp

openstack security group rule create --protocol tcp --dst-port 9443 lb-health-mgr-sec-grp

6. Create the management network (be sure to specify the project)

openstack network create lb-mgmt-net --project service

openstack subnet create --subnet-range 192.168.0.0/24 \
  --allocation-pool start=192.168.0.2,end=192.168.0.100 \
  --network lb-mgmt-net lb-mgmt-subnet

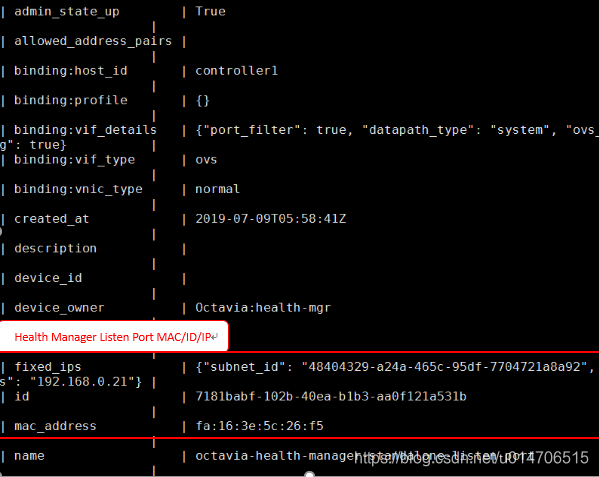

7. Create the management port

openstack port create octavia-health-manager-standalone-listen-port \
  --security-group lb-health-mgr-sec-grp \
  --device-owner Octavia:health-mgr \
  --host <hostname> --network lb-mgmt-net \
  --project service

ovs-vsctl --may-exist add-port br-int o-hm0 \
  -- set Interface o-hm0 type=internal \
  -- set Interface o-hm0 external-ids:iface-status=active \
  -- set Interface o-hm0 external-ids:attached-mac=<Health Manager Listen Port MAC> \
  -- set Interface o-hm0 external-ids:iface-id=<Health Manager Listen Port ID>

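The MAC and ID placeholders in the ovs-vsctl command come from the port created just before it. A minimal sketch of looking them up, assuming the port name used above and that the openstack CLI is on the PATH. The helper name port_field and the OPENSTACK override variable are illustrative and not part of Octavia:

```shell
#!/bin/sh
# Hypothetical helper: print one field of a Neutron port.
# OPENSTACK may point at a different CLI binary or a stub; it defaults to "openstack".
port_field() {
    # $1 = port name or ID, $2 = column name (for example mac_address or id)
    "${OPENSTACK:-openstack}" port show "$1" -f value -c "$2"
}

# Example usage (not executed here):
#   PORT=octavia-health-manager-standalone-listen-port
#   HM_MAC=$(port_field "$PORT" mac_address)
#   HM_ID=$(port_field "$PORT" id)
```

The two variables can then be substituted for the MAC and ID placeholders in the ovs-vsctl command and in the ip link command of the next step.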

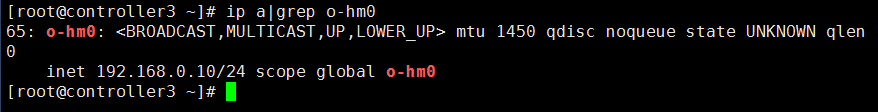

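8. Assign an IP address to the management port (the Health Manager listen port)

ip link set dev o-hm0 address <Health Manager Listen Port MAC>

ip addr add <Health Manager Listen Port IP/24> dev o-hm0 # replace <> with the port's IP address and prefix length

ip link set dev o-hm0 up # bring the interface up so the settings take effect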

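Because these three commands have to be repeated after every reboot, it can help to wrap them in a function that a boot-time script calls. The following is only a sketch: the function name configure_o_hm0 and the DRY_RUN switch are my own, and how the script is hooked into the boot sequence is left open.

```shell
#!/bin/sh
# Hypothetical helper: re-apply the o-hm0 settings from this step.
# With DRY_RUN=1 the commands are printed instead of executed.
configure_o_hm0() {
    mac="$1"    # MAC address of the Health Manager listen port
    cidr="$2"   # IP address with prefix length, for example 192.168.0.12/24
    if [ -z "$mac" ] || [ -z "$cidr" ]; then
        echo "usage: configure_o_hm0 <mac> <ip/prefix>" >&2
        return 1
    fi
    run=""
    if [ "${DRY_RUN:-0}" = "1" ]; then
        run="echo"
    fi
    # Stop at the first command that fails.
    $run ip link set dev o-hm0 address "$mac" &&
    $run ip addr add "$cidr" dev o-hm0 &&
    $run ip link set dev o-hm0 up
}

# Example (dry run with made-up values):
#   DRY_RUN=1 configure_o_hm0 fa:16:3e:00:00:01 192.168.0.12/24
```

Running it once with DRY_RUN=1 shows exactly which commands would be issued before anything on the host is changed.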

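9. Generate the certificates used between the Octavia controller and the Amphorae

I could not get the Ussuri certificate-generation script to work, so the certificates are generated manually here.

# Note: whenever a command below prompts for a pass phrase, enter 123456 and press Enter

# For any other prompt, just press Enter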

cd /home

mkdir certs

chmod 700 certs

cd certs

cp /home/octavia/bin/openssl.cnf ./

mkdir client_ca

mkdir server_ca

cd server_ca

mkdir certs crl newcerts private

chmod 700 private

touch index.txt

echo 1000 > serial

openssl genrsa -aes256 -out private/ca.key.pem 4096

chmod 400 private/ca.key.pem

openssl req -config ../openssl.cnf -key private/ca.key.pem -new -x509 -days 7300 \
  -sha256 -extensions v3_ca -out certs/ca.cert.pem

cd ../client_ca

mkdir certs crl csr newcerts private

chmod 700 private

touch index.txt

echo 1000 > serial

openssl genrsa -aes256 -out private/ca.key.pem 4096

chmod 400 private/ca.key.pem

openssl req -config ../openssl.cnf -key private/ca.key.pem -new -x509 -days 7300 \
  -sha256 -extensions v3_ca -out certs/ca.cert.pem

openssl genrsa -aes256 -out private/client.key.pem 2048

openssl req -config ../openssl.cnf -new -sha256 -key private/client.key.pem \
  -out csr/client.csr.pem

openssl ca -config ../openssl.cnf -extensions usr_cert -days 7300 -notext \
  -md sha256 -in csr/client.csr.pem -out certs/client.cert.pem

openssl rsa -in private/client.key.pem -out private/client.cert-and-key.pem

cat certs/client.cert.pem >> private/client.cert-and-key.pem

cd ..

mkdir /etc/octavia/certs

chmod 700 /etc/octavia/certs

cp server_ca/private/ca.key.pem /etc/octavia/certs/server_ca.key.pem

chmod 700 /etc/octavia/certs/server_ca.key.pem

cp server_ca/certs/ca.cert.pem /etc/octavia/certs/server_ca.cert.pem

cp client_ca/certs/ca.cert.pem /etc/octavia/certs/client_ca.cert.pem

cp client_ca/private/client.cert-and-key.pem /etc/octavia/certs/client.cert-and-key.pem

chmod 700 /etc/octavia/certs/client.cert-and-key.pem

chown -R octavia:octavia /etc/octavia/certs

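Before moving on, it may be worth confirming that the client certificate really chains to the client CA, since a mismatch only shows up later as a failed TLS connection. A minimal sketch using openssl verify; the wrapper name verify_cert is illustrative, and the paths in the example assume the /home/certs layout created above:

```shell
#!/bin/sh
# Hypothetical helper: check that a certificate was issued by the given CA.
# Returns the exit status of "openssl verify" (0 when the chain is valid).
verify_cert() {
    # $1 = CA certificate (PEM), $2 = certificate to check (PEM)
    openssl verify -CAfile "$1" "$2"
}

# Example usage (not executed here):
#   cd /home/certs
#   verify_cert client_ca/certs/ca.cert.pem client_ca/certs/client.cert.pem
```

On success openssl prints the certificate path followed by OK; any other output points at a problem in the signing steps.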

10. Create the key pair

mkdir -p /etc/octavia/.ssh

ssh-keygen -b 2048 -t rsa -N "" -f /etc/octavia/.ssh/octavia_ssh_key

nova keypair-add --pub-key=/etc/octavia/.ssh/octavia_ssh_key.pub octavia_ssh_key --user {octavia_user_id}

11. Edit the configuration file

#/etc/octavia/octavia.conf

[DEFAULT]

transport_url = rabbit://openstack:openstack@controller1:5672,openstack:openstack@controller2:5672,openstack:openstack@controller3:5672

[api_settings]

# IP address of this host

bind_host = 172.27.125.201

bind_port = 9876

api_handler = queue_producer

auth_strategy = keystone

[database]

connection = mysql+pymysql://octavia:123456@controller1:3306/octavia

[health_manager]

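# IP address of the Health Manager listen port (o-hm0) on this host

bind_ip = 192.168.0.12

bind_port = 5555

# In an HA deployment, also list the o-hm0 ip:port of every other controller node; 192.168.0.* stands for those addresses

controller_ip_port_list = 192.168.0.12:5555, 192.168.0.*:5555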

heartbeat_key = insecure

[keystone_authtoken]

auth_uri = http://172.27.125.106:5000

auth_url = http://172.27.125.106:5000

memcached_servers = controller1:11211,controller2:11211,controller3:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = octavia

password = 123456

[certificates]

cert_generator = local_cert_generator

ca_private_key_passphrase = 123456

ca_private_key = /etc/octavia/certs/server_ca.key.pem

ca_certificate = /etc/octavia/certs/server_ca.cert.pem

[haproxy_amphora]

client_cert = /etc/octavia/certs/client.cert-and-key.pem

server_ca = /etc/octavia/certs/server_ca.cert.pem

key_path = /etc/octavia/.ssh/octavia_ssh_key

base_path = /var/lib/octavia

base_cert_dir = /var/lib/octavia/certs

connection_max_retries = 5500

connection_retry_interval = 5

rest_request_conn_timeout = 10

rest_request_read_timeout = 120

[controller_worker]

client_ca = /etc/octavia/certs/client_ca.cert.pem

# Tag set when the image was uploaded in step 4 (check with openstack image list)

amp_image_tag = amphora

# Owner ID of the image created in step 4 (check with openstack image show; note that this is the owner's ID, not the image ID)

amp_image_owner_id = 22d71ab1b5b548f7b076b61e7c3ed7dc

# ID of the flavor created in step 4 (check with openstack flavor list)

amp_flavor_id = c6cc5162-26cb-4e98-aa49-efb3eb369eb2

# ID of lb-mgmt-sec-grp created in step 5 (check with openstack security group list)

amp_secgroup_list = 0428056a-f1fb-457e-bd33-c2d23eb6d2cd

# ID of lb-mgmt-net created in step 6 (check with openstack network list)

amp_boot_network_list = 450227cc-11e8-4422-8bf5-540ef5cb2dfe

amp_ssh_key_name = octavia_ssh_key

network_driver = allowed_address_pairs_driver

compute_driver = compute_nova_driver

amphora_driver = amphora_haproxy_rest_driver

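# Not required on bare-metal hosts; when deploying inside virtual machines, the following parameters can be tuned as needed

workers = 2

# Not required on bare-metal hosts

amp_active_retries = 100

# Not required on bare-metal hosts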

amp_active_wait_sec = 2

loadbalancer_topology = ACTIVE_STANDBY

[oslo_messaging]

topic = octavia_prov

rpc_thread_pool_size = 2

[house_keeping]

load_balancer_expiry_age = 3600

amphora_expiry_age = 3600

[service_auth]

memcached_servers = controller1:11211,controller2:11211,controller3:11211

project_domain_name = default

project_name = service

user_domain_name = default

password = 123456

username = octavia

auth_type = password

auth_url = http://172.27.125.106:5000

auth_uri = http://172.27.125.106:5000

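Several values in the file above are placeholders, such as text in angle brackets or 192.168.0.* standing in for another node's address. A small check like the following can flag any that are still present before the services are started. It is only a sketch: the function name find_placeholders and the two patterns it searches for are my own choices, not something Octavia provides.

```shell
#!/bin/sh
# Hypothetical helper: list non-comment lines that still contain a <...>
# placeholder or a wildcard address such as 192.168.0.*.
# Returns 0 when nothing is left to fill in, 1 otherwise.
find_placeholders() {
    conf="${1:-/etc/octavia/octavia.conf}"
    # First grep drops comment lines (keeping line numbers), second finds placeholders.
    if grep -n -v '^[[:space:]]*#' "$conf" | grep -E '<[^>]+>|[0-9]+\.\*'; then
        return 1
    fi
    return 0
}

# Example usage (not executed here):
#   find_placeholders /etc/octavia/octavia.conf && echo "no placeholders left"
```

Each reported line is prefixed with its line number in the file, which makes the remaining values easy to locate.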

12. Initialize the Octavia database

octavia-db-manage upgrade head

13. Start the services

# Restart the Octavia components

(If octavia-api is served through httpd, stop and disable octavia-api.service to avoid a port conflict.)

systemctl restart octavia-api.service

systemctl restart octavia-worker.service

systemctl restart octavia-health-manager.service

systemctl restart octavia-housekeeping.service

# Enable the services at boot

systemctl enable octavia-api.service

systemctl enable octavia-worker.service

systemctl enable octavia-health-manager.service

systemctl enable octavia-housekeeping.service

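The eight systemctl calls above follow one pattern, so they can be collapsed into a loop. This is a sketch rather than a required step: the function name start_octavia_services, the OCTAVIA_SERVICES variable, and the DRY_RUN switch are illustrative.

```shell
#!/bin/sh
# The four Octavia services handled in this step.
# Remove octavia-api from the list if the API is served through httpd.
OCTAVIA_SERVICES="octavia-api octavia-worker octavia-health-manager octavia-housekeeping"

# Hypothetical helper: restart and enable every service in OCTAVIA_SERVICES.
# With DRY_RUN=1 the systemctl commands are printed instead of executed.
start_octavia_services() {
    run=""
    if [ "${DRY_RUN:-0}" = "1" ]; then
        run="echo"
    fi
    for svc in $OCTAVIA_SERVICES; do
        $run systemctl restart "${svc}.service" || return 1
        $run systemctl enable "${svc}.service" || return 1
    done
}

# Example (dry run):
#   DRY_RUN=1 start_octavia_services
```

The function returns at the first failing systemctl call, so a service that does not start is noticed immediately instead of being hidden by later output.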

14. Add the Load Balancers panel to the dashboard

cd /home/octavia-dashboard

python3 setup.py install

cd octavia_dashboard/enabled/

cp _1482_project_load_balancer_panel.py /usr/share/openstack-dashboard/openstack_dashboard/enabled/

cd /usr/share/openstack-dashboard

./manage.py collectstatic

./manage.py compress

systemctl restart httpd

Basic configuration of the other nodes (controller2/3)

1. Install the packages

yum -y install \
  openstack-octavia-api.noarch \
  openstack-octavia-common.noarch \
  openstack-octavia-health-manager.noarch \
  openstack-octavia-housekeeping.noarch \
  openstack-octavia-worker.noarch \
  python3-octaviaclient.noarch

2. Create the management port

openstack port create octavia-health-manager-standalone-listen-port \
  --security-group lb-health-mgr-sec-grp \
  --device-owner Octavia:health-mgr \
  --host <hostname> --network lb-mgmt-net \
  --project service

ovs-vsctl --may-exist add-port br-int o-hm0 \
  -- set Interface o-hm0 type=internal \
  -- set Interface o-hm0 external-ids:iface-status=active \
  -- set Interface o-hm0 external-ids:attached-mac=<Health Manager Listen Port MAC> \
  -- set Interface o-hm0 external-ids:iface-id=<Health Manager Listen Port ID>

3. Assign an IP address to the management port

ip link set dev o-hm0 address <Health Manager Listen Port MAC>

ip addr add <ip-addr/24> dev o-hm0

ip link set dev o-hm0 up

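4. Copy the certificates and keys already generated on the primary node controller1

mkdir -p /etc/octavia/.ssh/

mkdir -p /etc/octavia/certs/

On controller1, archive the files under /etc/octavia/certs and /etc/octavia/.ssh, then transfer them into the matching directories on each of the other nodes.

chown octavia:octavia /etc/octavia/certs -R

5. Edit the configuration file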

#/etc/octavia/octavia.conf

[DEFAULT]

transport_url = rabbit://openstack:openstack@controller1:5672,openstack:openstack@controller2:5672,openstack:openstack@controller3:5672

[api_settings]

# IP address of this host

bind_host = 172.27.125.202

bind_port = 9876

api_handler = queue_producer

auth_strategy = keystone

[database]

connection = mysql+pymysql://octavia:123456@controller2:3306/octavia

[health_manager]

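# IP address of the Health Manager listen port (o-hm0) on this host; replace 192.168.0.* with the real address

bind_ip = 192.168.0.*

bind_port = 5555

# In an HA deployment, list the o-hm0 ip:port of every controller node

controller_ip_port_list = 192.168.0.11:5555, 192.168.0.*:5555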

heartbeat_key = insecure

[keystone_authtoken]

auth_uri = http://172.27.125.106:5000

auth_url = http://172.27.125.106:5000

memcached_servers = controller1:11211,controller2:11211,controller3:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = octavia

password = 123456

[certificates]

cert_generator = local_cert_generator

ca_private_key_passphrase = 123456

ca_private_key = /etc/octavia/certs/server_ca.key.pem

ca_certificate = /etc/octavia/certs/server_ca.cert.pem

[haproxy_amphora]

client_cert = /etc/octavia/certs/client.cert-and-key.pem

server_ca = /etc/octavia/certs/server_ca.cert.pem

key_path = /etc/octavia/.ssh/octavia_ssh_key

base_path = /var/lib/octavia

base_cert_dir = /var/lib/octavia/certs

connection_max_retries = 5500

connection_retry_interval = 5

rest_request_conn_timeout = 10

rest_request_read_timeout = 120

[controller_worker]

client_ca = /etc/octavia/certs/client_ca.cert.pem

amp_image_tag = amphora

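# Owner ID of the image created in step 4 on controller1 (check with openstack image show IMAGE_ID; note that this is the owner's ID, not the image ID)

amp_image_owner_id = 22d71ab1b5b548f7b076b61e7c3ed7dc

# ID of the flavor created in step 4 on controller1 (check with openstack flavor list)

amp_flavor_id = c6cc5162-26cb-4e98-aa49-efb3eb369eb2

# ID of lb-mgmt-sec-grp created in step 5 on controller1 (check with openstack security group list)

amp_secgroup_list = 0428056a-f1fb-457e-bd33-c2d23eb6d2cd

# ID of lb-mgmt-net created in step 6 on controller1 (check with openstack network list)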

amp_boot_network_list = 450227cc-11e8-4422-8bf5-540ef5cb2dfe

amp_ssh_key_name = octavia_ssh_key

network_driver = allowed_address_pairs_driver

compute_driver = compute_nova_driver

amphora_driver = amphora_haproxy_rest_driver

workers = 2

amp_active_retries = 100

amp_active_wait_sec = 2

loadbalancer_topology = ACTIVE_STANDBY

[oslo_messaging]

topic = octavia_prov

rpc_thread_pool_size = 2

[house_keeping]

load_balancer_expiry_age = 3600

amphora_expiry_age = 3600

[service_auth]

memcached_servers = controller1:11211,controller2:11211,controller3:11211

project_domain_name = default

project_name = service

user_domain_name = default

password = 123456

username = octavia

auth_type = password

auth_url = http://172.27.125.106:5000

auth_uri = http://172.27.125.106:5000

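The controller_ip_port_list value has to name the o-hm0 address of every controller, and it is easy to mistype when edited by hand on three nodes. A minimal sketch of generating the value from a list of addresses; the function name hm_endpoint_list and the HM_PORT variable are illustrative, and the addresses in the example are made up:

```shell
#!/bin/sh
# Hypothetical helper: join o-hm0 addresses into a controller_ip_port_list value.
# HM_PORT defaults to 5555, the bind_port used in this guide.
hm_endpoint_list() {
    port="${HM_PORT:-5555}"
    list=""
    for ip in "$@"; do
        # Append "ip:port", adding a ", " separator after the first entry.
        list="${list:+$list, }${ip}:${port}"
    done
    printf '%s\n' "$list"
}

# Example with made-up addresses:
#   hm_endpoint_list 192.168.0.11 192.168.0.12 192.168.0.13
```

The printed string can be pasted after "controller_ip_port_list =" in octavia.conf on each node, so all controllers end up with the same list.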

6. Initialize the Octavia database

octavia-db-manage upgrade head

7. Start the services

(If octavia-api is served through httpd, stop and disable octavia-api.service to avoid a port conflict.)

# Restart the Octavia components

systemctl restart octavia-api.service

systemctl restart octavia-worker.service

systemctl restart octavia-health-manager.service

systemctl restart octavia-housekeeping.service

# Enable the services at boot

systemctl enable octavia-api.service

systemctl enable octavia-worker.service

systemctl enable octavia-health-manager.service

systemctl enable octavia-housekeeping.service
