Author | yanwei

Source | 墨天轮 https://www.modb.pro/db/95684

Hello everyone, this is JiekeXu. Today we walk through a detailed configuration plan for installing Oracle 19c RAC on Linux 7.9.

一、安装规划

1.1 软件规划

1.1.1 软件下载

1.1.1.1 OS下载

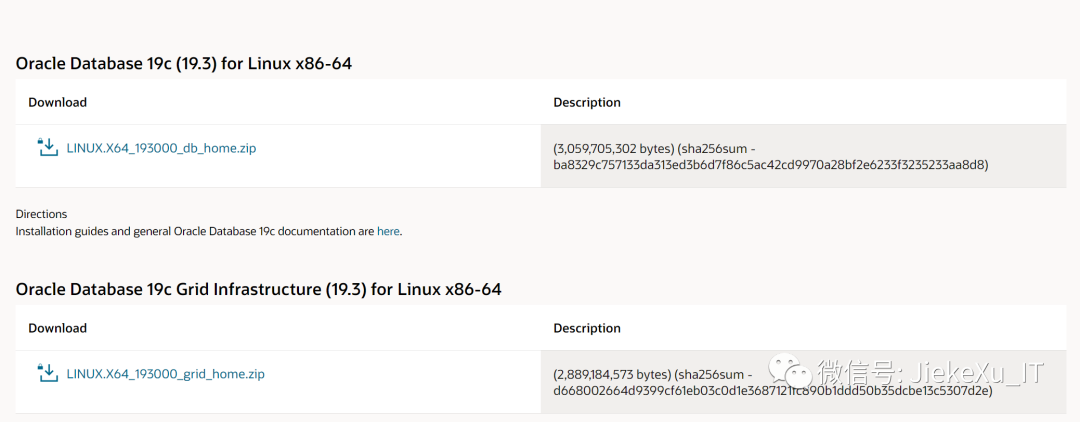

1.1.1.2 RAC软件下载

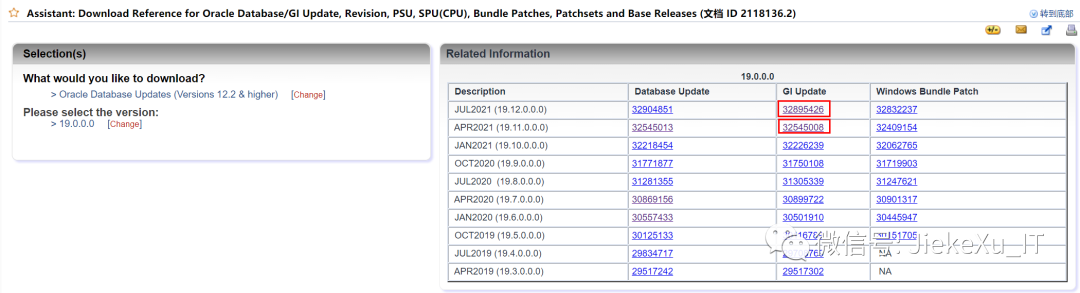

1.1.1.3 RU下载

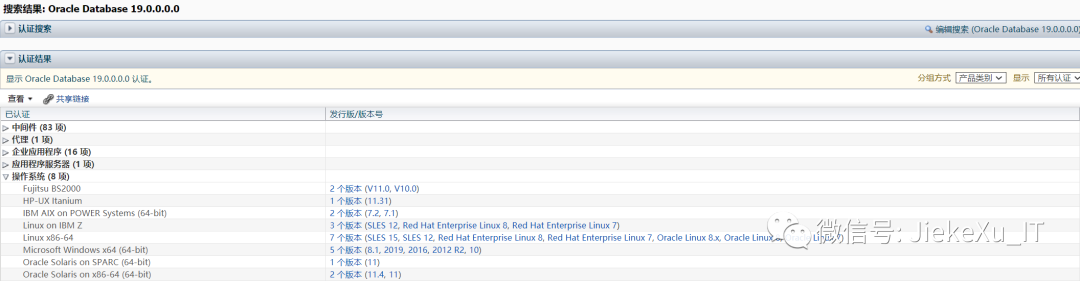

1.1.2 操作系统认证

1.2 虚拟机规划

1.3 网络规划

1.4 操作系统规划

1.4.1 操作系统目录

1.4.2 软件包

1.5 共享存储规划

1.6 Oracle规划

1.6.1 软件规划

1.6.2 用户组和用户

1.6.3 软件目录规划

1.6.4 整体数据库安装规划

1.6.5 RU升级规划

二、虚拟机安装

2.1 选择硬件兼容性

2.2 选择操作系统ISO

2.3 命名虚拟机

2.4 CPU

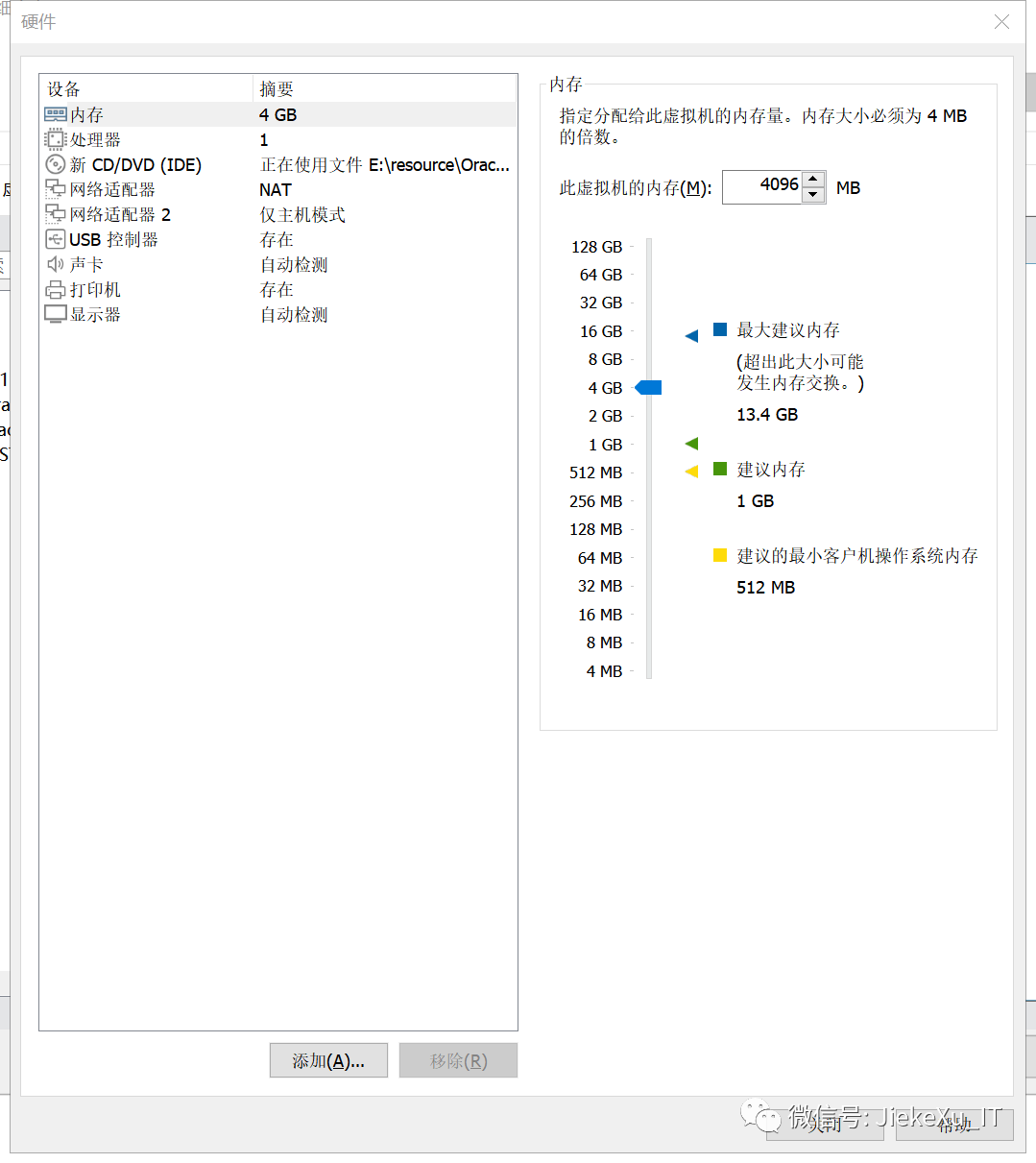

2.5 内存

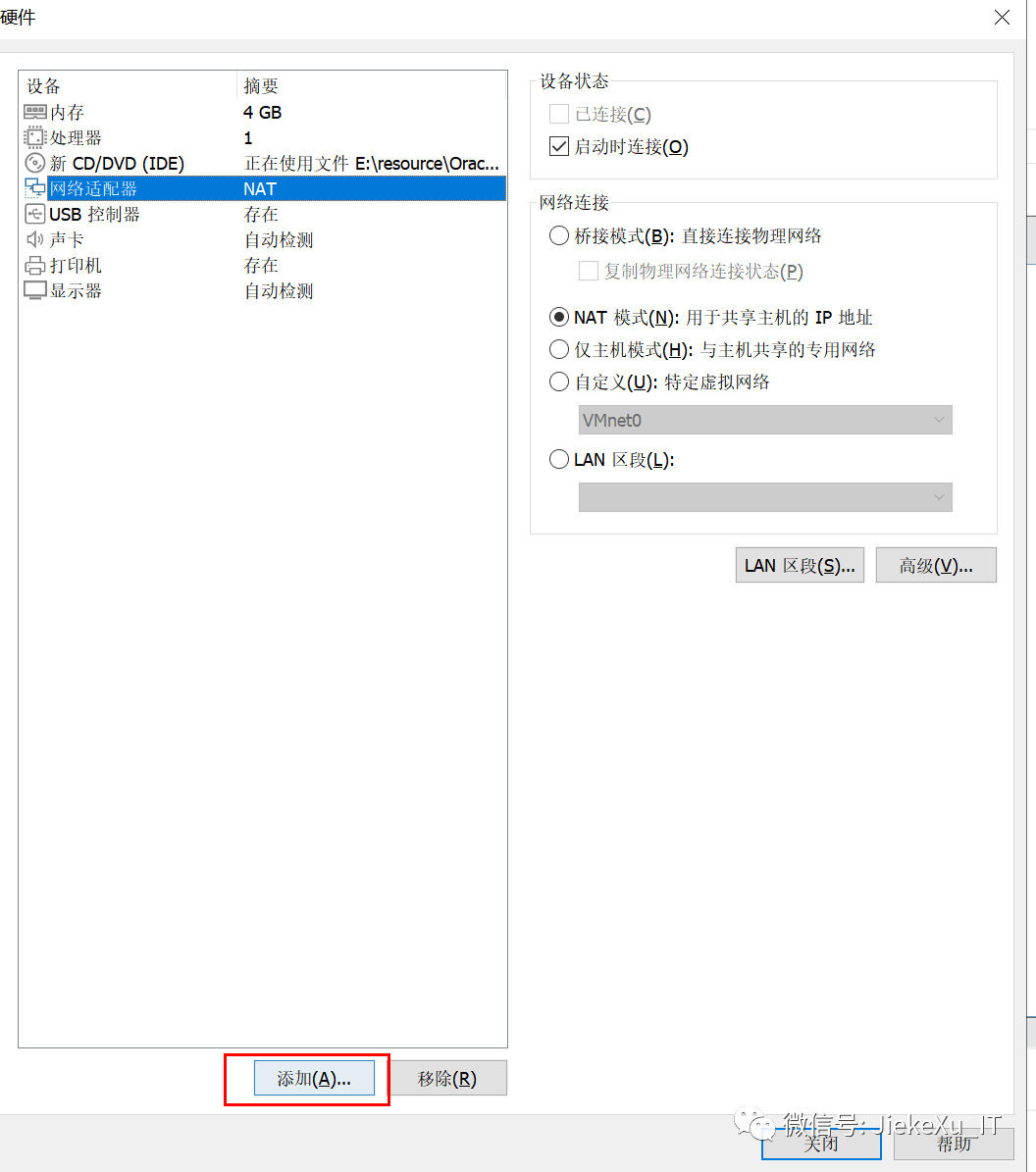

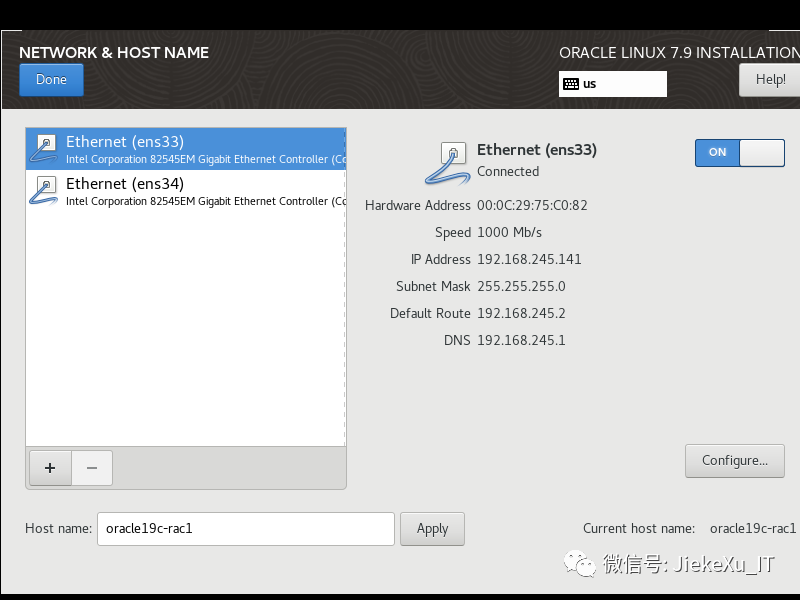

2.6 网卡

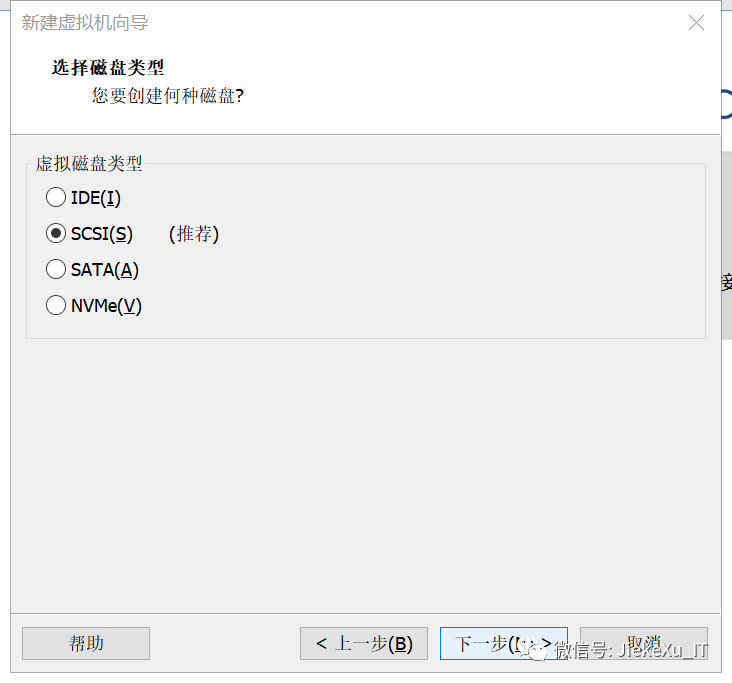

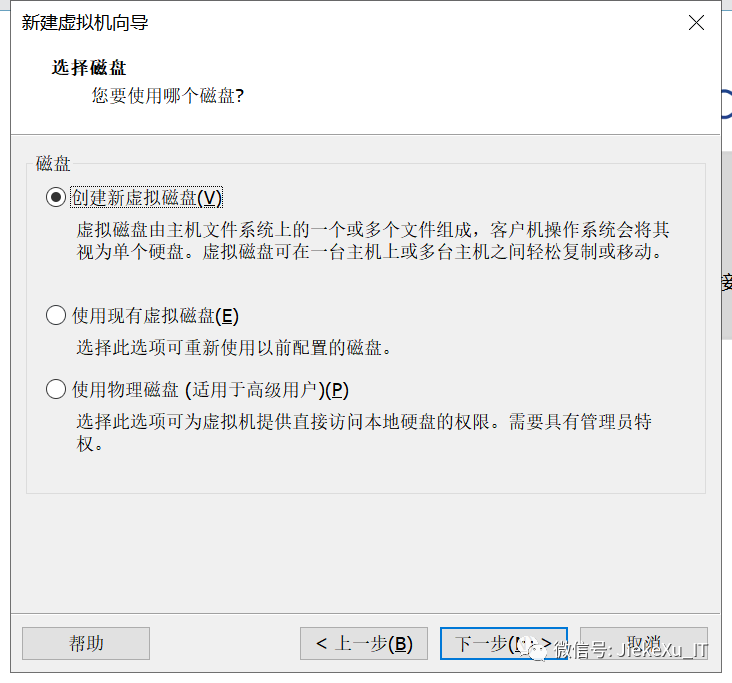

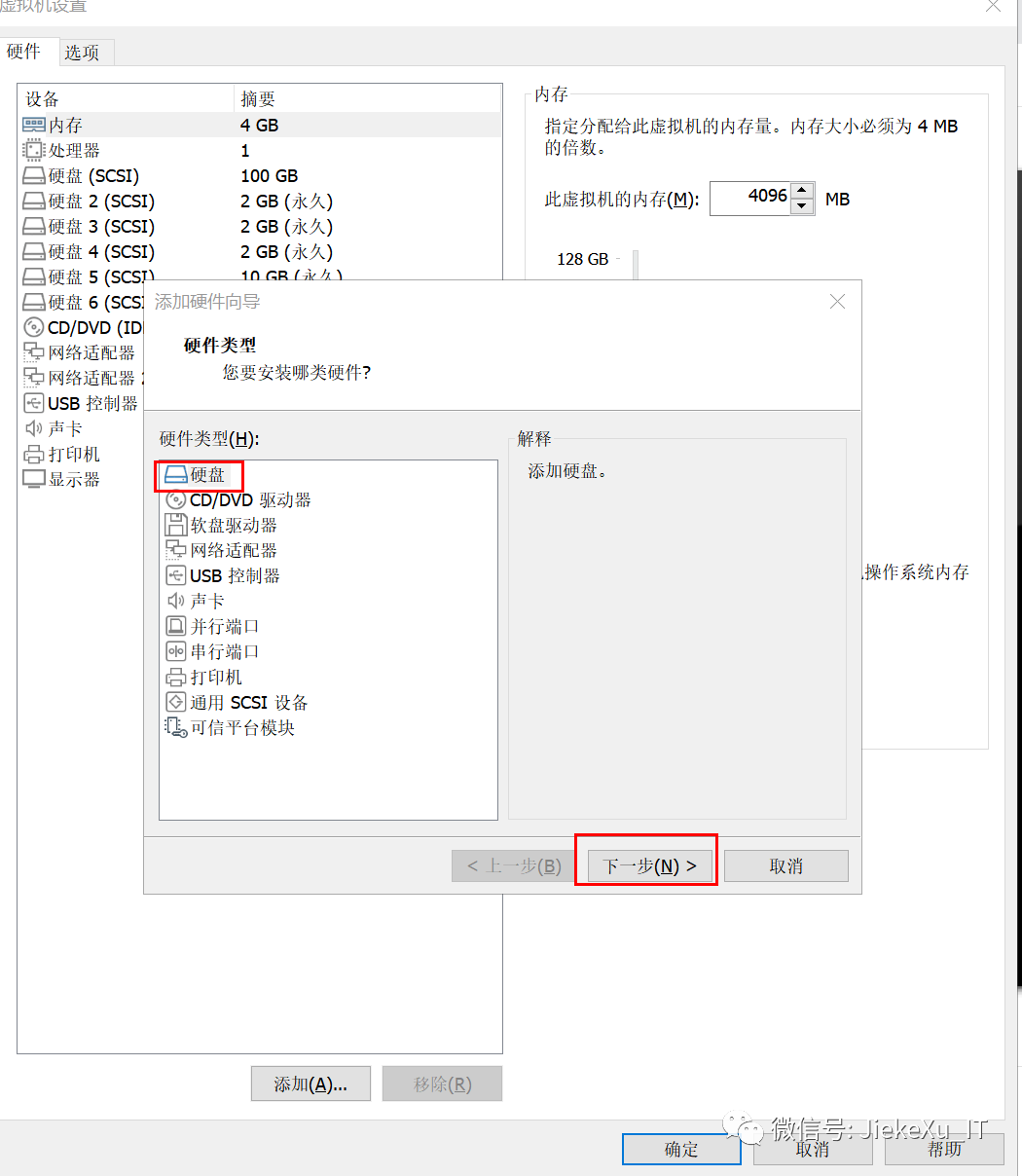

2.7 硬盘

2.8 添加网卡

2.9 同样方式创建RAC2节点

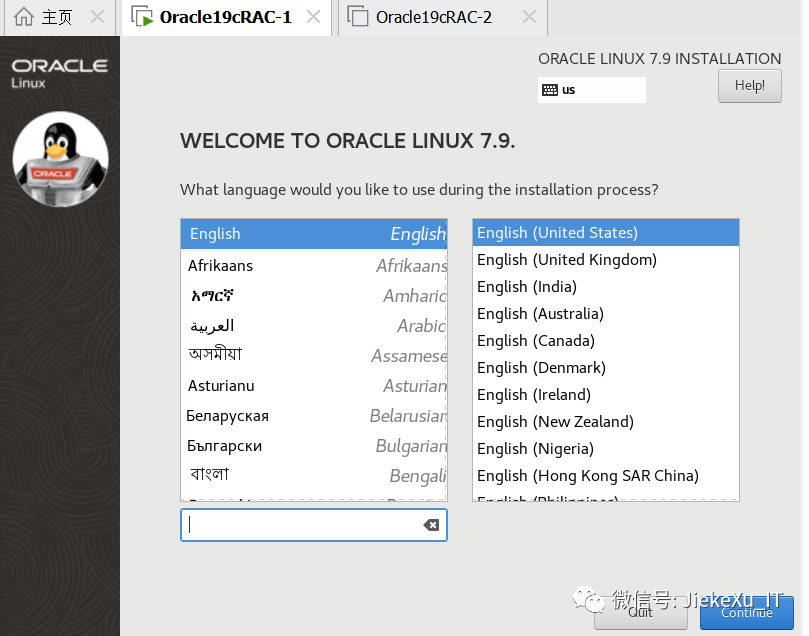

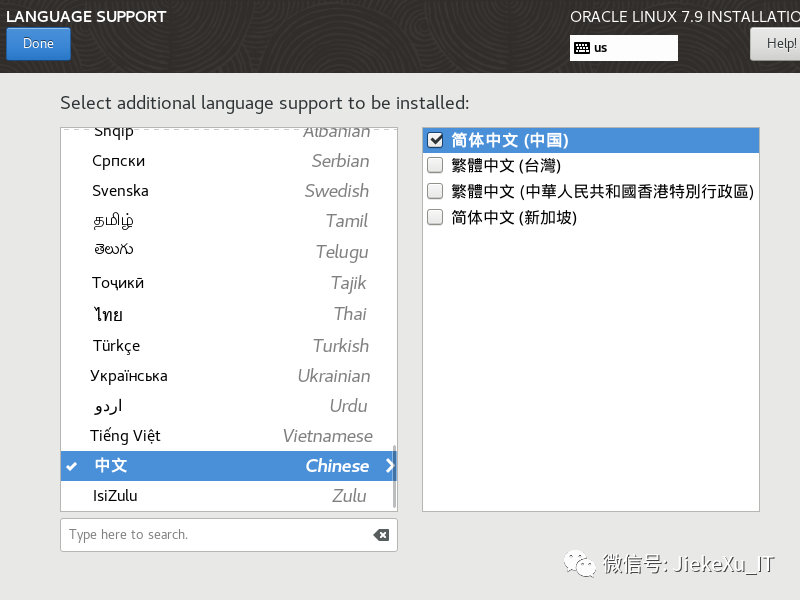

2.10 分别安装操作系统

2.11 安装完成之后,加快SSH登录

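3. Shared Storage Configuration

3.1 Create the shared disks from the command line

3.2 Create the shared disks from the GUI (optional, not used here)

3.3 Shut down both virtual machines and edit their vmx files

3.4 Restart the virtual machines

3.5 Bind the storage with multipath+udev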

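4. Preparing for the 19c RAC Installation

4.1 Hardware configuration and system status

4.1.1 Check the operating system

4.1.2 Check memory

4.1.3 Check swap

4.1.4 Check /tmp

4.1.4 Check the time and time zone

4.2 Hostnames and the hosts file

4.2.1 Set and check the hostname

4.2.2 Adjust the hosts file

4.3 (Virtual) NIC configuration and the network file

4.3.1 (Optional) Disable the virtual NIC

4.3.2 Check the NIC names and IPs on each node

4.3.3 Test connectivity

4.3.4 Adjust network

4.4 Adjust /dev/shm

4.5 Disable THP and NUMA

4.6 Disable the firewall

4.7 Disable SELinux

4.8 Configure the yum repository

4.8 Install software packages

4.9 Configure kernel parameters

4.10 Disable the avahi service

4.11 Disable other services

4.12 Configure the ssh service

4.13 HugePages configuration (optional)

4.14 Modify the login configuration

4.15 Configure user limits

4.16 Configure the NTP service (optional)

4.16.1 Using ctss

4.16.2 Using ntp

4.16.3 Using chrony

4.17 Create groups and users

4.18 Create directories

4.19 Configure user environment variables

4.19.1 grid

4.19.2 oracle

4.20 Configure shared storage (multipath+udev)

4.20.1 multipath

4.20.2 UDEV

4.20.2 UDEV (without multipath)

4.20.3 afd (not recommended)

4.21 Configure the I/O scheduler

4.22 Reboot the OS

4.23 Run the overall check script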

五、安装GI+RU

5.1 修改软件包权限

5.2 解压缩软件

5.2.1 解压缩grid 软件

5.2.2 升级OPatch

5.2.3 解压缩19.11RU

5.3 安装cvuqdisk软件

5.4 配置grid 用户ssh(可选)

5.5 安装前检查

5.6 执行安装(直接升级19.11)

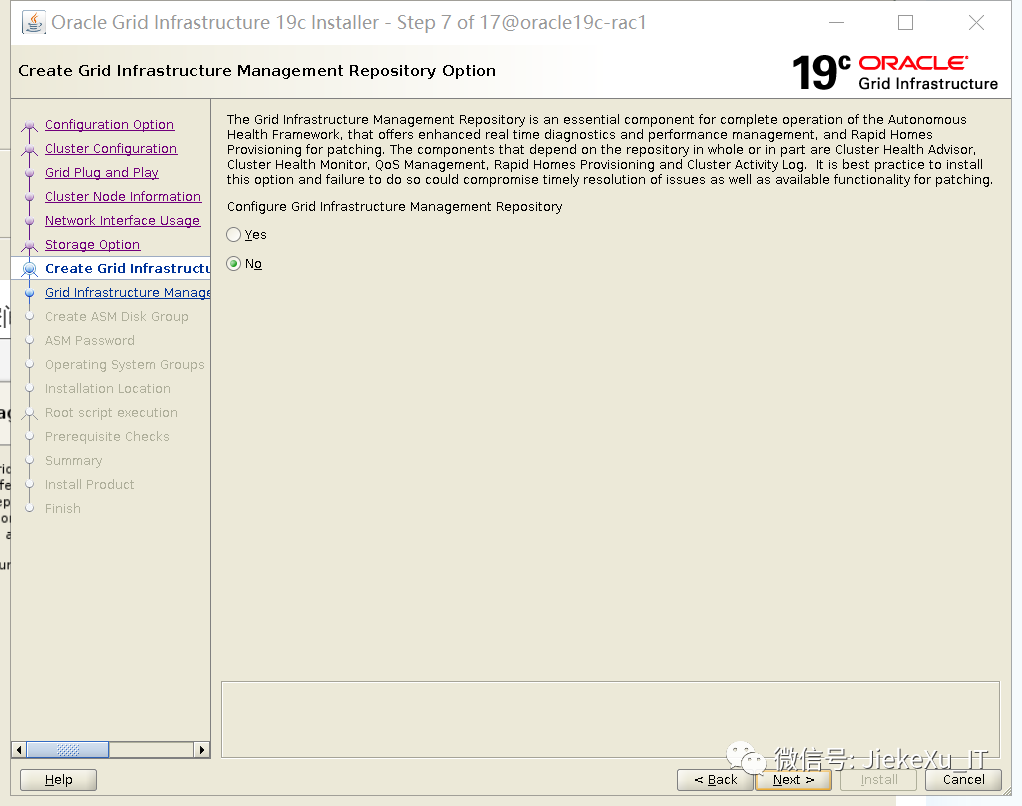

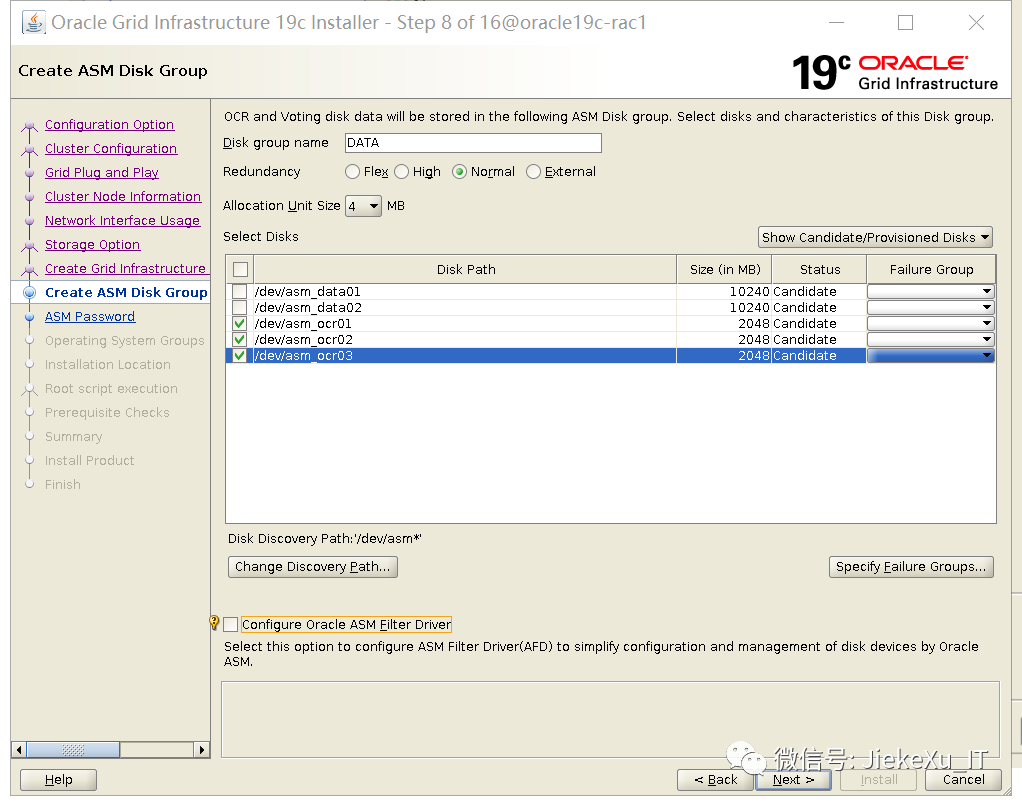

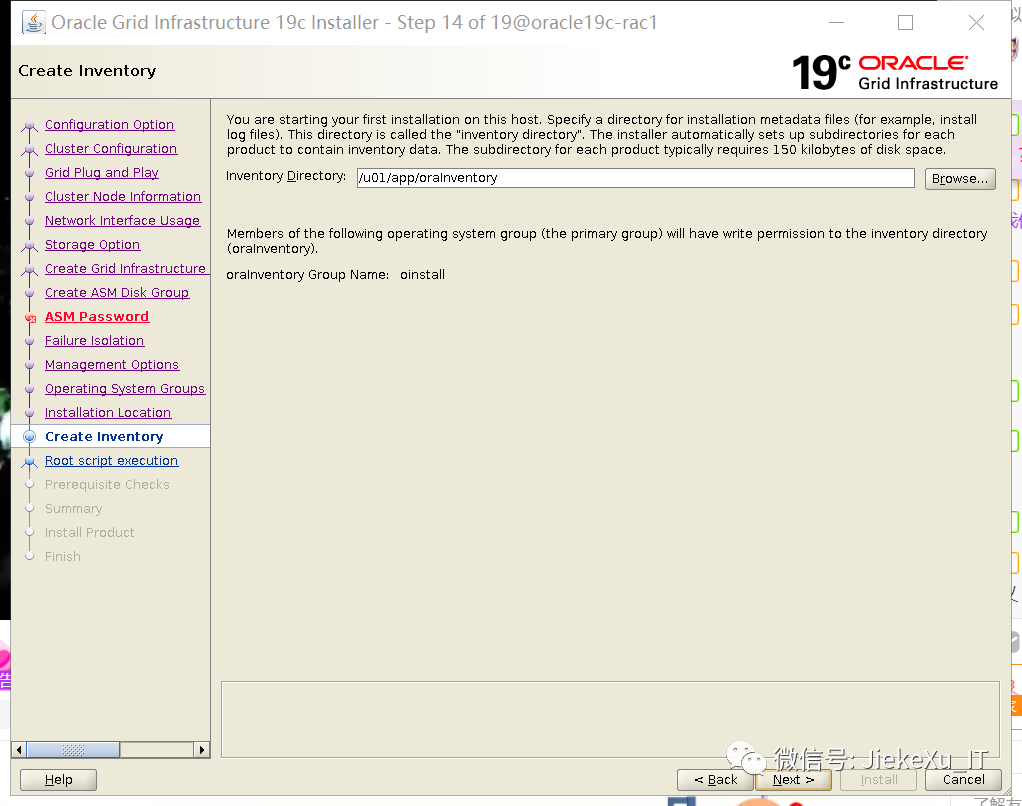

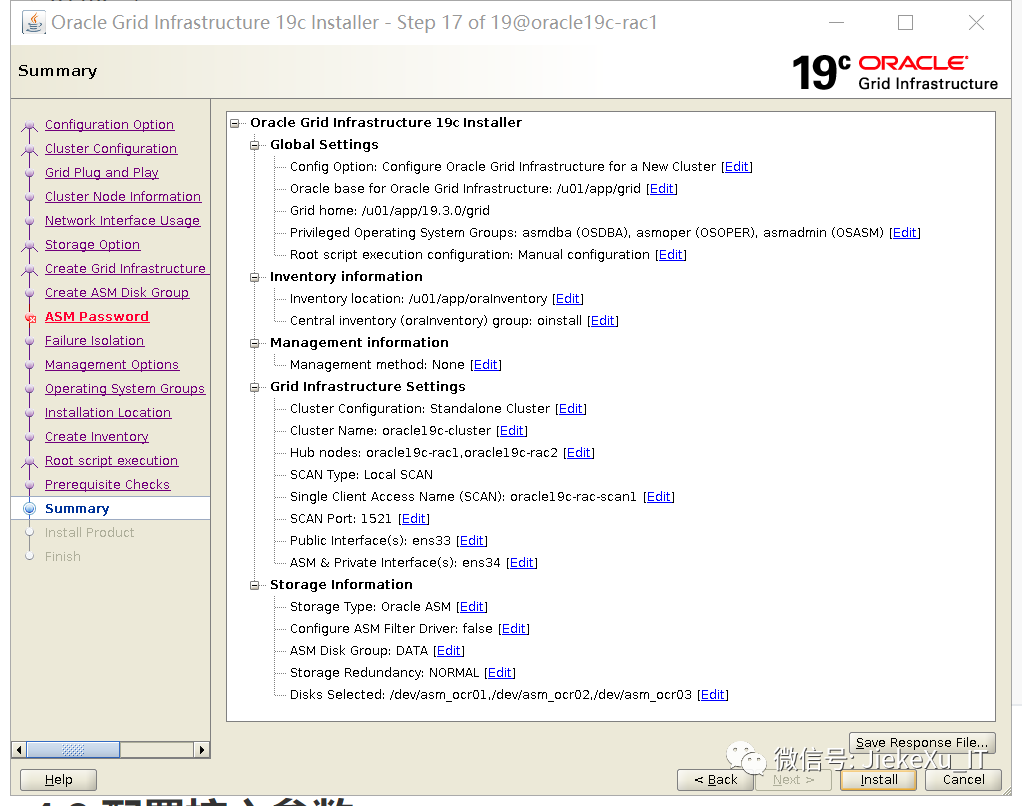

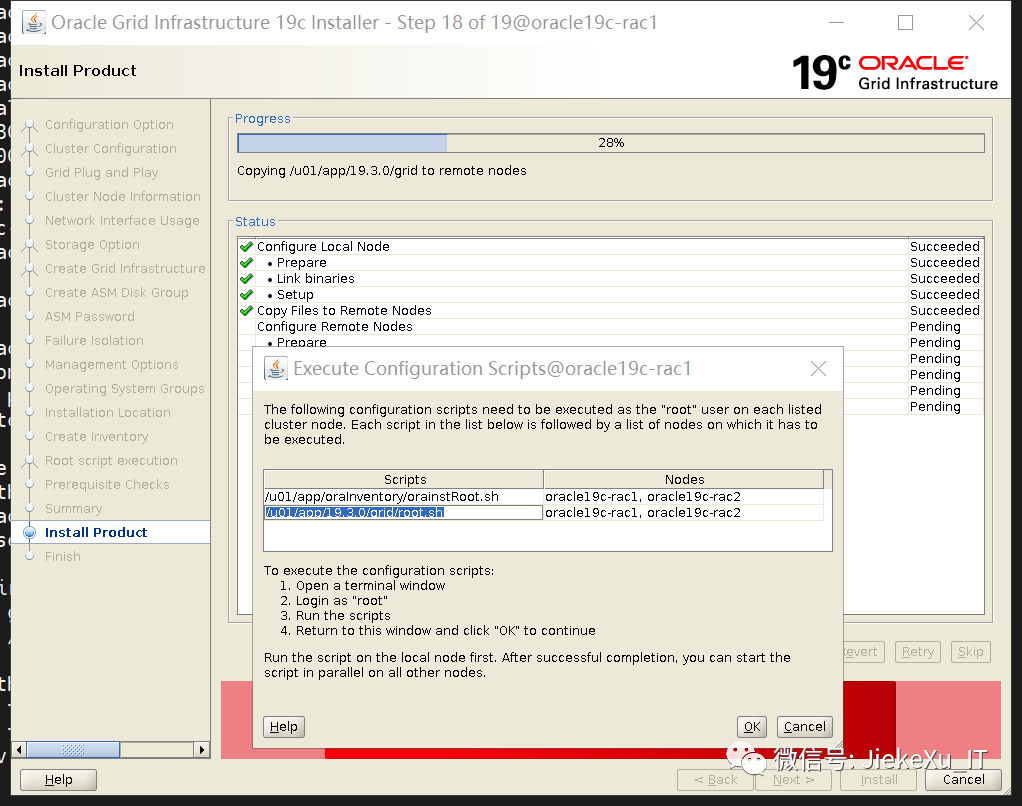

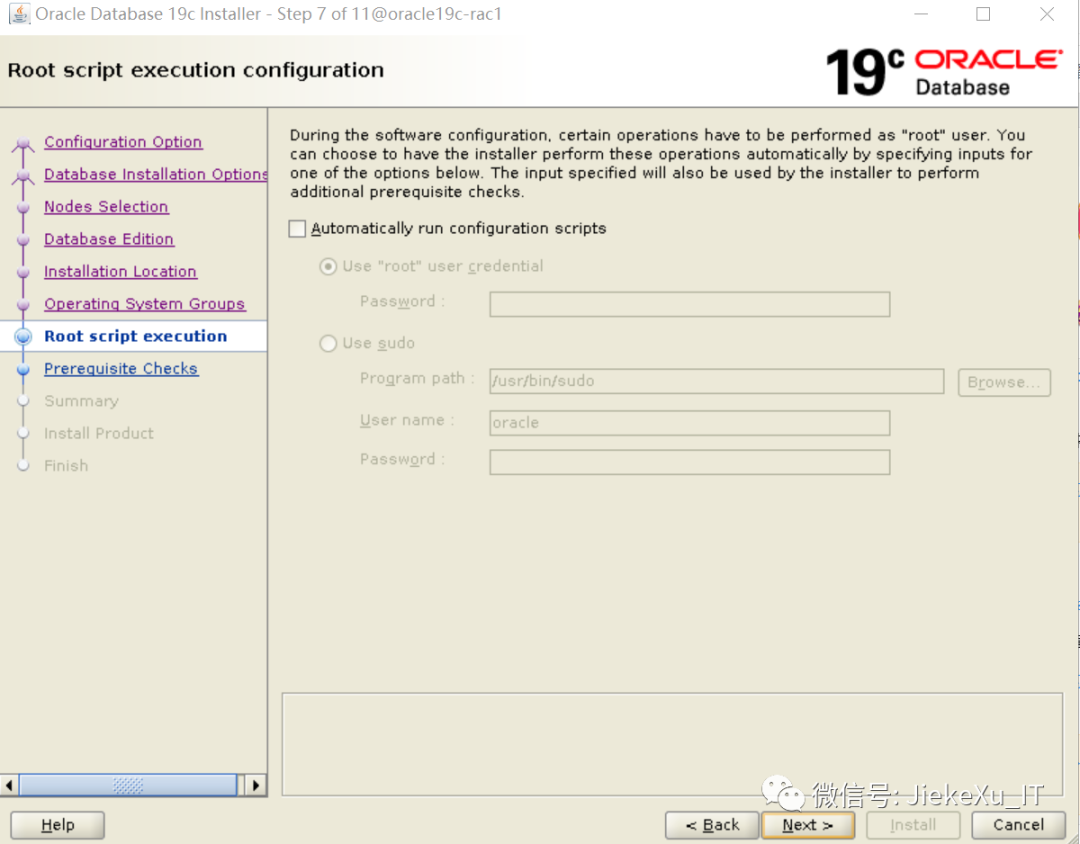

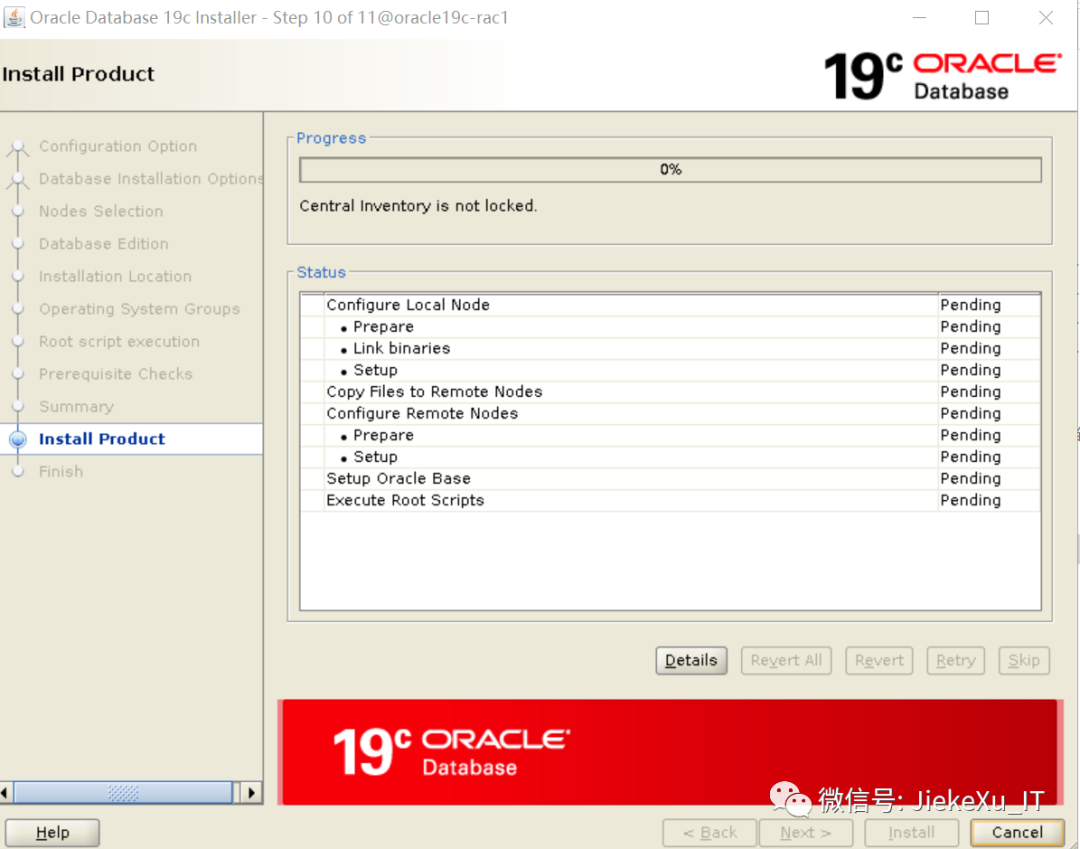

5.6.1 图形截图

5.6.2 执行脚本

5.6.3 检查

六 创建磁盘组

七、安装Oracle软件+RU

7.1 修改软件包权限

7.2 解压缩到oracle_home

7.3 升级opatch版本

7.4 安装Oracle软件(直接升级RU19.11)

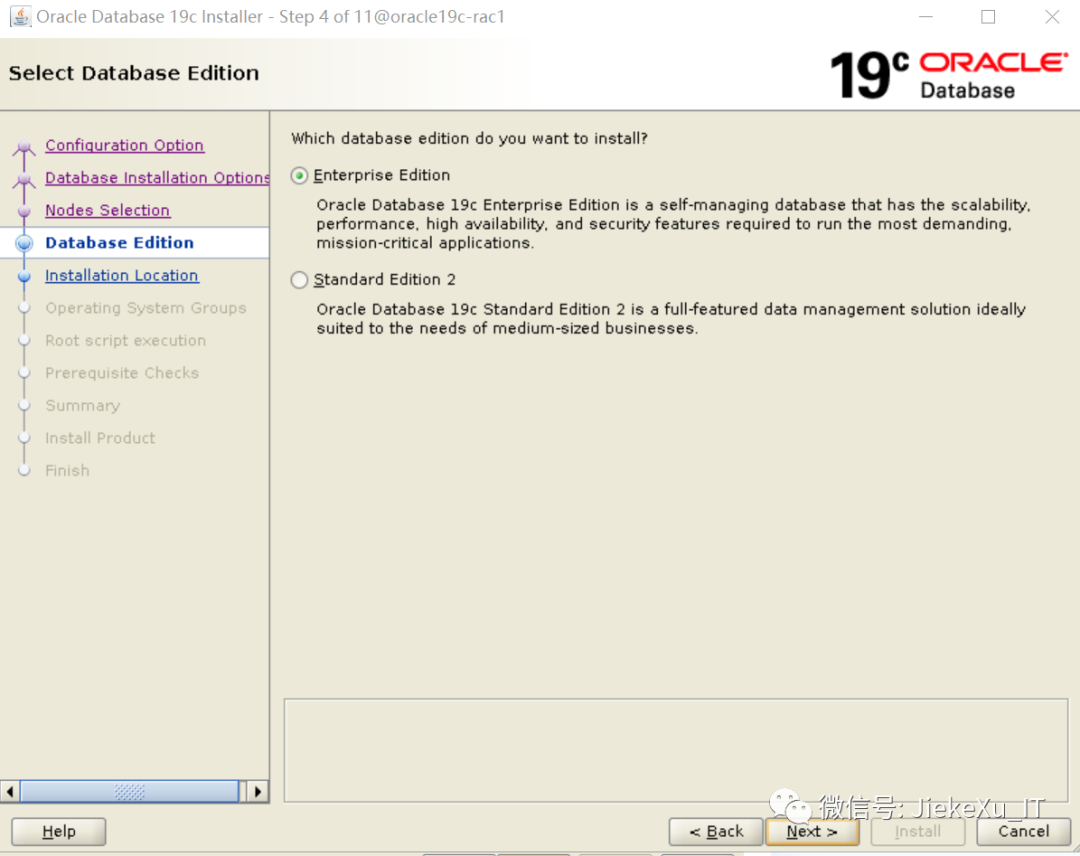

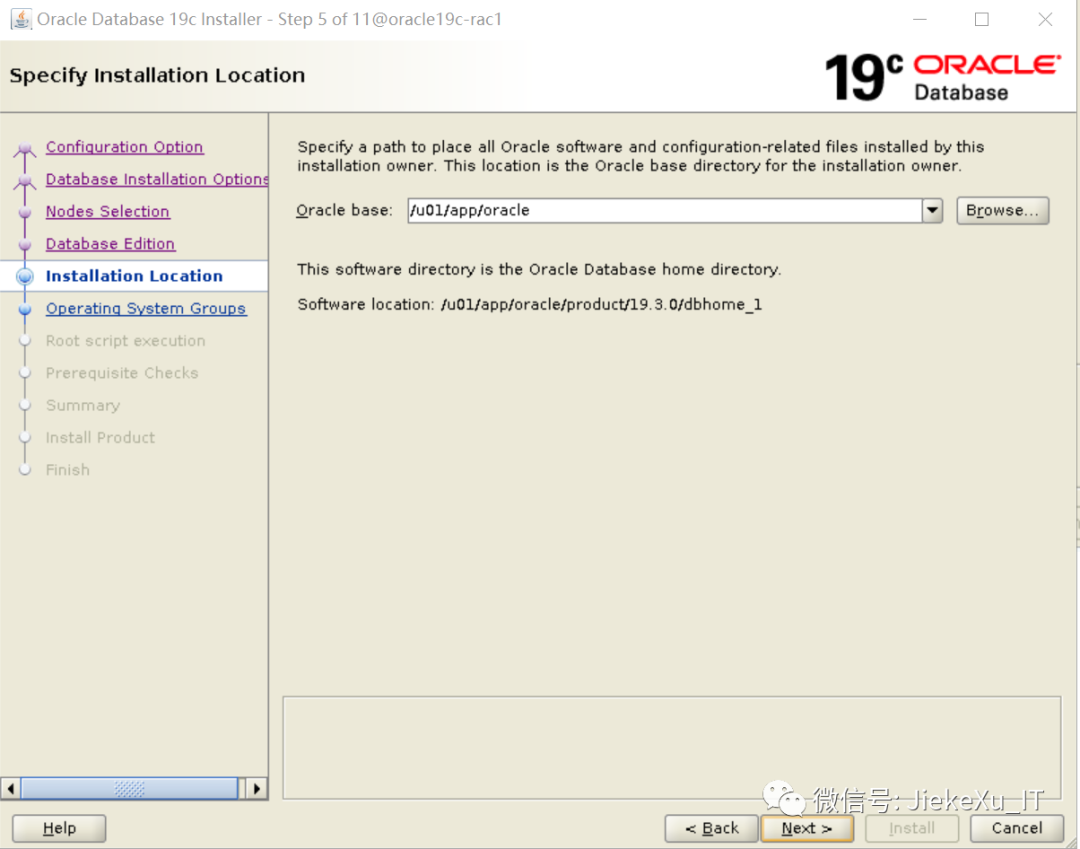

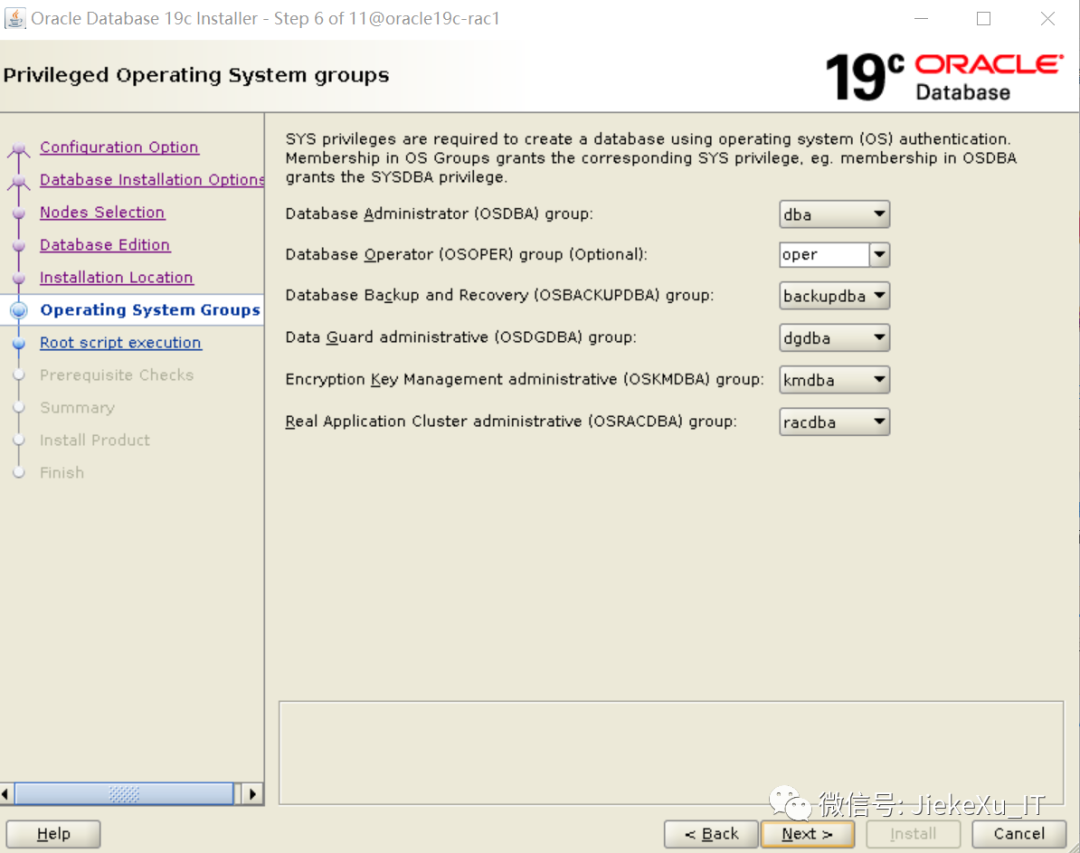

7.5 安装截图

八 创建数据库

8.1 数据库规划

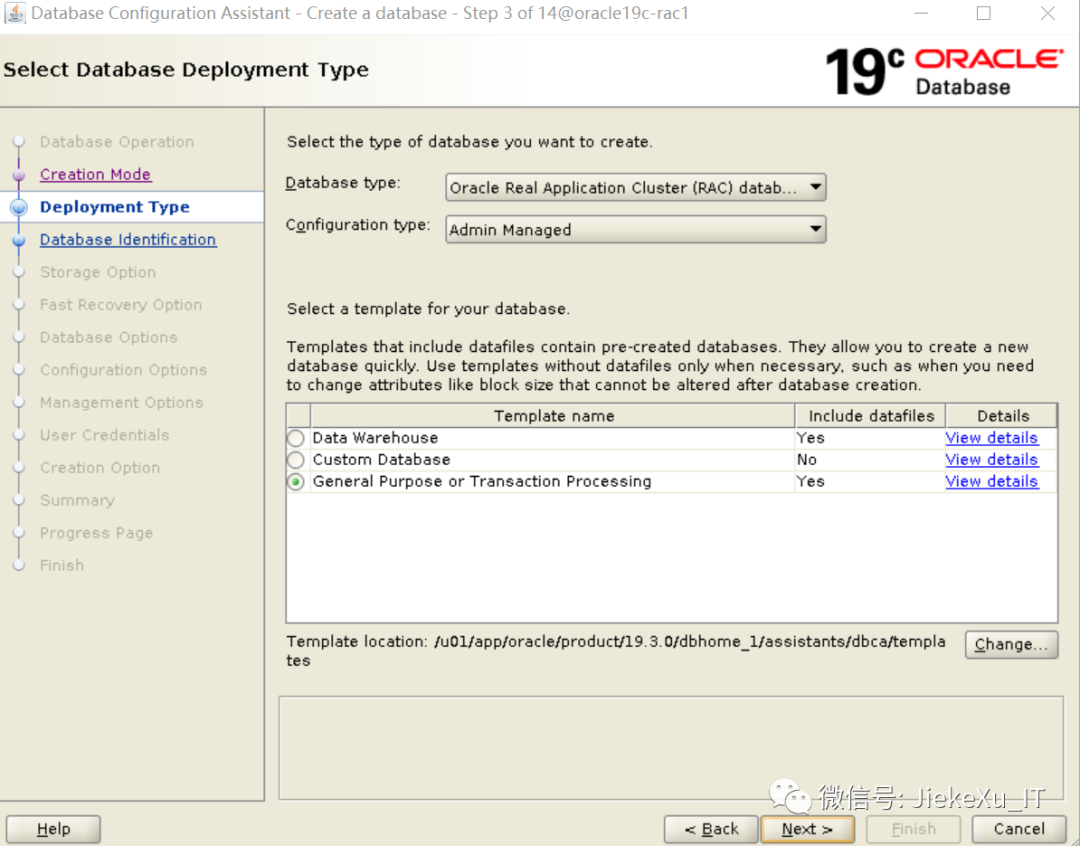

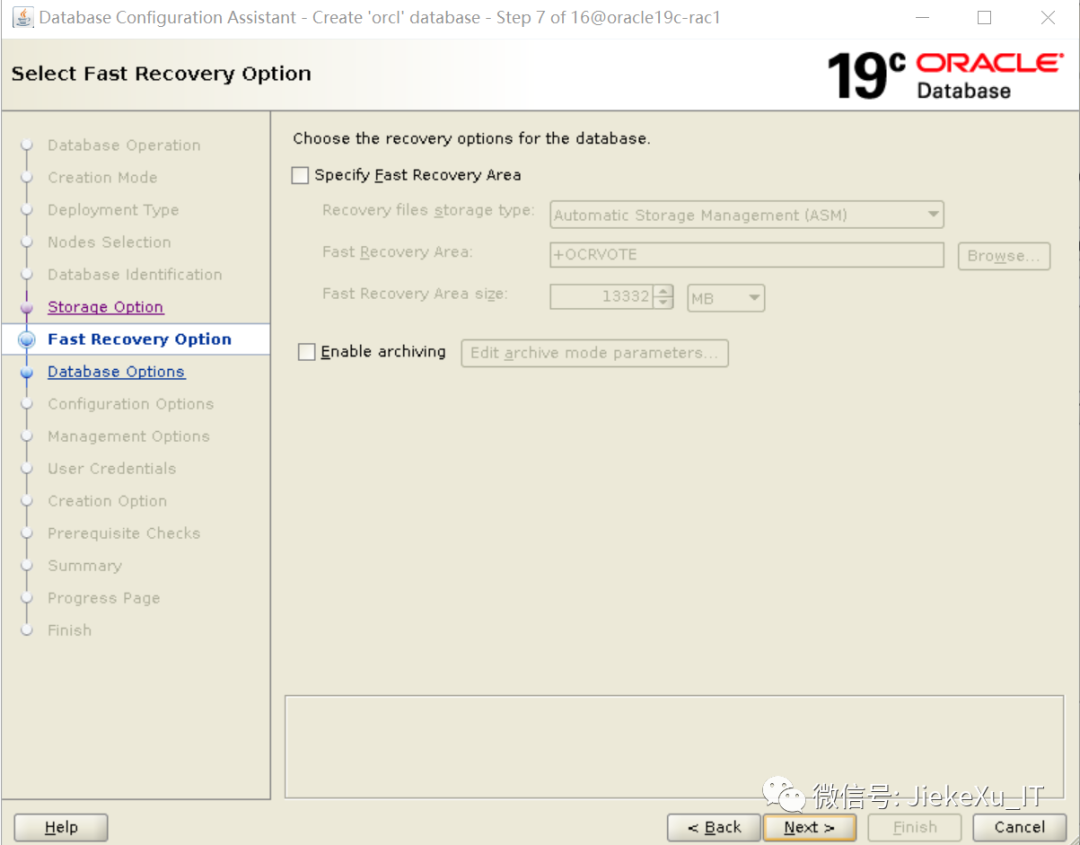

8.2 dbca建库

8.3 连接测试

8.3.1 连接CDB

8.3.2 连接pdb

8.3.3 datafile

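9. Everyday RAC Administration Commands

9.1 Cluster resource status

9.2 Cluster service status

9.3 Database status

9.4 Listener status

9.5 SCAN status

9.6 nodeapps status

9.7 VIP status

9.8 Database configuration

9.9 OCR

9.10 VOTEDISK

9.11 GI version

9.12 ASM

9.13 Starting and stopping RAC

9.14 Node status

9.15 Relocating the SCAN

9.16 Relocating a VIP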

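| Software | Version |
|---|---|
| Virtualization software | VMware®Workstation16 Pro15.0.0 build-10134415 |
| OS | OracleLinux-R7-U9-Server-x86_64-dvd.iso (7.4 or later required) |
| Oracle software | LINUX.X64_193000_db_home.zip (installation package) |
| GI software | LINUX.X64_193000_grid_home.zip (installation package) |
| RU software | p32545008_190000_Linux-x86-64.zip, 19.11 (non-rolling upgrade); p32895426_190000_Linux-x86-64.zip (rolling upgrade). Editor's note: it is not clear to me that the RU itself tells you whether an upgrade is rolling or not. |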

https://yum.oracle.com/oracle-linux-isos.html

https://www.oracle.com/database/technologies/oracle19c-linux-downloads.html

You can use sha256sum to verify the integrity of the downloaded software.

[root@dwrac1 soft]# sha256sum LINUX.X64_193000_db_home.zip

ba8329c757133da313ed3b6d7f86c5ac42cd9970a28bf2e6233f3235233aa8d8 LINUX.X64_193000_db_home.zip

[root@dwrac1 soft]# sha256sum LINUX.X64_193000_grid_home.zip

d668002664d9399cf61eb03c0d1e3687121fc890b1ddd50b35dcbe13c5307d2e LINUX.X64_193000_grid_home.zip
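The manual comparison above can be wrapped in a small helper. This is only a sketch: `verify_sha256` is a hypothetical function name, not part of any Oracle tooling, and the expected digest must still be taken from the official download page.

```shell
#!/usr/bin/env bash
# Hypothetical helper: compare a file's SHA-256 digest with an expected value.
# Returns 0 on match, 1 on mismatch, 2 if the file does not exist.
verify_sha256() {
    local file="$1" expected="$2" actual
    [ -f "$file" ] || { echo "missing: $file" >&2; return 2; }
    actual="$(sha256sum "$file" | awk '{print $1}')"
    if [ "$actual" = "$expected" ]; then
        echo "OK: $file"
    else
        echo "MISMATCH: $file (got $actual)" >&2
        return 1
    fi
}
```

For example, `verify_sha256 LINUX.X64_193000_db_home.zip ba8329c757133da313ed3b6d7f86c5ac42cd9970a28bf2e6233f3235233aa8d8` would report OK for the digest shown above.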

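Each RU is about 2.5 GB.

Editor's note: for the 19.11 RU, downloading patch 32578973 is enough. It contains the GI and DB RUs as well as the OJVM patch, which is convenient. The 19.12 RU for Linux has since been released; see https://www.modb.pro/download/137693 .

Therefore, when installing Oracle 19c RAC on Linux, the operating system should preferably be version 7.5 or later. Please keep this in mind.

The hardware requirement is 8 GB of memory, but my laptop has only 16 GB in total, so each virtual machine is given 4 GB and the installation is attempted with that.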

| Item | Configuration |
|---|---|
| CPU | 2 cores |
| MEM | 4 GB |
| DISK | 100 GB |
| NICs | Two NICs: one for the public IP, one for the private IP |
| ISO | OracleLinux-R7-U9-Server-x86_64-dvd.iso |

| Node name | Public IP (NAT) | Private IP (HOST) | Virtual IP | SCAN name | SCAN IP |
|---|---|---|---|---|---|
| oracle19c-rac1 | 192.168.245.141 | 192.168.28.141 | 192.168.245.143 | rac-scan | 192.168.245.145 |
| oracle19c-rac2 | 192.168.245.142 | 192.168.28.142 | 192.168.245.144 | | |

cp /etc/hosts /etc/hosts_`date +"%Y%m%d_%H%M%S"`

echo '#public ip

192.168.245.141 oracle19c-rac1

192.168.245.142 oracle19c-rac2

#private ip

192.168.28.141 oracle19c-rac1-priv

192.168.28.142 oracle19c-rac2-priv

#vip

192.168.245.143 oracle19c-rac1-vip

192.168.245.144 oracle19c-rac2-vip

#scanip

192.168.245.145 oracle19c-rac-scan1'>> /etc/hosts

Table 1-3 Server Configuration Checklist for Oracle Grid Infrastructure

| Check | Task |

|---|---|

| Disk space allocated to the temporary file system | At least 1 GB of space in the temporary disk space (/tmp) directory. |

| Swap space allocation relative to RAM | Between 4 GB and 16 GB: Equal to RAM More than 16 GB: 16 GB Note: If you enable HugePages for your Linux servers, then you should deduct the memory allocated to HugePages from the available RAM before calculating swap space. |

| HugePages memory allocation | Allocate memory to HugePages large enough for the System Global Areas (SGA) of all databases planned to run on the cluster, and to accommodate the System Global Area for the Grid Infrastructure Management Repository. |

| Mount point paths for the software binaries | Oracle recommends that you create an Optimal Flexible Architecture configuration as described in the appendix “Optimal Flexible Architecture” in Oracle Grid Infrastructure Installation and Upgrade Guide for your platform. |

| Ensure that the Oracle home (the Oracle home path you select for Oracle Database) uses only ASCII characters | The ASCII character restriction includes installation owner user names, which are used as a default for some home paths, as well as other directory names you may select for paths. |

| Set locale (if needed) | Specify the language and the territory, or locale, in which you want to use Oracle components. A locale is a linguistic and cultural environment in which a system or program is running. NLS (National Language Support) parameters determine the locale-specific behavior on both servers and clients. The locale setting of a component determines the language of the user interface of the component, and the globalization behavior, such as date and number formatting. |

| Set Network Time Protocol for Cluster Time Synchronization | Oracle Clusterware requires the same time zone environment variable setting on all cluster nodes. Ensure that you set the time zone synchronization across all cluster nodes using either an operating system configured network time protocol (NTP) or Oracle Cluster Time Synchronization Service. |

| Check Shared Memory File System Mount | By default, your operating system includes an entry in /etc/fstab to mount /dev/shm. However, if your Cluster Verification Utility (CVU) or Oracle Universal Installer (OUI) checks fail, then ensure that the /dev/shm mount area is of type tmpfs and is mounted with the following options: rw and exec permissions set on it; without noexec or nosuid set on it. Note: Your operating system usually sets these options as the default permissions. If they are set by the operating system, then they are not listed on the mount options. |

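| Item | Requirement |
|---|---|
| Software directories | Grid Infrastructure installation: 12 GB. Oracle Database installation: 7.3 GB. Analysis, collection, and trace files produced during installation: 10 GB. At least 100 GB in total is recommended (not counting space for ASM or NFS). |
| /tmp | At least 1 GB |
| swap | Equal to RAM for 4-16 GB of RAM; 16 GB when RAM exceeds 16 GB |
| /dev/shm | 6 GB |
| HugePages | Not configured in this plan |
| Time synchronization | |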
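The swap rule in the table can be expressed as a small function. This is a sketch under stated assumptions: `recommended_swap_gb` is a hypothetical helper name, it takes whole gigabytes, and it only encodes the two ranges quoted above. If HugePages are enabled, the quoted Oracle note says to deduct that memory from RAM before applying the rule.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the swap size (GB) suggested by the rule quoted above.
#   4-16 GB of RAM -> swap equal to RAM
#   more than 16 GB -> 16 GB
recommended_swap_gb() {
    local ram_gb="$1"
    if [ "$ram_gb" -gt 16 ]; then
        echo 16
    elif [ "$ram_gb" -ge 4 ]; then
        echo "$ram_gb"
    else
        # Below 4 GB is outside the quoted rule; report that instead of guessing.
        echo "outside the quoted range" >&2
        return 1
    fi
}
```

With the 4 GB virtual machines used here, `recommended_swap_gb 4` prints 4, which matches the 4.0G of swap shown later by `free -h`.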

| Partition | Size |
|---|---|
| /boot | 1G |
| / | 10G |
| /tmp | 10G |
| SWAP | 8G |
| /u01 | 70G |

Table 4-2 x86-64 Oracle Linux 7 Minimum Operating System Requirements

| Item | Requirements |

|---|---|

| SSH Requirement | Ensure that OpenSSH is installed on your servers. OpenSSH is the required SSH software. |

| Oracle Linux 7 | Subscribe to the Oracle Linux 7 channel on the Unbreakable Linux Network, or configure a yum repository from the Oracle Linux yum server website, and then install the Oracle Preinstallation RPM. This RPM installs all required kernel packages for Oracle Grid Infrastructure and Oracle Database installations, and performs other system configuration. Supported distributions: Oracle Linux 7.4 with the Unbreakable Enterprise Kernel 4: 4.1.12-124.19.2.el7uek.x86_64 or later; Oracle Linux 7.4 with the Unbreakable Enterprise Kernel 5: 4.14.35-1818.1.6.el7uek.x86_64 or later; Oracle Linux 7.5 with the Red Hat Compatible kernel: 3.10.0-862.11.6.el7.x86_64 or later. |

| Packages for Oracle Linux 7 | Install the latest released versions of the following packages: bc binutils compat-libcap1 compat-libstdc++-33 elfutils-libelf elfutils-libelf-devel fontconfig-devel glibc glibc-devel ksh libaio libaio-devel libXrender libXrender-devel libX11 libXau libXi libXtst libgcc libstdc++ libstdc++-devel libxcb make smartmontools sysstat. Note: If you intend to use 32-bit client applications to access 64-bit servers, then you must also install (where available) the latest 32-bit versions of the packages listed in this table. |

| Optional Packages for Oracle Linux 7 | Based on your requirement, install the latest released versions of the following packages: ipmiutil (for Intelligent Platform Management Interface) net-tools (for Oracle RAC and Oracle Clusterware) nfs-utils (for Oracle ACFS) python (for Oracle ACFS Remote) python-configshell (for Oracle ACFS Remote) python-rtslib (for Oracle ACFS Remote) python-six (for Oracle ACFS Remote) targetcli (for Oracle ACFS Remote) |

| KVM virtualization | Kernel-based virtual machine (KVM), also known as KVM virtualization, is certified on Oracle Database 19c for all supported Oracle Linux 7 distributions. For more information on supported virtualization technologies for Oracle Database, refer to the virtualization matrix: https://www.oracle.com/database/technologies/virtualization-matrix.html |

Table 1-6 Oracle Grid Infrastructure Storage Configuration Checks

| Check | Task |

|---|---|

| Minimum disk space (local or shared) for Oracle Grid Infrastructure Software | At least 12 GB of space for the Oracle Grid Infrastructure for a cluster home (Grid home). Oracle recommends that you allocate 100 GB to allow additional space for patches. At least 10 GB for Oracle Database Enterprise Edition. Allocate additional storage space as per your cluster configuration, as described in Oracle Clusterware Storage Space Requirements. |

| Select Oracle ASM Storage Options | During installation, based on the cluster configuration, you are asked to provide Oracle ASM storage paths for the Oracle Clusterware files. These path locations must be writable by the Oracle Grid Infrastructure installation owner (Grid user). These locations must be shared across all nodes of the cluster on Oracle ASM because the files in the Oracle ASM disk group created during installation must be available to all cluster member nodes. For Oracle Standalone Cluster deployment, shared storage, either Oracle ASM or shared file system, is locally mounted on each of the cluster nodes. For Oracle Domain Services Cluster deployment, Oracle ASM storage is shared across all nodes, and is available to Oracle Member Clusters. Oracle Member Cluster for Oracle Databases can either use storage services from the Oracle Domain Services Cluster or local Oracle ASM storage shared across all the nodes. Oracle Member Cluster for Applications always use storage services from the Oracle Domain Services Cluster. Before installing Oracle Member Cluster, create a Member Cluster Manifest file that specifies the storage details. Voting files are files that Oracle Clusterware uses to verify cluster node membership and status. Oracle Cluster Registry files (OCR) contain cluster and database configuration information for Oracle Clusterware. |

| Select Grid Infrastructure Management Repository (GIMR) Storage Option | Depending on the type of cluster you are installing, you can choose to either host the Grid Infrastructure Management Repository (GIMR) for a cluster on the same cluster or on a remote cluster. Note: Starting with Oracle Grid Infrastructure 19c, configuring GIMR is optional for Oracle Standalone Cluster deployments. For Oracle Standalone Cluster deployment, you can specify the same or separate Oracle ASM disk group for the GIMR. For Oracle Domain Services Cluster deployment, the GIMR must be configured on a separate Oracle ASM disk group. Oracle Member Clusters use the remote GIMR of the Oracle Domain Services Cluster. You must specify the GIMR details when you create the Member Cluster Manifest file before installation. |

Table 8-1 Minimum Available Space Requirements for Oracle Standalone Cluster With GIMR Configuration

| Redundancy Level | DATA Disk Group | MGMT Disk Group | Oracle Fleet Patching and Provisioning | Total Storage |
|---|---|---|---|---|
| External | 1 GB | 28 GB. Each node beyond four: 5 GB | 1 GB | 30 GB |
| Normal | 2 GB | 56 GB. Each node beyond four: 5 GB | 2 GB | 60 GB |
| High/Flex/Extended | 3 GB | 84 GB. Each node beyond four: 5 GB | 3 GB | 90 GB |

Table 8-2 Minimum Available Space Requirements for Oracle Standalone Cluster Without GIMR Configuration

| Redundancy Level | DATA Disk Group | Oracle Fleet Patching and Provisioning | Total Storage |

|---|---|---|---|

| External | 1 GB | 1 GB | 2 GB |

| Normal | 2 GB | 2 GB | 4 GB |

| High/Flex/Extended | 3 GB | 3 GB | 6 GB |

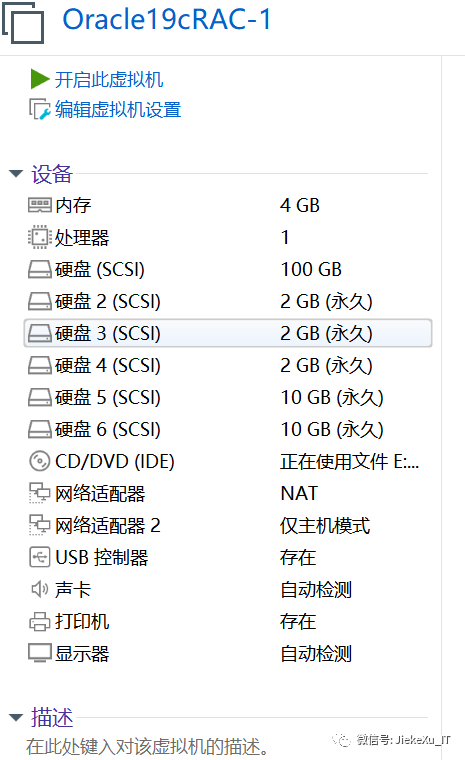

Based on these requirements, the disk groups are laid out as follows:

| Disk group | Size |
|---|---|
| OCRVOTE | 3 × 2 GB |
| DATA | 2 × 10 GB |

| Software | Version |
|---|---|
| Oracle software | LINUX.X64_193000_db_home.zip (installation package) |
| GI software | LINUX.X64_193000_grid_home.zip (installation package) |
| RU software | p32545008_190000_Linux-x86-64.zip, 19.11 (applied directly during installation); p32895426_190000_Linux-x86-64.zip (non-rolling upgrade) |
| OPatch version | OPatch 12.2.0.1.25 for DB 12.x, 18.x, 19.x, 20.x and 21.x releases (May 2021) |

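Common OS groups

| Group | Role | Privileges |
|---|---|---|
| oinstall | Install and upgrade Oracle software | |
| dba | sysdba | Create, drop, alter, start, and shut down databases; switch the archive log mode; back up and recover databases |
| oper | sysoper | Start, shut down, alter, back up, and recover databases; change the archive mode |
| asmdba | sysdba for Automatic Storage Management | Manage ASM instances |
| asmoper | sysoper for Automatic Storage Management | Start and stop ASM instances |
| asmadmin | sysasm | Mount and dismount disk groups; manage other storage devices |
| backupdba | sysbackup | Start up, shut down, and perform backup and recovery (12c) |
| dgdba | sysdg | Manage Data Guard (12c) |
| kmdba | syskm | Encryption key management operations |
| racdba | RAC administration | |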

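| **GroupName** | **GroupID** | **Description** |
|---|---|---|
| oinstall | 54421 | Oracle inventory and software owner |
| dba | 54322 | Database administrators |
| oper | 54323 | DBA operators |
| backupdba | 54324 | Backup administrators |
| dgdba | 54325 | Data Guard administrators |
| kmdba | 54326 | Key management administrators |
| asmdba | 54327 | ASM database administrators |
| asmoper | 54328 | ASM operators |
| asmadmin | 54329 | Oracle Automatic Storage Management administrators |
| racdba | 54330 | RAC administrators |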

| UID | OS user | Primary group | Secondary groups | Home directory | Default shell |
|---|---|---|---|---|---|
| 10000 | oracle | oinstall | dba,asmdba,backupdba,dgdba,kmdba,racdba,oper | /home/oracle | bash |
| 10001 | grid | oinstall | dba,asmadmin,asmdba,racdba,asmoper | /home/grid | bash |

| Directory | Path | Description |
|---|---|---|
| ORACLE_BASE (oracle) | /u01/app/oracle | Oracle base directory for the oracle user |
| ORACLE_HOME (oracle) | /u01/app/oracle/product/19.3.0/dbhome_1 | Oracle home for the oracle user |
| ORACLE_BASE (grid) | /u01/app/grid | Oracle base directory for the grid user |
| ORACLE_HOME (grid) | /u01/app/19.3.0/grid | Oracle home for the grid user |

| Item | Plan |
|---|---|
| PDB | ocrlpdb |
| Memory | SGA PGA |
| processes | 1000 |
| Character set | ZHS16GBK |
| redo | 5 groups, 200 MB each |
| undo | 2 GB, autoextend, 4 GB maximum |
| temp | 4 GB |
| Flashback | 4 GB |
| Archive mode | NOARCHIVELOG (switch to archive mode manually) |

安装完成之后,计划升级到最新的RU 19.11版本。

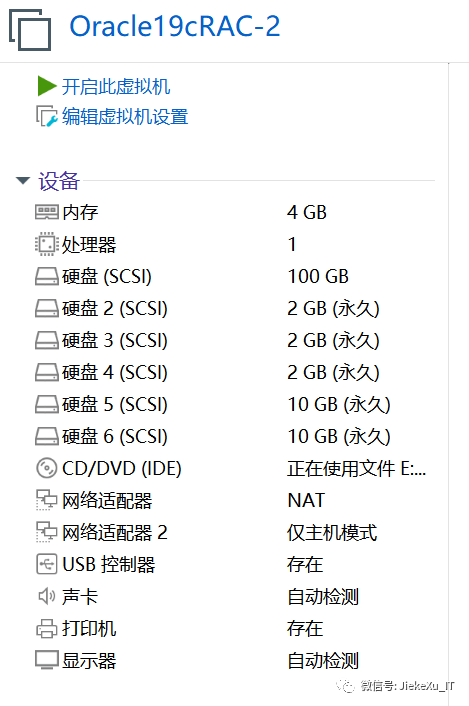

两台虚拟机创建方式相同,只是IP和主机名不同,因此相关说明只截取一台

编者注:内存这块虽是个人学习,但也需要至少 8GB 的内存方可,GI 安装官方文档说明最低需要 8GB 内存。

过程省略……

-- Set LoginGraceTime to 0 so that the login timeout is unlimited

cp /etc/ssh/sshd_config /etc/ssh/sshd_config_`date +"%Y%m%d_%H%M%S"` && sed -i '/#LoginGraceTime 2m/ s/#LoginGraceTime 2m/LoginGraceTime 0/' /etc/ssh/sshd_config && grep LoginGraceTime /etc/ssh/sshd_config

-- Speed up SSH logins by disabling DNS lookups

cp /etc/ssh/sshd_config /etc/ssh/sshd_config_`date +"%Y%m%d_%H%M%S"` && sed -i '/#UseDNS yes/ s/#UseDNS yes/UseDNS no/' /etc/ssh/sshd_config && grep UseDNS /etc/ssh/sshd_config
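The two sed commands above only match the stock commented defaults (`#LoginGraceTime 2m`, `#UseDNS yes`). A slightly more tolerant variant is sketched below. `set_sshd_option` is a hypothetical helper, not an OpenSSH tool; it assumes GNU sed and rewrites any line that starts with the key, commented out or not, so a prose comment that happens to begin with the key name would be rewritten too.

```shell
#!/usr/bin/env bash
# Hypothetical helper: set "Key value" in an sshd_config-style file.
# Replaces an existing (possibly commented-out) line for the key,
# or appends the setting if no such line exists. Safe to run repeatedly.
set_sshd_option() {
    local file="$1" key="$2" value="$3"
    if grep -Eq "^[[:space:]]*#?[[:space:]]*${key}[[:space:]]" "$file"; then
        sed -i -E "s|^[[:space:]]*#?[[:space:]]*${key}[[:space:]].*|${key} ${value}|" "$file"
    else
        printf '%s %s\n' "$key" "$value" >> "$file"
    fi
}
```

Usage would look like `set_sshd_option /etc/ssh/sshd_config UseDNS no` after taking the backup shown above; sshd still has to be restarted for the change to apply.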

3. Shared Storage Configuration

vmware-vdiskmanager.exe -c -s 2g -a lsilogic -t 2 "E:\vm\sharedisk19c\share-ocr01.vmdk"

vmware-vdiskmanager.exe -c -s 2g -a lsilogic -t 2 "E:\vm\sharedisk19c\share-ocr02.vmdk"

vmware-vdiskmanager.exe -c -s 2g -a lsilogic -t 2 "E:\vm\sharedisk19c\share-ocr03.vmdk"

vmware-vdiskmanager.exe -c -s 10GB -a lsilogic -t 2 "E:\vm\sharedisk19c\share-data01.vmdk"

vmware-vdiskmanager.exe -c -s 10GB -a lsilogic -t 2 "E:\vm\sharedisk19c\share-data02.vmdk"

Execution:

PS D:\app\vmware16> .\vmware-vdiskmanager.exe -c -s 2g -a lsilogic -t 2 "E:\vm\sharedisk19c\share-ocr01.vmdk"

Creating disk 'E:\vm\sharedisk19c\share-ocr01.vmdk'

Create: 100% done.

Virtual disk creation successful.

PS D:\app\vmware16> .\vmware-vdiskmanager.exe -c -s 2g -a lsilogic -t 2 "E:\vm\sharedisk19c\share-ocr02.vmdk"

Creating disk 'E:\vm\sharedisk19c\share-ocr02.vmdk'

Create: 100% done.

Virtual disk creation successful.

PS D:\app\vmware16> .\vmware-vdiskmanager.exe -c -s 2g -a lsilogic -t 2 "E:\vm\sharedisk19c\share-ocr03.vmdk"

Creating disk 'E:\vm\sharedisk19c\share-ocr03.vmdk'

Create: 100% done.

Virtual disk creation successful.

PS D:\app\vmware16> .\vmware-vdiskmanager.exe -c -s 10GB -a lsilogic -t 2 "E:\vm\sharedisk19c\share-data01.vmdk"

Creating disk 'E:\vm\sharedisk19c\share-data01.vmdk'

Create: 100% done.

Virtual disk creation successful.

PS D:\app\vmware16> .\vmware-vdiskmanager.exe -c -s 10GB -a lsilogic -t 2 "E:\vm\sharedisk19c\share-data02.vmdk"

Creating disk 'E:\vm\sharedisk19c\share-data02.vmdk'

Create: 100% done.

Virtual disk creation successful.

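Command reference: vmware-vdiskmanager [options]

The options must include some of the switches and parameters described below.

Virtual disk file name: the file must use the .vmdk extension. You can include the path where the virtual disk file should be stored. If a network share is mapped on the host, you can also give the full path on that share to create the virtual disk there.

-c: Create a virtual disk. You must also pass -a, -s, and -t with their parameters, followed by the file name of the virtual disk to create.

-s [GB|MB]: Size of the virtual disk, in GB or MB. The size is mandatory when creating a disk, but -s cannot be used to grow an existing disk. Allowed sizes for both IDE and SCSI adapters: minimum 100 MB, maximum 950 GB.

-a [ ide | buslogic | lsilogic ]: Disk adapter type, mandatory when creating a new virtual disk. One of: ide (IDE adapter), buslogic (BusLogic SCSI adapter), lsilogic (LSI Logic SCSI adapter).

-t [0|1|2|3]: Virtual disk type, mandatory when creating or reconfiguring a virtual disk. One of:

0: a growable virtual disk contained in a single file

1: a growable virtual disk split into 2 GB files

2: a preallocated virtual disk contained in a single file

3: a preallocated virtual disk split into 2 GB files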

Creating the disks through the GUI:

Add a hard disk

Add the hard disk on node 2

#shared disks configure

diskLib.dataCacheMaxSize=0

diskLib.dataCacheMaxReadAheadSize=0

diskLib.dataCacheMinReadAheadSize=0

diskLib.dataCachePageSize=4096

diskLib.maxUnsyncedWrites = "0"

disk.locking = "FALSE"

scsi1.sharedBus = "virtual"

scsi1.present = "TRUE"

scsi1.virtualDev = "lsilogic"

scsi1:0.mode = "independent-persistent"

scsi1:0.deviceType = "disk"

scsi1:0.present = "TRUE"

scsi1:0.fileName = "E:\vm\sharedisk19c\share-ocr01.vmdk"

scsi1:0.redo = ""

scsi1:1.mode = "independent-persistent"

scsi1:1.deviceType = "disk"

scsi1:1.present = "TRUE"

scsi1:1.fileName = "E:\vm\sharedisk19c\share-ocr02.vmdk"

scsi1:1.redo = ""

scsi1:2.mode = "independent-persistent"

scsi1:2.deviceType = "disk"

scsi1:2.present = "TRUE"

scsi1:2.fileName = "E:\vm\sharedisk19c\share-ocr03.vmdk"

scsi1:2.redo = ""

scsi1:3.mode = "independent-persistent"

scsi1:3.deviceType = "disk"

scsi1:3.present = "TRUE"

scsi1:3.fileName = "E:\vm\sharedisk19c\share-data01.vmdk"

scsi1:3.redo = ""

scsi1:4.mode = "independent-persistent"

scsi1:4.deviceType = "disk"

scsi1:4.present = "TRUE"

scsi1:4.fileName = "E:\vm\sharedisk19c\share-data02.vmdk"

scsi1:4.redo = ""
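The five per-disk stanzas above follow one pattern, so they can be generated rather than typed by hand. The following is a sketch only: `vmx_shared_disk` is a hypothetical helper that prints text to paste into the .vmx file; it does not edit the file and does not emit the `diskLib.*`, `disk.locking`, or controller-level `scsi1.*` lines.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the .vmx entries for one shared disk on controller scsi1.
vmx_shared_disk() {
    local index="$1" path="$2"
    printf 'scsi1:%s.mode = "independent-persistent"\n' "$index"
    printf 'scsi1:%s.deviceType = "disk"\n' "$index"
    printf 'scsi1:%s.present = "TRUE"\n' "$index"
    printf 'scsi1:%s.fileName = "%s"\n' "$index" "$path"
    printf 'scsi1:%s.redo = ""\n' "$index"
}

# Emit stanzas for the five disks created earlier, numbered 0 through 4.
i=0
for name in share-ocr01 share-ocr02 share-ocr03 share-data01 share-data02; do
    vmx_shared_disk "$i" "E:\\vm\\sharedisk19c\\${name}.vmdk"
    i=$((i + 1))
done
```

The same generated text has to be added to the .vmx file of both virtual machines while they are shut down.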

Reopen the virtual machine settings to confirm the disks.

See the configuration in section 4.20 below for details.

[root@oracle19c-rac1 ~]# cat /etc/oracle-release

Oracle Linux Server release 7.9

[root@oracle19c-rac2 ~]# cat /etc/oracle-release

Oracle Linux Server release 7.9

[root@oracle19c-rac1 ~]# dmidecode |grep Name

Product Name: VMware Virtual Platform

Product Name: 440BX Desktop Reference Platform

Manufacturer Name: Intel

CPU:

[root@oracle19c-rac1 ~]# dmidecode |grep -i cpu|grep -i version|awk -F ':' '{print $2}'

Intel(R) Core(TM) i5-10210U CPU @ 1.60GHz

dmidecode|grep -A5 "Memory Device"|grep Size|grep -v No |grep -v Range

[root@oracle19c-rac1 ~]# dmidecode|grep -A5 "Memory Device"|grep Size|grep -v No |grep -v Range

Size: 4096 MB

[root@oracle19c-rac2 ~]# dmidecode|grep -A5 "Memory Device"|grep Size|grep -v No |grep -v Range

Size: 4096 MB

or

grep MemTotal /proc/meminfo | awk '{print $2}'

[root@oracle19c-rac1 ~]# free -h

total used free shared buff/cache available

Mem: 3.8G 154M 3.6G 8.8M 121M 3.5G

Swap: 4.0G 0B 4.0G

[root@oracle19c-rac1 ~]#

[root@oracle19c-rac2 ~]# free -h

total used free shared buff/cache available

Mem: 3.8G 155M 3.6G 8.8M 121M 3.5G

Swap: 4.0G 0B 4.0G

or

grep SwapTotal /proc/meminfo | awk '{print $2}'

[root@oracle19c-rac1 ~]# grep MemTotal /proc/meminfo | awk '{print $2}'

4021836

[root@oracle19c-rac1 ~]# grep SwapTotal /proc/meminfo | awk '{print $2}'

4194300
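The two `grep | awk` checks above can be folded into reusable functions. This is a sketch; `meminfo_kb` and `meminfo_at_least_kb` are hypothetical names, and the threshold is passed in kB because `MemTotal` reports slightly less than the nominal RAM (4021836 kB on these 4 GB virtual machines).

```shell
#!/usr/bin/env bash
# Hypothetical helper: print a field's value in kB from a meminfo-format file.
meminfo_kb() {
    local field="$1" file="${2:-/proc/meminfo}"
    awk -v f="${field}:" '$1 == f { print $2 }' "$file"
}

# Hypothetical helper: succeed if the field is at least min_kb.
meminfo_at_least_kb() {
    local field="$1" min_kb="$2" file="${3:-/proc/meminfo}" kb
    kb="$(meminfo_kb "$field" "$file")"
    [ -n "$kb" ] && [ "$kb" -ge "$min_kb" ]
}
```

For instance, `meminfo_at_least_kb SwapTotal "$(meminfo_kb MemTotal)"` checks that swap is at least as large as the reported RAM, which is what the 4-16 GB rule asks for.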

[root@oracle19c-rac1 ~]# df -h /tmp

Filesystem Size Used Avail Use% Mounted on

/dev/mapper/ol-tmp 10G 33M 10G 1% /tmp

[root@oracle19c-rac2 ~]# df -h /tmp

Filesystem Size Used Avail Use% Mounted on

/dev/mapper/ol-tmp 10G 33M 10G 1% /tmp

Check the time and time zone

[root@oracle19c-rac1 ~]# date

Tue Jul 27 23:14:02 CST 2021

[root@oracle19c-rac2 ~]# date

Tue Jul 27 23:14:01 CST 2021

Time zone:

[root@oracle19c-rac1 ~]# timedatectl status|grep Local

Local time: Tue 2021-07-27 23:15:42 CST

[root@oracle19c-rac1 ~]# date -R

Tue, 27 Jul 2021 23:16:21 +0800

[root@oracle19c-rac2 ~]# timedatectl status|grep Local

Local time: Tue 2021-07-27 23:15:41 CST

[root@oracle19c-rac2 ~]# date -R

Tue, 27 Jul 2021 23:16:20 +0800

[root@oracle19c-rac2 ~]#

[root@oracle19c-rac1 ~]# timedatectl | grep "Asia/Shanghai"

Time zone: Asia/Shanghai (CST, +0800)

[root@oracle19c-rac2 ~]# timedatectl | grep "Asia/Shanghai"

Time zone: Asia/Shanghai (CST, +0800)

-- Set the time zone:

timedatectl set-timezone "Asia/Shanghai" && timedatectl status|grep Local

[root@oracle19c-rac1 ~]# hostnamectl status

Static hostname: oracle19c-rac1

Icon name: computer-vm

Chassis: vm

Machine ID: 34ebaf27901a40b48fc42276652571d4

Boot ID: 0f9f03ed0ac1456aa94b5a76fe8bbead

Virtualization: vmware

Operating System: Oracle Linux Server 7.9

CPE OS Name: cpe:/o:oracle:linux:7:9:server

Kernel: Linux 5.4.17-2102.201.3.el7uek.x86_64

Architecture: x86-64

[root@oracle19c-rac2 ~]# hostnamectl status

Static hostname: oracle19c-rac2

Icon name: computer-vm

Chassis: vm

Machine ID: f1b57a32977647909b24903f1e20dcf6

Boot ID: 32567092d4c140e6a1f82cada7da5e07

Virtualization: vmware

Operating System: Oracle Linux Server 7.9

CPE OS Name: cpe:/o:oracle:linux:7:9:server

Kernel: Linux 5.4.17-2102.201.3.el7uek.x86_64

Architecture: x86-64

To set the hostname:

hostnamectl set-hostname oracle19c-rac1

hostnamectl set-hostname oracle19c-rac2

-- A hostname may contain lowercase letters, digits, and hyphens (-), and must start with a lowercase letter.

cp /etc/hosts /etc/hosts_`date +"%Y%m%d_%H%M%S"`

echo '#public ip

192.168.245.141 oracle19c-rac1

192.168.245.142 oracle19c-rac2

#private ip

192.168.28.141 oracle19c-rac1-priv

192.168.28.142 oracle19c-rac2-priv

#vip

192.168.245.143 oracle19c-rac1-vip

192.168.245.144 oracle19c-rac2-vip

#scanip

192.168.245.145 oracle19c-rac-scan1'>> /etc/hosts

[root@oracle19c-rac1 ~]# cat /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

#public ip

192.168.245.141 oracle19c-rac1

192.168.245.142 oracle19c-rac2

#private ip

192.168.28.141 oracle19c-rac1-priv

192.168.28.142 oracle19c-rac2-priv

#vip

192.168.245.143 oracle19c-rac1-vip

192.168.245.144 oracle19c-rac2-vip

#scanip

192.168.245.145 oracle19c-rac-scan1

[root@oracle19c-rac2 ~]# cat /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

#public ip

192.168.245.141 oracle19c-rac1

192.168.245.142 oracle19c-rac2

#private ip

192.168.28.141 oracle19c-rac1-priv

192.168.28.142 oracle19c-rac2-priv

#vip

192.168.245.143 oracle19c-rac1-vip

192.168.245.144 oracle19c-rac2-vip

#scanip

192.168.245.145 oracle19c-rac-scan1
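Because the `echo ... >> /etc/hosts` command appends unconditionally, running it a second time duplicates every entry. One way to avoid that is sketched below; `add_host_entry` is a hypothetical helper, and it treats a name as present if it appears as any hostname field on a non-comment line.

```shell
#!/usr/bin/env bash
# Hypothetical helper: append "ip name" to a hosts-format file
# only if that name is not already listed on a non-comment line.
add_host_entry() {
    local file="$1" ip="$2" name="$3"
    if awk -v n="$name" '
            !/^[[:space:]]*#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
            END { exit !found }' "$file" 2>/dev/null; then
        return 0    # already present, nothing to do
    fi
    printf '%s %s\n' "$ip" "$name" >> "$file"
}
```

Usage on each node would be along the lines of `add_host_entry /etc/hosts 192.168.245.141 oracle19c-rac1`, repeated for the private, VIP, and SCAN names.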

systemctl stop libvirtd

systemctl disable libvirtd

Note: this step is optional on virtual machines and requires an operating system reboot.

[root@oracle19c-rac1 ~]# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:0c:29:75:c0:82 brd ff:ff:ff:ff:ff:ff

inet 192.168.245.141/24 brd 192.168.245.255 scope global noprefixroute ens33

valid_lft forever preferred_lft forever

inet6 fe80::29c:ece5:a550:57c0/64 scope link noprefixroute

valid_lft forever preferred_lft forever

3: ens34: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:0c:29:75:c0:8c brd ff:ff:ff:ff:ff:ff

inet 192.168.28.141/24 brd 192.168.28.255 scope global noprefixroute ens34

valid_lft forever preferred_lft forever

inet6 fe80::df7c:406a:1561:b983/64 scope link noprefixroute

valid_lft forever preferred_lft forever

[root@oracle19c-rac1 ~]#

[root@oracle19c-rac2 ~]# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:0c:29:4f:be:2e brd ff:ff:ff:ff:ff:ff

inet 192.168.245.142/24 brd 192.168.245.255 scope global noprefixroute ens33

valid_lft forever preferred_lft forever

inet6 fe80::bba7:d9fc:63bf:363d/64 scope link noprefixroute

valid_lft forever preferred_lft forever

3: ens34: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:0c:29:4f:be:38 brd ff:ff:ff:ff:ff:ff

inet 192.168.28.142/24 brd 192.168.28.255 scope global noprefixroute ens34

valid_lft forever preferred_lft forever

inet6 fe80::1d01:e007:acaf:aaf2/64 scope link noprefixroute

valid_lft forever preferred_lft forever

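Make sure the NIC names are identical on both nodes; otherwise the installation will run into problems.

If the names differ between the two nodes, change them on one node as follows:

cat /etc/udev/rules.d/70-persistent-net.rules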

ACTION=="add", SUBSYSTEM=="net", DRIVERS=="?*", ATTR{type}=="1", ATTR{address}=="00:50:56:86:64:82", KERNEL=="ens256" NAME="ens224"

ACTION=="add", SUBSYSTEM=="net", DRIVERS=="?*", ATTR{type}=="1", ATTR{address}=="00:50:56:86:05:a1", KERNEL=="ens161" NAME="ens192"

[root@oracle19c-rac1 ~]# ping oracle19c-rac1

PING oracle19c-rac1 (192.168.245.141) 56(84) bytes of data.

64 bytes from oracle19c-rac1 (192.168.245.141): icmp_seq=1 ttl=64 time=0.101 ms

64 bytes from oracle19c-rac1 (192.168.245.141): icmp_seq=2 ttl=64 time=0.056 ms

^C

--- oracle19c-rac1 ping statistics ---

2 packets transmitted, 2 received, 0% packet loss, time 1009ms

rtt min/avg/max/mdev = 0.056/0.078/0.101/0.024 ms

[root@oracle19c-rac1 ~]# ping oracle19c-rac2

PING oracle19c-rac2 (192.168.245.142) 56(84) bytes of data.

64 bytes from oracle19c-rac2 (192.168.245.142): icmp_seq=1 ttl=64 time=0.313 ms

64 bytes from oracle19c-rac2 (192.168.245.142): icmp_seq=2 ttl=64 time=3.21 ms

64 bytes from oracle19c-rac2 (192.168.245.142): icmp_seq=3 ttl=64 time=0.484 ms

^C

--- oracle19c-rac2 ping statistics ---

3 packets transmitted, 3 received, 0% packet loss, time 2021ms

rtt min/avg/max/mdev = 0.313/1.337/3.216/1.330 ms

[root@oracle19c-rac1 ~]# ping oracle19c-rac2-priv

PING oracle19c-rac2-priv (192.168.28.142) 56(84) bytes of data.

64 bytes from oracle19c-rac2-priv (192.168.28.142): icmp_seq=1 ttl=64 time=0.867 ms

64 bytes from oracle19c-rac2-priv (192.168.28.142): icmp_seq=2 ttl=64 time=0.453 ms

^C

--- oracle19c-rac2-priv ping statistics ---

2 packets transmitted, 2 received, 0% packet loss, time 1002ms

rtt min/avg/max/mdev = 0.453/0.660/0.867/0.207 ms

[root@oracle19c-rac1 ~]# ping oracle19c-rac1-priv

PING oracle19c-rac1-priv (192.168.28.141) 56(84) bytes of data.

64 bytes from oracle19c-rac1-priv (192.168.28.141): icmp_seq=1 ttl=64 time=0.534 ms

64 bytes from oracle19c-rac1-priv (192.168.28.141): icmp_seq=2 ttl=64 time=0.049 ms

64 bytes from oracle19c-rac1-priv (192.168.28.141): icmp_seq=3 ttl=64 time=0.114 ms

^C

--- oracle19c-rac1-priv ping statistics ---

3 packets transmitted, 3 received, 0% packet loss, time 2074ms

rtt min/avg/max/mdev = 0.049/0.232/0.534/0.215 ms

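In an Oracle cluster, Zero Configuration Networking (zeroconf) can also cause communication problems between nodes, so it should be turned off as well. For background: "Without zeroconf, a network administrator must set up network services, such as Dynamic Host Configuration Protocol (DHCP) and Domain Name System (DNS), or configure each computer's network settings manually." When the network is configured in the usual way, zeroconf can be disabled.

Run on both nodes: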

echo "NOZEROCONF=yes" >>/etc/sysconfig/network && cat /etc/sysconfig/network

[root@oracle19c-rac1 ~]# echo "NOZEROCONF=yes" >>/etc/sysconfig/network && cat /etc/sysconfig/network

# Created by anaconda

NOZEROCONF=yes

[root@oracle19c-rac2 ~]# echo "NOZEROCONF=yes" >>/etc/sysconfig/network && cat /etc/sysconfig/network

# Created by anaconda

NOZEROCONF=yes

[root@oracle19c-rac1 ~]# df -h

Filesystem Size Used Avail Use% Mounted on

devtmpfs 2.0G 0 2.0G 0% /dev

tmpfs 2.0G 0 2.0G 0% /dev/shm

tmpfs 2.0G 8.8M 2.0G 1% /run

tmpfs 2.0G 0 2.0G 0% /sys/fs/cgroup

/dev/mapper/ol-root 10G 1.4G 8.7G 14% /

/dev/mapper/ol-u01 70G 8.1G 62G 12% /u01

/dev/mapper/ol-tmp 10G 33M 10G 1% /tmp

/dev/sda1 1014M 169M 846M 17% /boot

tmpfs 393M 0 393M 0% /run/user/0

[root@oracle19c-rac2 ~]# df -h

Filesystem Size Used Avail Use% Mounted on

devtmpfs 2.0G 0 2.0G 0% /dev

tmpfs 2.0G 0 2.0G 0% /dev/shm

tmpfs 2.0G 8.8M 2.0G 1% /run

tmpfs 2.0G 0 2.0G 0% /sys/fs/cgroup

/dev/mapper/ol-root 10G 1.4G 8.7G 14% /

/dev/mapper/ol-u01 70G 33M 70G 1% /u01

/dev/mapper/ol-tmp 10G 33M 10G 1% /tmp

/dev/sda1 1014M 169M 846M 17% /boot

tmpfs 393M 0 393M 0% /run/user/0

/dev/shm needs to be resized to 4 GB.

Linux OL7/RHEL7: PRVE-0421 : No entry exists in /etc/fstab for mounting /dev/shm (Doc ID 2065603.1)

cp /etc/fstab /etc/fstab_`date +"%Y%m%d_%H%M%S"`

echo "tmpfs /dev/shm tmpfs rw,exec,size=4G 0 0">>/etc/fstab

[root@oracle19c-rac1 ~]# cp /etc/fstab /etc/fstab_`date +"%Y%m%d_%H%M%S"`

[root@oracle19c-rac1 ~]# echo "tmpfs /dev/shm tmpfs rw,exec,size=4G 0 0">>/etc/fstab

[root@oracle19c-rac1 ~]#

[root@oracle19c-rac1 ~]# cat /etc/fstab

#

# /etc/fstab

# Created by anaconda on Sun Jul 25 17:13:08 2021

#

# Accessible filesystems, by reference, are maintained under '/dev/disk'

# See man pages fstab(5), findfs(8), mount(8) and/or blkid(8) for more info

#

/dev/mapper/ol-root / xfs defaults 0 0

UUID=9d5cc891-b252-41b1-a721-0ce462e56a30 /boot xfs defaults 0 0

/dev/mapper/ol-tmp /tmp xfs defaults 0 0

/dev/mapper/ol-u01 /u01 xfs defaults 0 0

/dev/mapper/ol-swap swap swap defaults 0 0

tmpfs /dev/shm tmpfs rw,exec,size=4G 0 0

[root@oracle19c-rac1 ~]#

[root@oracle19c-rac1 ~]# mount -o remount /dev/shm

[root@oracle19c-rac1 ~]# df -h

Filesystem Size Used Avail Use% Mounted on

devtmpfs 2.0G 0 2.0G 0% /dev

tmpfs 4.0G 0 4.0G 0% /dev/shm

tmpfs 2.0G 8.8M 2.0G 1% /run

tmpfs 2.0G 0 2.0G 0% /sys/fs/cgroup

/dev/mapper/ol-root 10G 1.4G 8.7G 14% /

/dev/mapper/ol-u01 70G 8.1G 62G 12% /u01

/dev/mapper/ol-tmp 10G 33M 10G 1% /tmp

/dev/sda1 1014M 169M 846M 17% /boot

tmpfs 393M 0 393M 0% /run/user/0
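Besides the size, the Oracle checklist quoted earlier asks that /dev/shm be a tmpfs mount without `noexec` or `nosuid`. The sketch below checks exactly that wording against a `/proc/mounts`-format file. `shm_mount_ok` is a hypothetical helper; the real CVU check may apply different logic, so a failure here is only a hint to look closer.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed if /dev/shm is mounted as tmpfs
# and its mount options include neither noexec nor nosuid.
# Reads a /proc/mounts-format file (default: /proc/mounts).
shm_mount_ok() {
    local mounts="${1:-/proc/mounts}"
    awk '$2 == "/dev/shm" {
             seen = 1
             if ($3 != "tmpfs") bad = 1
             n = split($4, opts, ",")
             for (i = 1; i <= n; i++)
                 if (opts[i] == "noexec" || opts[i] == "nosuid") bad = 1
         }
         END { exit !(seen && !bad) }' "$mounts"
}
```

A typical call after the remount would be `shm_mount_ok && echo "/dev/shm options look fine"`.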

Check:

cat /sys/kernel/mm/transparent_hugepage/enabled

cat /sys/kernel/mm/transparent_hugepage/defrag

Change:

sed -i 's/quiet/quiet transparent_hugepage=never numa=off/' /etc/default/grub

grep quiet /etc/default/grub

grub2-mkconfig -o /boot/grub2/grub.cfg

After rebooting, check that the change took effect:

cat /sys/kernel/mm/transparent_hugepage/enabled

cat /proc/cmdline

# Apply temporarily, without a reboot

echo never > /sys/kernel/mm/transparent_hugepage/enabled

cat /sys/kernel/mm/transparent_hugepage/enabled
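The THP sysfs files mark the active setting with square brackets, as in `always madvise [never]`. A small sketch for extracting just that value follows; `thp_active_mode` is a hypothetical helper name, and the file path is a parameter so the same function also works on the `defrag` file.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the active value (the one in square brackets)
# from a transparent_hugepage sysfs file.
thp_active_mode() {
    local file="${1:-/sys/kernel/mm/transparent_hugepage/enabled}"
    sed -n 's/.*\[\([^]]*\)\].*/\1/p' "$file"
}
```

A check such as `[ "$(thp_active_mode)" = "never" ]` can then be run on both nodes after the reboot.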

# Disable the firewall

systemctl stop firewalld

systemctl disable firewalld

systemctl status firewalld

[root@oracle19c-rac1 ~]# systemctl stop firewalld

[root@oracle19c-rac1 ~]# systemctl disable firewalld

Removed symlink /etc/systemd/system/multi-user.target.wants/firewalld.service.

Removed symlink /etc/systemd/system/dbus-org.fedoraproject.FirewallD1.service.

[root@oracle19c-rac2 ~]# echo never > /sys/kernel/mm/transparent_hugepage/enabled

[root@oracle19c-rac2 ~]# cat /sys/kernel/mm/transparent_hugepage/enabled

always madvise [never]

cp /etc/selinux/config /etc/selinux/config_`date +"%Y%m%d_%H%M%S"`&& sed -i 's/SELINUX\=enforcing/SELINUX\=disabled/g' /etc/selinux/config

cat /etc/selinux/config

# Without rebooting

setenforce 0

getenforce

[root@oracle19c-rac1 ~]# sestatus

SELinux status: enabled

SELinuxfs mount: /sys/fs/selinux

SELinux root directory: /etc/selinux

Loaded policy name: targeted

Current mode: permissive

Mode from config file: disabled

Policy MLS status: enabled

Policy deny_unknown status: allowed

Max kernel policy version: 31

[root@oracle19c-rac2 ~]# sestatus

SELinux status: enabled

SELinuxfs mount: /sys/fs/selinux

SELinux root directory: /etc/selinux

Loaded policy name: targeted

Current mode: permissive

Mode from config file: disabled

Policy MLS status: enabled

Policy deny_unknown status: allowed

Max kernel policy version: 31

#mount cdrom

mount /dev/cdrom /mnt

# Configure

cd /etc/yum.repos.d/

mkdir bak

mv *.repo ./bak/

cat >> /etc/yum.repos.d/oracle-linux-ol7.repo << "EOF"

[base]

name=base

baseurl=file:///mnt

enabled=1

gpgcheck=0

EOF

# Test

yum repolist

# Check (per the official documentation's requirements)

rpm -q --qf '%{NAME}-%{VERSION}-%{RELEASE} (%{ARCH})\n' \

bc \

binutils \

compat-libcap1 \

compat-libstdc++-33 \

elfutils-libelf \

elfutils-libelf-devel \

fontconfig-devel \

glibc \

gcc \

gcc-c++ \

glibc \

glibc-devel \

ksh \

libstdc++ \

libstdc++-devel \

libaio \

libaio-devel \

libXrender \

libXrender-devel \

libxcb \

libX11 \

libXau \

libXi \

libXtst \

libgcc \

libstdc++-devel \

make \

sysstat \

unzip \

readline \

smartmontools

# Install the packages and utilities

yum install -y bc* ntp* binutils* compat-libcap1* compat-libstdc++* dtrace-modules* dtrace-modules-headers* dtrace-modules-provider-headers* dtrace-utils* elfutils-libelf* elfutils-libelf-devel* fontconfig-devel* glibc* glibc-devel* ksh* libaio* libaio-devel* libdtrace-ctf-devel* libXrender* libXrender-devel* libX11* libXau* libXi* libXtst* libgcc* librdmacm-devel* libstdc++* libstdc++-devel* libxcb* make* net-tools* nfs-utils* python* python-configshell* python-rtslib* python-six* targetcli* smartmontools* sysstat* gcc* nscd* unixODBC* unzip readline tigervnc*

The required package has already been uploaded to /u01/sw on both nodes.

rpm -ivh /u01/sw/compat-libstdc++-33-3.2.3-72.el7.x86_64.rpm

# Check

rpm -q --qf '%{NAME}-%{VERSION}-%{RELEASE} (%{ARCH})\n' \

bc \

binutils \

compat-libcap1 \

compat-libstdc++-33 \

elfutils-libelf \

elfutils-libelf-devel \

fontconfig-devel \

glibc \

gcc \

gcc-c++ \

glibc \

glibc-devel \

ksh \

libstdc++ \

libstdc++-devel \

libaio \

libaio-devel \

libXrender \

libXrender-devel \

libxcb \

libX11 \

libXau \

libXi \

libXtst \

libgcc \

libstdc++-devel \

make \

sysstat \

unzip \

readline \

smartmontools
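The `rpm -q` listing above prints every package, so a missing one is easy to overlook. As a small sketch, the helper below prints only the packages that are not installed. The name `missing_packages` is hypothetical.

```shell
# Hypothetical helper: print the names of packages that rpm does not report as installed.
missing_packages() {
    for pkg in "$@"; do
        # rpm -q exits non-zero when the package is not installed.
        rpm -q "$pkg" >/dev/null 2>&1 || echo "$pkg"
    done
}

# Example call (commented out so that loading this snippet has no side effects):
# missing_packages bc binutils compat-libcap1 compat-libstdc++-33 ksh libaio libaio-devel
```

Empty output means every listed package is present.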

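Editor's note: before Linux 7, kernel parameters were set in /etc/sysctl.conf. From Linux 7.x onwards the official 19c documentation uses /etc/sysctl.d/97-oracle-database-sysctl.conf instead, but editing /etc/sysctl.conf still works and makes no difference. To apply the settings: /sbin/sysctl --system

The main kernel parameters can be calculated by hand as follows: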

MEM=$(expr $(grep MemTotal /proc/meminfo|awk '{print $2}') \* 1024)

SHMALL=$(expr $MEM / $(getconf PAGE_SIZE))

SHMMAX=$(expr $MEM \* 3 / 5) # set to 3/5 of RAM here

echo $MEM

echo $SHMALL

echo $SHMMAX

min_free_kbytes = sqrt(lowmem_kbytes * 16) = 4 * sqrt(lowmem_kbytes) (note: lowmem_kbytes can be taken as the total system memory in kB)
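As a rough sketch, the formula above can be evaluated with `awk`. The function name `calc_min_free_kbytes` is hypothetical, and the input is assumed to be memory in kB, as reported by MemTotal.

```shell
# Hypothetical helper: 4 * sqrt(lowmem_kbytes), truncated to an integer.
calc_min_free_kbytes() {
    awk -v k="$1" 'BEGIN { printf "%d\n", 4 * sqrt(k) }'
}

# Example call (commented out):
# calc_min_free_kbytes "$(awk '/MemTotal/ {print $2}' /proc/meminfo)"
```

For a 4 GiB machine (4194304 kB) this gives 8192. The sysctl settings later in this section use a larger fixed value instead.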

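vm.nr_hugepages = (RAM in MB / 3 + ASM instance memory 4096 MB) / Hugepagesize in MB

# One third of the operating system memory plus 4 GB for the ASM instance.

# On x86, Hugepagesize = 2048 kB (2 MB); on LinuxONE, Hugepagesize = 1024 kB (1 MB).

# Example, x86 with 64 GB RAM: (64*1024/3 + 4096) / 2 ≈ 12971

# Example, x86 with 32 GB RAM: (32*1024/3 + 4096) / 2 ≈ 7509

# Example, x86 with 16 GB RAM: (16*1024/3 + 4096) / 2 ≈ 4778

# LinuxONE with 64 GB RAM: (64*1024/3 + 4096) / 1 ≈ 25942

# LinuxONE with 32 GB RAM: (32*1024/3 + 4096) / 1 ≈ 15019

# 256 GB RAM, before dividing by Hugepagesize: 256*1024/3 + 4096 ≈ 91477 MB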
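The rule of thumb above (one third of RAM plus 4096 MB for ASM, divided by the huge page size) can be sketched in integer shell arithmetic. The name `calc_nr_hugepages` is hypothetical. This version always rounds up, so it can differ by one page from the hand-rounded examples.

```shell
# Hypothetical helper: (RAM_MB / 3 + ASM_MB) / HUGEPAGE_MB, rounded up.
# Usage: calc_nr_hugepages RAM_GB HUGEPAGE_MB [ASM_MB]
calc_nr_hugepages() {
    mem_gb=$1
    hp_mb=$2
    asm_mb=${3:-4096}                 # ASM instance memory, 4096 MB by default
    mem_mb=$((mem_gb * 1024))
    # (mem_mb/3 + asm_mb) / hp_mb  ==  (mem_mb + 3*asm_mb) / (3*hp_mb)
    num=$((mem_mb + 3 * asm_mb))
    den=$((3 * hp_mb))
    echo $(( (num + den - 1) / den )) # ceiling division
}
```

For example, `calc_nr_hugepages 64 2` covers the x86 case with 64 GB of RAM and 2 MB pages.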

cp /etc/sysctl.conf /etc/sysctl.conf.bak

memTotal=$(grep MemTotal /proc/meminfo | awk '{print $2}')

totalMemory=$((memTotal / 2048))

shmall=$((memTotal / 4))

if [ $shmall -lt 2097152 ]; then

shmall=2097152

fi

shmmax=$((memTotal * 1024 - 1))

if [ "$shmmax" -lt 4294967295 ]; then

shmmax=4294967295

fi

cat <<EOF>>/etc/sysctl.conf

fs.aio-max-nr = 1048576

fs.file-max = 6815744

kernel.shmall = $shmall

kernel.shmmax = $shmmax

kernel.shmmni = 4096

kernel.sem = 250 32000 100 128

net.ipv4.ip_local_port_range = 9000 65500

net.core.rmem_default = 16777216

net.core.rmem_max = 16777216

net.core.wmem_max = 16777216

net.core.wmem_default = 16777216

fs.aio-max-nr = 6194304

vm.dirty_ratio=20

vm.dirty_background_ratio=3

vm.dirty_writeback_centisecs=100

vm.dirty_expire_centisecs=500

vm.swappiness=10

vm.min_free_kbytes=524288

net.core.netdev_max_backlog = 30000

net.core.netdev_budget = 600

#vm.nr_hugepages =

net.ipv4.conf.all.rp_filter = 2

net.ipv4.conf.default.rp_filter = 2

net.ipv4.ipfrag_time = 60

net.ipv4.ipfrag_low_thresh=6291456

net.ipv4.ipfrag_high_thresh = 8388608

EOF

sysctl -p

[root@oracle19c-rac2 ~]# sysctl -p

fs.aio-max-nr = 1048576

fs.file-max = 6815744

kernel.shmall = 2097152

kernel.shmmax = 4294967295

kernel.shmmni = 4096

kernel.sem = 250 32000 100 128

net.ipv4.ip_local_port_range = 9000 65500

net.core.rmem_default = 16777216

net.core.rmem_max = 16777216

net.core.wmem_max = 16777216

net.core.wmem_default = 16777216

fs.aio-max-nr = 6194304

vm.dirty_ratio = 20

vm.dirty_background_ratio = 3

vm.dirty_writeback_centisecs = 100

vm.dirty_expire_centisecs = 500

vm.swappiness = 10

vm.min_free_kbytes = 524288

net.core.netdev_max_backlog = 30000

net.core.netdev_budget = 600

net.ipv4.conf.all.rp_filter = 2

net.ipv4.conf.default.rp_filter = 2

net.ipv4.ipfrag_time = 60

net.ipv4.ipfrag_low_thresh = 6291456

net.ipv4.ipfrag_high_thresh = 8388608
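The parameter list above sets fs.aio-max-nr twice, and the later value wins. As a sketch, the helper below reports keys that appear more than once in a sysctl-style file. The name `duplicate_sysctl_keys` is hypothetical.

```shell
# Hypothetical helper: list keys defined more than once in a sysctl-style file.
duplicate_sysctl_keys() {
    awk -F= '
        /^[ \t]*#/ || NF < 2 { next }                      # skip comments and lines without "="
        { key = $1; gsub(/[ \t]/, "", key); count[key]++ } # normalise the key, count it
        END { for (k in count) if (count[k] > 1) print k }
    ' "$1"
}

# Example call (commented out):
# duplicate_sysctl_keys /etc/sysctl.conf
```

With the settings shown above it would print `fs.aio-max-nr`.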

systemctl stop avahi-daemon

systemctl disable avahi-daemon

systemctl stop avahi-dnsconfd

systemctl disable avahi-dnsconfd

-- Disable at boot

systemctl disable accounts-daemon.service

systemctl disable atd.service

systemctl disable avahi-daemon.service

systemctl disable avahi-daemon.socket

systemctl disable bluetooth.service

systemctl disable brltty.service

--systemctl disable chronyd.service

systemctl disable colord.service

systemctl disable cups.service

systemctl disable debug-shell.service

systemctl disable firewalld.service

systemctl disable gdm.service

systemctl disable ksmtuned.service

systemctl disable ktune.service

systemctl disable libstoragemgmt.service

systemctl disable mcelog.service

systemctl disable ModemManager.service

--systemctl disable ntpd.service

systemctl disable postfix.service


systemctl disable rhsmcertd.service

systemctl disable rngd.service

systemctl disable rpcbind.service

systemctl disable rtkit-daemon.service

systemctl disable tuned.service

systemctl disable upower.service

systemctl disable wpa_supplicant.service

-- Stop the services

systemctl stop accounts-daemon.service

systemctl stop atd.service

systemctl stop avahi-daemon.service

systemctl stop avahi-daemon.socket

systemctl stop bluetooth.service

systemctl stop brltty.service

--systemctl stop chronyd.service

systemctl stop colord.service

systemctl stop cups.service

systemctl stop debug-shell.service

systemctl stop firewalld.service

systemctl stop gdm.service

systemctl stop ksmtuned.service

systemctl stop ktune.service

systemctl stop libstoragemgmt.service

systemctl stop mcelog.service

systemctl stop ModemManager.service

--systemctl stop ntpd.service

systemctl stop postfix.service


systemctl stop rhsmcertd.service

systemctl stop rngd.service

systemctl stop rpcbind.service

systemctl stop rtkit-daemon.service

systemctl stop tuned.service

systemctl stop upower.service

systemctl stop wpa_supplicant.service

chrony and ntp are left running for now.

-- Set LoginGraceTime to 0 so that the login wait timeout is unlimited

cp /etc/ssh/sshd_config /etc/ssh/sshd_config_`date +"%Y%m%d_%H%M%S"` && sed -i '/#LoginGraceTime 2m/ s/#LoginGraceTime 2m/LoginGraceTime 0/' /etc/ssh/sshd_config && grep LoginGraceTime /etc/ssh/sshd_config

-- Speed up SSH logins by disabling DNS lookups

cp /etc/ssh/sshd_config /etc/ssh/sshd_config_`date +"%Y%m%d_%H%M%S"` && sed -i '/#UseDNS yes/ s/#UseDNS yes/UseDNS no/' /etc/ssh/sshd_config && grep UseDNS /etc/ssh/sshd_config
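The two `sed` commands above only match the exact default lines (`#LoginGraceTime 2m`, `#UseDNS yes`). As a sketch under that caveat, the helper below sets an option whether it is commented out, already set, or absent. The name `set_sshd_option` is hypothetical, and GNU `sed -i` is assumed.

```shell
# Hypothetical helper: set KEY to VALUE in an sshd_config-style file.
# Usage: set_sshd_option /etc/ssh/sshd_config UseDNS no
set_sshd_option() {
    file=$1
    key=$2
    value=$3
    if grep -Eq "^#?[[:space:]]*${key}[[:space:]]" "$file"; then
        # Replace the existing (possibly commented-out) line.
        sed -i -E "s|^#?[[:space:]]*${key}[[:space:]].*|${key} ${value}|" "$file"
    else
        # The option is not mentioned at all: append it.
        echo "${key} ${value}" >> "$file"
    fi
}
```

If the file contains both a commented and an uncommented line for the same key, both are rewritten, so check the result with `grep` as in the commands above. sshd must be restarted for the change to take effect.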

4.13 HugePages configuration (optional)

HugePages conflict with AMM.

If you have a lot of RAM and a large SGA, HugePages are critical for Oracle Database performance on Linux.

grep Hugepagesize /proc/meminfo

Hugepagesize: 2048 kB

chmod 755 hugepages_settings.sh

The script must be run while the database is up.

Script:

cat hugepages_settings.sh

#!/bin/bash

#

# hugepages_settings.sh

#

# Linux bash script to compute values for the

# recommended HugePages/HugeTLB configuration

# on Oracle Linux

#

# Note: This script does calculation for all shared memory

# segments available when the script is run, no matter it

# is an Oracle RDBMS shared memory segment or not.

#

# This script is provided by Doc ID 401749.1 from My Oracle Support

# http://support.oracle.com

# Welcome text

echo "

This script is provided by Doc ID 401749.1 from My Oracle Support

(http://support.oracle.com) where it is intended to compute values for

the recommended HugePages/HugeTLB configuration for the current shared

memory segments on Oracle Linux. Before proceeding with the execution please note following:

* For ASM instance, it needs to configure ASMM instead of AMM.

* The 'pga_aggregate_target' is outside the SGA and

you should accommodate this while calculating the overall size.

* In case you changes the DB SGA size,

as the new SGA will not fit in the previous HugePages configuration,

it had better disable the whole HugePages,

start the DB with new SGA size and run the script again.

And make sure that:

* Oracle Database instance(s) are up and running

* Oracle Database 11g Automatic Memory Management (AMM) is not setup

(See Doc ID 749851.1)

* The shared memory segments can be listed by command:

# ipcs -m

Press Enter to proceed..."

read

# Check for the kernel version

KERN=`uname -r | awk -F. '{ printf("%d.%d\n",$1,$2); }'`

# Find out the HugePage size

HPG_SZ=`grep Hugepagesize /proc/meminfo | awk '{print $2}'`

if [ -z "$HPG_SZ" ];then

echo "The hugepages may not be supported in the system where the script is being executed."

exit 1

fi

# Initialize the counter

NUM_PG=0

# Cumulative number of pages required to handle the running shared memory segments

for SEG_BYTES in `ipcs -m | cut -c44-300 | awk '{print $1}' | grep "[0-9][0-9]*"`

do

MIN_PG=`echo "$SEG_BYTES/($HPG_SZ*1024)" | bc -q`

if [ $MIN_PG -gt 0 ]; then

NUM_PG=`echo "$NUM_PG+$MIN_PG+1" | bc -q`

fi

done

RES_BYTES=`echo "$NUM_PG * $HPG_SZ * 1024" | bc -q`

# An SGA less than 100MB does not make sense

# Bail out if that is the case

if [ $RES_BYTES -lt 100000000 ]; then

echo "***********"

echo "** ERROR **"

echo "***********"

echo "Sorry! There are not enough total of shared memory segments allocated for

HugePages configuration. HugePages can only be used for shared memory segments

that you can list by command:

# ipcs -m

of a size that can match an Oracle Database SGA. Please make sure that:

* Oracle Database instance is up and running

* Oracle Database 11g Automatic Memory Management (AMM) is not configured"

exit 1

fi

# Finish with results

case $KERN in

'2.4') HUGETLB_POOL=`echo "$NUM_PG*$HPG_SZ/1024" | bc -q`;

echo "Recommended setting: vm.hugetlb_pool = $HUGETLB_POOL" ;;

'2.6') echo "Recommended setting: vm.nr_hugepages = $NUM_PG" ;;

'3.8') echo "Recommended setting: vm.nr_hugepages = $NUM_PG" ;;

'3.10') echo "Recommended setting: vm.nr_hugepages = $NUM_PG" ;;

'4.1') echo "Recommended setting: vm.nr_hugepages = $NUM_PG" ;;

'4.14') echo "Recommended setting: vm.nr_hugepages = $NUM_PG" ;;

'4.18') echo "Recommended setting: vm.nr_hugepages = $NUM_PG" ;;

'5.4') echo "Recommended setting: vm.nr_hugepages = $NUM_PG" ;;

*) echo "Kernel version $KERN is not supported by this script (yet). Exiting." ;;

esac

# End

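Calculate the number of pages needed:

A huge page on Linux is 2 MB, and the total huge-page memory should be slightly larger than sga_max_size. For example, with sga_max_size=3g: hugepages > (3*1024)/2 = 1536

Add the following to sysctl.conf: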

[root@ node01 ~]$ vi /etc/sysctl.conf

vm.nr_hugepages = 1550

Add the following to /etc/security/limits.conf (slightly larger than sga_max_size; the official recommendation is 90% of total physical memory, in kB):

[root@ node01 ~]$ vi /etc/security/limits.conf

oracle soft memlock 3400000

oracle hard memlock 3400000

# vim /etc/sysctl.conf

vm.nr_hugepages = xxxx

# sysctl -p

vim /etc/security/limits.conf

oracle soft memlock xxxxxxxxxxx

oracle hard memlock xxxxxxxxxxx
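To fill in the `xxxx` placeholders, the page count and the smallest workable memlock value can be derived from the SGA size. This is a sketch with hypothetical function names. It gives lower bounds only, and the text above recommends values somewhat larger.

```shell
# Hypothetical helper: minimum number of huge pages needed to hold an SGA.
# Usage: hugepages_for_sga SGA_MB HUGEPAGE_MB
hugepages_for_sga() {
    echo $(( ($1 + $2 - 1) / $2 ))    # ceiling division
}

# Hypothetical helper: memlock (kB) needed to lock that many huge pages.
# Usage: memlock_kb_for_pages PAGES HUGEPAGE_KB
memlock_kb_for_pages() {
    echo $(( $1 * $2 ))
}
```

A 3 GB SGA with 2 MB pages needs at least 1536 pages, and 1536 pages of 2048 kB correspond to a memlock of 3145728 kB.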

cat >> /etc/pam.d/login <<EOF

session required pam_limits.so

EOF

cat >> /etc/security/limits.conf <<EOF

grid soft nproc 2047

grid hard nproc 16384

grid soft nofile 1024

grid hard nofile 65536

grid soft stack 10240

grid hard stack 32768

oracle soft nproc 2047

oracle hard nproc 16384

oracle soft nofile 1024

oracle hard nofile 65536

oracle soft stack 10240

oracle hard stack 32768

oracle soft memlock 3145728

oracle hard memlock 3145728

EOF

ntp

chrony

-x

Synchronize the system time on all nodes:

-- Verify that the time and time zone are correct

date

-- Stop the chrony service and move its configuration file aside (ctss will be used afterwards)

systemctl list-unit-files|grep chronyd

systemctl status chronyd

systemctl disable chronyd

systemctl stop chronyd

mv /etc/chrony.conf /etc/chrony.conf_bak

mv /etc/ntp.conf /etc/ntp.conf_bak

systemctl list-unit-files|grep -E 'ntp|chrony'

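-- In this lab environment neither NTP nor chrony is used, so Oracle automatically uses its own ctss service

1) Edit /etc/ntp.conf on all nodes

[Command] vi /etc/ntp.conf

[Content]

# IP address of the current node

restrict 192.168.6.3 nomodify notrap nopeer noquery

# Gateway of the cluster's subnet, with its netmask (Genmask)

restrict 192.168.6.2 mask 255.255.255.0 nomodify notrap

2) Pick one master node and edit its /etc/ntp.conf

[Command] vi /etc/ntp.conf

[Content] In the server section, add the following lines and comment out the existing server 0 ~ n entries

server 127.127.1.0

fudge 127.127.1.0 stratum 10

3) On every node other than the master, continue editing /etc/ntp.conf

[Command] vi /etc/ntp.conf

[Content] In the server section, add the following lines so that server points to the master node.

server 192.168.6.3

fudge 192.168.6.3 stratum 10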

Node 1

echo

systemctl status ntpd

systemctl stop ntpd

systemctl stop chronyd

systemctl disable chronyd

sed -i 's/OPTIONS="-g"/OPTIONS="-g -x"/' /etc/sysconfig/ntpd

vim /etc/ntp.conf

Comment out the server lines:

sed '/^server/s/^/#/' /etc/ntp.conf -i

server 127.127.1.0

fudge 127.127.1.0 stratum 10

# Hosts on local network are less restricted.

restrict 192.168.245.0 mask 255.255.255.0 nomodify notrap

Change the subnet to 192.168.245.0 and uncomment the line.

# Use public servers from the pool.ntp.org project.

# Please consider joining the pool (http://www.pool.ntp.org/join.html).

#server 0.rhel.pool.ntp.org iburst

#server 1.rhel.pool.ntp.org iburst

#server 2.rhel.pool.ntp.org iburst

#server 3.rhel.pool.ntp.org iburst

server 127.127.1.0

fudge 127.127.1.0 stratum 10

#broadcast 192.168.1.255 autokey # broadcast server

#broadcastclient # broadcast client

#broadcast 224.0.1.1 autokey # multicast server

#multicastclient 224.0.1.1 # multicast client

#manycastserver 239.255.254.254 # manycast server

#manycastclient 239.255.254.254 autokey # manycast client

# Enable public key cryptography.

#crypto

includefile /etc/ntp/crypto/pw

# Key file containing the keys and key identifiers used when operating

# with symmetric key cryptography.

keys /etc/ntp/keys

---

Change the subnet to 192.168.245.0.

systemctl start ntpd

systemctl enable ntpd

echo

Node 2

echo

systemctl stop ntpd

systemctl stop chronyd

systemctl disable chronyd

sed -i 's/OPTIONS="-g"/OPTIONS="-g -x"/' /etc/sysconfig/ntpd

sed -i 's/^server/#server/g' /etc/ntp.conf

sed -i '$a server 192.168.245.141 iburst' /etc/ntp.conf

systemctl start ntpd

systemctl enable ntpd

echo

The ntp configuration file /etc/sysconfig/ntpd has already been changed from the default OPTIONS="-g" to OPTIONS="-x -g", so why does the check `$ cluvfy comp clocksync -n all -verbose` still fail?

The MOS note "Linux: CVU NTP Prerequisite check fails with PRVF-7590, PRVG-1024 and PRVF-5415 (Doc ID 2126223.1)" explains it: if /var/run/ntpd.pid does not exist on the server, the CVU command fails. This is due to unpublished bug 19427746, which has been fixed in Oracle 12.2.
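Before running cluvfy, it can help to confirm that the slew option really is in /etc/sysconfig/ntpd, in whichever order the flags appear. This is a small sketch. The name `has_slew_option` is hypothetical, and the file is assumed to use a double-quoted `OPTIONS="..."` line as shown in the `sed` commands above.

```shell
# Hypothetical helper: succeed if the OPTIONS line contains -x as a separate flag.
has_slew_option() {
    grep -Eq '^OPTIONS="([^"]* )?-x( [^"]*)?"' "$1"
}

# Example call (commented out):
# has_slew_option /etc/sysconfig/ntpd && echo "slew mode enabled"
```

This only checks the configuration file. It does not verify that /var/run/ntpd.pid exists.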

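A minimal installation does not include the chrony package.

Install it yourself: yum -y install chrony

Configuration file walkthrough

$ cat /etc/chrony.conf

# Use public servers from the pool.ntp.org project. Each entry starts with "server"; in principle you can add as many time servers as you like.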

# Please consider joining the pool (http://www.pool.ntp.org/join.html).

server 0.centos.pool.ntp.org iburst

server 1.centos.pool.ntp.org iburst

server 2.centos.pool.ntp.org iburst

server 3.centos.pool.ntp.org iburst

# Record the rate at which the system clock gains or loses time in a file, so that the best compensation can be applied after a reboot.

driftfile /var/lib/chrony/drift

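# chronyd normally slews the clock, slowing it down or speeding it up as needed.

# In some cases the system clock drifts so far that slewing would take too long.

# This directive makes chronyd step the system clock when the offset exceeds a threshold,

# but only during the first few clock updates after chronyd starts (a negative limit removes that restriction).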

makestep 1.0 3

# Enable a kernel mode in which the system time is copied to the real-time clock (RTC) every 11 minutes.

rtcsync

# Enable hardware timestamping on all interfaces that support it.

# Hardware timestamping is enabled with the hwtimestamp directive

#hwtimestamp eth0

#hwtimestamp eth1

#hwtimestamp *

# Increase the minimum number of selectable sources required to adjust

# the system clock.

#minsources 2

# Specify a host, subnet, or network that is allowed or denied NTP access to this machine when it acts as a time server

#allow 192.168.0.0/16

#deny 192.168/16

# Serve time even if not synchronized to a time source.

local stratum 10

# Specify the file containing the NTP authentication keys.

#keyfile /etc/chrony.keys

# Specify the directory for log files.

logdir /var/log/chrony

# Select which information is logged.

#log measurements statistics tracking

RAC1:

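1. First comment out the server lines:

sed '/^server/s/^/#/' /etc/chrony.conf -i

2. Edit the configuration:

# vi /etc/chrony.conf

# Serve time even if not synchronized to a time source. With this enabled, the node keeps serving time to its clients even when it is not synchronized with an external source.

local stratum 10

# allow marks the subnets or hosts permitted to synchronize. The example below allows clients in 192.168.245.0/24; 127/8 would be the local host synchronizing with itself.

allow 192.168.245.0/24

# Synchronize with the local host

server 127.0.0.1 iburst

# Alternatively, allow clients from all subnets

allow

local stratum 10

3. Restart the service: systemctl restart chronyd.service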

RAC2:

1. First comment out the server lines:

sed '/^server/s/^/#/' /etc/chrony.conf -i

2. Edit the configuration:

# vi /etc/chrony.conf

# Synchronize with RAC1

server 192.168.245.141 iburst

Restart the time synchronization service:

systemctl restart chronyd.service

systemctl enable chronyd.service

Show the time synchronization sources:

# chronyc sources -v

chronyc sourcestats -v

Check whether the NTP servers are online:

chronyc activity -v

Show detailed NTP tracking information:

chronyc tracking -v

groupadd -g 54321 oinstall

groupadd -g 54322 dba

groupadd -g 54323 oper

groupadd -g 54324 backupdba

groupadd -g 54325 dgdba

groupadd -g 54326 kmdba

groupadd -g 54327 asmdba

groupadd -g 54328 asmoper

groupadd -g 54329 asmadmin

groupadd -g 54330 racdba

useradd -g oinstall -G dba,oper,backupdba,dgdba,kmdba,asmdba,racdba -u 10000 oracle

useradd -g oinstall -G dba,asmdba,asmoper,asmadmin,racdba -u 10001 grid

echo "oracle" | passwd --stdin oracle

echo "grid" | passwd --stdin grid

mkdir -p /u01/app/19.3.0/grid

mkdir -p /u01/app/grid

mkdir -p /u01/app/oracle/product/19.3.0/dbhome_1

chown -R grid:oinstall /u01

chown -R oracle:oinstall /u01/app/oracle

chmod -R 775 /u01/

cat >> /home/grid/.bash_profile << "EOF"

################ add (node 1) #########################

umask 022

export TMP=/tmp

export TMPDIR=$TMP

export ORACLE_BASE=/u01/app/grid

export ORACLE_HOME=/u01/app/19.3.0/grid

export TNS_ADMIN=$ORACLE_HOME/network/admin

export NLS_LANG=AMERICAN_AMERICA.AL32UTF8

export LD_LIBRARY_PATH=$ORACLE_HOME/lib:/lib:/usr/lib

export ORACLE_SID=+ASM1

export PATH=/usr/sbin:$PATH

export PATH=$ORACLE_HOME/bin:$ORACLE_HOME/OPatch:$PATH

alias sas='sqlplus / as sysasm'

export PS1="[\`whoami\`@\`hostname\`:"'$PWD]\$ '

EOF

cat >> /home/grid/.bash_profile << "EOF"

################ add (node 2) #########################

umask 022

export TMP=/tmp

export TMPDIR=$TMP

export ORACLE_BASE=/u01/app/grid

export ORACLE_HOME=/u01/app/19.3.0/grid

export TNS_ADMIN=$ORACLE_HOME/network/admin

export NLS_LANG=AMERICAN_AMERICA.AL32UTF8

export LD_LIBRARY_PATH=$ORACLE_HOME/lib:/lib:/usr/lib

export ORACLE_SID=+ASM2

export PATH=/usr/sbin:$PATH

export PATH=$ORACLE_HOME/bin:$ORACLE_HOME/OPatch:$PATH

alias sas='sqlplus / as sysasm'

export PS1="[\`whoami\`@\`hostname\`:"'$PWD]\$ '

EOF

cat >> /home/oracle/.bash_profile << "EOF"

################ add (node 1) #########################

umask 022

export TMP=/tmp

export TMPDIR=$TMP

export NLS_LANG=AMERICAN_AMERICA.AL32UTF8

export ORACLE_BASE=/u01/app/oracle

export ORACLE_HOME=$ORACLE_BASE/product/19.3.0/dbhome_1

export ORACLE_HOSTNAME=oracle19c-rac1

export TNS_ADMIN=$ORACLE_HOME/network/admin

export LD_LIBRARY_PATH=$ORACLE_HOME/lib:/lib:/usr/lib

export ORACLE_SID=orcl1

export PATH=/usr/sbin:$PATH

export PATH=$ORACLE_HOME/bin:$ORACLE_HOME/OPatch:$PATH

alias sas='sqlplus / as sysdba'

export PS1="[\`whoami\`@\`hostname\`:"'$PWD]\$ '

EOF

cat >> /home/oracle/.bash_profile << "EOF"

################ add (node 2) #########################

umask 022

export TMP=/tmp

export TMPDIR=$TMP

export NLS_LANG=AMERICAN_AMERICA.AL32UTF8

export ORACLE_BASE=/u01/app/oracle

export ORACLE_HOME=$ORACLE_BASE/product/19.3.0/dbhome_1

export ORACLE_HOSTNAME=oracle19c-rac2

export TNS_ADMIN=$ORACLE_HOME/network/admin

export LD_LIBRARY_PATH=$ORACLE_HOME/lib:/lib:/usr/lib

export ORACLE_SID=orcl2

export PATH=/usr/sbin:$PATH

export PATH=$ORACLE_HOME/bin:$ORACLE_HOME/OPatch:$PATH

alias sas='sqlplus / as sysdba'

export PS1="[\`whoami\`@\`hostname\`:"'$PWD]\$ '

EOF
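The profiles for the two nodes differ only in the instance number (+ASM1/+ASM2, orcl1/orcl2) and the host name. As a sketch, the SID can be derived from the host name instead of being typed per node. This assumes host names end in the node number, as oracle19c-rac1 and oracle19c-rac2 do. The function names are hypothetical.

```shell
# Hypothetical helper: extract the trailing digits of a host name (oracle19c-rac2 -> 2).
node_number() {
    printf '%s\n' "$1" | sed -n 's/.*[^0-9]\([0-9][0-9]*\)$/\1/p'
}

# Hypothetical helper: build an instance SID from a prefix and a host name.
# Usage: instance_sid orcl "$(hostname -s)"
instance_sid() {
    echo "$1$(node_number "$2")"
}
```

In a profile this could be written as `export ORACLE_SID=$(instance_sid orcl "$(hostname -s)")`, provided the functions are defined earlier in the same file.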

## Install multipath

yum install -y device-mapper*

mpathconf --enable --with_multipathd y

## Look up the scsi_id of each shared disk

/usr/lib/udev/scsi_id -g -u /dev/sdb

/usr/lib/udev/scsi_id -g -u /dev/sdc

/usr/lib/udev/scsi_id -g -u /dev/sdd

/usr/lib/udev/scsi_id -g -u /dev/sde

/usr/lib/udev/scsi_id -g -u /dev/sdf

## Configure multipath: wwid is the scsi_id obtained above and alias is user-defined; three OCR disks and two DATA disks are configured here

defaults {

user_friendly_names yes

}

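The defaults block above can conflict with what is already in the file, so back up /etc/multipath.conf and comment out all of its existing lines first:

cp /etc/multipath.conf /etc/multipath.conf.bak

sed -i '/^/s/^/#/' /etc/multipath.conf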

cat <<EOF>> /etc/multipath.conf

defaults {

user_friendly_names yes

}

blacklist {

devnode "^sda"

}

multipaths {

multipath {

wwid "36000c29a961f7eb1208473713ca7b007"

alias asm_ocr01

}

multipath {

wwid "36000c29f87ed61db71c60bd3d6e737dc"

alias asm_ocr02

}

multipath {

wwid "36000c297c53b91255620471a6deb6853"

alias asm_ocr03

}

multipath {

wwid "36000c29e516572af5c105d12e8c0db12"

alias asm_data01

}

multipath {

wwid "36000c29d6be6679787ceadf23b29b180"

alias asm_data02

}

}

EOF
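Typing five `multipath { ... }` stanzas by hand invites copy-and-paste mistakes. As a sketch, the helpers below generate the `multipaths` section from "wwid alias" pairs read from standard input. The function names are hypothetical, and the indentation is cosmetic.

```shell
# Hypothetical helper: print one multipath stanza for a WWID and an alias.
multipath_stanza() {
    printf '    multipath {\n        wwid "%s"\n        alias %s\n    }\n' "$1" "$2"
}

# Hypothetical helper: read "WWID ALIAS" pairs from stdin and print a multipaths section.
multipaths_section() {
    echo "multipaths {"
    while read -r wwid name; do
        # Ignore empty lines.
        [ -n "$wwid" ] && multipath_stanza "$wwid" "$name"
    done
    echo "}"
}

# Example call (commented out):
# printf '%s\n' "36000c29a961f7eb1208473713ca7b007 asm_ocr01" | multipaths_section
```

The output can be reviewed and then appended to /etc/multipath.conf in place of the hand-written block.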

Activate the multipath devices:

multipath -F

multipath -v2

multipath -ll

cd /dev/mapper

for i in asm_*; do

printf "%s %s\n" "$i" "$(udevadm info --query=all --name=/dev/mapper/"$i" | grep -i dm_uuid)" >>/dev/mapper/udev_info

done

while read -r line; do

dm_uuid=$(echo "$line" | awk -F'=' '{print $2}')

disk_name=$(echo "$line" | awk '{print $1}')

echo "KERNEL==\"dm-*\",ENV{DM_UUID}==\"${dm_uuid}\",SYMLINK+=\"${disk_name}\",OWNER=\"grid\",GROUP=\"asmadmin\",MODE=\"0660\"" >>/etc/udev/rules.d/99-oracle-asmdevices.rules

done < /dev/mapper/udev_info

## Reload udev

udevadm control --reload-rules

udevadm trigger --type=devices

ll /dev/asm*

[root@oracle19c-rac2 dev]# more /etc/udev/rules.d/99-oracle-asmdevices.rules

KERNEL=="dm-*",ENV{DM_UUID}=="mpath-36000c29e516572af5c105d12e8c0db12",SYMLINK+="asm_data01",OWNER="grid",GROUP="asmadmin",MODE="0660"

KERNEL=="dm-*",ENV{DM_UUID}=="mpath-36000c29d6be6679787ceadf23b29b180",SYMLINK+="asm_data02",OWNER="grid",GROUP="asmadmin",MODE="0660"

KERNEL=="dm-*",ENV{DM_UUID}=="mpath-36000c29a961f7eb1208473713ca7b007",SYMLINK+="asm_ocr01",OWNER="grid",GROUP="asmadmin",MODE="0660"

KERNEL=="dm-*",ENV{DM_UUID}=="mpath-36000c29f87ed61db71c60bd3d6e737dc",SYMLINK+="asm_ocr02",OWNER="grid",GROUP="asmadmin",MODE="0660"

KERNEL=="dm-*",ENV{DM_UUID}=="mpath-36000c297c53b91255620471a6deb6853",SYMLINK+="asm_ocr03",OWNER="grid",GROUP="asmadmin",MODE="0660"

[root@oracle19c-rac2 dev]#
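The loop above appends to the rules file on every run, so rerunning it duplicates rules. As a sketch, rule generation can be separated from file writing, so the output can be inspected or redirected with `>` instead of `>>`. The function name `asm_udev_rule` is hypothetical. The rule text mirrors the lines shown above.

```shell
# Hypothetical helper: print one udev rule for a multipath device.
# Usage: asm_udev_rule DM_UUID SYMLINK_NAME
asm_udev_rule() {
    printf 'KERNEL=="dm-*",ENV{DM_UUID}=="%s",SYMLINK+="%s",OWNER="grid",GROUP="asmadmin",MODE="0660"\n' "$1" "$2"
}

# Example call (commented out):
# asm_udev_rule mpath-36000c29e516572af5c105d12e8c0db12 asm_data01
```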

[root@oracle19c-rac2 mapper]# ll

total 4

lrwxrwxrwx 1 root root 7 Jul 28 15:44 asm_data01 -> ../dm-7

lrwxrwxrwx 1 root root 7 Jul 28 15:44 asm_data02 -> ../dm-8

lrwxrwxrwx 1 root root 7 Jul 28 15:44 asm_ocr01 -> ../dm-4

lrwxrwxrwx 1 root root 7 Jul 28 15:44 asm_ocr02 -> ../dm-5

lrwxrwxrwx 1 root root 7 Jul 28 15:44 asm_ocr03 -> ../dm-6

crw------- 1 root root 10, 236 Jul 28 15:44 control

lrwxrwxrwx 1 root root 7 Jul 28 15:44 ol-root -> ../dm-0

lrwxrwxrwx 1 root root 7 Jul 28 15:44 ol-swap -> ../dm-1

lrwxrwxrwx 1 root root 7 Jul 28 15:44 ol-tmp -> ../dm-2

lrwxrwxrwx 1 root root 7 Jul 28 15:44 ol-u01 -> ../dm-3

-rw-r--r-- 1 root root 307 Jul 28 15:44 udev_info

[root@oracle19c-rac2 mapper]# ll /dev/dm*

brw-rw---- 1 root disk 252, 0 Jul 28 15:44 /dev/dm-0

brw-rw---- 1 root disk 252, 1 Jul 28 15:44 /dev/dm-1

brw-rw---- 1 root disk 252, 2 Jul 28 15:44 /dev/dm-2

brw-rw---- 1 root disk 252, 3 Jul 28 15:44 /dev/dm-3

brw-rw---- 1 grid asmadmin 252, 4 Jul 28 15:44 /dev/dm-4

brw-rw---- 1 grid asmadmin 252, 5 Jul 28 15:44 /dev/dm-5

brw-rw---- 1 grid asmadmin 252, 6 Jul 28 15:44 /dev/dm-6

brw-rw---- 1 grid asmadmin 252, 7 Jul 28 15:44 /dev/dm-7

brw-rw---- 1 grid asmadmin 252, 8 Jul 28 15:44 /dev/dm-8

crw-rw---- 1 root audio 14, 9 Jul 28 15:44 /dev/dmmidi

for i in b c d e ;

do

echo "KERNEL==\"sd*\", ENV{DEVTYPE}==\"disk\", SUBSYSTEM==\"block\", PROGRAM==\"/lib/udev/scsi_id -g -u -d \$devnode\", RESULT==\"`/lib/udev/scsi_id -g -u -d /dev/sd$i`\", SYMLINK+=\"asm-disk$i\", OWNER=\"grid\", GROUP=\"asmadmin\", MODE=\"0660\"" >> /etc/udev/rules.d/99-oracle-asmdevices.rules

done

# Reload the udev rules

/sbin/udevadm control --reload

# Trigger change events, then check the new device names

/sbin/udevadm trigger --type=devices --action=change

# Debug the udev rules

/sbin/udevadm test /sys/block/*

Generate the udev configuration with a script:

for i in b c d e f;

do

echo "KERNEL==\"sd*\",ENV{DEVTYPE}==\"disk\",SUBSYSTEM==\"block\",PROGRAM==\"/usr/lib/udev/scsi_id -g -u -d \$devnode\",RESULT==\"`/usr/lib/udev/scsi_id -g -u /dev/sd$i`\", RUN+=\"/bin/sh -c 'mknod /dev/asmdisk$i b \$major \$minor; chown grid:asmadmin /dev/asmdisk$i; chmod 0660 /dev/asmdisk$i'\""

done

Write the script output to /etc/udev/rules.d/99-oracle-asmdevices.rules.

[root@rac1 software]# vim /etc/udev/rules.d/99-oracle-asmdevices.rules

[root@rac1 software]# cat /etc/udev/rules.d/99-oracle-asmdevices.rules

KERNEL=="sd*",ENV{DEVTYPE}=="disk",SUBSYSTEM=="block",PROGRAM=="/usr/lib/udev/scsi_id -g -u -d $devnode",RESULT=="36000c29cf5ef7bd3907344106bcca59b", RUN+="/bin/sh -c 'mknod /dev/asmdiskb b $major $minor; chown grid:asmadmin /dev/asmdiskb; chmod 0660 /dev/asmdiskb'"

KERNEL=="sd*",ENV{DEVTYPE}=="disk",SUBSYSTEM=="block",PROGRAM=="/usr/lib/udev/scsi_id -g -u -d $devnode",RESULT=="36000c293ef1e02395716263ee17e8926", RUN+="/bin/sh -c 'mknod /dev/asmdiskc b $major $minor; chown grid:asmadmin /dev/asmdiskc; chmod 0660 /dev/asmdiskc'"

KERNEL=="sd*",ENV{DEVTYPE}=="disk",SUBSYSTEM=="block",PROGRAM=="/usr/lib/udev/scsi_id -g -u -d $devnode",RESULT=="36000c29a790b610473d4800954053180", RUN+="/bin/sh -c 'mknod /dev/asmdiskd b $major $minor; chown grid:asmadmin /dev/asmdiskd; chmod 0660 /dev/asmdiskd'"

KERNEL=="sd*",ENV{DEVTYPE}=="disk",SUBSYSTEM=="block",PROGRAM=="/usr/lib/udev/scsi_id -g -u -d $devnode",RESULT=="36000c292fe31e2c5ec2cf689791c09d7", RUN+="/bin/sh -c 'mknod /dev/asmdiske b $major $minor; chown grid:asmadmin /dev/asmdiske; chmod 0660 /dev/asmdiske'"

KERNEL=="sd*",ENV{DEVTYPE}=="disk",SUBSYSTEM=="block",PROGRAM=="/usr/lib/udev/scsi_id -g -u -d $devnode",RESULT=="36000c29c87abcc922d24e7bc0e2978a7", RUN+="/bin/sh -c 'mknod /dev/asmdiskf b $major $minor; chown grid:asmadmin /dev/asmdiskf; chmod 0660 /dev/asmdiskf'"

KERNEL=="sd*",ENV{DEVTYPE}=="disk",SUBSYSTEM=="block",PROGRAM=="/usr/lib/udev/scsi_id -g -u -d $devnode",RESULT=="36000c297ede8a5b0a407451699668920", RUN+="/bin/sh -c 'mknod /dev/asmdiskg b $major $minor; chown grid:asmadmin /dev/asmdiskg; chmod 0660 /dev/asmdiskg'"

Apply the udev rules: /sbin/udevadm trigger --type=devices --action=change

or

KERNEL=="sdb", SUBSYSTEM=="block", PROGRAM=="/usr/lib/udev/scsi_id -g -u -d /dev/$name", RESULT=="360003ff44dc75adc8cec9cce0033f402", OWNER="grid", GROUP="asmadmin", MODE="0660"

KERNEL=="sdc", SUBSYSTEM=="block", PROGRAM=="/usr/lib/udev/scsi_id -g -u -d /dev/$name", RESULT=="360003ff44dc75adc9ba684d395391bae", OWNER="grid", GROUP="asmadmin", MODE="0660"

ll /dev/asm*

Use Oracle ASM command line tool (ASMCMD) to provision the disk devices

for use with Oracle ASM Filter Driver.

[root@19c-node1 grid]# asmcmd afd_label DATA1 /dev/sdb --init

[root@19c-node1 grid]# asmcmd afd_label DATA2 /dev/sdc --init

[root@19c-node1 grid]# asmcmd afd_label DATA3 /dev/sdd --init

[root@19c-node1 grid]# asmcmd afd_lslbl /dev/sdb

[root@19c-node1 grid]# asmcmd afd_lslbl /dev/sdc

[root@19c-node1 grid]# asmcmd afd_lslbl /dev/sdd

Notes:

# cat /sys/block/${ASM_DISK}/queue/scheduler

noop [deadline] cfq

If the default disk I/O scheduler is not Deadline, then set it using a rules file:

1. Using a text editor, create a UDEV rules file for the Oracle ASM devices:

# vi /etc/udev/rules.d/60-oracle-schedulers.rules

2. Add the following line to the rules file and save it:

ACTION=="add|change", KERNEL=="sd[a-z]", ATTR{queue/rotational}=="0", ATTR{queue/scheduler}="deadline"

3. On clustered systems, copy the rules file to all other nodes on the cluster. For

example:

$ scp 60-oracle-schedulers.rules root@node2:/etc/udev/rules.d/

4. Load the rules file and restart the UDEV service. For example:

a. Oracle Linux and Red Hat Enterprise Linux

#udevadm control --reload-rules && udevadm trigger

Steps performed:

cat /etc/udev/rules.d/60-oracle-schedulers.rules

ACTION=="add|change", KERNEL=="dm-[0-9]*", ATTR{queue/rotational}=="0", ATTR{queue/scheduler}="deadline"

udevadm control --reload-rules && udevadm trigger

[root@oracle19c-rac1 dev]# cat /sys/block/

dm-0/ dm-1/ dm-2/ dm-3/ dm-4/ dm-5/ dm-6/ dm-7/ dm-8/ sda/ sdb/ sdc/ sdd/ sde/ sdf/ sr0/

[root@oracle19c-rac1 dev]# cat /sys/block/dm-4/queue/scheduler

[mq-deadline] kyber bfq none
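The active scheduler is the name inside square brackets, as in the outputs above (`noop [deadline] cfq`, `[mq-deadline] kyber bfq none`). As a sketch for scripted checks across several devices, the helper below extracts it. The name `active_scheduler` is hypothetical.

```shell
# Hypothetical helper: print the bracketed (active) entry of a scheduler line.
# Usage: active_scheduler "$(cat /sys/block/dm-4/queue/scheduler)"
active_scheduler() {
    printf '%s\n' "$1" | sed -n 's/.*\[\([^]]*\)\].*/\1/p'
}
```

A loop over /sys/block/dm-*/queue/scheduler can then compare each result against the expected value.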

[root@oracle19c-rac1 dev]#


###################################################################################

## Reboot the operating system to verify the changes

## Manual intervention required

###################################################################################

###################################################################################

## Review the changes

###################################################################################

echo "###################################################################################"

echo "Review the changes"

echo

echo "-----------------------------------------------------------------------------------"

echo

echo "/etc/selinux/config"

echo

cat /etc/selinux/config

echo

echo

echo "-----------------------------------------------------------------------------------"

echo

echo "/etc/sysconfig/network"

echo

cat /etc/sysconfig/network

echo

echo

echo "-----------------------------------------------------------------------------------"

echo

echo "/sys/kernel/mm/transparent_hugepage/enabled"

echo

cat /sys/kernel/mm/transparent_hugepage/enabled

echo

echo

echo "-----------------------------------------------------------------------------------"

echo

echo "/etc/hosts"

echo

cat /etc/hosts

echo

echo

echo "-----------------------------------------------------------------------------------"

echo

echo "/etc/ntp.conf"

echo

cat /etc/ntp.conf

echo

echo

echo "-----------------------------------------------------------------------------------"

echo

echo "/etc/sysctl.conf"

echo

cat /etc/sysctl.conf

echo

echo

echo "-----------------------------------------------------------------------------------"

echo

echo "/etc/security/limits.conf"

echo

cat /etc/security/limits.conf

echo

echo

echo "-----------------------------------------------------------------------------------"

echo

echo "/etc/pam.d/login"

echo

cat /etc/pam.d/login

echo

echo

echo "-----------------------------------------------------------------------------------"

echo

echo "/etc/profile"

echo

cat /etc/profile

echo

echo

echo "-----------------------------------------------------------------------------------"

echo

echo "/home/grid/.bash_profile"

echo

cat /home/grid/.bash_profile

echo

echo

echo "-----------------------------------------------------------------------------------"

echo

echo "/home/oracle/.bash_profile"

echo

cat /home/oracle/.bash_profile

echo

echo

echo "--------------------------------systemctl------------------------------------------"

echo

systemctl status firewalld

echo

systemctl status avahi-daemon

echo

systemctl status nscd

echo

systemctl status ntpd

echo

echo

echo "-----------------------------------------------------------------------------------"

echo

rpm -q --qf '%{NAME}-%{VERSION}-%{RELEASE} (%{ARCH})\n' bc binutils compat-libcap1 compat-libstdc++-33 elfutils-libelf elfutils-libelf-devel fontconfig-devel glibc glibc-devel ksh libaio libaio-devel libX11 libXau libXi libXtst libXrender libXrender-devel libgcc libstdc++ libstdc++-devel libxcb make net-tools nfs-utils python python-configshell python-rtslib python-six targetcli smartmontools sysstat gcc-c++ nscd unixODBC

echo

echo "################ Please carefully verify all of the file contents above !!!!!!! ################"
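The script above repeats the same separator/echo/cat pattern for every file. As a rough sketch only (not the author's script), the same checks could be condensed with two small helpers. The names `show_file` and `dup_keys` are hypothetical and do not appear in the original `check.sh`. `dup_keys` is an extra convenience that lists keys assigned more than once in a `key = value` file, which is easy to miss by eye (for example, `fs.aio-max-nr` appears twice in the `/etc/sysctl.conf` output that follows).

```shell
#!/bin/sh
# Hypothetical helpers; these names are NOT part of the original check.sh.

# Print a separator, the file name, and the file contents.
# If the file is missing or unreadable, print a short note instead of failing.
show_file() {
    echo "-----------------------------------------------------------------------------------"
    echo
    echo "$1"
    echo
    if [ -r "$1" ]; then
        cat "$1"
    else
        echo "(not found: $1)"
    fi
    echo
}

# Print every key that is assigned more than once in a "key = value" file.
# Comment lines (starting with #) and empty lines are skipped.
dup_keys() {
    [ -r "$1" ] || return 0
    awk -F= '/^[^#]/ && NF > 1 {
        gsub(/[ \t]/, "", $1)          # strip whitespace around the key
        if (seen[$1]++) print $1       # printed on the 2nd and later occurrences
    }' "$1"
}

# Same files the original script prints one by one.
for f in /etc/sysconfig/network /etc/hosts /etc/sysctl.conf \
         /etc/security/limits.conf /etc/pam.d/login; do
    show_file "$f"
done

echo "Keys set more than once in /etc/sysctl.conf (the last value wins):"
dup_keys /etc/sysctl.conf
```

When a key is listed by `dup_keys`, only the last assignment in the file takes effect once `sysctl -p` loads it, so it is worth removing the stale line rather than leaving both.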

[root@oracle19c-rac1 ~]# vi check.sh

[root@oracle19c-rac1 ~]# sh check.sh

###################################################################################

Check the modified settings

-----------------------------------------------------------------------------------

/etc/selinux/config

# This file controls the state of SELinux on the system.

# SELINUX= can take one of these three values:

# enforcing - SELinux security policy is enforced.

# permissive - SELinux prints warnings instead of enforcing.

# disabled - No SELinux policy is loaded.

SELINUX=disabled

# SELINUXTYPE= can take one of three values:

# targeted - Targeted processes are protected,

# minimum - Modification of targeted policy. Only selected processes are protected.

# mls - Multi Level Security protection.

SELINUXTYPE=targeted

-----------------------------------------------------------------------------------

/etc/sysconfig/network

# Created by anaconda

NOZEROCONF=yes

-----------------------------------------------------------------------------------

/sys/kernel/mm/transparent_hugepage/enabled

always madvise [never]

-----------------------------------------------------------------------------------

/etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

#public ip

192.168.245.141 oracle19c-rac1

192.168.245.142 oracle19c-rac2

#private ip

192.168.28.141 oracle19c-rac1-priv

192.168.28.142 oracle19c-rac2-priv

#vip

192.168.245.143 oracle19c-rac1-vip

192.168.245.144 oracle19c-rac2-vip

#scanip

192.168.245.145 oracle19c-rac-scan1

-----------------------------------------------------------------------------------

/etc/ntp.conf

cat: /etc/ntp.conf: No such file or directory

-----------------------------------------------------------------------------------

/etc/sysctl.conf

# sysctl settings are defined through files in

# /usr/lib/sysctl.d/, /run/sysctl.d/, and /etc/sysctl.d/.

#

# Vendors settings live in /usr/lib/sysctl.d/.

# To override a whole file, create a new file with the same in

# /etc/sysctl.d/ and put new settings there. To override

# only specific settings, add a file with a lexically later

# name in /etc/sysctl.d/ and put new settings there.

#

# For more information, see sysctl.conf(5) and sysctl.d(5).

fs.aio-max-nr = 1048576

fs.file-max = 6815744

kernel.shmall = 2097152

kernel.shmmax = 4294967295

kernel.shmmni = 4096

kernel.sem = 250 32000 100 128

net.ipv4.ip_local_port_range = 9000 65500

net.core.rmem_default = 16777216

net.core.rmem_max = 16777216

net.core.wmem_max = 16777216

net.core.wmem_default = 16777216

fs.aio-max-nr = 6194304

vm.dirty_ratio=20

vm.dirty_background_ratio=3

vm.dirty_writeback_centisecs=100

vm.dirty_expire_centisecs=500

vm.swappiness=10

vm.min_free_kbytes=524288

net.core.netdev_max_backlog = 30000

net.core.netdev_budget = 600

vm.nr_hugepages = 1550

net.ipv4.conf.all.rp_filter = 2

net.ipv4.conf.default.rp_filter = 2

net.ipv4.ipfrag_time = 60

net.ipv4.ipfrag_low_thresh=6291456

net.ipv4.ipfrag_high_thresh = 8388608

-----------------------------------------------------------------------------------

/etc/security/limits.conf

# /etc/security/limits.conf

#

#This file sets the resource limits for the users logged in via PAM.

#It does not affect resource limits of the system services.

#

#Also note that configuration files in /etc/security/limits.d directory,

#which are read in alphabetical order, override the settings in this

#file in case the domain is the same or more specific.

#That means for example that setting a limit for wildcard domain here

#can be overriden with a wildcard setting in a config file in the

#subdirectory, but a user specific setting here can be overriden only

#with a user specific setting in the subdirectory.

#

#Each line describes a limit for a user in the form:

#

#<domain> <type> <item> <value>

#

#Where:

#<domain> can be:

# - a user name

# - a group name, with @group syntax

# - the wildcard *, for default entry

# - the wildcard %, can be also used with %group syntax,

# for maxlogin limit

#

#<type> can have the two values:

# - "soft" for enforcing the soft limits

# - "hard" for enforcing hard limits

#

#<item> can be one of the following:

# - core - limits the core file size (KB)

# - data - max data size (KB)

# - fsize - maximum filesize (KB)

# - memlock - max locked-in-memory address space (KB)

# - nofile - max number of open file descriptors

# - rss - max resident set size (KB)

# - stack - max stack size (KB)

# - cpu - max CPU time (MIN)

# - nproc - max number of processes

# - as - address space limit (KB)

# - maxlogins - max number of logins for this user

# - maxsyslogins - max number of logins on the system

# - priority - the priority to run user process with

# - locks - max number of file locks the user can hold

# - sigpending - max number of pending signals

# - msgqueue - max memory used by POSIX message queues (bytes)

# - nice - max nice priority allowed to raise to values: [-20, 19]

# - rtprio - max realtime priority

#

#<domain> <type> <item> <value>

#

#* soft core 0

#* hard rss 10000

#@student hard nproc 20

#@faculty soft nproc 20

#@faculty hard nproc 50

#ftp hard nproc 0

#@student - maxlogins 4

# End of file

grid soft nproc 2047

grid hard nproc 16384

grid soft nofile 1024

grid hard nofile 65536

grid soft stack 10240

grid hard stack 32768

oracle soft nproc 2047

oracle hard nproc 16384

oracle soft nofile 1024

oracle hard nofile 65536

oracle soft stack 10240

oracle hard stack 32768

oracle soft memlock 3145728

oracle hard memlock 3145728

-----------------------------------------------------------------------------------

/etc/pam.d/login

#%PAM-1.0

auth [user_unknown=ignore success=ok ignore=ignore default=bad] pam_securetty.so

auth substack system-auth

auth include postlogin

account required pam_nologin.so

account include system-auth

password include system-auth

# pam_selinux.so close should be the first session rule

session required pam_selinux.so close

session required pam_loginuid.so

session optional pam_console.so

# pam_selinux.so open should only be followed by sessions to be executed in the user context

session required pam_selinux.so open

session required pam_namespace.so

session optional pam_keyinit.so force revoke

session include system-auth

session include postlogin

-session optional pam_ck_connector.so

session required pam_limits.so

-----------------------------------------------------------------------------------

/etc/profile

# /etc/profile

# System wide environment and startup programs, for login setup

# Functions and aliases go in /etc/bashrc

# It's NOT a good idea to change this file unless you know what you

# are doing. It's much better to create a custom.sh shell script in

# /etc/profile.d/ to make custom changes to your environment, as this

# will prevent the need for merging in future updates.

pathmunge () {

case ":${PATH}:" in

*:"$1":*)

;;