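Preface

TDengine's official site and community host a number of performance comparison tests, but most were published some time ago and ran on early versions. This test follows the official article "Using taosdemo to Test TDengine Performance" (《使用 taosdemo 对 TDengine 进行性能测试》). Because that tutorial targets an older TDengine version and has not been updated, this test uses version 2.6.0.28.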

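taosBenchmark is an application that TDengine provides specifically for testing the database. It measures the write and query performance of the TDengine time-series database so that users can quickly try out and evaluate TDengine's performance. It works by simulating large volumes of data, and its parameters control the number of tables, the number of columns, the data types, the number of records per insert, the interval between records, the out-of-order ratio, the client interface type, the number of concurrent threads, and more. taosBenchmark also supports query and subscription tests, and it is simple to run. In this article we test write and read performance using its built-in simulated data. Readers can consult the community documentation for the fuller set of parameters. [This article is for reference only; please leave a comment to discuss any problems.]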
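As a rough illustration of how those parameters map onto a command line, the following Python sketch assembles a taosBenchmark invocation without running it. Only -T (threads) and -x (run queries after the insert) appear in this article; -t (tables), -n (records per table), -r (records per request) and -y (skip confirmation prompts) are flag names assumed here and should be checked against `taosBenchmark --help` on your version. build_command is a hypothetical helper.

```python
import shlex


def build_command(tables=10000, records=10000, threads=8,
                  records_per_request=None, run_queries=False):
    """Assemble a taosBenchmark argument list (hypothetical helper).

    Flag names other than -T and -x are assumptions; verify them
    with `taosBenchmark --help` before relying on this.
    """
    argv = ["taosBenchmark",
            "-t", str(tables),    # number of subtables (assumed flag)
            "-n", str(records),   # records per subtable (assumed flag)
            "-T", str(threads)]   # write threads (used in this article)
    if records_per_request is not None:
        argv += ["-r", str(records_per_request)]  # batch size (assumed flag)
    if run_queries:
        argv.append("-x")         # run aggregate queries after the insert
    argv.append("-y")             # skip "Press enter" prompts (assumed flag)
    return argv


if __name__ == "__main__":
    # Print the command for a 48-thread run instead of executing it.
    print(shlex.join(build_command(threads=48)))
```

Returning a list rather than a string keeps the arguments safe to pass to `subprocess.run` later without shell quoting issues.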

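Goals of this time-series database test:

Prerequisites

This test uses the following environment:

- One Linux server (the 2.X server components taosd and taosAdapter currently install and run only on Linux) with 10 GB of free disk space for the generated test data. This test used a Huawei Cloud server: general computing | s3.2xlarge.2 | 8 vCPUs | 16 GiB; CentOS 7.2 64-bit.

- root privileges.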

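Preparing the Test

Installing TDengine

The complete TDengine package includes the server (taosd), taosAdapter (which integrates with third-party systems and provides a RESTful interface), the application driver (taosc), the command-line program (CLI, taos), and some tools.

TDengine can be installed in several ways: Docker, apt-get, an installation package, or from source. Here we install from the tar.gz package provided by the TDengine open-source edition; see the official instructions: "Install and Uninstall" (安装和卸载 | TDengine 文档 | 涛思数据).

- Download the tar.gz package from the official website, for example TDengine-server-2.6.0.28-Linux-x64.tar.gz.

- Change to the directory that contains TDengine-server-2.6.0.28-Linux-x64.tar.gz, extract the archive, enter the extracted subdirectory, and run the install script with ./install.sh -e no. The installation proceeds as follows: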

$ tar xvzf TDengine-enterprise-server-2.6.0.28-Linux-x64.tar.gz

TDengine-enterprise-server-2.6.0.28/

TDengine-enterprise-server-2.6.0.28/driver/

TDengine-enterprise-server-2.6.0.28/driver/vercomp.txt

TDengine-enterprise-server-2.6.0.28/driver/libtaos.so.2.6.0.28

TDengine-enterprise-server-2.6.0.28/install.sh

TDengine-enterprise-server-2.6.0.28/examples/

...

$ ll

total 43816

drwxrwxr-x 3 ubuntu ubuntu 4096 Feb 22 09:31 ./

drwxr-xr-x 20 ubuntu ubuntu 4096 Feb 22 09:30 ../

drwxrwxr-x 4 ubuntu ubuntu 4096 Feb 22 09:30 TDengine-enterprise-server-2.6.0.28/

-rw-rw-r-- 1 ubuntu ubuntu 44852544 Feb 22 09:31 TDengine-enterprise-server-2.6.0.28-Linux-x64.tar.gz

$ cd TDengine-enterprise-server-2.6.0.28/

$ ll

total 40784

drwxrwxr-x 4 ubuntu ubuntu 4096 Feb 22 09:30 ./

drwxrwxr-x 3 ubuntu ubuntu 4096 Feb 22 09:31 ../

drwxrwxr-x 2 ubuntu ubuntu 4096 Feb 22 09:30 driver/

drwxrwxr-x 10 ubuntu ubuntu 4096 Feb 22 09:30 examples/

-rwxrwxr-x 1 ubuntu ubuntu 33294 Feb 22 09:30 install.sh*

-rw-rw-r-- 1 ubuntu ubuntu 41704288 Feb 22 09:30 taos.tar.gz

$ sudo ./install.sh

Start to update TDengine...

Created symlink /etc/systemd/system/multi-user.target.wants/taosd.service → /etc/systemd/system/taosd.service.

Nginx for TDengine is updated successfully!

To configure TDengine : edit /etc/taos/taos.cfg

To configure Taos Adapter (if has) : edit /etc/taos/taosadapter.toml

To start TDengine : sudo systemctl start taosd

To access TDengine : use taos -h ubuntu-1804 in shell OR from http://127.0.0.1:6060

TDengine is updated successfully!

Install taoskeeper as a standalone service

taoskeeper is installed, enable it by `systemctl enable taoskeeper`

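Note: while it runs, install.sh asks for some configuration information through an interactive command-line prompt. For a non-interactive installation, run install.sh with the -e no parameter. Run ./install.sh -h to see a detailed description of all parameters.

For this test we ran install.sh directly with the -e no parameter.

- Startup

TDengine uses the Linux systemd/systemctl/service facilities to manage starting, stopping, and restarting. The TDengine service process is taosd, and by default TDengine starts automatically after the system boots. A DBA can stop, start, or restart the service manually through systemd/systemctl/service.

Taking systemctl as an example, the commands are:

- Start the service process: systemctl start taosd

- Stop the service process: systemctl stop taosd

- Restart the service process: systemctl restart taosd

- Check the service status: systemctl status taosd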

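Running the Test

Throughout the test, we recommend keeping the server's console monitoring open to watch CPU and memory usage.

Test Procedure

- Write tests

- Test case 1:

Running the taosBenchmark command with no parameters simulates a typical power-industry scenario, the collection of data from electricity meters. It creates a database named test and a super table named meters with the following structure: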

taos> describe test.meters;

Field | Type | Length | Note |

=================================================================================

ts | TIMESTAMP | 8 | |

current | FLOAT | 4 | |

voltage | INT | 4 | |

phase | FLOAT | 4 | |

groupid | INT | 4 | TAG |

location | BINARY | 64 | TAG |

Query OK, 6 row(s) in set (0.002972s)
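For a sense of the data volume this schema implies, the sketch below adds up the fixed-width column lengths from the describe output and multiplies by the 10,000 tables and 10,000 rows per table used in this test. It counts uncompressed column bytes only and ignores tags, storage overhead, and compression, so it is a rough estimate and not the on-disk size.

```python
# Column lengths in bytes, copied from the describe output above.
COLUMN_BYTES = {"ts": 8, "current": 4, "voltage": 4, "phase": 4}


def raw_data_bytes(tables, rows_per_table):
    """Uncompressed size of the column data, ignoring tags and overhead."""
    row_bytes = sum(COLUMN_BYTES.values())
    return tables * rows_per_table * row_bytes


if __name__ == "__main__":
    total = raw_data_bytes(10_000, 10_000)
    print(f"{total / 1024**3:.2f} GiB of raw column data")
```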

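Following TDengine's data-modeling best practice, it then creates 10,000 subtables from the meters super table as a template, representing 10,000 meter devices that report data independently, as shown below: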

[root@ecs-3fb0 cfg]

[11/09 16:42:55.631713] INFO: taos client version: 2.6.0.28

Press enter key to continue or Ctrl-C to stop

[11/09 16:43:33.358768] INFO: create database: <CREATE DATABASE IF NOT EXISTS test PRECISION 'ms';>

[11/09 16:43:33.860524] INFO: stable meters does not exist, will create one

[11/09 16:43:34.361379] INFO: create stable: <CREATE TABLE test.meters (ts TIMESTAMP,current float,voltage int,phase float) TAGS (groupid int,location binary(24))>

[11/09 16:43:34.869963] INFO: generate stable<meters> columns data with lenOfCols<80> * prepared_rand<10000>

[11/09 16:43:34.880122] INFO: generate stable<meters> tags data with lenOfTags<62> * childTblCount<10000>

[11/09 16:43:34.884222] INFO: start creating 10000 table(s) with 8 thread(s)

[11/09 16:43:34.884813] INFO: thread[0] start creating table from 0 to 1249

[11/09 16:43:34.885088] INFO: thread[1] start creating table from 1250 to 2499

[11/09 16:43:34.885339] INFO: thread[2] start creating table from 2500 to 3749

[11/09 16:43:34.885700] INFO: thread[3] start creating table from 3750 to 4999

[11/09 16:43:34.887636] INFO: thread[4] start creating table from 5000 to 6249

[11/09 16:43:34.887926] INFO: thread[5] start creating table from 6250 to 7499

[11/09 16:43:34.888186] INFO: thread[6] start creating table from 7500 to 8749

[11/09 16:43:34.888430] INFO: thread[7] start creating table from 8750 to 9999

[11/09 16:43:48.838616] SUCC: Spent 13.9540 seconds to create 10000 table(s) with 8 thread(s), already exist 0 table(s), actual 10000 table(s) pre created, 0 table(s) will be auto create
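The table ranges in the log follow from splitting 10,000 tables into contiguous blocks, one per thread. The sketch below reproduces the ranges printed above; partition_tables is a hypothetical helper written to match the log, not code taken from taosBenchmark.

```python
def partition_tables(total_tables, threads):
    """Split table indexes 0..total_tables-1 into contiguous per-thread ranges.

    Returns a list of (first, last) tuples, both inclusive. Any remainder
    is spread one table at a time over the lowest-numbered threads.
    """
    base, extra = divmod(total_tables, threads)
    ranges = []
    start = 0
    for thread_id in range(threads):
        count = base + (1 if thread_id < extra else 0)
        ranges.append((start, start + count - 1))
        start += count
    return ranges


if __name__ == "__main__":
    for thread_id, (first, last) in enumerate(partition_tables(10000, 8)):
        print(f"thread[{thread_id}] tables {first} to {last}")
```

For thread counts that do not divide the table count evenly, the sketch gives one extra table to each of the lowest-numbered threads. With 48 threads that yields 0 to 208 for thread[0] and 9792 to 9999 for thread[47], the ranges that appear in the 48-thread log of test case 2.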

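taosBenchmark then simulates 10,000 records for each meter device. The defaults at this point are:

– records per write request default to 30000; because each table receives only 10000 rows, the value is lowered to 10000;

– 8 write threads;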

[11/09 16:43:51.824455] INFO: record per request (30000) is larger than insert rows (10000) in progressive mode, which will be set to 10000

[11/09 16:43:51.834750] INFO: Estimate memory usage: 11.82MB

Press enter key to continue or Ctrl-C to stop

[11/09 16:43:55.818914] INFO: thread[0] start progressive inserting into table from 0 to 1249

[11/09 16:43:55.818933] INFO: thread[3] start progressive inserting into table from 3750 to 4999

[11/09 16:43:55.818928] INFO: thread[2] start progressive inserting into table from 2500 to 3749

[11/09 16:43:55.818923] INFO: thread[1] start progressive inserting into table from 1250 to 2499

[11/09 16:43:55.818991] INFO: thread[4] start progressive inserting into table from 5000 to 6249

[11/09 16:43:55.819010] INFO: thread[5] start progressive inserting into table from 6250 to 7499

[11/09 16:43:55.819028] INFO: thread[6] start progressive inserting into table from 7500 to 8749

[11/09 16:43:55.821339] INFO: thread[7] start progressive inserting into table from 8750 to 9999

[11/09 16:44:25.821878] INFO: thread[3] has currently inserted rows: 8910000

[11/09 16:44:25.827670] INFO: thread[0] has currently inserted rows: 9230000

[11/09 16:44:25.831597] INFO: thread[4] has currently inserted rows: 9070000

[11/09 16:44:25.837201] INFO: thread[6] has currently inserted rows: 9050000

[11/09 16:44:25.839675] INFO: thread[7] has currently inserted rows: 8790000

[11/09 16:44:25.845131] INFO: thread[2] has currently inserted rows: 9540000

[11/09 16:44:25.852908] INFO: thread[1] has currently inserted rows: 9130000

[11/09 16:44:25.856755] INFO: thread[5] has currently inserted rows: 9410000

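The output (shown after this paragraph) tells us the following:

Writing 100,000,000 records took 41.6 seconds, an insert rate of 2,404,104 records per second. The official result for the same test case (server configuration unknown) was 6 seconds to insert 100 million records, or 16,285,462 records per second. The gap from the official figures comes from the server's resource configuration and its relatively high write latency, and is to be expected. Even so, the overall result is very good.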

[11/09 16:44:35.034487] SUCC: thread[2] completed total inserted rows: 12500000, 344858.35 records/second

[11/09 16:44:36.020174] SUCC: thread[1] completed total inserted rows: 12500000, 335522.93 records/second

[11/09 16:44:36.056786] SUCC: thread[5] completed total inserted rows: 12500000, 335212.72 records/second

[11/09 16:44:36.364985] SUCC: thread[0] completed total inserted rows: 12500000, 332542.39 records/second

[11/09 16:44:36.495492] SUCC: thread[6] completed total inserted rows: 12500000, 331381.29 records/second

[11/09 16:44:36.509238] SUCC: thread[4] completed total inserted rows: 12500000, 331320.34 records/second

[11/09 16:44:37.250942] SUCC: thread[3] completed total inserted rows: 12500000, 324860.17 records/second

[11/09 16:44:37.414442] SUCC: thread[7] completed total inserted rows: 12500000, 323742.61 records/second

[11/09 16:44:37.869222] SUCC: Spent 41.595522 seconds to insert rows: 100000000 with 8 thread(s) into test 2404104.94 records/second

[11/09 16:44:37.869252] SUCC: insert delay, min: 13.31ms, avg: 30.09ms, p90: 38.90ms, p95: 49.66ms, p99: 180.11ms, max: 358.02ms

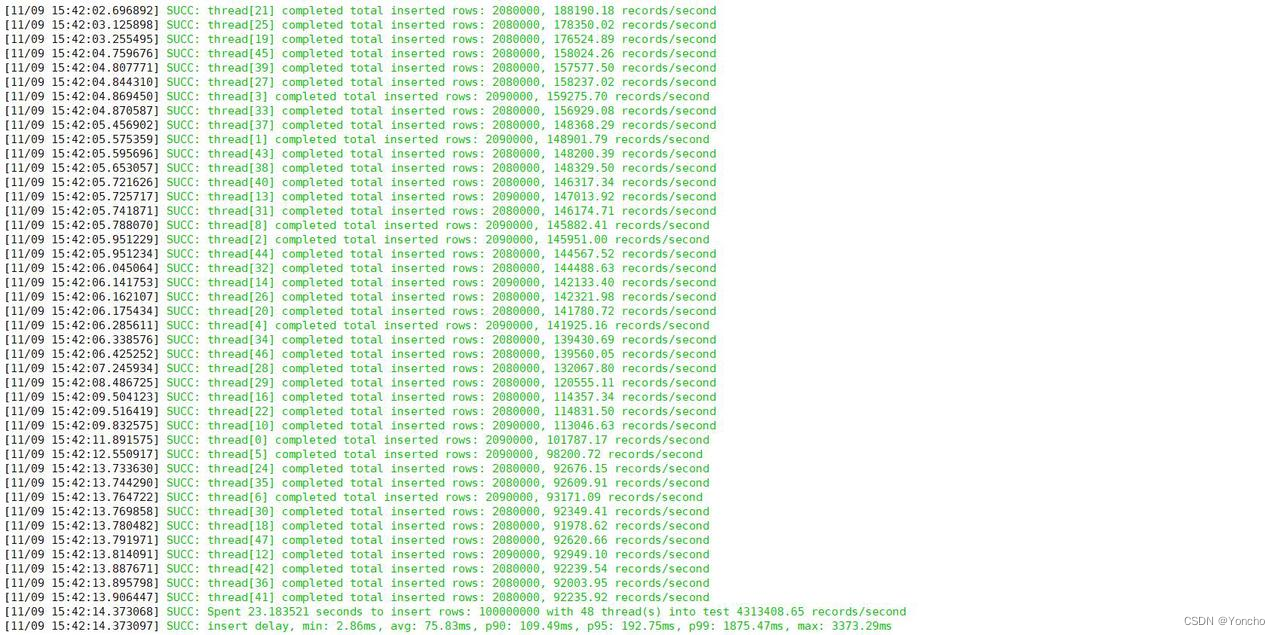
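The reported rate can be cross-checked against the summary line of the log. The sketch below parses a line in the format shown above and recomputes rows divided by elapsed seconds. The regular expression is written for this log format only, and parse_summary is a hypothetical helper.

```python
import re

# Matches the final "Spent ... seconds to insert rows" summary line.
SUMMARY_RE = re.compile(
    r"Spent (?P<seconds>[\d.]+) seconds to insert rows: (?P<rows>\d+) "
    r"with (?P<threads>\d+) thread\(s\) into (?P<db>\w+) "
    r"(?P<rate>[\d.]+) records/second"
)


def parse_summary(line):
    """Extract elapsed seconds, row count, thread count and reported rate."""
    match = SUMMARY_RE.search(line)
    if match is None:
        raise ValueError("not a taosBenchmark insert summary line")
    return {
        "seconds": float(match["seconds"]),
        "rows": int(match["rows"]),
        "threads": int(match["threads"]),
        "rate": float(match["rate"]),
    }


if __name__ == "__main__":
    line = ("[11/09 16:44:37.869222] SUCC: Spent 41.595522 seconds to insert "
            "rows: 100000000 with 8 thread(s) into test 2404104.94 records/second")
    info = parse_summary(line)
    recomputed = info["rows"] / info["seconds"]
    print(f"reported {info['rate']:.2f}, recomputed {recomputed:.2f}")
```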

- Test case 2:

Repeat <test case 1> with -T 48 added to the taosBenchmark command, which starts 48 write threads. The run proceeds as follows:

[root@ecs-3fb0 cfg]

[11/09 17:26:13.519402] INFO: taos client version: 2.6.0.28

Press enter key to continue or Ctrl-C to stop

[11/09 17:26:15.134105] INFO: create database: <CREATE DATABASE IF NOT EXISTS test PRECISION 'ms';>

[11/09 17:26:15.635880] INFO: stable meters does not exist, will create one

[11/09 17:26:16.136620] INFO: create stable: <CREATE TABLE test.meters (ts TIMESTAMP,current float,voltage int,phase float) TAGS (groupid int,location binary(24))>

[11/09 17:26:16.657756] INFO: generate stable<meters> columns data with lenOfCols<80> * prepared_rand<10000>

[11/09 17:26:16.671521] INFO: generate stable<meters> tags data with lenOfTags<62> * childTblCount<10000>

[11/09 17:26:16.678598] INFO: start creating 10000 table(s) with 8 thread(s)

[11/09 17:26:16.679281] INFO: thread[0] start creating table from 0 to 1249

[11/09 17:26:16.679602] INFO: thread[1] start creating table from 1250 to 2499

[11/09 17:26:16.679915] INFO: thread[2] start creating table from 2500 to 3749

[11/09 17:26:16.682153] INFO: thread[3] start creating table from 3750 to 4999

[11/09 17:26:16.682462] INFO: thread[4] start creating table from 5000 to 6249

[11/09 17:26:16.682751] INFO: thread[5] start creating table from 6250 to 7499

[11/09 17:26:16.683011] INFO: thread[6] start creating table from 7500 to 8749

[11/09 17:26:16.683203] INFO: thread[7] start creating table from 8750 to 9999

[11/09 17:26:30.625863] SUCC: Spent 13.9470 seconds to create 10000 table(s) with 8 thread(s), already exist 0 table(s), actual 10000 table(s) pre created, 0 table(s) will be auto created

Press enter key to continue or Ctrl-C to stop

[11/09 17:26:31.526109] INFO: record per request (30000) is larger than insert rows (10000) in progressive mode, which will be set to 10000

[11/09 17:26:31.562217] INFO: Estimate memory usage: 51.99MB

Press enter key to continue or Ctrl-C to stop

[11/09 17:26:32.305446] INFO: thread[0] start progressive inserting into table from 0 to 208

[11/09 17:26:32.305473] [11/09 17:26:32.305459] INFO: thread[2] start progressive inserting into table from 418 to 626

[11/09 17:26:32.305449] INFO: thread[1] start progressive inserting into table from 209 to 417

INFO: thread[3] start progressive inserting into table from 627 to 835

[11/09 17:26:32.305515] INFO: thread[4] start progressive inserting into table from 836 to 1044

[11/09 17:26:32.305670] INFO: thread[15] start progressive inserting into table from 3135 to 3343

[11/09 17:26:32.306086] INFO: thread[18] start progressive inserting into table from 3760 to 3967

[11/09 17:26:32.307668] INFO: thread[44] start progressive inserting into table from 9168 to 9375

[11/09 17:26:32.307831] INFO: thread[30] start progressive inserting into table from 6256 to 6463

[11/09 17:26:32.307839] INFO: thread[45] start progressive inserting into table from 9376 to 9583

[11/09 17:26:32.307980] INFO: thread[17] start progressive inserting into table from 3552 to 3759

[11/09 17:26:32.307984] INFO: thread[36] start progressive inserting into table from 7504 to 7711

[11/09 17:26:32.308019] INFO: thread[8] start progressive inserting into table from 1672 to 1880

[11/09 17:26:32.308037] INFO: thread[40] start progressive inserting into table from 8336 to 8543

[11/09 17:26:32.308447] INFO: thread[41] start progressive inserting into table from 8544 to 8751

[11/09 17:26:32.308530] INFO: thread[6] start progressive inserting into table from 1254 to 1462

[11/09 17:26:32.308687] INFO: thread[19] start progressive inserting into table from 3968 to 4175

[11/09 17:26:32.308821] INFO: thread[38] start progressive inserting into table from 7920 to 8127

[11/09 17:26:32.309141] INFO: thread[37] start progressive inserting into table from 7712 to 7919

[11/09 17:26:32.308031] INFO: thread[27] start progressive inserting into table from 5632 to 5839

[11/09 17:26:32.310077] INFO: thread[21] start progressive inserting into table from 4384 to 4591

[11/09 17:26:32.310152] INFO: thread[20] start progressive inserting into table from 4176 to 4383

[11/09 17:26:32.310203] INFO: thread[31] start progressive inserting into table from 6464 to 6671

[11/09 17:26:32.308035] INFO: thread[39] start progressive inserting into table from 8128 to 8335

[11/09 17:26:32.310744] INFO: thread[46] start progressive inserting into table from 9584 to 9791

[11/09 17:26:32.310830] INFO: thread[11] start progressive inserting into table from 2299 to 2507

[11/09 17:26:32.311017] INFO: thread[29] start progressive inserting into table from 6048 to 6255

[11/09 17:26:32.311245] INFO: thread[47] start progressive inserting into table from 9792 to 9999

[11/09 17:26:32.311297] INFO: thread[24] start progressive inserting into table from 5008 to 5215

[11/09 17:26:32.311682] INFO: thread[12] start progressive inserting into table from 2508 to 2716

[11/09 17:26:32.312475] INFO: thread[23] start progressive inserting into table from 4800 to 5007

[11/09 17:26:32.312570] INFO: thread[32] start progressive inserting into table from 6672 to 6879

[11/09 17:26:32.312625] INFO: thread[22] start progressive inserting into table from 4592 to 4799

[11/09 17:26:32.312678] INFO: thread[42] start progressive inserting into table from 8752 to 8959

[11/09 17:26:32.308021] INFO: thread[25] start progressive inserting into table from 5216 to 5423

[11/09 17:26:32.313582] INFO: thread[35] start progressive inserting into table from 7296 to 7503

[11/09 17:26:32.313718] INFO: thread[43] start progressive inserting into table from 8960 to 9167

[11/09 17:26:32.308025] INFO: thread[26] start progressive inserting into table from 5424 to 5631

[11/09 17:26:32.314693] INFO: thread[28] start progressive inserting into table from 5840 to 6047

[11/09 17:26:32.314796] INFO: thread[9] start progressive inserting into table from 1881 to 2089

[11/09 17:26:32.314911] INFO: thread[33] start progressive inserting into table from 6880 to 7087

[11/09 17:26:32.315325] INFO: thread[16] start progressive inserting into table from 3344 to 3551

[11/09 17:26:32.315622] INFO: thread[14] start progressive inserting into table from 2926 to 3134

[11/09 17:26:32.315897] INFO: thread[34] start progressive inserting into table from 7088 to 7295

[11/09 17:26:32.315973] INFO: thread[5] start progressive inserting into table from 1045 to 1253

[11/09 17:26:32.316878] INFO: thread[10] start progressive inserting into table from 2090 to 2298

[11/09 17:26:32.317259] INFO: thread[7] start progressive inserting into table from 1463 to 1671

[11/09 17:26:32.317760] INFO: thread[13] start progressive inserting into table from 2717 to 2925

[11/09 17:27:00.365086] SUCC: thread[2] completed total inserted rows: 2090000, 75863.75 records/second

[11/09 17:27:00.493822] SUCC: thread[8] completed total inserted rows: 2090000, 75637.93 records/second

[11/09 17:27:00.825880] SUCC: thread[44] completed total inserted rows: 2080000, 74298.97 records/second

[11/09 17:27:01.045686] SUCC: thread[21] completed total inserted rows: 2080000, 73688.22 records/second

[11/09 17:27:01.049718] SUCC: thread[39] completed total inserted rows: 2080000, 73725.26 records/second

[11/09 17:27:01.054190] SUCC: thread[19] completed total inserted rows: 2080000, 73760.15 records/second

[11/09 17:27:01.091836] SUCC: thread[45] completed total inserted rows: 2080000, 73701.16 records/second

[11/09 17:27:01.110052] SUCC: thread[33] completed total inserted rows: 2080000, 73502.23 records/second

[11/09 17:27:01.157685] SUCC: thread[28] completed total inserted rows: 2080000, 73383.12 records/second

[11/09 17:27:01.194270] SUCC: thread[20] completed total inserted rows: 2080000, 73370.50 records/second

[11/09 17:27:01.227142] SUCC: thread[37] completed total inserted rows: 2080000, 73293.44 records/second

[11/09 17:27:01.261687] SUCC: thread[40] completed total inserted rows: 2080000, 73210.92 records/second

[11/09 17:27:01.353070] SUCC: thread[31] completed total inserted rows: 2080000, 72981.87 records/second

[11/09 17:27:01.366231] SUCC: thread[3] completed total inserted rows: 2090000, 73233.17 records/second

[11/09 17:27:01.371686] SUCC: thread[38] completed total inserted rows: 2080000, 72884.24 records/second

[11/09 17:27:01.426157] SUCC: thread[25] completed total inserted rows: 2080000, 72680.42 records/second

[11/09 17:27:01.456252] SUCC: thread[4] completed total inserted rows: 2090000, 73060.84 records/second

[11/09 17:27:01.558129] SUCC: thread[22] completed total inserted rows: 2080000, 72428.90 records/second

[11/09 17:27:01.559171] SUCC: thread[27] completed total inserted rows: 2080000, 72486.59 records/second

[11/09 17:27:01.569404] SUCC: thread[16] completed total inserted rows: 2080000, 72391.96 records/second

[11/09 17:27:01.589362] SUCC: thread[34] completed total inserted rows: 2080000, 72299.10 records/second

[11/09 17:27:01.589707] SUCC: thread[32] completed total inserted rows: 2080000, 72407.69 records/second

[11/09 17:27:01.594174] SUCC: thread[10] completed total inserted rows: 2090000, 72726.52 records/second

[11/09 17:27:01.610639] SUCC: thread[13] completed total inserted rows: 2090000, 72697.21 records/second

[11/09 17:27:01.631136] SUCC: thread[14] completed total inserted rows: 2090000, 72568.95 records/second

[11/09 17:27:01.636533] SUCC: thread[43] completed total inserted rows: 2080000, 72185.51 records/second

[11/09 17:27:01.648486] SUCC: thread[26] completed total inserted rows: 2080000, 72275.52 records/second

[11/09 17:27:01.675671] SUCC: thread[15] completed total inserted rows: 2090000, 72493.13 records/second

[11/09 17:27:01.775693] SUCC: thread[46] completed total inserted rows: 2080000, 71895.65 records/second

[11/09 17:27:01.802677] SUCC: thread[1] completed total inserted rows: 2090000, 72105.79 records/second

[11/09 17:27:01.829260] SUCC: thread[9] completed total inserted rows: 2090000, 72151.09 records/second

[11/09 17:27:01.880842] SUCC: thread[41] completed total inserted rows: 2080000, 71700.76 records/second

[11/09 17:27:01.911553] SUCC: thread[23] completed total inserted rows: 2080000, 71471.06 records/second

[11/09 17:27:01.982751] SUCC: thread[24] completed total inserted rows: 2080000, 71373.91 records/second

[11/09 17:27:02.063169] SUCC: thread[30] completed total inserted rows: 2080000, 71088.89 records/second

[11/09 17:27:02.063704] SUCC: thread[47] completed total inserted rows: 2080000, 71200.03 records/second

[11/09 17:27:02.064125] SUCC: thread[7] completed total inserted rows: 2090000, 71456.82 records/second

[11/09 17:27:02.094536] SUCC: thread[35] completed total inserted rows: 2080000, 71069.86 records/second

[11/09 17:27:02.151676] SUCC: thread[42] completed total inserted rows: 2080000, 70907.14 records/second

[11/09 17:27:02.152561] SUCC: thread[6] completed total inserted rows: 2090000, 71280.12 records/second

[11/09 17:27:02.163409] SUCC: thread[36] completed total inserted rows: 2080000, 71028.18 records/second

[11/09 17:27:02.163477] SUCC: thread[17] completed total inserted rows: 2080000, 70919.58 records/second

[11/09 17:27:02.174467] SUCC: thread[12] completed total inserted rows: 2090000, 71227.96 records/second

[11/09 17:27:02.197242] SUCC: thread[29] completed total inserted rows: 2080000, 70830.95 records/second

[11/09 17:27:02.206336] SUCC: thread[18] completed total inserted rows: 2080000, 70882.92 records/second

[11/09 17:27:02.235195] SUCC: thread[11] completed total inserted rows: 2090000, 71177.81 records/second

[11/09 17:27:02.258538] SUCC: thread[5] completed total inserted rows: 2090000, 71041.25 records/second

[11/09 17:27:02.294383] SUCC: thread[0] completed total inserted rows: 2090000, 70960.44 records/second

[11/09 17:27:02.751293] SUCC: Spent 29.988757 seconds to insert rows: 100000000 with 48 thread(s) into test 3334583.02 records/second

[11/09 17:27:02.751336] SUCC: insert delay, min: 14.20ms, avg: 138.17ms, p90: 194.66ms, p95: 217.56ms, p99: 265.10ms, max: 374.39ms

The output shows:

Writing 100,000,000 records took 29.99 seconds, an insert rate of 3,334,583 records per second.
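The two runs can be compared with a little arithmetic. The sketch below computes the speedup from the logged elapsed times and, as a back-of-the-envelope check, estimates throughput as threads × rows per request ÷ average insert delay. That estimate assumes every thread always has exactly one 10000-row request in flight, which is a simplification introduced here and not something the logs state.

```python
# Elapsed time and average insert delay copied from the two summary logs.
RUNS = {
    8: {"seconds": 41.595522, "avg_delay_ms": 30.09},
    48: {"seconds": 29.988757, "avg_delay_ms": 138.17},
}
TOTAL_ROWS = 100_000_000
ROWS_PER_REQUEST = 10_000  # value the log says each request was set to


def measured_rate(threads):
    """Rows per second actually achieved in the run."""
    return TOTAL_ROWS / RUNS[threads]["seconds"]


def estimated_rate(threads):
    """Rows per second if each thread keeps one request in flight (assumption)."""
    delay_seconds = RUNS[threads]["avg_delay_ms"] / 1000.0
    return threads * ROWS_PER_REQUEST / delay_seconds


def speedup():
    """How much faster the 48-thread run finished than the 8-thread run."""
    return RUNS[8]["seconds"] / RUNS[48]["seconds"]


if __name__ == "__main__":
    for threads in RUNS:
        print(threads, round(measured_rate(threads)), round(estimated_rate(threads)))
    print(f"speedup: {speedup():.2f}x")
```

On these numbers, six times as many threads bought roughly a 1.39x speedup while the average insert delay rose more than fourfold, which suggests the additional threads spend much of their time waiting on the server.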

- Query tests

- Test case 3:

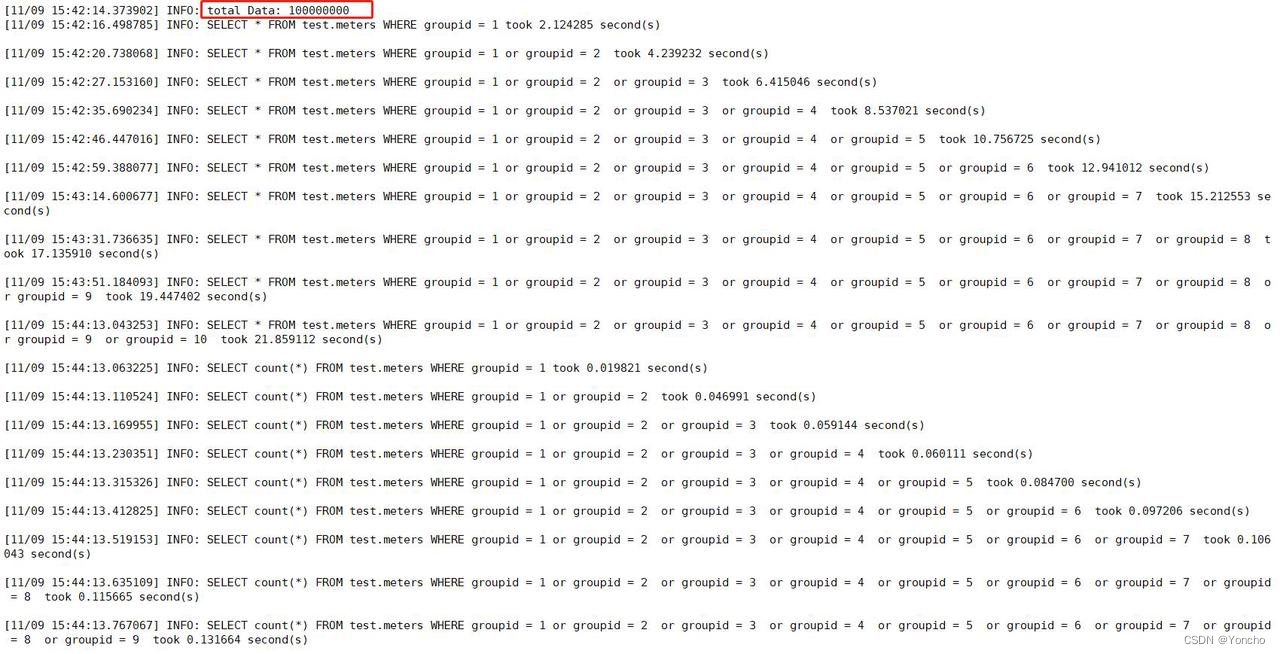

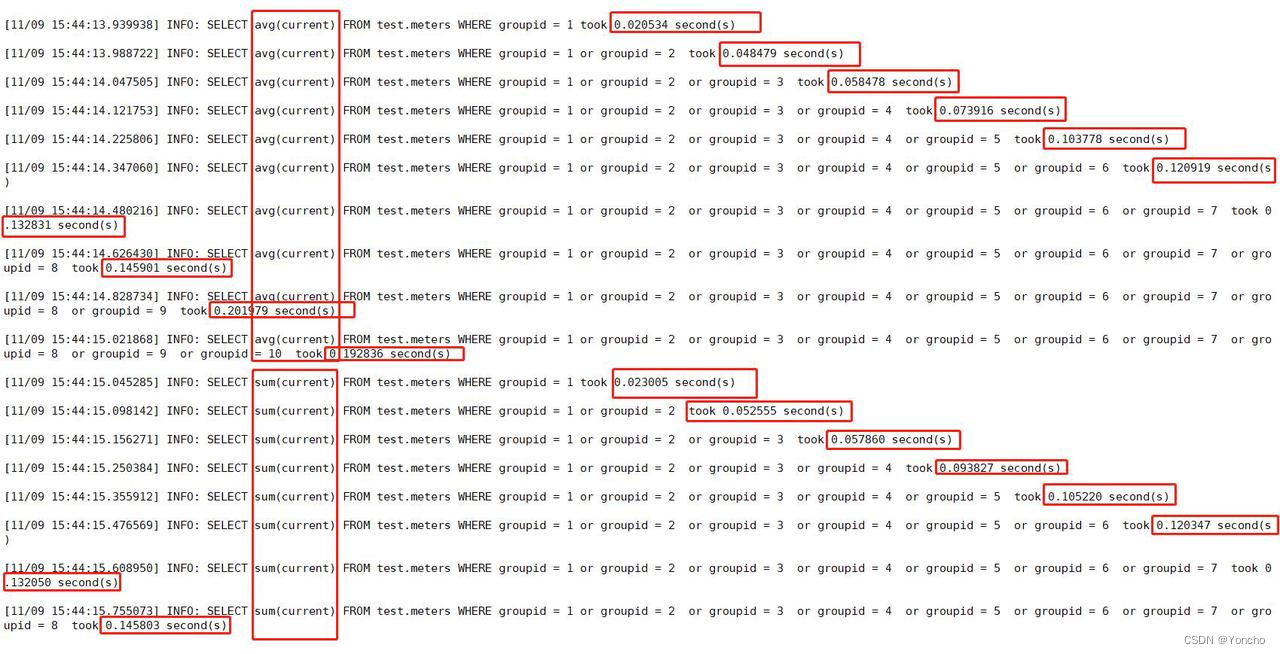

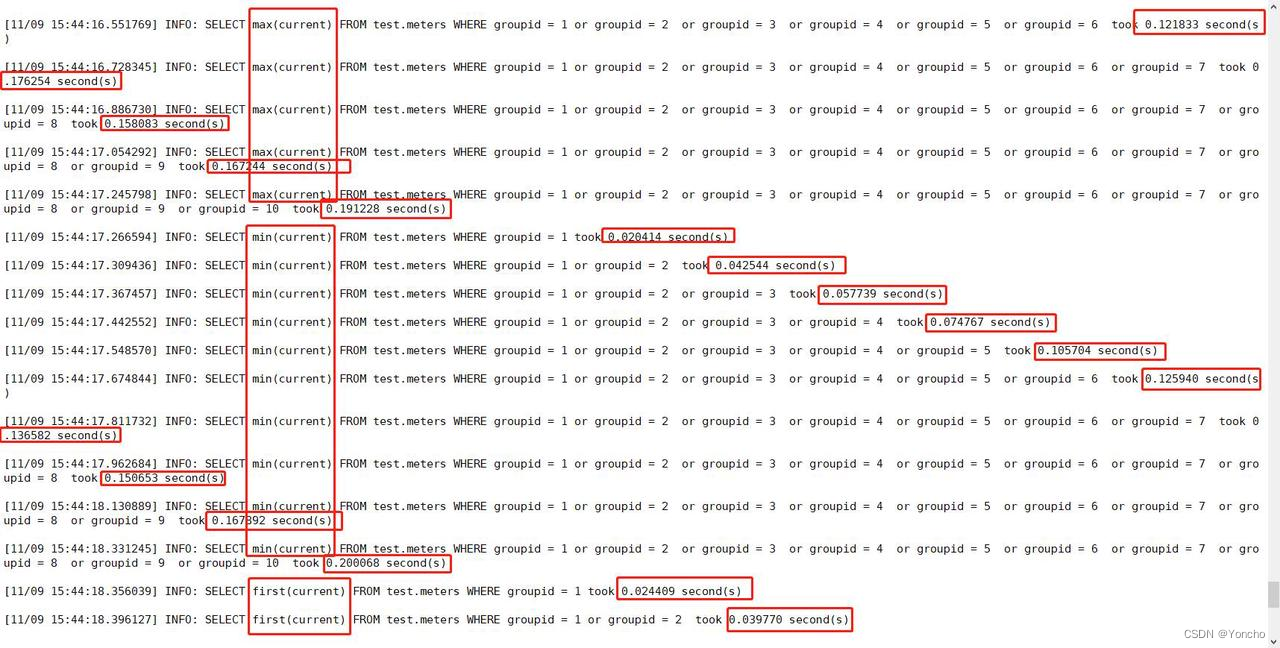

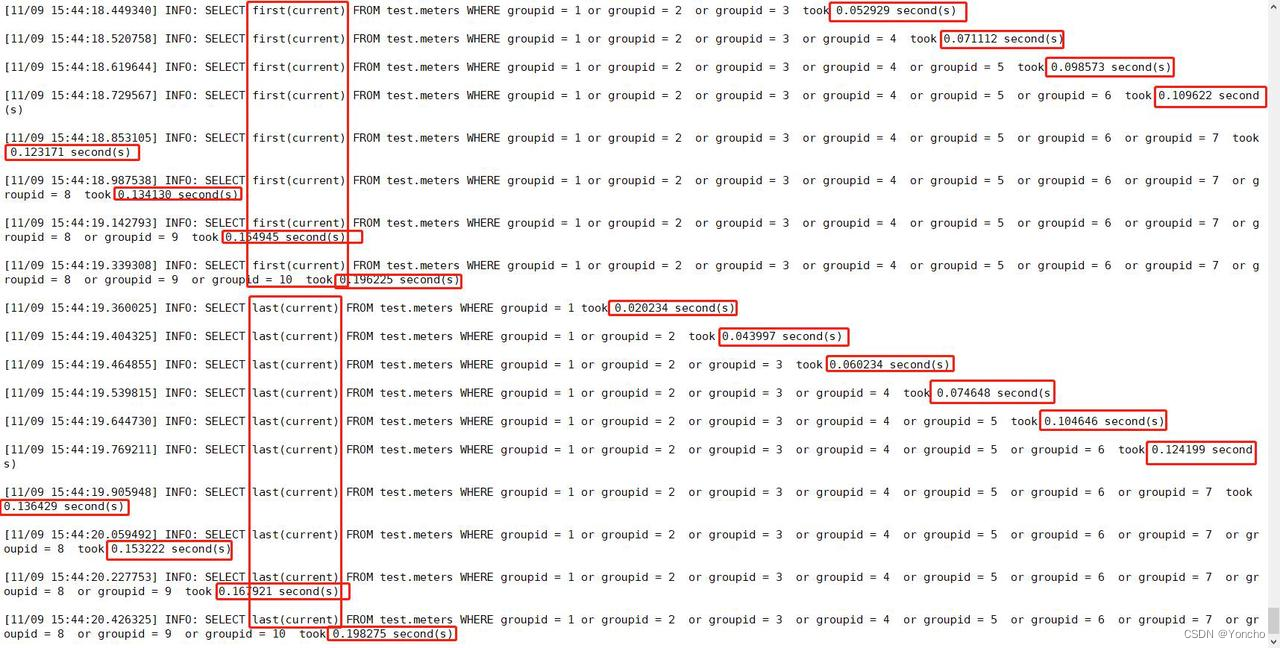

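taosBenchmark supports query testing through its -x parameter, which runs common queries after the insert finishes and prints the time each one takes. For this test, 100 million records were first written as in <test case 1>, and query performance was then measured against those records. The queries exercise common aggregate functions, including count(), avg(), sum(), max(), min(), first(), and last(). The accompanying screenshots show the test run.

The results show that retrieving the 100 million records with a query took only 2.1 seconds. Common aggregate functions over the 100 million records typically took only tens of milliseconds: the fastest took just over ten milliseconds, and the slowest, count, took only a little over one hundred milliseconds. Query performance is very good.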
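For readers who want to repeat the aggregate checks by hand in the taos CLI, the sketch below generates one query per function named above against the test.meters super table. Applying the functions to the current column is an assumption made for illustration; the exact statements that taosBenchmark -x issues are not shown in this article.

```python
# Aggregate functions listed in the query test description.
AGGREGATES = ["count", "avg", "sum", "max", "min", "first", "last"]


def aggregate_queries(table="test.meters", column="current"):
    """Build one SELECT per aggregate; count uses * instead of a column."""
    queries = []
    for func in AGGREGATES:
        arg = "*" if func == "count" else column
        queries.append(f"SELECT {func}({arg}) FROM {table};")
    return queries


if __name__ == "__main__":
    for sql in aggregate_queries():
        print(sql)
```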

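Result Analysis

The data generated in this test and the write and query test cases show that TDengine performs very well.

A closer analysis yields the following conclusions [on a server with an 8-core CPU]:

- Write performance: writing 100,000,000 records took 29.99 seconds with 48 write threads, an insert rate of 3,334,583 records per second.

- Plain query over all data: retrieving the 100 million records with a query took only 2.1 seconds.

- Aggregate queries over all data: common aggregate functions over the 100 million records typically took only tens of milliseconds. The fastest took just over ten milliseconds, and the slowest, count, took only a little over one hundred milliseconds.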

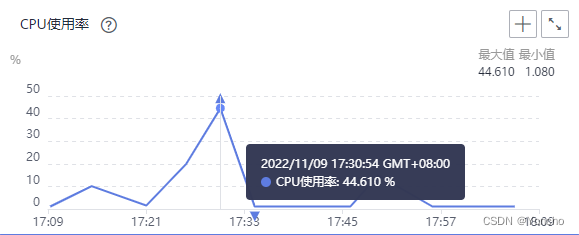

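According to Huawei Cloud console monitoring, TDengine's CPU usage reached only 44.6% while the test cases ran [no finer-grained monitoring plugin was available; for reference only], which indicates that CPU usage stays comparatively low on an 8-core server.

During the actual testing we tried 8, 12, 16, 48 … up to 192 threads. Results were best when the thread count equaled twice the number of server cores. Once the thread count exceeded twice the core count, write time stayed essentially flat.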
