I. Prerequisites

Hypervisor: VMware Workstation 15

Note: if you repost this article, please credit the source (CSDN: Stein10010).

For full details, see the official OpenStack documentation: Stein

1.1 Four-node layout

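Controller: two Host-Only NICs, one for the tenant-facing API (172.16.30.10) and one on the management network (172.16.20.110); 2.5 GB RAM, 10 GB disk

Compute: one Host-Only NIC (172.16.20.120); 5 GB RAM, 20 GB disk

Instances run on the compute node, so give it enough memory for the guest VMs.

Network-Node: two NICs, NAT (floating IPs, 172.16.10.10) and Host-Only (172.16.20.130); 512 MB RAM, 5 GB disk

Block-Storage: one Host-Only NIC (172.16.20.140); 512 MB RAM

Two disks: /dev/sda 5 GB and /dev/sdb 100 GB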

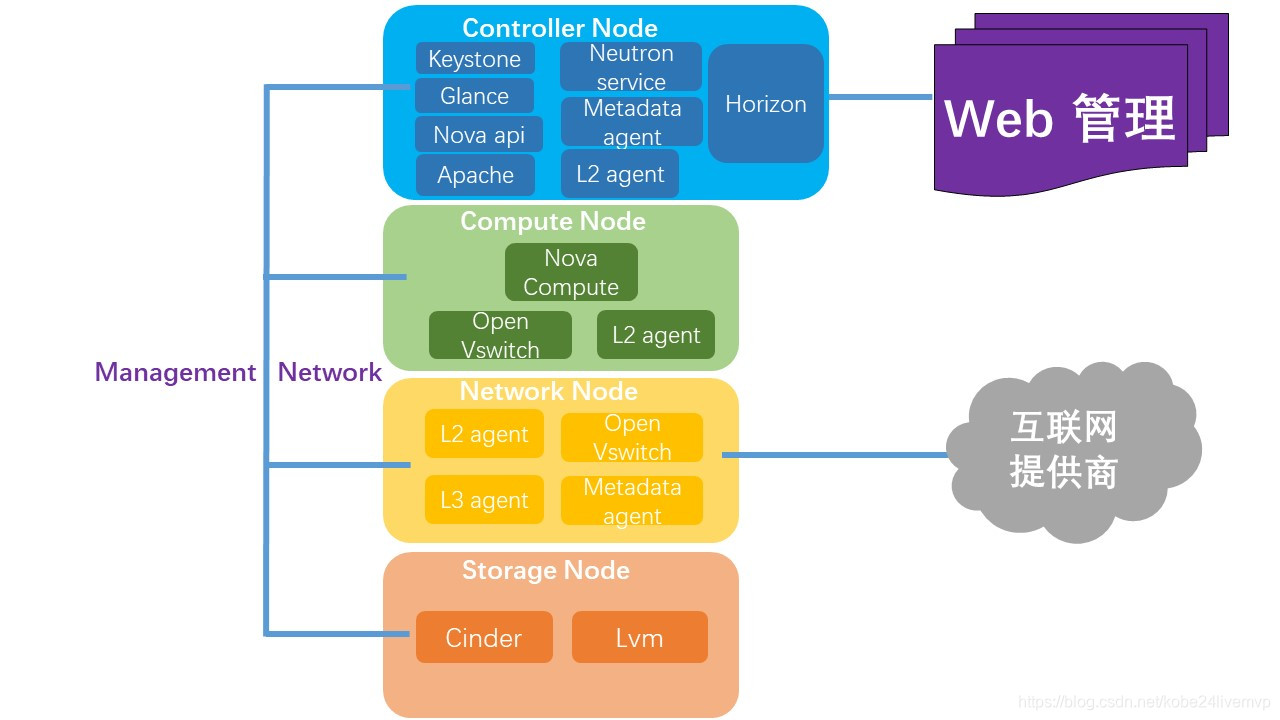

1.2 Network architecture

1.3 Prepare the environment (all nodes)

On all four hosts, add the hosts entries, set the hostname, disable firewalld and SELinux, and configure a static IP.

1.3.1 Configure /etc/hosts

# cp /etc/hosts /etc/hosts.bak

# vi /etc/hosts

172.16.20.110 controller

172.16.20.120 compute

172.16.20.130 network-node

172.16.20.140 block-storage
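Because the same four entries go onto every node, they can also be appended with a small function instead of vi. This is a minimal sketch: the function name add_cluster_hosts is hypothetical, and it skips any hostname that already appears so it is safe to re-run.

```bash
#!/usr/bin/env bash
# Sketch: append the four cluster entries to a hosts file, skipping any
# hostname that is already present so the function can be re-run safely.
# The target file defaults to /etc/hosts; pass another path to try it out.
add_cluster_hosts() {
    local hosts_file="${1:-/etc/hosts}"
    local entry ip name
    for entry in \
        "172.16.20.110 controller" \
        "172.16.20.120 compute" \
        "172.16.20.130 network-node" \
        "172.16.20.140 block-storage"; do
        ip="${entry%% *}"     # text before the first space
        name="${entry##* }"   # text after the last space
        # -w matches the name as a whole word, -q keeps grep silent
        if ! grep -qw -- "$name" "$hosts_file" 2>/dev/null; then
            printf '%s %s\n' "$ip" "$name" >> "$hosts_file"
        fi
    done
}
# Usage (as root): add_cluster_hosts
```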

1.3.2 Set the hostname

# hostnamectl set-hostname XXX

(Replace XXX with controller, compute, network-node or block-storage, matching /etc/hosts.)

1.3.3 Disable firewalld

# systemctl stop firewalld

# systemctl disable firewalld

1.3.4 Disable SELinux

# vi /etc/selinux/config

SELINUX=disabled

# reboot
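The SELinux edit can also be done non-interactively with sed. A minimal sketch, with a hypothetical function name; it only rewrites the SELINUX= line and leaves SELINUXTYPE= alone. The change still needs the reboot above; "setenforce 0" switches a running system to permissive mode in the meantime (it is not called here).

```bash
#!/usr/bin/env bash
# Sketch: set SELINUX=disabled without opening an editor.
# The config path defaults to /etc/selinux/config.
disable_selinux() {
    local config="${1:-/etc/selinux/config}"
    if grep -q '^SELINUX=' "$config"; then
        # "^SELINUX=" does not match "SELINUXTYPE=", so only one line changes
        sed -i 's/^SELINUX=.*/SELINUX=disabled/' "$config"
    else
        echo 'SELINUX=disabled' >> "$config"
    fi
}
# Usage (as root): disable_selinux && reboot
```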

1.3.5 Configure a static IP

# vi /etc/sysconfig/network-scripts/ifcfg-ens3X

(ens3X is the interface name; the per-node settings are listed in each node's section below.)

1.3.6 Configure yum repositories

# cp /etc/yum.repos.d/CentOS-Base.repo /etc/yum.repos.d/CentOS-Base.repo.bak

# curl -o /etc/yum.repos.d/CentOS-Base.repo http://mirrors.aliyun.com/repo/Centos-7.repo

# vi /etc/yum.repos.d/openstack-stein.repo

[openstack-stein]

name=openstack-stein

baseurl=https://mirrors.aliyun.com/centos/7/cloud/x86_64/openstack-stein/

enabled=1

gpgcheck=0

[qemu-kvm]

name=qemu-kvm

baseurl= https://mirrors.aliyun.com/centos/7/virt/x86_64/kvm-common/

enabled=1

gpgcheck=0
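The repository file above can be written in one step with a heredoc, which avoids stray spaces in the baseurl lines. A minimal sketch; the function name is hypothetical and the target path defaults to the file used above.

```bash
#!/usr/bin/env bash
# Sketch: write openstack-stein.repo exactly as listed above.
write_stein_repo() {
    local repo_file="${1:-/etc/yum.repos.d/openstack-stein.repo}"
    # The quoted 'EOF' stops the shell from expanding anything in the body
    cat > "$repo_file" <<'EOF'
[openstack-stein]
name=openstack-stein
baseurl=https://mirrors.aliyun.com/centos/7/cloud/x86_64/openstack-stein/
enabled=1
gpgcheck=0

[qemu-kvm]
name=qemu-kvm
baseurl=https://mirrors.aliyun.com/centos/7/virt/x86_64/kvm-common/
enabled=1
gpgcheck=0
EOF
}
# Usage (as root): write_stein_repo && yum clean all && yum makecache
```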

1.3.7 Install base packages

# yum install -y python-openstackclient openstack-selinux chrony

1.3.8 Create the OpenStack admin credentials file (used on the controller node)

# vi ~/admin-openrc.sh

export OS_AUTH_TYPE=password

export OS_USERNAME=admin

export OS_PASSWORD=123123

export OS_PROJECT_NAME=admin

export OS_USER_DOMAIN_NAME=Default

export OS_PROJECT_DOMAIN_NAME=Default

export OS_AUTH_URL=http://controller:5000/v3

export OS_IDENTITY_API_VERSION=3

export OS_IMAGE_API_VERSION=2
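A sketch of creating the same credentials file from a script and checking it in a subshell, so the current shell's environment is not changed. Both function names are hypothetical; restricting the file to mode 600 is an added precaution because it stores the admin password.

```bash
#!/usr/bin/env bash
# Sketch: write admin-openrc.sh (default ~/admin-openrc.sh) as listed above.
write_admin_openrc() {
    local rc="${1:-$HOME/admin-openrc.sh}"
    cat > "$rc" <<'EOF'
export OS_AUTH_TYPE=password
export OS_USERNAME=admin
export OS_PASSWORD=123123
export OS_PROJECT_NAME=admin
export OS_USER_DOMAIN_NAME=Default
export OS_PROJECT_DOMAIN_NAME=Default
export OS_AUTH_URL=http://controller:5000/v3
export OS_IDENTITY_API_VERSION=3
export OS_IMAGE_API_VERSION=2
EOF
    # The file holds a password, so keep it readable by its owner only
    chmod 600 "$rc"
}

# Print the auth URL the file sets, sourcing it inside a subshell
openrc_auth_url() {
    ( . "$1" && printf '%s\n' "$OS_AUTH_URL" )
}
```

Once Keystone is running (2.8), "source admin-openrc.sh" followed by "openstack token issue" is a quick check that the credentials work.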

II. Controller node

2.1 Components to install

Chrony (NTP time synchronization), MariaDB (database), RabbitMQ (message queue)

Memcached (token caching), etcd (distributed key-value store)

Keystone (Identity service), Glance (Image service)

Nova (Compute service: nova-api, nova-conductor, nova-novncproxy, nova-scheduler, nova-console, openstack-nova-placement-api)

Neutron (Networking service: neutron, neutron-ml2, neutronclient)

Horizon (Dashboard, the web management UI)

2.2 Configure the network interfaces

2.2.1 NIC 1

# vi /etc/sysconfig/network-scripts/ifcfg-ens33

TYPE=Ethernet

PROXY_METHOD=none

BROWSER_ONLY=no

BOOTPROTO=static

DEFROUTE=yes

IPV4_FAILURE_FATAL=no

IPV6INIT=yes

IPV6_AUTOCONF=yes

IPV6_DEFROUTE=yes

IPV6_FAILURE_FATAL=no

IPV6_ADDR_GEN_MODE=stable-privacy

NAME=ens33

DEVICE=ens33

ONBOOT=yes

IPADDR=172.16.20.110

NETMASK=255.255.255.0

2.2.2 NIC 2

# vi /etc/sysconfig/network-scripts/ifcfg-ens34

TYPE=Ethernet

PROXY_METHOD=none

BROWSER_ONLY=no

BOOTPROTO=static

DEFROUTE=yes

IPV4_FAILURE_FATAL=no

IPV6INIT=yes

IPV6_AUTOCONF=yes

IPV6_DEFROUTE=yes

IPV6_FAILURE_FATAL=no

IPV6_ADDR_GEN_MODE=stable-privacy

NAME=ens34

DEVICE=ens34

ONBOOT=yes

IPADDR=172.16.30.10

NETMASK=255.255.255.0

# systemctl restart network
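The Host-Only ifcfg files in this guide differ mainly in device name and address, so they can be generated. A minimal sketch with a hypothetical function name; it writes only the keys needed for a static IPv4 address and omits the PROXY_METHOD and IPv6 lines shown above.

```bash
#!/usr/bin/env bash
# Sketch: generate a minimal static ifcfg file.
# Arguments: device, IP, netmask (default /24), output directory.
write_static_ifcfg() {
    local dev="$1" ip="$2" mask="${3:-255.255.255.0}"
    local dir="${4:-/etc/sysconfig/network-scripts}"
    # Unquoted EOF so $dev, $ip and $mask are expanded
    cat > "$dir/ifcfg-$dev" <<EOF
TYPE=Ethernet
BOOTPROTO=static
NAME=$dev
DEVICE=$dev
ONBOOT=yes
IPADDR=$ip
NETMASK=$mask
EOF
}
# Controller example (as root):
#   write_static_ifcfg ens33 172.16.20.110
#   write_static_ifcfg ens34 172.16.30.10
#   systemctl restart network
```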

2.3 Configure time synchronization (chrony)

# vi /etc/chrony.conf

#server 0.centos.pool.ntp.org iburst

#server 1.centos.pool.ntp.org iburst

#server 2.centos.pool.ntp.org iburst

#server 3.centos.pool.ntp.org iburst

server cn.ntp.org.cn iburst

allow 172.16.20.0/24

# systemctl enable chronyd.service

# systemctl start chronyd.service
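The other nodes also list Chrony but are not shown being configured. Assuming they follow the approach of the official installation guide and sync from the controller (which the "allow 172.16.20.0/24" line above permits), a sketch like the following comments out their existing server lines and adds "server controller iburst". The function name is hypothetical.

```bash
#!/usr/bin/env bash
# Sketch (assumption): make a non-controller node sync from the controller.
# The config path defaults to /etc/chrony.conf.
point_chrony_at_controller() {
    local conf="${1:-/etc/chrony.conf}"
    # Comment out every active "server" line except the controller one
    sed -i '/^server controller /!s/^server /#server /' "$conf"
    # Append the controller line only if it is not there yet
    grep -qx 'server controller iburst' "$conf" || \
        echo 'server controller iburst' >> "$conf"
}
# Then: systemctl enable chronyd.service && systemctl restart chronyd.service
# Check the time source with: chronyc sources
```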

2.4 Install the MariaDB database

2.4.1 Install the packages

# yum install mariadb mariadb-server python2-PyMySQL -y

2.4.2 Configure

# cp /etc/my.cnf.d/openstack.cnf /etc/my.cnf.d/openstack.cnf.bak

Note: if this file does not exist, skip the backup and create it.

# vi /etc/my.cnf.d/openstack.cnf

[mysqld]

bind-address = 172.16.20.110

default-storage-engine = innodb

innodb_file_per_table = on

max_connections = 4096

collation-server = utf8_general_ci

character-set-server = utf8

2.4.3 Start the service

# systemctl enable mariadb.service

# systemctl start mariadb.service

2.4.4 Run the security script

# mysql_secure_installation

Set root password? [Y/n] y

New password:    ## the MariaDB root password; 123123 is used here

Re-enter new password:

Remove anonymous users? [Y/n] y

Disallow root login remotely? [Y/n] n

Remove test database and access to it? [Y/n] y

Reload privilege tables now? [Y/n] y

2.5 Install the RabbitMQ message queue

2.5.1 Install the packages

# yum install -y rabbitmq-server

2.5.2 Start the service and enable it at boot

# systemctl enable rabbitmq-server.service

# systemctl start rabbitmq-server.service

2.5.3 Add the openstack user and grant it configure, write and read access

# rabbitmqctl add_user openstack 123123

(123123 is the password for the message queue.)

# rabbitmqctl set_permissions openstack ".*" ".*" ".*"

2.6 Install Memcached (token cache)

2.6.1 Install the packages

# yum install memcached python-memcached -y

2.6.2 Configure

# cp /etc/sysconfig/memcached /etc/sysconfig/memcached.bak

# vi /etc/sysconfig/memcached

OPTIONS="-l 127.0.0.1,::1,controller"

2.6.3 Start the service

# systemctl enable memcached.service

# systemctl start memcached.service

2.7 Install etcd

2.7.1 Install the packages

# yum install etcd -y

2.7.2 Configure

# cp /etc/etcd/etcd.conf /etc/etcd/etcd.conf.bak

# vi /etc/etcd/etcd.conf

ETCD_DATA_DIR="/var/lib/etcd/default.etcd"

ETCD_LISTEN_PEER_URLS="http://172.16.20.110:2380"

ETCD_LISTEN_CLIENT_URLS="http://172.16.20.110:2379,http://127.0.0.1:2379"

ETCD_NAME="controller"

#[Clustering]

ETCD_INITIAL_ADVERTISE_PEER_URLS="http://172.16.20.110:2380"

ETCD_ADVERTISE_CLIENT_URLS="http://172.16.20.110:2379"

ETCD_INITIAL_CLUSTER="controller=http://172.16.20.110:2380"

ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster-01"

ETCD_INITIAL_CLUSTER_STATE="new"

2.7.3 Start the service

# systemctl enable etcd

# systemctl start etcd

2.7.4 Check cluster health

# etcdctl cluster-health
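If cluster-health reports errors, a malformed URL in etcd.conf (a stray space or typographic quote) is a common cause. A sketch that generates the whole file from the controller's management IP; the function name is hypothetical, and it overwrites the packaged file (the backup from 2.7.2 keeps the original).

```bash
#!/usr/bin/env bash
# Sketch: write etcd.conf for a single-member cluster.
# Arguments: IP, member name, output file (default /etc/etcd/etcd.conf).
write_etcd_conf() {
    local ip="${1:-172.16.20.110}" name="${2:-controller}"
    local conf="${3:-/etc/etcd/etcd.conf}"
    cat > "$conf" <<EOF
#[Member]
ETCD_DATA_DIR="/var/lib/etcd/default.etcd"
ETCD_LISTEN_PEER_URLS="http://$ip:2380"
ETCD_LISTEN_CLIENT_URLS="http://$ip:2379,http://127.0.0.1:2379"
ETCD_NAME="$name"
#[Clustering]
ETCD_INITIAL_ADVERTISE_PEER_URLS="http://$ip:2380"
ETCD_ADVERTISE_CLIENT_URLS="http://$ip:2379"
ETCD_INITIAL_CLUSTER="$name=http://$ip:2380"
ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster-01"
ETCD_INITIAL_CLUSTER_STATE="new"
EOF
}
# Usage (as root): write_etcd_conf && systemctl restart etcd && etcdctl cluster-health
```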

2.8 Install Keystone

2.8.1 Create the Keystone database

# mysql -u root -p123123

MariaDB [(none)]> CREATE DATABASE keystone;

MariaDB [(none)]> GRANT ALL PRIVILEGES ON keystone.* TO 'keystone'@'localhost' IDENTIFIED BY '123123';

MariaDB [(none)]> GRANT ALL PRIVILEGES ON keystone.* TO 'keystone'@'%' IDENTIFIED BY '123123';

(123123 is the password Keystone uses to access the database.)

MariaDB [(none)]>exit
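The same CREATE DATABASE / GRANT pattern repeats for Glance, Nova, Neutron and Cinder. A sketch of a helper (hypothetical name) that prints the statements so they can be piped into the mysql client; "IF NOT EXISTS" is an addition that makes re-running harmless.

```bash
#!/usr/bin/env bash
# Sketch: print the SQL for one service database.
# Arguments: database name, database user, password.
service_db_sql() {
    local db="$1" user="$2" pass="$3"
    cat <<EOF
CREATE DATABASE IF NOT EXISTS $db;
GRANT ALL PRIVILEGES ON $db.* TO '$user'@'localhost' IDENTIFIED BY '$pass';
GRANT ALL PRIVILEGES ON $db.* TO '$user'@'%' IDENTIFIED BY '$pass';
EOF
}
# Examples (123123 is the root and service password used in this guide):
#   service_db_sql keystone keystone 123123 | mysql -u root -p123123
#   for db in nova_api nova nova_cell0; do service_db_sql "$db" nova 123123; done | mysql -u root -p123123
```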

2.8.2 Install the packages

# yum install openstack-keystone httpd mod_wsgi -y

2.8.3 Configure

# cp /etc/keystone/keystone.conf /etc/keystone/keystone.conf.bak

# vi /etc/keystone/keystone.conf

[database]

connection = mysql+pymysql://keystone:123123@controller/keystone

(123123 is the keystone database password set in 2.8.1.)

[token]

provider = fernet

2.8.4 Populate the Identity service database

# su -s /bin/sh -c "keystone-manage db_sync" keystone

2.8.5 Initialize the Fernet key repositories

# keystone-manage fernet_setup --keystone-user keystone --keystone-group keystone

# keystone-manage credential_setup --keystone-user keystone --keystone-group keystone

2.8.6 Bootstrap the Identity service

# keystone-manage bootstrap --bootstrap-password 123123 --bootstrap-admin-url http://controller:5000/v3/ --bootstrap-internal-url http://controller:5000/v3/ --bootstrap-public-url http://controller:5000/v3/ --bootstrap-region-id RegionOne

(123123 is the admin password and must match OS_PASSWORD in admin-openrc.sh.)

2.8.7 Configure the Apache HTTP server

# cp /etc/httpd/conf/httpd.conf /etc/httpd/conf/httpd.conf.bak

# vi /etc/httpd/conf/httpd.conf

ServerName controller

2.8.8 Create the symlink

# ln -s /usr/share/keystone/wsgi-keystone.conf /etc/httpd/conf.d/

2.8.9 Start the service and enable it at boot

# systemctl enable httpd.service

# systemctl start httpd.service

2.8.10 Create a domain, projects, users and roles

# source admin-openrc.sh

# openstack domain create --description "An Example Domain" example

# openstack project create --domain default --description "Service Project" service

# openstack project create --domain default --description "Demo Project" myproject

# openstack user create --domain default --password-prompt myuser

# openstack role create myrole

# openstack role add --project myproject --user myuser myrole

2.9 Install Glance

2.9.1 Create the database

# mysql -u root -p123123

MariaDB [(none)]> CREATE DATABASE glance;

MariaDB [(none)]> GRANT ALL PRIVILEGES ON glance.* TO 'glance'@'localhost' IDENTIFIED BY '123123';

MariaDB [(none)]> GRANT ALL PRIVILEGES ON glance.* TO 'glance'@'%' IDENTIFIED BY '123123';

(123123 is the password Glance uses to access the database.)

MariaDB [(none)]>exit

2.9.2 Create the service credentials

# source admin-openrc.sh

2.9.2.1 Create the glance user

# openstack user create --domain default --password-prompt glance

User Password:123123

Repeat User Password:123123

2.9.2.2 Add the admin role to the glance user in the service project

# openstack role add --project service --user glance admin

2.9.2.3 Create the glance service entity

# openstack service create --name glance --description "OpenStack Image" image

2.9.2.4 Create the Image service API endpoints

# openstack endpoint create --region RegionOne image public http://controller:9292

# openstack endpoint create --region RegionOne image internal http://controller:9292

# openstack endpoint create --region RegionOne image admin http://controller:9292
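Every service in this guide gets the same three endpoints (public, internal, admin) with one URL. A sketch of a loop that creates them; the function name and the DRY_RUN switch are hypothetical additions, and with DRY_RUN=1 the commands are printed instead of executed so they can be reviewed first. admin-openrc.sh must be sourced before a real run.

```bash
#!/usr/bin/env bash
# Sketch: create public, internal and admin endpoints for one service.
# Arguments: service type (image, compute, placement, network, ...), URL.
create_endpoints() {
    local service="$1" url="$2" iface
    for iface in public internal admin; do
        if [ "${DRY_RUN:-0}" = 1 ]; then
            # Only show what would be run
            echo openstack endpoint create --region RegionOne "$service" "$iface" "$url"
        else
            openstack endpoint create --region RegionOne "$service" "$iface" "$url"
        fi
    done
}
# Usage:
#   create_endpoints image http://controller:9292
#   create_endpoints compute http://controller:8774/v2.1
#   openstack endpoint list --service image
```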

2.9.3 Install the packages

# yum install openstack-glance -y

2.9.4 Configure

# cp /etc/glance/glance-api.conf /etc/glance/glance-api.conf.bak

# vi /etc/glance/glance-api.conf

[database]

connection = mysql+pymysql://glance:123123@controller/glance

(123123 is the glance database password.)

[keystone_authtoken]

www_authenticate_uri = http://controller:5000

auth_url = http://controller:5000

memcached_servers = controller:11211

auth_type = password

project_domain_name = Default

user_domain_name = Default

project_name = service

username = glance

password = 123123

(123123 is the glance user's Keystone password.)

[paste_deploy]

flavor = keystone

[glance_store]

stores = file,http

default_store = file

filesystem_store_datadir = /var/lib/glance/images/

# cp /etc/glance/glance-registry.conf /etc/glance/glance-registry.conf.bak

# vi /etc/glance/glance-registry.conf

[database]

connection = mysql+pymysql://glance:123123@controller/glance

(123123 is the glance database password.)

[keystone_authtoken]

www_authenticate_uri = http://controller:5000

auth_url = http://controller:5000

memcached_servers = controller:11211

auth_type = password

project_domain_name = Default

user_domain_name = Default

project_name = service

username = glance

password = 123123

(123123 is the glance user's Keystone password.)

[paste_deploy]

flavor = keystone
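The [keystone_authtoken] block above is repeated for several services with only the username changing. A sketch of a helper (hypothetical name) that prints it for a given service user, mirroring the glance blocks; it prints the text for pasting and does not merge it into an existing file.

```bash
#!/usr/bin/env bash
# Sketch: print a [keystone_authtoken] section for one service user.
# Arguments: username, password (default 123123 as in this guide).
authtoken_section() {
    local user="$1" pass="${2:-123123}"
    cat <<EOF
[keystone_authtoken]
www_authenticate_uri = http://controller:5000
auth_url = http://controller:5000
memcached_servers = controller:11211
auth_type = password
project_domain_name = Default
user_domain_name = Default
project_name = service
username = $user
password = $pass
EOF
}
# Example: authtoken_section glance
```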

2.9.5 Populate the Image service database

# su -s /bin/sh -c "glance-manage db_sync" glance

2.9.6 Start the services and enable them at boot

# systemctl enable openstack-glance-api.service openstack-glance-registry.service

# systemctl start openstack-glance-api.service openstack-glance-registry.service

2.9.7 Verify the Glance image service

# source admin-openrc.sh

# curl -O http://download.cirros-cloud.net/0.4.0/cirros-0.4.0-x86_64-disk.img

# openstack image create "cirros" --file cirros-0.4.0-x86_64-disk.img --disk-format qcow2 --container-format bare --public

# openstack image list

2.10 Install Nova

2.10.1 Create the Nova databases

# mysql -u root -p123123

MariaDB [(none)]> CREATE DATABASE nova_api;

MariaDB [(none)]> CREATE DATABASE nova;

MariaDB [(none)]> CREATE DATABASE nova_cell0;

MariaDB [(none)]> GRANT ALL PRIVILEGES ON nova_api.* TO 'nova'@'localhost' IDENTIFIED BY '123123';

MariaDB [(none)]> GRANT ALL PRIVILEGES ON nova_api.* TO 'nova'@'%' IDENTIFIED BY '123123';

MariaDB [(none)]> GRANT ALL PRIVILEGES ON nova.* TO 'nova'@'localhost' IDENTIFIED BY '123123';

MariaDB [(none)]> GRANT ALL PRIVILEGES ON nova.* TO 'nova'@'%' IDENTIFIED BY '123123';

MariaDB [(none)]> GRANT ALL PRIVILEGES ON nova_cell0.* TO 'nova'@'localhost' IDENTIFIED BY '123123';

MariaDB [(none)]> GRANT ALL PRIVILEGES ON nova_cell0.* TO 'nova'@'%' IDENTIFIED BY '123123';

MariaDB [(none)]>exit

2.10.2 Create the Compute service credentials

# source admin-openrc.sh

# openstack user create --domain default --password-prompt nova

User Password:123123

Repeat User Password:123123

# openstack role add --project service --user nova admin

# openstack service create --name nova --description "OpenStack Compute" compute

2.10.3 Create the Compute API service endpoints

# openstack endpoint create --region RegionOne compute public http://controller:8774/v2.1

# openstack endpoint create --region RegionOne compute internal http://controller:8774/v2.1

# openstack endpoint create --region RegionOne compute admin http://controller:8774/v2.1

2.10.4 Create the Placement service credentials and endpoints

# openstack user create --domain default --password-prompt placement

User Password:123123

Repeat User Password:123123

# openstack role add --project service --user placement admin

# openstack service create --name placement --description "Placement API" placement

# openstack endpoint create --region RegionOne placement public http://controller:8778

# openstack endpoint create --region RegionOne placement internal http://controller:8778

# openstack endpoint create --region RegionOne placement admin http://controller:8778

2.10.5 Install the packages

# yum install openstack-nova-api openstack-nova-conductor openstack-nova-novncproxy openstack-nova-scheduler openstack-nova-placement-api -y

2.10.6 Configure

# cp /etc/nova/nova.conf /etc/nova/nova.conf.bak

# vi /etc/nova/nova.conf

[DEFAULT]

enabled_apis = osapi_compute,metadata

transport_url = rabbit://openstack:123123@controller

my_ip = 172.16.20.110

(my_ip is this node's management-network IP, here the controller's.)

use_neutron = true

linuxnet_interface_driver = nova.network.linux_net.LinuxOVSInterfaceDriver

firewall_driver = nova.virt.firewall.NoopFirewallDriver

[api_database]

connection = mysql+pymysql://nova:123123@controller/nova_api

[database]

connection = mysql+pymysql://nova:123123@controller/nova

[api]

auth_strategy = keystone

[keystone_authtoken]

auth_url = http://controller:5000/v3

memcached_servers = controller:11211

auth_type = password

project_domain_name = Default

user_domain_name = Default

project_name = service

username = nova

password = 123123

(123123 is the nova user's Keystone password.)

[vnc]

enabled = true

server_listen = $my_ip

server_proxyclient_address = $my_ip

[glance]

api_servers = http://controller:9292

[oslo_concurrency]

lock_path = /var/lib/nova/tmp

[placement]

region_name = RegionOne

project_domain_name = Default

project_name = service

auth_type = password

user_domain_name = Default

auth_url = http://controller:5000/v3

username = placement

password = 123123

(123123 is the placement user's Keystone password.)

# cp /etc/httpd/conf.d/00-nova-placement-api.conf /etc/httpd/conf.d/00-nova-placement-api.conf.bak

# vi /etc/httpd/conf.d/00-nova-placement-api.conf

(add the following block to the file)

<Directory /usr/bin>

   <IfVersion >= 2.4>

      Require all granted

   </IfVersion>

   <IfVersion < 2.4>

      Order allow,deny

      Allow from all

   </IfVersion>

</Directory>

2.10.7 Restart httpd

# systemctl restart httpd

2.10.8 Populate the nova-api database

# su -s /bin/sh -c "nova-manage api_db sync" nova

2.10.9 Register the cell0 database

# su -s /bin/sh -c "nova-manage cell_v2 map_cell0" nova

2.10.10 Create the cell1 cell

# su -s /bin/sh -c "nova-manage cell_v2 create_cell --name=cell1 --verbose" nova

2.10.11 Populate the nova database

# su -s /bin/sh -c "nova-manage db sync" nova

2.10.12 Verify that cell0 and cell1 are registered correctly

# su -s /bin/sh -c "nova-manage cell_v2 list_cells" nova

2.10.13 Start the services

# systemctl enable openstack-nova-api.service openstack-nova-scheduler.service openstack-nova-conductor.service openstack-nova-novncproxy.service

# systemctl start openstack-nova-api.service openstack-nova-scheduler.service openstack-nova-conductor.service openstack-nova-novncproxy.service

2.11 Install Neutron

2.11.1 Configure kernel networking parameters

2.11.1.1 Load the bridge kernel modules

# modprobe -a bridge br_netfilter

2.11.1.2 Configure

# cp /etc/sysctl.conf /etc/sysctl.conf.bak

# vi /etc/sysctl.conf

net.ipv4.conf.all.rp_filter=0

net.ipv4.conf.default.rp_filter=0

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

2.11.1.3 Apply the settings

# sysctl -p
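modprobe only loads br_netfilter until the next reboot, and on CentOS 7 the net.bridge.* keys above are provided by that module, so "sysctl -p" fails without it. systemd loads the modules listed in /etc/modules-load.d/*.conf at boot; a minimal sketch (hypothetical function name) that makes the module persistent. The same applies to the compute (3.4.1) and network (4.2.2) nodes.

```bash
#!/usr/bin/env bash
# Sketch: load br_netfilter at every boot.
# The directory defaults to /etc/modules-load.d.
persist_br_netfilter() {
    local dir="${1:-/etc/modules-load.d}"
    printf '%s\n' br_netfilter > "$dir/br_netfilter.conf"
}
# Check afterwards:
#   lsmod | grep br_netfilter
#   sysctl net.bridge.bridge-nf-call-iptables
```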

2.11.2 Create the neutron database

# mysql -u root -p123123

MariaDB [(none)]> CREATE DATABASE neutron;

MariaDB [(none)]> GRANT ALL PRIVILEGES ON neutron.* TO 'neutron'@'localhost' IDENTIFIED BY '123123';

MariaDB [(none)]> GRANT ALL PRIVILEGES ON neutron.* TO 'neutron'@'%' IDENTIFIED BY '123123';

MariaDB [(none)]> exit

2.11.3 Create the neutron user

# source admin-openrc.sh

# openstack user create --domain default --password-prompt neutron

2.11.4 Add the admin role to the neutron user

# openstack role add --project service --user neutron admin

2.11.5 Create the neutron service entity

# openstack service create --name neutron --description "OpenStack Networking" network

2.11.6 Create the Networking service API endpoints

# openstack endpoint create --region RegionOne network public http://controller:9696

# openstack endpoint create --region RegionOne network internal http://controller:9696

# openstack endpoint create --region RegionOne network admin http://controller:9696

2.11.7 Install the packages

# yum install openstack-neutron openstack-neutron-ml2 ebtables -y

2.11.8 Configure

2.11.8.1 Configure neutron.conf

# cp /etc/neutron/neutron.conf /etc/neutron/neutron.conf.bak

# vi /etc/neutron/neutron.conf

[nova]

auth_url = http://controller:5000

auth_type = password

project_domain_name = default

user_domain_name = default

region_name = RegionOne

project_name = service

username = nova

password = 123123

[DEFAULT]

core_plugin = ml2

service_plugins = router

allow_overlapping_ips = true

transport_url = rabbit://openstack:123123@controller

auth_strategy = keystone

notify_nova_on_port_status_changes = true

notify_nova_on_port_data_changes = true

[database]

connection = mysql+pymysql://neutron:123123@controller/neutron

[keystone_authtoken]

www_authenticate_uri = http://controller:5000

auth_url = http://controller:5000

memcached_servers = controller:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = neutron

password = 123123

[oslo_concurrency]

lock_path = /var/lib/neutron/tmp

2.11.8.2 Configure ML2

# cp /etc/neutron/plugins/ml2/ml2_conf.ini /etc/neutron/plugins/ml2/ml2_conf.ini.bak

# vi /etc/neutron/plugins/ml2/ml2_conf.ini

[ml2]

type_drivers = flat,vlan,gre,vxlan

tenant_network_types = vxlan

mechanism_drivers = openvswitch,l2population

extension_drivers = port_security

[ml2_type_flat]

flat_networks = physnet

[ml2_type_vxlan]

vni_ranges = 1:1000

[securitygroup]

#enable_ipset = true

firewall_driver = neutron.agent.linux.iptables_firewall.OVSHybridIptablesFirewallDriver

enable_security_group = True

2.11.8.3 Configure nova.conf

# vi /etc/nova/nova.conf

[neutron]

url = http://controller:9696

auth_url = http://controller:5000

auth_type = password

project_domain_name = default

user_domain_name = default

region_name = RegionOne

project_name = service

username = neutron

password = 123123

(123123 is the neutron user's Keystone password, which Nova uses to reach Neutron.)

service_metadata_proxy = true

metadata_proxy_shared_secret = 123123

2.11.8.4 Configure the metadata agent

# cp /etc/neutron/metadata_agent.ini /etc/neutron/metadata_agent.ini.bak

# vi /etc/neutron/metadata_agent.ini

[DEFAULT]

nova_metadata_host = controller

metadata_proxy_shared_secret = 123123
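metadata_proxy_shared_secret must be identical in nova.conf ([neutron] section) and in metadata_agent.ini on every node that runs the metadata agent (here the controller and the network node). 123123 is fine for a lab; a minimal sketch (hypothetical function name) that prints a random 20-character hex string that could be used instead.

```bash
#!/usr/bin/env bash
# Sketch: print a random shared secret (10 random bytes as 20 hex characters).
gen_metadata_secret() {
    # od prints the bytes as hex pairs; tr strips the spaces and newline
    head -c 10 /dev/urandom | od -An -tx1 | tr -d ' \n'
    echo
}
# Usage: SECRET="$(gen_metadata_secret)", then put the same value in both files.
```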

2.11.9 Create the plugin.ini symlink to the ML2 plugin configuration

# ln -s /etc/neutron/plugins/ml2/ml2_conf.ini /etc/neutron/plugin.ini

2.11.10 Populate the database

# su -s /bin/sh -c "neutron-db-manage --config-file /etc/neutron/neutron.conf --config-file /etc/neutron/plugins/ml2/ml2_conf.ini upgrade head" neutron

2.11.11 Start the services

# systemctl restart openstack-nova-api.service

# systemctl enable neutron-server.service neutron-metadata-agent.service

# systemctl start neutron-server.service neutron-metadata-agent.service

2.12 Install Horizon

2.12.1 Install the packages

# yum install openstack-dashboard -y

2.12.2 Configure

# cp /etc/openstack-dashboard/local_settings /etc/openstack-dashboard/local_settings.bak

# vi /etc/openstack-dashboard/local_settings

OPENSTACK_HOST = "controller"

ALLOWED_HOSTS = ['*', 'two.example.com']

SESSION_ENGINE = 'django.contrib.sessions.backends.cache'

CACHES = {

    'default': {

        'BACKEND': 'django.core.cache.backends.memcached.MemcachedCache',

        'LOCATION': 'controller:11211',

    }

}

OPENSTACK_KEYSTONE_URL = "http://%s:5000/v3" % OPENSTACK_HOST

OPENSTACK_KEYSTONE_MULTIDOMAIN_SUPPORT = True

OPENSTACK_API_VERSIONS = {

    "identity": 3,

    "image": 2,

    "volume": 2,

}

OPENSTACK_KEYSTONE_DEFAULT_DOMAIN = "Default"

OPENSTACK_KEYSTONE_DEFAULT_ROLE = "user"

TIME_ZONE = "TIME_ZONE"

(Replace the TIME_ZONE value with a time zone identifier, for example "Asia/Shanghai".)

# cp /etc/httpd/conf.d/openstack-dashboard.conf /etc/httpd/conf.d/openstack-dashboard.conf.bak

# vi /etc/httpd/conf.d/openstack-dashboard.conf

WSGIApplicationGroup %{GLOBAL}

2.12.3 Start the services

# systemctl restart httpd.service memcached.service

2.13 Install Cinder

2.13.1 Create the database

# mysql -u root -p123123

MariaDB [(none)]> CREATE DATABASE cinder;

MariaDB [(none)]> GRANT ALL PRIVILEGES ON cinder.* TO 'cinder'@'localhost' IDENTIFIED BY '123123';

MariaDB [(none)]> GRANT ALL PRIVILEGES ON cinder.* TO 'cinder'@'%' IDENTIFIED BY '123123';

MariaDB [(none)]>exit

2.13.2 Create the cinder user

# source admin-openrc.sh

# openstack user create --domain default --password-prompt cinder

2.13.3 Add the admin role to the cinder user

# openstack role add --project service --user cinder admin

2.13.4 Create the cinderv2 and cinderv3 service entities

# openstack service create --name cinderv2 --description "OpenStack Block Storage" volumev2

# openstack service create --name cinderv3 --description "OpenStack Block Storage" volumev3

2.13.5 Create the Block Storage service API endpoints

# openstack endpoint create --region RegionOne volumev2 public http://controller:8776/v2/%\(project_id\)s

# openstack endpoint create --region RegionOne volumev2 internal http://controller:8776/v2/%\(project_id\)s

# openstack endpoint create --region RegionOne volumev2 admin http://controller:8776/v2/%\(project_id\)s

# openstack endpoint create --region RegionOne volumev3 public http://controller:8776/v3/%\(project_id\)s

# openstack endpoint create --region RegionOne volumev3 internal http://controller:8776/v3/%\(project_id\)s

# openstack endpoint create --region RegionOne volumev3 admin http://controller:8776/v3/%\(project_id\)s

2.13.6 Install the packages

# yum install openstack-cinder -y

2.13.7 Configure

# cp /etc/cinder/cinder.conf /etc/cinder/cinder.conf.bak

# vi /etc/cinder/cinder.conf

[DEFAULT]

transport_url = rabbit://openstack:123123@controller

auth_strategy = keystone

my_ip = 172.16.20.110

(my_ip is this node's management-network IP, here the controller's.)

[database]

connection = mysql+pymysql://cinder:123123@controller/cinder

[keystone_authtoken]

www_authenticate_uri = http://controller:5000

auth_url = http://controller:5000

memcached_servers = controller:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = cinder

password = 123123

[oslo_concurrency]

lock_path = /var/lib/cinder/tmp

# vi /etc/nova/nova.conf

[cinder]

os_region_name = RegionOne
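nova.conf, neutron.conf and cinder.conf on the controller must all carry the same transport_url, and a typo in one password is easy to miss. A sketch of a consistency check (hypothetical function name): it prints each file's value and returns 1 if a file has no transport_url line or the values differ.

```bash
#!/usr/bin/env bash
# Sketch: compare the transport_url set in each config file given.
check_transport_urls() {
    local f url first=""
    for f in "$@"; do
        # First transport_url line, with the key and spaces around "=" removed
        url="$(grep -m1 '^transport_url' "$f" | sed 's/^transport_url *= *//')"
        if [ -z "$url" ]; then
            echo "missing transport_url in $f" >&2
            return 1
        fi
        echo "$f: $url"
        if [ -z "$first" ]; then
            first="$url"
        elif [ "$url" != "$first" ]; then
            echo "mismatch in $f" >&2
            return 1
        fi
    done
}
# On the controller:
#   check_transport_urls /etc/nova/nova.conf /etc/neutron/neutron.conf /etc/cinder/cinder.conf
```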

2.13.8 Populate the Block Storage database

# su -s /bin/sh -c "cinder-manage db sync" cinder

2.13.9 Start the services

# systemctl restart openstack-nova-api.service

# systemctl enable openstack-cinder-api.service openstack-cinder-scheduler.service

# systemctl start openstack-cinder-api.service openstack-cinder-scheduler.service

III. Compute node

3.1 Components to install

Chrony (NTP time synchronization), Nova (Compute service: nova-compute)

Neutron (Networking service: openvswitch, neutron-l2-agent)

3.2 Configure the network interface

# vi /etc/sysconfig/network-scripts/ifcfg-ens33

TYPE=Ethernet

PROXY_METHOD=none

BROWSER_ONLY=no

BOOTPROTO=static

DEFROUTE=yes

IPV4_FAILURE_FATAL=no

IPV6INIT=yes

IPV6_AUTOCONF=yes

IPV6_DEFROUTE=yes

IPV6_FAILURE_FATAL=no

IPV6_ADDR_GEN_MODE=stable-privacy

NAME=ens33

DEVICE=ens33

ONBOOT=yes

IPADDR=172.16.20.120

NETMASK=255.255.255.0

# systemctl restart network

3.3 Install Nova

3.3.1 Install the packages

# yum install openstack-nova-compute -y

3.3.2 Configure

# cp /etc/nova/nova.conf /etc/nova/nova.conf.bak

# vi /etc/nova/nova.conf

[DEFAULT]

enabled_apis = osapi_compute,metadata

transport_url = rabbit://openstack:123123@controller

(123123 is the RabbitMQ password of the openstack user.)

my_ip = 172.16.20.120

use_neutron = true

linuxnet_interface_driver = nova.network.linux_net.LinuxOVSInterfaceDriver

firewall_driver = nova.virt.firewall.NoopFirewallDriver

[api]

auth_strategy = keystone

[keystone_authtoken]

auth_url = http://controller:5000/v3

memcached_servers = controller:11211

auth_type = password

project_domain_name = Default

user_domain_name = Default

project_name = service

username = nova

password = 123123

(123123 is the nova user's Keystone password.)

[vnc]

enabled = true

server_listen = 0.0.0.0

server_proxyclient_address = $my_ip

novncproxy_base_url = http://172.16.30.10:6080/vnc_auto.html

[glance]

api_servers = http://controller:9292

[oslo_concurrency]

lock_path = /var/lib/nova/tmp

[placement]

region_name = RegionOne

project_domain_name = Default

project_name = service

auth_type = password

user_domain_name = Default

auth_url = http://controller:5000/v3

username = placement

password = 123123

(123123 is the placement user's Keystone password.)

3.3.3 Check whether the VM supports hardware virtualization

# egrep -c '(vmx|svm)' /proc/cpuinfo

Only if the result is 0 (the CPU exposes no hardware virtualization), fall back to QEMU software emulation:

# vi /etc/nova/nova.conf

[libvirt]

virt_type = qemu
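The rule above can be applied automatically. A minimal sketch (hypothetical function name) that prints "kvm" when the CPU flags include vmx (Intel VT-x) or svm (AMD-V) and "qemu" otherwise. In VMware Workstation, passing VT-x through to the guest is controlled by the VM's processor option "Virtualize Intel VT-x/EPT or AMD-V/RVI".

```bash
#!/usr/bin/env bash
# Sketch: choose virt_type from the CPU flags.
# The cpuinfo path defaults to /proc/cpuinfo.
pick_virt_type() {
    local cpuinfo="${1:-/proc/cpuinfo}"
    # grep -c prints the number of matching lines (0 when none match)
    if [ "$(grep -E -c '(vmx|svm)' "$cpuinfo")" -gt 0 ]; then
        echo kvm
    else
        echo qemu
    fi
}
# Usage: echo "virt_type = $(pick_virt_type)"
```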

3.3.4 Start the services

# systemctl enable libvirtd.service openstack-nova-compute.service

# systemctl start libvirtd.service openstack-nova-compute.service

3.3.5 Add the compute node to the cell database (run on the controller node)

# source admin-openrc.sh

# openstack compute service list --service nova-compute

3.3.5.1 Discover compute hosts

# su -s /bin/sh -c "nova-manage cell_v2 discover_hosts --verbose" nova

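Every time a new compute node is added to the OpenStack deployment, run the following manually on the controller node: su -s /bin/sh -c "nova-manage cell_v2 discover_hosts --verbose" nova

Alternatively, add the following to nova.conf on the controller node to discover new hosts automatically (the interval is in seconds):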

# vi /etc/nova/nova.conf

[scheduler]

discover_hosts_in_cells_interval = 300

3.4 Install Neutron

3.4.1 Configure kernel networking parameters

# modprobe -a bridge br_netfilter

# cp /etc/sysctl.conf /etc/sysctl.conf.bak

# vi /etc/sysctl.conf

net.ipv4.conf.all.rp_filter=0

net.ipv4.conf.default.rp_filter=0

net.bridge.bridge-nf-call-iptables=1

net.bridge.bridge-nf-call-ip6tables=1

# sysctl -p

3.4.2 Install the packages

# yum install openstack-neutron openstack-neutron-ml2 openstack-neutron-openvswitch -y

3.4.3 Configure

3.4.3.1 Configure neutron.conf

# cp /etc/neutron/neutron.conf /etc/neutron/neutron.conf.bak

# vi /etc/neutron/neutron.conf

[DEFAULT]

auth_strategy = keystone

core_plugin = ml2

service_plugins = router

allow_overlapping_ips = True

transport_url = rabbit://openstack:123123@controller

[keystone_authtoken]

www_authenticate_uri = http://controller:5000

auth_url = http://controller:5000

memcached_servers = controller:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = neutron

password = 123123

[oslo_concurrency]

lock_path = /var/lib/neutron/tmp

3.4.3.2 Configure the ML2 plugin

# cp /etc/neutron/plugins/ml2/ml2_conf.ini /etc/neutron/plugins/ml2/ml2_conf.ini.bak

# vi /etc/neutron/plugins/ml2/ml2_conf.ini

[ml2]

type_drivers = flat,vlan,gre,vxlan

tenant_network_types = vxlan

mechanism_drivers = openvswitch,l2population

extension_drivers = port_security

[ml2_type_flat]

flat_networks = physnet

[ml2_type_vxlan]

vni_ranges = 1:1000

[securitygroup]

firewall_driver = neutron.agent.linux.iptables_firewall.OVSHybridIptablesFirewallDriver

enable_security_group = true

3.4.3.3 Configure the Open vSwitch agent

# cp /etc/neutron/plugins/ml2/openvswitch_agent.ini /etc/neutron/plugins/ml2/openvswitch_agent.ini.bak

# vi /etc/neutron/plugins/ml2/openvswitch_agent.ini

[ovs]

local_ip = 172.16.20.120

[agent]

tunnel_types = vxlan

l2_population = True

[securitygroup]

firewall_driver = neutron.agent.linux.iptables_firewall.OVSHybridIptablesFirewallDriver

enable_security_group = True
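The compute and network nodes use the same openvswitch_agent.ini apart from local_ip (the node's management IP) and, on the network node only, bridge_mappings. A sketch (hypothetical function name) that writes the file from those two values; it overwrites the packaged file, so keep the .bak copy made above.

```bash
#!/usr/bin/env bash
# Sketch: write openvswitch_agent.ini.
# Arguments: local IP, optional bridge mapping, output file
# (default /etc/neutron/plugins/ml2/openvswitch_agent.ini).
write_ovs_agent_ini() {
    local ip="$1" mapping="${2:-}"
    local out="${3:-/etc/neutron/plugins/ml2/openvswitch_agent.ini}"
    {
        echo "[ovs]"
        echo "local_ip = $ip"
        # bridge_mappings is only written when a mapping is given
        if [ -n "$mapping" ]; then
            echo "bridge_mappings = $mapping"
        fi
        echo "[agent]"
        echo "tunnel_types = vxlan"
        echo "l2_population = True"
        echo "[securitygroup]"
        echo "firewall_driver = neutron.agent.linux.iptables_firewall.OVSHybridIptablesFirewallDriver"
        echo "enable_security_group = True"
    } > "$out"
}
# Compute node:  write_ovs_agent_ini 172.16.20.120
# Network node:  write_ovs_agent_ini 172.16.20.130 physnet:br-ex
```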

3.4.3.4 Configure nova.conf

# vi /etc/nova/nova.conf

[neutron]

url = http://controller:9696

auth_url = http://controller:5000

auth_type = password

project_domain_name = default

user_domain_name = default

region_name = RegionOne

project_name = service

username = neutron

password = 123123

3.4.3.5 Start the services

# systemctl enable openvswitch.service neutron-openvswitch-agent.service

# systemctl start openvswitch.service neutron-openvswitch-agent.service

# systemctl restart openstack-nova-compute.service

IV. Network node

4.1 Components to install

Chrony (NTP time synchronization), Neutron (Networking service: openvswitch, neutron-l2-agent, neutron-l3-agent, metadata-agent)

4.2 Install Neutron

4.2.1 Install the packages

# yum install openstack-neutron openstack-neutron-ml2 openstack-neutron-openvswitch -y

4.2.2 Configure kernel networking parameters

# modprobe -a bridge br_netfilter

# cp /etc/sysctl.conf /etc/sysctl.conf.bak

# vi /etc/sysctl.conf

net.ipv4.conf.all.rp_filter=0

net.ipv4.conf.default.rp_filter=0

net.bridge.bridge-nf-call-iptables=1

net.bridge.bridge-nf-call-ip6tables=1

# sysctl -p

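4.2.3 Create the virtual bridge

# systemctl enable openvswitch.service

# systemctl start openvswitch.service

# ovs-vsctl add-br br-ex

# ovs-vsctl add-port br-ex ensxx

(ensxx is the NIC used for NAT and floating IPs, ens33 in this setup. ovs-vsctl needs the openvswitch service to be running, so it is started first.)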

4.2.4 Configure the network interfaces

4.2.4.1 NIC 1

# cp /etc/sysconfig/network-scripts/ifcfg-ens33 /etc/sysconfig/network-scripts/ifcfg-ens33.bak

# vi /etc/sysconfig/network-scripts/ifcfg-ens33

(ens33 is the NAT NIC used for floating IPs.)

TYPE=OVSPort

BOOTPROTO=none

NAME=ens33

DEVICE=ens33

DEVICETYPE=ovs

OVS_BRIDGE=br-ex

ONBOOT=yes

4.2.4.2 NIC 2

# cp /etc/sysconfig/network-scripts/ifcfg-ens34 /etc/sysconfig/network-scripts/ifcfg-ens34.bak

# vi /etc/sysconfig/network-scripts/ifcfg-ens34

(ens34 is the Host-Only NIC.)

TYPE=Ethernet

PROXY_METHOD=none

BROWSER_ONLY=no

BOOTPROTO=none

DEFROUTE=yes

IPV4_FAILURE_FATAL=no

IPV6INIT=no

IPV6_AUTOCONF=yes

IPV6_DEFROUTE=yes

IPV6_FAILURE_FATAL=no

IPV6_ADDR_GEN_MODE=stable-privacy

NAME=ens34

DEVICE=ens34

ONBOOT=yes

IPADDR=172.16.20.130

PREFIX=24

4.2.4.3 Bridge

Create the br-ex bridge configuration file ifcfg-br-ex:

# vi /etc/sysconfig/network-scripts/ifcfg-br-ex

TYPE=OVSBridge

BOOTPROTO=static

DEFROUTE=yes

PEERDNS=yes

PEERROUTES=yes

NAME=br-ex

DEVICE=br-ex

DEVICETYPE=ovs

ONBOOT=yes

IPADDR=172.16.10.10

NETMASK=255.255.255.0

GATEWAY=172.16.10.2

DNS1=172.16.10.2

4.2.5 Configure

4.2.5.1 Configure neutron.conf

# cp /etc/neutron/neutron.conf /etc/neutron/neutron.conf.bak

# vi /etc/neutron/neutron.conf

[DEFAULT]

auth_strategy = keystone

core_plugin = ml2

service_plugins = router

allow_overlapping_ips = True

transport_url = rabbit://openstack:123123@controller

[keystone_authtoken]

www_authenticate_uri = http://controller:5000

auth_url = http://controller:5000

memcached_servers = controller:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = neutron

password = 123123

[oslo_concurrency]

lock_path = /var/lib/neutron/tmp

4.2.5.2 Configure the ML2 plugin

# cp /etc/neutron/plugins/ml2/ml2_conf.ini /etc/neutron/plugins/ml2/ml2_conf.ini.bak

# vi /etc/neutron/plugins/ml2/ml2_conf.ini

[ml2]

type_drivers = flat,vlan,gre,vxlan

tenant_network_types = vxlan

mechanism_drivers = openvswitch,l2population

extension_drivers = port_security

[ml2_type_flat]

flat_networks = physnet

[ml2_type_vxlan]

vni_ranges = 1:1000

[securitygroup]

firewall_driver = neutron.agent.linux.iptables_firewall.OVSHybridIptablesFirewallDriver

enable_security_group = True

#enable_ipset = True

4.2.5.3 Configure the L3 agent

# cp /etc/neutron/l3_agent.ini /etc/neutron/l3_agent.ini.bak

# vi /etc/neutron/l3_agent.ini

[DEFAULT]

interface_driver=neutron.agent.linux.interface.OVSInterfaceDriver

debug = false

4.2.5.4 Configure the DHCP agent

# cp /etc/neutron/dhcp_agent.ini /etc/neutron/dhcp_agent.ini.bak

# vi /etc/neutron/dhcp_agent.ini

[DEFAULT]

interface_driver = neutron.agent.linux.interface.OVSInterfaceDriver

dhcp_driver = neutron.agent.linux.dhcp.Dnsmasq

enable_isolated_metadata = True

4.2.5.5 Configure the Open vSwitch agent

# cp /etc/neutron/plugins/ml2/openvswitch_agent.ini /etc/neutron/plugins/ml2/openvswitch_agent.ini.bak

# vi /etc/neutron/plugins/ml2/openvswitch_agent.ini

[ovs]

local_ip = 172.16.20.130

bridge_mappings = physnet:br-ex

[agent]

tunnel_types = vxlan

l2_population = True

[securitygroup]

firewall_driver = neutron.agent.linux.iptables_firewall.OVSHybridIptablesFirewallDriver

enable_security_group = True

4.2.5.6 Configure the metadata agent

# cp /etc/neutron/metadata_agent.ini /etc/neutron/metadata_agent.ini.bak

# vi /etc/neutron/metadata_agent.ini

[DEFAULT]

nova_metadata_host = controller

metadata_proxy_shared_secret = 123123

4.2.6 Start the services

# systemctl enable openvswitch.service neutron-openvswitch-agent.service neutron-l3-agent.service neutron-dhcp-agent.service neutron-metadata-agent.service neutron-ovs-cleanup.service

# systemctl start openvswitch.service neutron-openvswitch-agent.service neutron-l3-agent.service neutron-dhcp-agent.service neutron-metadata-agent.service

4.2.7 Verify that the networking services are running (run on the controller node)

# source admin-openrc.sh

# openstack network agent list

V. Block-Storage node

5.1 Components to install

Chrony (NTP time synchronization), Cinder (Block Storage service)

5.2 Configure the network interface

# vi /etc/sysconfig/network-scripts/ifcfg-ens33

TYPE=Ethernet

PROXY_METHOD=none

BROWSER_ONLY=no

BOOTPROTO=static

DEFROUTE=yes

IPV4_FAILURE_FATAL=no

IPV6INIT=yes

IPV6_AUTOCONF=yes

IPV6_DEFROUTE=yes

IPV6_FAILURE_FATAL=no

IPV6_ADDR_GEN_MODE=stable-privacy

NAME=ens33

DEVICE=ens33

ONBOOT=yes

IPADDR=172.16.20.140

NETMASK=255.255.255.0

# systemctl restart network

5.3 Install Cinder

5.3.1 Set up LVM

5.3.1.1 Install the packages

# yum install lvm2 device-mapper-persistent-data -y

5.3.1.2 Start the service

# systemctl enable lvm2-lvmetad.service

# systemctl start lvm2-lvmetad.service

5.3.1.3 Create the LVM volume group

# pvcreate /dev/sdb

# vgcreate cinder-volumes /dev/sdb

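# cp /etc/lvm/lvm.conf /etc/lvm/lvm.conf.bak

# vi /etc/lvm/lvm.conf

devices {

…

filter = [ "a/sdb/", "r/.*/"]

(If the operating system disk /dev/sda also uses LVM, which is the CentOS default, accept it in the filter too: filter = [ "a/sda/", "a/sdb/", "r/.*/"].)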

5.3.2 Install the Cinder packages

# yum install openstack-cinder targetcli python-keystone

5.3.3 Edit the configuration file

# cp /etc/cinder/cinder.conf /etc/cinder/cinder.conf.bak

# vi /etc/cinder/cinder.conf

[DEFAULT]

transport_url = rabbit://openstack:123123@controller

auth_strategy = keystone

my_ip = 172.16.20.140

enabled_backends = lvm

glance_api_servers = http://controller:9292

[database]

connection = mysql+pymysql://cinder:123123@controller/cinder

[keystone_authtoken]

www_authenticate_uri = http://controller:5000

auth_url = http://controller:5000

memcached_servers = controller:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = cinder

password = 123123

[lvm]

volume_driver = cinder.volume.drivers.lvm.LVMVolumeDriver

volume_group = cinder-volumes

target_protocol = iscsi

target_helper = lioadm

[oslo_concurrency]

lock_path = /var/lib/cinder/tmp

5.3.4 Start the Cinder services and enable them at boot

# systemctl enable openstack-cinder-volume.service target.service

# systemctl start openstack-cinder-volume.service target.service
