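Author: Zhang Hua  Published: 2022-05-27

Copyright notice: this article may be freely reposted, but when reposting, please credit the original source, the author information and this copyright notice in the form of a hyperlink.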

Prepare two LXD containers

```
$ lxc list
+--------+---------+-----------------------+------+-----------+-----------+
|  NAME  |  STATE  |         IPV4          | IPV6 |   TYPE    | SNAPSHOTS |
+--------+---------+-----------------------+------+-----------+-----------+
| master | RUNNING | 192.168.122.20 (ens3) |      | CONTAINER | 0         |
+--------+---------+-----------------------+------+-----------+-----------+
| node1  | RUNNING | 192.168.122.21 (ens3) |      | CONTAINER | 0         |
+--------+---------+-----------------------+------+-----------+-----------+
```

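Steps. Note: the two IPs actually configured below are 10.5.0.34 and 10.5.153.72, and the containers are launched as n1 and n2. To get master/node1 with 192.168.122.20 and 192.168.122.21 as in the table above, adjust the commands accordingly. Also, if you want to use virsh's 192.168.122.0/24 subnet, run 'sudo virsh net-destroy default' first.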

```bash
sudo snap install lxd --classic
sudo usermod -aG lxd $USER
sudo chown -R $USER ~/.config/
export EDITOR=vim
lxc network create br-ovntest
lxc network set br-ovntest ipv4.address=10.5.0.1/16
lxc network set br-ovntest ipv4.dhcp=false
#lxc network set br-ovntest ipv4.dhcp.ranges=10.5.0.2-10.5.0.99
lxc network set br-ovntest ipv4.nat=true
lxc network set br-ovntest ipv6.address none
lxc network show br-ovntest
lxc profile create ovntest 2>/dev/null || echo "ovntest profile already exists"
lxc profile device set ovntest root pool=default
```

```bash
cat << EOF | tee /tmp/ovntest.yaml
config:
  boot.autostart: "true"
  linux.kernel_modules: openvswitch,nbd,ip_tables,ip6_tables
  security.nesting: "true"
  security.privileged: "true"
description: ""
devices:
  # multiple NICs must come from different bridges, otherwise it will throw: Failed start validation for device "ens3"
  ens3:
    mtu: "9000"
    name: ens3
    nictype: bridged
    parent: br-ovntest
    type: nic
  # ens4 is bridged to br-eth0, which must already exist on the host
  ens4:
    mtu: "9000"
    name: ens4
    nictype: bridged
    parent: br-eth0
    type: nic
  kvm:
    path: /dev/kvm
    type: unix-char
  mem:
    path: /dev/mem
    type: unix-char
  root:
    path: /
    pool: default
    type: disk
  tun:
    path: /dev/net/tun
    type: unix-char
name: ovntest
used_by: []
EOF
cat /tmp/ovntest.yaml | lxc profile edit ovntest
```

```bash
cat << EOF | tee network.yml
version: 1
config:
  - type: physical
    name: ens3
    subnets:
      - type: static
        ipv4: true
        address: 10.5.0.34
        netmask: 255.255.0.0
        gateway: 10.5.0.1
        control: auto
  - type: nameserver
    address: 192.168.99.1
EOF
lxc launch jammy n1 -p ovntest --config=user.network-config="$(cat network.yml)"
```

```bash
#lxc network attach br-eth0 n1 ens4
cat << EOF | tee network.yml
version: 1
config:
  - type: physical
    name: ens3
    subnets:
      - type: static
        ipv4: true
        address: 10.5.153.72
        netmask: 255.255.0.0
        gateway: 10.5.0.1
        control: auto
  - type: nameserver
    address: 192.168.99.1
EOF
lxc launch jammy n2 -p ovntest --config=user.network-config="$(cat network.yml)"
```
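The two network.yml heredocs above differ only in the IP address. The sketch below assumes you want a single reusable helper instead. `gen_network_yml` is a hypothetical function, not part of LXD or cloud-init. It prints the same cloud-init (version 1) network config for a given IP, netmask, gateway and DNS server, with the NIC name ens3 hard-coded as in the original.

```bash
#!/usr/bin/env bash
# Hypothetical helper (not part of LXD or cloud-init): print a cloud-init
# network-config v1 document with one static IPv4 address on ens3.
# Usage: gen_network_yml IP NETMASK GATEWAY DNS
gen_network_yml() {
  local ip=$1 netmask=$2 gw=$3 dns=$4
  cat << EOF
version: 1
config:
  - type: physical
    name: ens3
    subnets:
      - type: static
        ipv4: true
        address: ${ip}
        netmask: ${netmask}
        gateway: ${gw}
        control: auto
  - type: nameserver
    address: ${dns}
EOF
}

# Same content as the first heredoc above (used for n1)
gen_network_yml 10.5.0.34 255.255.0.0 10.5.0.1 192.168.99.1

# Launching would then look like this (not run here):
# lxc launch jammy n2 -p ovntest \
#   --config=user.network-config="$(gen_network_yml 10.5.153.72 255.255.0.0 10.5.0.1 192.168.99.1)"
```

Generating the YAML from one function keeps the indentation identical for every container, which matters because cloud-init rejects mis-indented YAML.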

Install OVN

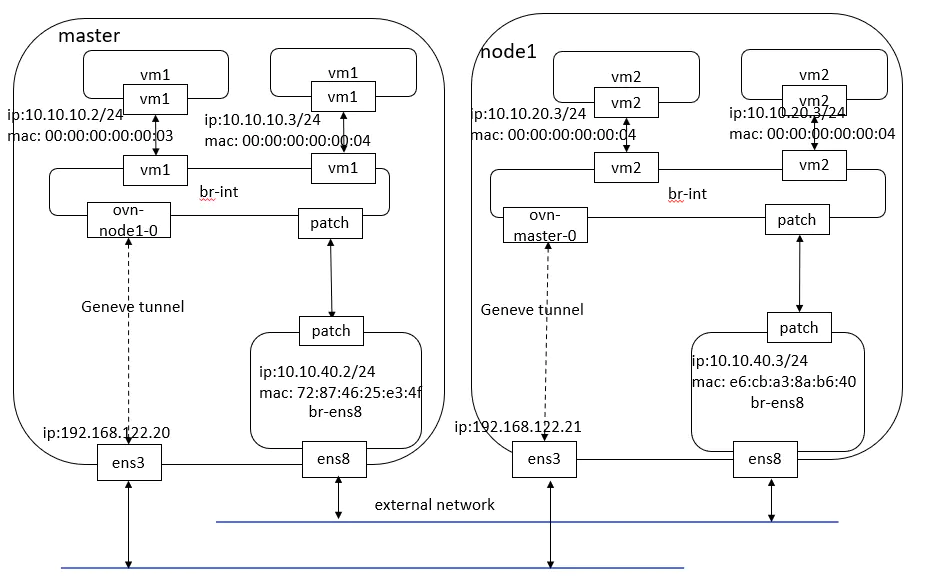

The master node serves as both the control plane and the data plane. So besides ovn-central, ovn-host and openvswitch-switch are also installed on it.

```bash
# on master
lxc exec `lxc list |grep master |awk -F '|' '{print $2}'` bash
apt install ovn-central openvswitch-switch ovn-host net-tools -y
ovn-nbctl set-connection ptcp:6641
ovn-sbctl set-connection ptcp:6642
netstat -lntp |grep 664
```

```bash
# on node1 and master
lxc exec `lxc list |grep node1 |awk -F '|' '{print $2}'` bash
apt install openvswitch-switch ovn-host net-tools -y
ovs-vsctl add-br br-int
ovs-vsctl set bridge br-int protocols=OpenFlow10,OpenFlow11,OpenFlow12,OpenFlow13,OpenFlow14,OpenFlow15
```

```bash
# on all compute nodes (both master and node1), make ovn-controller connect to the southbound DB
ovs-vsctl set open_vswitch . \
  external_ids:ovn-remote=tcp:192.168.122.20:6642 \
  external_ids:ovn-encap-ip=$(ip addr show ens3 | awk '$1 == "inet" {print $2}' | cut -f1 -d/) \
  external_ids:ovn-encap-type=geneve \
  external_ids:system-id=$(hostname)
```
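The ovn-encap-ip one-liner above prints every "inet" address on ens3. If the NIC ever carries a secondary address, ovn-encap-ip would receive two words. The sketch below is an assumption-labelled variant: the hypothetical helper `first_ipv4` keeps only the first IPv4 address. The sample text imitates `ip addr show` output and was not captured from this setup.

```bash
#!/usr/bin/env bash
# Hypothetical helper: read `ip addr show <dev>` output on stdin and print only
# the FIRST IPv4 address, without the /prefix length.
first_ipv4() {
  awk '$1 == "inet" { split($2, a, "/"); print a[1]; exit }'
}

# Illustrative sample in the format of `ip addr show ens3` (made up); the
# secondary address shows that only one address is printed.
sample='2: ens3: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 9000 qdisc mq state UP group default qlen 1000
    link/ether 00:16:3e:aa:bb:cc brd ff:ff:ff:ff:ff:ff
    inet 192.168.122.20/24 brd 192.168.122.255 scope global ens3
       valid_lft forever preferred_lft forever
    inet 192.168.122.99/24 scope global secondary ens3
       valid_lft forever preferred_lft forever'

first_ipv4 <<< "$sample"    # prints 192.168.122.20

# On a real chassis (not run here):
# ovs-vsctl set open_vswitch . external_ids:ovn-encap-ip=$(ip addr show ens3 | first_ipv4)
```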

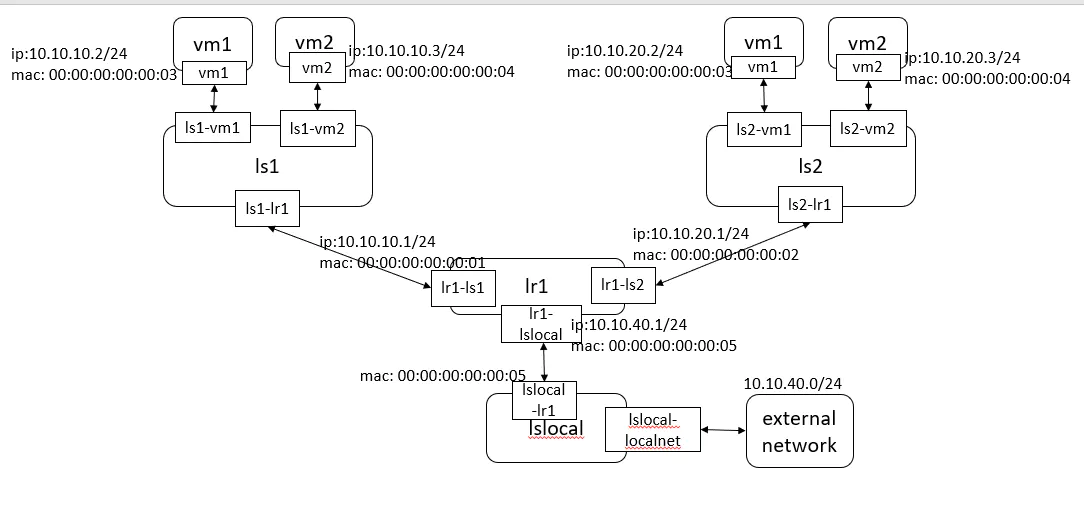

Logical topology, physical topology and implementation

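- East-west L2 traffic (same tunnel id): tunnel networks go through the geneve port, and non-tunnel networks go through the provider network. For example, traffic from a compute chassis to the gateway chassis is also east-west traffic and goes through the geneve port by default. Once reside-on-redirect-chassis=true is set, however, it is treated like DVR north-south traffic and leaves through the localnet port (provnet-xxx).

- East-west L3 traffic (different tunnel ids, i.e. DVR): every compute chassis may use the same L3 IP but must have a different L2 MAC (ovn-chassis-mac-mappings). Likewise, tunnel networks go through the geneve port and non-tunnel networks go through the provider network.

- North-south traffic (without FIP): the VM and lrp-xxx (the gateway port) may or may not be on the same chassis. VM traffic is first sent to lrp-xxx, which performs SNAT and then sends it out through br-ex.

- North-south traffic (with FIP): DNAT is done in br-int on the compute chassis, e.g. br-int -> provnet-xxx -> br-ens8.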

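For north-south traffic in particular, enabling DVR through ovn-chassis-mac-mappings does not settle the exit path. reside-on-redirect-chassis still decides whether traffic uses the centralized gateway or the local DVR gateway, and its value depends on whether the network is a provider network or a tunnel network.

For north-south traffic on a provider network (flat/vlan), reside-on-redirect-chassis=False is set, so traffic leaves through the local DVR gateway (via br-data):

- DVR physical path: vm port -> br-int -> provnet-xxx -> br-data -> external network

- DVR logical path: vm port (localport) -> br-int (vSwitch, private_net) -> vRouter -> br-data (vSwitch, ext_net) -> localnet port -> external network. The localnet port is tied to br-data through external-ids:ovn-bridge-mappings.

For north-south traffic on a tunnel network (gre/vxlan), reside-on-redirect-chassis=True is set, so traffic leaves through the centralized gateway (via the Geneve tunnel):

- Centralized routing physical path: vm port -> br-int (compute chassis) -> geneve tunnel -> br-int (centralized GW) -> br-data (external net)

- Centralized routing logical path: vm port (localport) -> br-int (vSwitch) -> geneve tunnel -> br-int (vSwitch) -> br-data (vSwitch) -> localnet port -> external network. Because the router is virtual, the br-int instances on the two physical chassis are logically one and the same vSwitch (br-int).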

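In the implementation, every datapath has a tunnel_key (see `ovn-sbctl list Datapath_Binding`), which is synced to the SB DB. Every logical port (see `ovn-sbctl list Port_Binding`) is also created with a tunnel_key inside the datapath it belongs to, and that key is synced to the SB DB as well. Besides these ordinary ports, there are three special kinds of port:

- localnet port: the connection point between a vSwitch and the physical network. An example is provnet-xxx between br-int and br-ens8. On OVS you will see a patch port pair between br-int and br-ens8, which is used to communicate with the physical network.

- patch port: the connection point between a vSwitch and a vRouter. For example, after a subnet interface is created, a logical patch port connecting the subnet and the router is generated automatically. So far, these logical patch ports do not appear to be instantiated on OVS.

- localport port: the connection point between a vSwitch and a VM, e.g. the port on br-int that a VM is plugged into. In OVN's own terminology such VM ports are ordinary VIF ports. type=localport is a separate OVN port type, used e.g. for the metadata port, and its traffic never leaves the local chassis.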

When implementing OVN security groups, ovn-controller uses the following registers:

- reg14: logical input port field (tunnel_key of the ingress port)

- reg15: logical output port field (tunnel_key of the egress port)

- metadata: logical datapath field (tunnel_key of the datapath)

- reg13: logical conntrack zone for lports (logical ports)
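To relate these fields to the tunnel keys in the SB DB, it can help to decode them from a flow dump. The sketch below assumes the usual `ovs-ofctl dump-flows br-int` text format, where fields appear as `name=0xHEX` separated by commas. The function name and the sample flow lines are made up for illustration; they are not output from this setup.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print metadata/reg13/reg14/reg15 from one flow line of
# `ovs-ofctl dump-flows br-int` in decimal, so they can be compared with the
# tunnel_key columns of Datapath_Binding and Port_Binding in the SB DB.
decode_flow_keys() {
  local line=$1 field val
  local -a out=()
  for field in metadata reg13 reg14 reg15; do
    # match "field=0xHEX" at the start of the line or after a comma/space
    val=$(grep -oE "(^|[ ,])${field}=0x[0-9a-fA-F]+" <<< "$line" | head -n1 | cut -d= -f2 || true)
    if [ -n "$val" ]; then
      out+=("${field}=$((val))")    # bash arithmetic converts 0x.. to decimal
    fi
  done
  echo "${out[*]}"
}

# Made-up flow line in the usual dump-flows format
flow='cookie=0x5e4f1c2a, duration=120.512s, table=8, n_packets=3, n_bytes=294, idle_age=10, priority=50,reg14=0x1,metadata=0x3,dl_src=00:00:00:00:00:03 actions=resubmit(,9)'
decode_flow_keys "$flow"    # prints: metadata=3 reg14=1
```

The decimal values can then be matched against the tunnel_key columns of Datapath_Binding (metadata) and Port_Binding (reg14/reg15).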

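Note: the 'sudo ovn-nbctl lsp-set-addresses' commands used below sometimes fail with "Invalid address format". This happens because the double quotes in the arguments get eaten by the shell when sudo is used. The MAC and the IP then reach ovn-nbctl as two separate arguments:

```
$ sudo ovn-nbctl lsp-set-addresses sw0-port1 "00:00:00:00:00:03 10.0.0.4"
ovn-nbctl: 10.0.0.4: Invalid address format. See ovn-nb(5). Hint: An Ethernet address must be listed before an IP address, together as a single argument.
```

As a workaround, you can let OVN allocate the address dynamically:

```bash
sudo ovn-nbctl set Logical_Switch sw0 other_config:subnet=10.0.0.0/24
sudo ovn-nbctl lsp-set-addresses sw0-port1 "dynamic"
sudo ovn-nbctl --columns=name,dynamic_addresses,addresses list logical_switch_port
```

Alternatively, run the commands as the root user (without sudo) to avoid the problem.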
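The error hint says the MAC and the IP must arrive "together as a single argument". The following sketch shows how quoting is lost when a command line is re-parsed by an extra shell layer. `count_args` is a hypothetical stand-in for ovn-nbctl, and `sh -c` plays the role of that extra layer. The exact mechanism in the sudo case is not reproduced here.

```bash
#!/usr/bin/env bash
# Hypothetical stand-in for ovn-nbctl: report how many arguments it received.
count_args() { echo "$#"; }

addr="00:00:00:00:00:03 10.0.0.4"

# Passed directly: port name + ONE address argument.
count_args sw0-port1 "$addr"                                         # 2

# Re-parsed by an extra shell without re-quoting: MAC and IP split apart,
# which is what the "Invalid address format" hint complains about.
sh -c "count_args() { echo \$#; }; count_args sw0-port1 $addr"       # 3

# Re-quoting with printf %q keeps the address as a single argument again.
sh -c "count_args() { echo \$#; }; count_args sw0-port1 $(printf '%q' "$addr")"   # 2
```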

```bash
# create a vRouter (lr1) and two vSwitches (ls1 and ls2)
ovn-nbctl ls-add ls1
ovn-nbctl ls-add ls2
ovn-nbctl lr-add lr1

# dhcp for ls1
#ovn-nbctl set logical_switch ls1 other_config:subnet="10.10.10.0/24" other_config:exclude_ips="10.10.10.2..10.10.10.10"
#ovn-nbctl dhcp-options-create 10.10.10.0/24
#DHCP_UUID=$(ovn-nbctl --bare --columns=_uuid find dhcp_options cidr="10.10.10.0/24")
#ovn-nbctl dhcp-options-set-options ${DHCP_UUID} lease_time=3600 router=10.10.10.1 server_id=10.10.10.1 server_mac=c0:ff:ee:00:00:01
#ovn-nbctl list dhcp_options

# connect ls1 to lr1
ovn-nbctl lrp-add lr1 lr1-ls1 00:00:00:00:00:01 10.10.10.1/24
ovn-nbctl lsp-add ls1 ls1-lr1
ovn-nbctl lsp-set-type ls1-lr1 router
ovn-nbctl lsp-set-addresses ls1-lr1 00:00:00:00:00:01
ovn-nbctl lsp-set-options ls1-lr1 router-port=lr1-ls1

# connect ls2 to lr1
ovn-nbctl lrp-add lr1 lr1-ls2 00:00:00:00:00:02 10.10.20.1/24
ovn-nbctl lsp-add ls2 ls2-lr1
ovn-nbctl lsp-set-type ls2-lr1 router
ovn-nbctl lsp-set-addresses ls2-lr1 00:00:00:00:00:02
ovn-nbctl lsp-set-options ls2-lr1 router-port=lr1-ls2

# create 4 test VMs on two chassis
ovn-nbctl lsp-add ls1 ls1-vm1
ovn-nbctl lsp-set-addresses ls1-vm1 "00:00:00:00:00:03 10.10.10.2"
ovn-nbctl lsp-set-port-security ls1-vm1 "00:00:00:00:00:03 10.10.10.2"
ovn-nbctl lsp-add ls1 ls1-vm2
ovn-nbctl lsp-set-addresses ls1-vm2 "00:00:00:00:00:04 10.10.10.3"
ovn-nbctl lsp-set-port-security ls1-vm2 "00:00:00:00:00:04 10.10.10.3"
ovn-nbctl lsp-add ls2 ls2-vm1
ovn-nbctl lsp-set-addresses ls2-vm1 "00:00:00:00:00:03 10.10.20.2"
ovn-nbctl lsp-set-port-security ls2-vm1 "00:00:00:00:00:03 10.10.20.2"
ovn-nbctl lsp-add ls2 ls2-vm2
ovn-nbctl lsp-set-addresses ls2-vm2 "00:00:00:00:00:04 10.10.20.3"
ovn-nbctl lsp-set-port-security ls2-vm2 "00:00:00:00:00:04 10.10.20.3"
```
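Both lsp-set-addresses and lsp-set-port-security above take one string of the form "MAC IP...". The hypothetical validator below approximates that shape: one Ethernet address first, then IPs. It only understands IPv4, a loose IPv6 pattern, and the keywords "dynamic" and "unknown" used in this post. ovn-nb(5) defines the complete syntax, which this sketch does not reproduce.

```bash
#!/usr/bin/env bash
# Simplified, hypothetical check of address strings such as
# "00:00:00:00:00:03 10.10.10.2": one Ethernet address first, then IPs.
valid_lsp_address() {
  local s=$1 tok
  local mac_re='^([0-9a-fA-F]{2}:){5}[0-9a-fA-F]{2}$'
  local ip4_re='^([0-9]{1,3}\.){3}[0-9]{1,3}(/[0-9]{1,2})?$'
  local ip6_re='^[0-9a-fA-F:]*:[0-9a-fA-F:]*(/[0-9]{1,3})?$'   # loose IPv6
  local -a words
  case $s in dynamic|unknown) return 0 ;; esac
  read -r -a words <<< "$s"
  [ "${#words[@]}" -ge 1 ] || return 1
  [[ ${words[0]} =~ $mac_re ]] || return 1      # the MAC must come first
  for tok in "${words[@]:1}"; do
    [[ $tok =~ $ip4_re || $tok =~ $ip6_re ]] || return 1
  done
  return 0
}

for a in "00:00:00:00:00:03 10.10.10.2" "00:00:00:00:00:04 10.0.0.4 2001::4" "10.0.0.4" "dynamic"; do
  if valid_lsp_address "$a"; then echo "ok:  $a"; else echo "bad: $a"; fi
done
```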

```bash
# on master
ip netns add vm1
ovs-vsctl add-port br-int vm1 -- set interface vm1 type=internal
ip link set vm1 netns vm1
ip netns exec vm1 ip link set vm1 address 00:00:00:00:00:03
ip netns exec vm1 ip addr add 10.10.10.2/24 dev vm1
ip netns exec vm1 ip link set vm1 up
ip netns exec vm1 ip route add default via 10.10.10.1 dev vm1
ovs-vsctl set Interface vm1 external_ids:iface-id=ls1-vm1

ip netns add vm2
ovs-vsctl add-port br-int vm2 -- set interface vm2 type=internal
ip link set vm2 netns vm2
ip netns exec vm2 ip link set vm2 address 00:00:00:00:00:04
ip netns exec vm2 ip addr add 10.10.10.3/24 dev vm2
ip netns exec vm2 ip link set vm2 up
ip netns exec vm2 ip route add default via 10.10.10.1 dev vm2
ovs-vsctl set Interface vm2 external_ids:iface-id=ls1-vm2
```

```bash
# on node1
ip netns add vm1
ovs-vsctl add-port br-int vm1 -- set interface vm1 type=internal
ip link set vm1 netns vm1
ip netns exec vm1 ip link set vm1 address 00:00:00:00:00:03
ip netns exec vm1 ip addr add 10.10.20.2/24 dev vm1
ip netns exec vm1 ip link set vm1 up
ip netns exec vm1 ip route add default via 10.10.20.1 dev vm1
ovs-vsctl set Interface vm1 external_ids:iface-id=ls2-vm1

ip netns add vm2
ovs-vsctl add-port br-int vm2 -- set interface vm2 type=internal
ip link set vm2 netns vm2
ip netns exec vm2 ip link set vm2 address 00:00:00:00:00:04
ip netns exec vm2 ip addr add 10.10.20.3/24 dev vm2
ip netns exec vm2 ip link set vm2 up
ip netns exec vm2 ip route add default via 10.10.20.1 dev vm2
ovs-vsctl set Interface vm2 external_ids:iface-id=ls2-vm2
```
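Each "VM" above is built by the same eight commands. The sketch below folds them into one hypothetical helper, `fake_vm`: a network namespace holds an OVS internal port, which is bound to an OVN logical switch port through external_ids:iface-id. The `RUN=echo` dry-run switch is an assumption added so the commands can be inspected without root.

```bash
#!/usr/bin/env bash
# Hypothetical helper wrapping the per-"VM" commands above.
# Usage: fake_vm NAME MAC CIDR GATEWAY LOGICAL_PORT
# Set RUN=echo to print the commands instead of running them (dry run).
fake_vm() {
  local name=$1 mac=$2 cidr=$3 gw=$4 lsp=$5
  local run=${RUN:-}
  $run ip netns add "$name"
  $run ovs-vsctl add-port br-int "$name" -- set interface "$name" type=internal
  $run ip link set "$name" netns "$name"
  $run ip netns exec "$name" ip link set "$name" address "$mac"
  $run ip netns exec "$name" ip addr add "$cidr" dev "$name"
  $run ip netns exec "$name" ip link set "$name" up
  $run ip netns exec "$name" ip route add default via "$gw" dev "$name"
  $run ovs-vsctl set Interface "$name" external_ids:iface-id="$lsp"
}

# Dry run of the first VM on master; drop RUN=echo (as root) to execute.
RUN=echo fake_vm vm1 00:00:00:00:00:03 10.10.10.2/24 10.10.10.1 ls1-vm1
```

On node1 the same helper would be called as `fake_vm vm1 00:00:00:00:00:03 10.10.20.2/24 10.10.20.1 ls2-vm1`, and so on.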

```bash
# create the static route
ovn-nbctl lr-route-add lr1 "0.0.0.0/0" 10.10.40.1

# create ha-chassis-group
ovn-nbctl lrp-add lr1 lr1-lslocal 00:00:00:00:00:05 10.10.40.1/24
ovn-nbctl ha-chassis-group-add ha1
ovn-nbctl ha-chassis-group-add-chassis ha1 master 1
ovn-nbctl ha-chassis-group-add-chassis ha1 node1 2
ha1_uuid=`ovn-nbctl --bare --columns _uuid find ha_chassis_group name="ha1"`
ovn-nbctl set Logical_Router_Port lr1-lslocal ha_chassis_group=$ha1_uuid
```
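In an HA chassis group, the chassis with the highest priority is expected to host the gateway port. Here that is node1 (priority 2), which matches `Port_Binding cr-lr1-lslocal` appearing under node1 in the `ovn-sbctl show` output later in this post. The hypothetical helper below reads the CSV produced by `ovn-nbctl -f csv list ha_chassis` and prints that chassis. It assumes the column order shown later (_uuid, chassis_name, external_ids, priority).

```bash
#!/usr/bin/env bash
# Hypothetical helper: read `ovn-nbctl -f csv list ha_chassis` output and print
# the chassis with the highest priority. The priority is taken from the last
# column, so commas inside external_ids do not shift it.
active_chassis() {
  grep -v '^_uuid' |
    awk -F',' '{ print $NF "," $2 }' |
    sort -t ',' -k 1,1n |
    tail -n 1 |
    cut -d ',' -f 2
}

# Same rows as the ha_chassis output shown later in this post
csv='_uuid,chassis_name,external_ids,priority
25448727-ce5f-4676-974e-ef7d3e1ee915,master,{},1
d236dd68-c65b-4842-8e8d-b999d6895e09,node1,{},2'

active_chassis <<< "$csv"    # prints node1
```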

```bash
# connect lslocal to lr1
ovn-nbctl ls-add lslocal
ovn-nbctl lsp-add lslocal lslocal-lr1
ovn-nbctl lsp-set-type lslocal-lr1 router
ovn-nbctl lsp-set-addresses lslocal-lr1 00:00:00:00:00:05
ovn-nbctl lsp-set-options lslocal-lr1 router-port=lr1-lslocal
ovn-nbctl lsp-add lslocal lslocal-localnet
ovn-nbctl lsp-set-addresses lslocal-localnet unknown
ovn-nbctl lsp-set-type lslocal-localnet localnet
ovn-nbctl lsp-set-options lslocal-localnet network_name=externalnet
```

```bash
# on master
ovs-vsctl add-br br-ens8
ovs-vsctl add-port br-ens8 ens8
ovs-vsctl set Open_vSwitch . external-ids:ovn-bridge-mappings=externalnet:br-ens8
#ovn-nbctl lrp-set-gateway-chassis lr1-lslocal master 1
#ovn-nbctl lrp-set-gateway-chassis lr1-lslocal node1 2
ovs-vsctl set Open_vSwitch . external-ids:ovn-cms-options=\"enable-chassis-as-gw\"
ip link set dev br-ens8 up
ip addr add 10.10.40.2/24 dev br-ens8
ovs-vsctl get Open_vSwitch . external-ids
```

```bash
# on node1
ovs-vsctl add-br br-ens8
ovs-vsctl add-port br-ens8 ens8
ovs-vsctl set Open_vSwitch . external-ids:ovn-bridge-mappings=externalnet:br-ens8
#ovn-nbctl lrp-set-gateway-chassis lr1-lslocal master 1
#ovn-nbctl lrp-set-gateway-chassis lr1-lslocal node1 2
ovs-vsctl set Open_vSwitch . external-ids:ovn-cms-options=\"enable-chassis-as-gw\"
ip link set dev br-ens8 up
ip addr add 10.10.40.3/24 dev br-ens8
```

```bash
# add NAT item
ovn-nbctl -- --id=@nat create nat type="snat" logical_ip=10.10.10.0/24 \
  external_ip=10.10.40.1 -- add logical_router lr1 nat @nat
```
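The SNAT rule above only matches sources inside logical_ip=10.10.10.0/24, i.e. the VMs on ls1. VMs on ls2 (10.10.20.0/24) are not covered by this rule. The following IPv4-only sketch makes the matching explicit. `ip2int` and `ip_in_cidr` are hypothetical helper names, not OVN tools.

```bash
#!/usr/bin/env bash
# Hypothetical helpers (IPv4 only): which source addresses does the SNAT rule
# above (logical_ip=10.10.10.0/24, external_ip=10.10.40.1) apply to?
ip2int() {
  local IFS=. a b c d
  read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

ip_in_cidr() {    # ip_in_cidr IP NET/LEN -> exit status 0 if IP is inside
  local ip=$1 net=${2%/*} len=${2#*/}
  local mask=$(( len == 0 ? 0 : (0xFFFFFFFF << (32 - len)) & 0xFFFFFFFF ))
  (( ($(ip2int "$ip") & mask) == ($(ip2int "$net") & mask) ))
}

for src in 10.10.10.2 10.10.10.3 10.10.20.2 10.10.20.3; do
  if ip_in_cidr "$src" 10.10.10.0/24; then
    echo "$src -> SNAT to 10.10.40.1"
  else
    echo "$src -> not matched by this SNAT rule"
  fi
done
```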

```bash
# useful queries
ovn-nbctl list gateway_chassis
ovn-nbctl show
ovn-nbctl list logical_router
ovn-nbctl list logical_router_port
ovn-nbctl list logical_switch
ovn-nbctl list logical_switch_port
ovn-nbctl list ha_chassis_group
ovn-nbctl -f csv list ha_chassis |egrep -v '^_uuid' |sort -t ',' -k 4
ovn-sbctl show
ovn-sbctl list Port_Binding
ovs-vsctl show
```

Test results

```
# Test
root@master:~# ip netns exec vm1 ping 10.10.20.3 -c1
PING 10.10.20.3 (10.10.20.3) 56(84) bytes of data.
64 bytes from 10.10.20.3: icmp_seq=1 ttl=63 time=0.146 ms
root@master:~# ip netns exec vm1 ping 10.10.40.1 -c1
PING 10.10.40.1 (10.10.40.1) 56(84) bytes of data.
64 bytes from 10.10.40.1: icmp_seq=1 ttl=254 time=0.276 ms
```

Some outputs

```
root@master:~# ovn-nbctl show
switch 31f0f2f4-1a02-4efd-8731-09993279f917 (lslocal)
    port lslocal-localnet
        type: localnet
        addresses: ["unknown"]
    port lslocal-lr1
        type: router
        addresses: ["00:00:00:00:00:05"]
        router-port: lr1-lslocal
switch fae87181-383e-41eb-8c41-5a6b52c358ca (ls1)
    port ls1-vm2
        addresses: ["00:00:00:00:00:04 10.10.10.3"]
    port ls1-lr1
        type: router
        addresses: ["00:00:00:00:00:01"]
        router-port: lr1-ls1
    port ls1-vm1
        addresses: ["00:00:00:00:00:03 10.10.10.2"]
switch bf707a77-f6a0-4bd6-9549-fb3027a4b539 (ls2)
    port ls2-lr1
        type: router
        addresses: ["00:00:00:00:00:02"]
        router-port: lr1-ls2
    port ls2-vm1
        addresses: ["00:00:00:00:00:03 10.10.20.2"]
    port ls2-vm2
        addresses: ["00:00:00:00:00:04 10.10.20.3"]
router d872c966-89f7-46db-b5dd-362315042b35 (lr1)
    port lr1-ls2
        mac: "00:00:00:00:00:02"
        networks: ["10.10.20.1/24"]
    port lr1-ls1
        mac: "00:00:00:00:00:01"
        networks: ["10.10.10.1/24"]
    port lr1-lslocal
        mac: "00:00:00:00:00:05"
        networks: ["10.10.40.1/24"]
        gateway chassis: [node1 master]
    nat 62e5b090-b6a7-416c-8f8c-a4c4f9290236
        external ip: "10.10.40.1"
        logical ip: "10.10.10.0/24"
        type: "snat"
```

```
root@master:~# ovn-sbctl show
Chassis master
    hostname: master
    Encap geneve
        ip: "192.168.122.20"
        options: {csum="true"}
    Port_Binding ls1-vm1
    Port_Binding ls1-vm2
Chassis node1
    hostname: node1
    Encap geneve
        ip: "192.168.122.21"
        options: {csum="true"}
    Port_Binding cr-lr1-lslocal
    Port_Binding ls2-vm2
    Port_Binding ls2-vm1
```

```
root@master:~# ovs-vsctl show
7fa21184-4091-4c56-bf22-f27bd43b049d
    Bridge br-ens8
        Port br-ens8
            Interface br-ens8
                type: internal
        Port ens8
            Interface ens8
        Port patch-lslocal-localnet-to-br-int
            Interface patch-lslocal-localnet-to-br-int
                type: patch
                options: {peer=patch-br-int-to-lslocal-localnet}
    Bridge br-int
        Port vm2
            Interface vm2
                type: internal
        Port ovn-node1-0
            Interface ovn-node1-0
                type: geneve
                options: {csum="true", key=flow, remote_ip="192.168.122.21"}
                bfd_status: {diagnostic="No Diagnostic", flap_count="1", forwarding="true", remote_diagnostic="No Diagnostic", remote_state=up, state=up}
        Port br-int
            Interface br-int
                type: internal
        Port vm1
            Interface vm1
                type: internal
        Port patch-br-int-to-lslocal-localnet
            Interface patch-br-int-to-lslocal-localnet
                type: patch
                options: {peer=patch-lslocal-localnet-to-br-int}
    ovs_version: "2.13.5"
```

```
root@node1:~# ovs-vsctl show
1f40614f-ea1f-40c5-b564-97e7d4a678e6
    Bridge br-ens8
        Port br-ens8
            Interface br-ens8
                type: internal
        Port ens8
            Interface ens8
        Port patch-lslocal-localnet-to-br-int
            Interface patch-lslocal-localnet-to-br-int
                type: patch
                options: {peer=patch-br-int-to-lslocal-localnet}
    Bridge br-int
        Port vm1
            Interface vm1
                type: internal
        Port patch-br-int-to-lslocal-localnet
            Interface patch-br-int-to-lslocal-localnet
                type: patch
                options: {peer=patch-lslocal-localnet-to-br-int}
        Port ovn-master-0
            Interface ovn-master-0
                type: geneve
                options: {csum="true", key=flow, remote_ip="192.168.122.20"}
                bfd_status: {diagnostic="No Diagnostic", flap_count="1", forwarding="true", remote_diagnostic="No Diagnostic", remote_state=up, state=up}
        Port br-int
            Interface br-int
                type: internal
        Port vm2
            Interface vm2
                type: internal
    ovs_version: "2.13.5"
```

```
root@master:~# ovn-nbctl list ha_chassis_group
_uuid               : c843f65f-a215-4bd7-8427-c2da6ec33cf8
external_ids        : {}
ha_chassis          : [25448727-ce5f-4676-974e-ef7d3e1ee915, d236dd68-c65b-4842-8e8d-b999d6895e09]
name                : ha1

root@master:~# ovn-nbctl -f csv list ha_chassis |egrep -v '^_uuid' |sort -t ',' -k 4
25448727-ce5f-4676-974e-ef7d3e1ee915,master,{},1
d236dd68-c65b-4842-8e8d-b999d6895e09,node1,{},2

root@master:~# ovn-nbctl list gateway_chassis
_uuid               : 3d670f79-fe01-432c-93d1-113aa5747fcc
chassis_name        : master
external_ids        : {}
name                : lr1-lslocal-master
options             : {}
priority            : 1

_uuid               : 0b6cc1ab-bb03-4674-81c9-1257bcfcbd7c
chassis_name        : node1
external_ids        : {}
name                : lr1-lslocal-node1
options             : {}
priority            : 2

root@master:~# ovs-vsctl get Open_vSwitch . external-ids
{hostname=master, ovn-bridge-mappings="externalnet:br-ens8", ovn-cms-options=enable-chassis-as-gw, ovn-encap-ip="192.168.122.20", ovn-encap-type=geneve, ovn-remote="tcp:192.168.122.20:6642", rundir="/var/run/openvswitch", system-id=master}

root@node1:~# ovs-vsctl get Open_vSwitch . external-ids
{hostname=node1, ovn-bridge-mappings="externalnet:br-ens8", ovn-cms-options=enable-chassis-as-gw, ovn-encap-ip="192.168.122.21", ovn-encap-type=geneve, ovn-remote="tcp:192.168.122.20:6642", rundir="/var/run/openvswitch", system-id=node1}
```

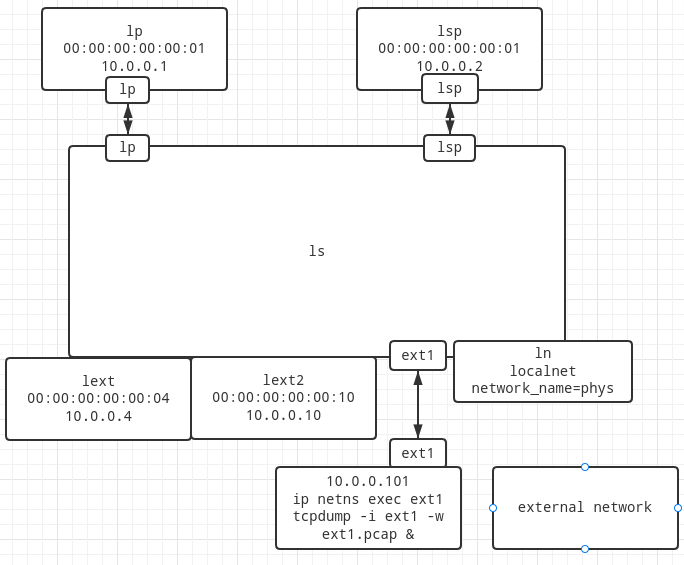

20220606 update - testing traffic from a localport to a localnet port with a single LXD container

This actually simulates the traffic from an SR-IOV VM's external port to the metadata localport described in https://blog.csdn.net/quqi99/article/details/116893909.

As shown in the figure (drawn with https://www.processon.com/diagrams), it implements the following physical topology (see https://bugzilla.redhat.com/show_bug.cgi?id=1974062):

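- Compared with the previous example, there is only one vSwitch and no vRouter, so the VMs and the external network are on the same subnet. Everything runs inside a single LXD container, because the number of vSwitches is independent of the number of hosts.

- On br-int (ls), two ports simulate VMs: lp (10.0.0.1, a localport) and lsp (10.0.0.2).

- The vSwitch has a localnet port (ln) that associates it with the external network, so a pair of OVS patch ports is created between br-int and br-phys. The logical ports of ls are bound to interfaces on br-int through external_ids:iface-id, e.g. `ovs-vsctl add-port br-int lp -- set interface lp type=internal external_ids:iface-id=lp`.

- br-phys gets an internal port ext1 (10.0.0.101) that simulates a VM on the external network. lext (10.0.0.4) and lext2 (10.0.0.10) are OVN external ports that simulate SR-IOV ports.

- When pinging from lp (10.0.0.1) to 10.0.0.4, tcpdump on ext1 should normally capture the ICMP packets. Because of an OVN bug (fixed by https://github.com/ovn-org/ovn/commit/1148580290d0ace803f20aeaa0241dd51c100630), they cannot be captured; see https://bugs.launchpad.net/ubuntu/+source/ovn/+bug/1943266.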

Steps:

```bash
cat << EOF | tee network.yml
version: 1
config:
  - type: physical
    name: ens3
    subnets:
      - type: static
        ipv4: true
        address: 192.168.122.122
        netmask: 255.255.255.0
        gateway: 192.168.122.1
        control: auto
  - type: nameserver
    address: 192.168.99.1
EOF
lxc launch ubuntu:focal hv1 -p juju-default --config=user.network-config="$(cat network.yml)"
lxc exec `lxc list |grep hv1 |awk -F '|' '{print $2}'` bash

# inside hv1
apt install ovn-central openvswitch-switch ovn-host net-tools -y
ovn-nbctl set-connection ptcp:6641
ovn-sbctl set-connection ptcp:6642
ovs-vsctl set open . external_ids:system-id=hv1 external_ids:ovn-remote=tcp:192.168.122.122:6642 external_ids:ovn-encap-type=geneve external_ids:ovn-encap-ip=192.168.122.122
ovs-vsctl add-br br-phys
ip link set br-phys up
ovs-vsctl set open . external-ids:ovn-bridge-mappings=phys:br-phys
systemctl restart ovn-controller
```

```bash
ovn-nbctl ls-add ls
ovn-nbctl lsp-add ls ln \
  -- lsp-set-type ln localnet \
  -- lsp-set-addresses ln unknown \
  -- lsp-set-options ln network_name=phys
ovn-nbctl --wait=sb ha-chassis-group-add hagrp
ovn-nbctl --wait=sb ha-chassis-group-add-chassis hagrp hv1 10

# create two external ports to simulate SR-IOV ports (for DHCP and metadata)
ovn-nbctl lsp-add ls lext
ovn-nbctl lsp-set-addresses lext "00:00:00:00:00:04 10.0.0.4 2001::4"
ovn-nbctl lsp-set-type lext external
hagrp_uuid=`ovn-nbctl --bare --columns _uuid find ha_chassis_group name=hagrp`
ovn-nbctl set logical_switch_port lext ha_chassis_group=$hagrp_uuid
ovn-nbctl lsp-add ls lext2
ovn-nbctl lsp-set-addresses lext2 "00:00:00:00:00:10 10.0.0.10 2001::10"
ovn-nbctl lsp-set-type lext2 external
ovn-nbctl set logical_switch_port lext2 ha_chassis_group=$hagrp_uuid
ovn-nbctl --wait=hv sync
```

```bash
# create a test VM (10.0.0.101) on the external network
ovs-vsctl add-port br-phys ext1 -- set interface ext1 type=internal
ip netns add ext1
ip link set ext1 netns ext1
ip netns exec ext1 ip link set ext1 up
ip netns exec ext1 ip addr add 10.0.0.101/24 dev ext1
ip netns exec ext1 ip addr add 2001::101/64 dev ext1

# create two test VMs (10.0.0.1 and 10.0.0.2)
ovn-nbctl lsp-add ls lp \
  -- lsp-set-type lp localport \
  -- lsp-set-addresses lp "00:00:00:00:00:01 10.0.0.1 2001::1" \
  -- lsp-add ls lsp \
  -- lsp-set-addresses lsp "00:00:00:00:00:02 10.0.0.2 2001::2"
ovs-vsctl add-port br-int lp -- set interface lp type=internal external_ids:iface-id=lp
ip netns add lp
ip link set lp netns lp
ip netns exec lp ip link set lp address 00:00:00:00:00:01
ip netns exec lp ip link set lp up
ip netns exec lp ip addr add 10.0.0.1/24 dev lp
ip netns exec lp ip addr add 2001::1/64 dev lp
ovn-nbctl --wait=hv sync
ovs-vsctl add-port br-int lsp -- set interface lsp type=internal external_ids:iface-id=lsp options:tx_pcap=lsp.pcap options:rxq_pcap=lsp-rx.pcap
ip netns add lsp
ip link set lsp netns lsp
ip netns exec lsp ip link set lsp address 00:00:00:00:00:02
ip netns exec lsp ip link set lsp up
ip netns exec lsp ip addr add 10.0.0.2/24 dev lsp
ip netns exec lsp ip addr add 2001::2/64 dev lsp
```

```bash
# start the tcpdump processes
ip netns exec ext1 tcpdump -i ext1 -w ext1.pcap &
ip netns exec lsp tcpdump -i lsp -w lsp.pcap &
sleep 2

# the VM (localport lp) pings lext and lext2 (external ports)
ip netns exec lp ip neigh add 10.0.0.4 lladdr 00:00:00:00:00:04 dev lp
ip netns exec lp ip -6 neigh add 2001::4 lladdr 00:00:00:00:00:04 dev lp
ip netns exec lp ip neigh add 10.0.0.10 lladdr 00:00:00:00:00:10 dev lp
ip netns exec lp ip -6 neigh add 2001::10 lladdr 00:00:00:00:00:10 dev lp
ip netns exec lp ping 10.0.0.4 -c 1 -w 1 -W 1
ip netns exec lp ping 10.0.0.10 -c 1 -w 1 -W 1
ip netns exec lp ping6 2001::4 -c 1 -w 1 -W 1
ip netns exec lp ping6 2001::10 -c 1 -w 1 -W 1
sleep 1
pkill tcpdump
sleep 1

# analyze the tcpdump output
tcpdump -r ext1.pcap -nnle
tcpdump -r ext1.pcap -nnle host 10.0.0.4 or host 10.0.0.10 or host 2001::4 or host 2001::10
```
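To compare runs (e.g. before and after the OVN fix mentioned above), you could count the echo requests per destination in the decoded capture. The sketch below assumes tcpdump's usual `-nnle` text format. The helper name and the sample lines are made up for illustration and are not output from this test, so they say nothing about whether the packets actually appear on ext1.

```bash
#!/usr/bin/env bash
# Hypothetical helper: count ICMP/ICMPv6 echo requests addressed to DST in the
# text printed by `tcpdump -r ext1.pcap -nnle` (read from stdin).
count_echo_requests() {
  local dst=$1
  grep -F -e "> ${dst}: ICMP echo request" -e "> ${dst}: ICMP6, echo request" | wc -l
}

# Illustrative lines in tcpdump's -nnle text format (made up)
pcap_text='12:00:01.000001 00:00:00:00:00:01 > 00:00:00:00:00:04, ethertype IPv4 (0x0800), length 98: 10.0.0.1 > 10.0.0.4: ICMP echo request, id 4242, seq 1, length 64
12:00:02.000001 00:00:00:00:00:01 > 00:00:00:00:00:10, ethertype IPv4 (0x0800), length 98: 10.0.0.1 > 10.0.0.10: ICMP echo request, id 4243, seq 1, length 64
12:00:03.000001 00:00:00:00:00:01 > 00:00:00:00:00:04, ethertype IPv6 (0x86dd), length 118: 2001::1 > 2001::4: ICMP6, echo request, id 4244, seq 1, length 64'

for dst in 10.0.0.4 10.0.0.10 2001::4 2001::10; do
  echo "$dst: $(count_echo_requests "$dst" <<< "$pcap_text")"
done

# Against a real capture (not run here):
# tcpdump -r ext1.pcap -nnle | count_echo_requests 10.0.0.4
```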

20220818 - LXD with OVN

```bash
#https://linuxcontainers.org/lxd/docs/master/howto/network_ovn_setup/
#https://github.com/lxc/lxc-ci/blob/master/bin/test-lxd-network-ovn
sudo apt install ovn-host ovn-central -y
sudo systemctl -a |grep ovn |awk '{print $1}' |xargs -i sudo systemctl disable {}
sudo systemctl -a |grep ovn |awk '{print $1}' |grep service |xargs -i sudo systemctl start {}
sudo ovs-vsctl set open_vswitch . \
  external_ids:ovn-remote=unix:/var/run/ovn/ovnsb_db.sock \
  external_ids:ovn-encap-type=geneve \
  external_ids:ovn-encap-ip=127.0.0.1
lxc network set lxdbr0 \
  ipv4.address=192.168.121.1/24 ipv4.nat=true \
  ipv4.dhcp.ranges=192.168.121.2-192.168.121.199 \
  ipv4.ovn.ranges=192.168.121.200-192.168.121.254 \
  ipv6.address=fd42:4242:4242:1010::1/64 ipv6.nat=true \
  ipv6.ovn.ranges=fd42:4242:4242:1010::200-fd42:4242:4242:1010::254
lxc network create ovntest --type=ovn network=lxdbr0
```
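The lxdbr0 settings above split the /24 into a DHCP range and an OVN range. The following IPv4-only sketch checks that both ranges lie inside the bridge subnet and do not overlap. `range_ok` and `ip2int` are hypothetical helpers. It does not reproduce LXD's own validation rules.

```bash
#!/usr/bin/env bash
# Hypothetical sanity check (IPv4 only) for the lxdbr0 settings above.
# Ranges are written START-END, as in ipv4.dhcp.ranges / ipv4.ovn.ranges.
ip2int() {
  local IFS=. a b c d
  read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# range_ok SUBNET_CIDR DHCP_RANGE OVN_RANGE -> exit status 0 if consistent
range_ok() {
  local net len mask x ds de os oe
  net=$(ip2int "${1%/*}"); len=${1#*/}
  mask=$(( (0xFFFFFFFF << (32 - len)) & 0xFFFFFFFF ))
  ds=$(ip2int "${2%-*}"); de=$(ip2int "${2#*-}")
  os=$(ip2int "${3%-*}"); oe=$(ip2int "${3#*-}")
  for x in "$ds" "$de" "$os" "$oe"; do
    (( (x & mask) == (net & mask) )) || return 1    # address outside the subnet
  done
  (( de < os || oe < ds ))                           # the two ranges are disjoint
}

if range_ok 192.168.121.1/24 192.168.121.2-192.168.121.199 192.168.121.200-192.168.121.254; then
  echo "lxdbr0 ranges look consistent"
fi
```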

```bash
lxc init ubuntu:22.04 c1
lxc config device override c1 eth0 network=ovntest
lxc start c1
```

```bash
lxc remote add faster https://mirrors.tuna.tsinghua.edu.cn/lxc-images/ --protocol=simplestreams --public
lxc image list faster:
lxc remote list
# If this fails with "Failed creating instance record: Failed detecting root disk device: No root device could be found",
# add a root disk to the default profile:
#lxc profile device add default root disk path=/ pool=default
#lxc profile show default
#lxc launch ubuntu:focal master -p juju-default --config=user.network-config="$(cat network.yml)"
#lxc launch faster:ubuntu/jammy i1
lxc init faster:ubuntu/jammy i1
lxc config device override i1 eth0 network=ovntest
lxc start i1
```

```
$ lxc list |grep i1
| i1 | RUNNING | 10.29.225.2 (eth0) | fd42:79f8:25b6:6f47:216:3eff:fe13:9cd6 (eth0) | CONTAINER | 0 |
$ lxc network list |grep -E 'lxdbr0|ovntest'
| lxdbr0 | bridge | YES | 192.168.121.1/24 | fd42:4242:4242:1010::1/64 | | 5 | CREATED |
| ovntest | ovn | YES | 10.29.225.1/24 | fd42:79f8:25b6:6f47::1/64 | | 1 | CREATED |
```

20221010 - LXD uses OVN

```bash
cd ~ && lxc launch faster:ubuntu/focal v1
lxc launch faster:ubuntu/focal v2
lxc launch faster:ubuntu/focal v3
# the subnet is 192.168.121.0/24
lxc config device override v1 eth0 ipv4.address=192.168.121.2
lxc config device override v2 eth0 ipv4.address=192.168.121.3
lxc config device override v3 eth0 ipv4.address=192.168.121.4
lxc stop v1 && lxc start v1 && lxc stop v2 && lxc start v2 && lxc stop v3 && lxc start v3
```

```bash
# on v1
lxc exec `lxc list |grep v1 |awk -F '|' '{print $2}'` bash
sudo apt install ovn-central -y
# quote the value so that the whole option list is assigned to OVN_CTL_OPTS
cat << 'EOF' |tee /etc/default/ovn-central
OVN_CTL_OPTS=" \
  --db-nb-addr=192.168.121.2 \
  --db-sb-addr=192.168.121.2 \
  --db-nb-cluster-local-addr=192.168.121.2 \
  --db-sb-cluster-local-addr=192.168.121.2 \
  --db-nb-create-insecure-remote=yes \
  --db-sb-create-insecure-remote=yes \
  --ovn-northd-nb-db=tcp:192.168.121.2:6641,tcp:192.168.121.3:6641,tcp:192.168.121.4:6641 \
  --ovn-northd-sb-db=tcp:192.168.121.2:6642,tcp:192.168.121.3:6642,tcp:192.168.121.4:6642"
EOF
rm -rvf /var/lib/ovn
systemctl restart ovn-central
ovn-nbctl show
```

```bash
# on v2
lxc exec `lxc list |grep v2 |awk -F '|' '{print $2}'` bash
sudo apt install ovn-central -y
cat << 'EOF' |tee /etc/default/ovn-central
OVN_CTL_OPTS=" \
  --db-nb-addr=192.168.121.3 \
  --db-sb-addr=192.168.121.3 \
  --db-nb-cluster-local-addr=192.168.121.3 \
  --db-sb-cluster-local-addr=192.168.121.3 \
  --db-nb-create-insecure-remote=yes \
  --db-sb-create-insecure-remote=yes \
  --ovn-northd-nb-db=tcp:192.168.121.2:6641,tcp:192.168.121.3:6641,tcp:192.168.121.4:6641 \
  --ovn-northd-sb-db=tcp:192.168.121.2:6642,tcp:192.168.121.3:6642,tcp:192.168.121.4:6642 \
  --db-nb-cluster-remote-addr=192.168.121.2 \
  --db-sb-cluster-remote-addr=192.168.121.2"
EOF
rm -rvf /var/lib/ovn
systemctl restart ovn-central
```

```bash
# on v3
lxc exec `lxc list |grep v3 |awk -F '|' '{print $2}'` bash
sudo apt install ovn-central -y
cat << 'EOF' |tee /etc/default/ovn-central
OVN_CTL_OPTS=" \
  --db-nb-addr=192.168.121.4 \
  --db-sb-addr=192.168.121.4 \
  --db-nb-cluster-local-addr=192.168.121.4 \
  --db-sb-cluster-local-addr=192.168.121.4 \
  --db-nb-create-insecure-remote=yes \
  --db-sb-create-insecure-remote=yes \
  --ovn-northd-nb-db=tcp:192.168.121.2:6641,tcp:192.168.121.3:6641,tcp:192.168.121.4:6641 \
  --ovn-northd-sb-db=tcp:192.168.121.2:6642,tcp:192.168.121.3:6642,tcp:192.168.121.4:6642 \
  --db-nb-cluster-remote-addr=192.168.121.2 \
  --db-sb-cluster-remote-addr=192.168.121.2"
EOF
rm -rvf /var/lib/ovn
systemctl restart ovn-central
```
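The three /etc/default/ovn-central files differ only in the node's own IP, plus two extra cluster-remote options on the joining members. The sketch below generates them from one function. `join_remotes` and `ovn_central_defaults` are hypothetical helpers, and the option names are copied from the files above rather than taken from ovn-ctl's documentation.

```bash
#!/usr/bin/env bash
# Hypothetical generator for the per-node /etc/default/ovn-central files above.
# join_remotes PORT IP... -> tcp:IP1:PORT,tcp:IP2:PORT,...
join_remotes() {
  local port=$1 ip
  local -a out=()
  shift
  for ip in "$@"; do out+=("tcp:${ip}:${port}"); done
  local IFS=,
  echo "${out[*]}"
}

# ovn_central_defaults THIS_IP BOOTSTRAP_IP MEMBER_IP...
ovn_central_defaults() {
  local me=$1 first=$2 i
  shift 2
  local -a opts=(
    "--db-nb-addr=$me" "--db-sb-addr=$me"
    "--db-nb-cluster-local-addr=$me" "--db-sb-cluster-local-addr=$me"
    "--db-nb-create-insecure-remote=yes" "--db-sb-create-insecure-remote=yes"
    "--ovn-northd-nb-db=$(join_remotes 6641 "$@")"
    "--ovn-northd-sb-db=$(join_remotes 6642 "$@")"
  )
  # only the nodes that join the cluster point at the bootstrap node
  if [ "$me" != "$first" ]; then
    opts+=("--db-nb-cluster-remote-addr=$first" "--db-sb-cluster-remote-addr=$first")
  fi
  printf 'OVN_CTL_OPTS=" \\\n'
  for (( i = 0; i < ${#opts[@]} - 1; i++ )); do
    printf '  %s \\\n' "${opts[i]}"
  done
  printf '  %s"\n' "${opts[${#opts[@]}-1]}"
}

members=(192.168.121.2 192.168.121.3 192.168.121.4)
ovn_central_defaults 192.168.121.3 192.168.121.2 "${members[@]}"
# On v2 this could replace the heredoc (not run here):
# ovn_central_defaults 192.168.121.3 192.168.121.2 "${members[@]}" | tee /etc/default/ovn-central
```

Generating the lists from one member array keeps the northd DB strings identical on all three nodes, which is easy to get wrong when editing three heredocs by hand.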

```bash
OVN_NB_DB=tcp:192.168.121.2:6641,tcp:192.168.121.3:6641,tcp:192.168.121.4:6641 ovn-nbctl show
OVN_SB_DB=tcp:192.168.121.2:6642,tcp:192.168.121.3:6642,tcp:192.168.121.4:6642 ovn-sbctl show
```

```bash
# inside v1, v2, v3
sudo apt install ovn-host -y
sudo ovs-vsctl set open_vswitch . \
  external_ids:ovn-encap-type=geneve \
  external_ids:ovn-remote="unix:/var/run/ovn/ovnsb_db.sock" \
  external_ids:ovn-encap-ip=$(ip r get 192.168.121.1 | grep -v cache | awk '{print $5}')
sudo ovs-vsctl show |grep br-int
```
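`awk '{print $5}'` works above because 192.168.121.1 is directly connected, so field 5 of `ip r get` happens to be the source address. When the route goes "via" a gateway, field 5 is the device name instead. The hypothetical helper below looks for the token after "src". The sample outputs imitate `ip r get` and are not captured from this setup.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the address that follows "src" in `ip route get`
# output instead of assuming it is field 5.
route_src() {
  awk '{ for (i = 1; i < NF; i++) if ($i == "src") { print $(i + 1); exit } }'
}

# Illustrative outputs in the format of `ip r get` (made up)
direct='192.168.121.1 dev eth0 src 192.168.121.2 uid 0
    cache'
via='8.8.8.8 via 192.168.121.1 dev eth0 src 192.168.121.2 uid 0
    cache'

route_src <<< "$direct"                       # 192.168.121.2
route_src <<< "$via"                          # 192.168.121.2
grep -v cache <<< "$via" | awk '{print $5}'   # eth0, what field 5 gives here
```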

```bash
# inside v1, v2, v3
sudo apt install snapd -y
sudo snap install lxd
#sudo usermod -aG lxd $USER && sudo chown -R $USER ~/.config/
export EDITOR=vim
/snap/bin/lxd init --auto

# LXD connects to OVN
/snap/bin/lxc config set network.ovn.northbound_connection=tcp:192.168.121.2:6641,tcp:192.168.121.3:6641,tcp:192.168.121.4:6641

# create a bridged network for use as an OVN uplink network - https://discuss.linuxcontainers.org/t/ovn-high-availability-cluster-tutorial/11033
lxc network create lxdbr0 --target=v1
lxc network create lxdbr0 --target=v2
lxc network create lxdbr0 --target=v3
# ipv4.dhcp.ranges is required to specify ipv4.ovn.ranges;
# ipv4.ovn.ranges is used for the OVN networks' router IPs on the uplink network
lxc network create lxdbr0 \
  ipv4.address=192.168.121.1/24 \
  ipv4.nat=true \
  ipv4.dhcp.ranges=192.168.121.5-192.168.121.10 \
  ipv4.ovn.ranges=192.168.121.11-192.168.121.20

# create an OVN network using the lxdbr0 bridge as an uplink
lxc network create ovn0 --type=ovn network=lxdbr0

# inside v3
lxc shell v3
lxc init images:ubuntu/focal c1
lxc config device add c1 eth0 nic network=ovn0
```

20230309 - A good network design for testing on a single machine with a single NIC

If a machine has only one NIC (eno1), how can it host both OpenStack and LXD experiments at the same time?

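- OpenStack uses OVS bridges throughout. East-west traffic uses br-data, but only for non-tunnel networks; tunnel traffic goes through the Geneve tunnel. North-south traffic can reuse br-data as well. For both non-tunnel and tunnel networks, using br-data for north-south traffic does not affect east-west traffic.

- LXD is best used with a Linux bridge. LXD can also use an OVS bridge, but it is not yet clear whether WOL etc. would still work that way, or whether it would be more cumbersome.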

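So the design can be as follows:

- Create br-eth0, a Linux bridge attached to eno1 that also supports WOL, used for LXD and the management network.

- Create br-data, an OVS bridge. On a single host, br-data needs no additional NIC. With multiple hosts, br-data needs another NIC, which can be provided by a veth pair between br-eth0 and br-data.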

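Following this idea, the network in devstack can be designed like this. Create an OVS bridge (br-data) and a veth pair (veth-ex) between the Linux bridge (br-eth0) and br-data; when cross-host connectivity is needed, add veth-ex to br-data. Remember to assign the IP 10.0.1.1; the commands below put it on br-data, to which veth-ex is attached. br-data is then used for both east-west (OVS_PHYSICAL_BRIDGE=br-data) and north-south (PUBLIC_BRIDGE=br-data) traffic, and tenants' non-tunnel traffic (e.g. VLAN) reaches the other machines east-west through veth-ex (10.0.1.1). See also the devstack blog post: https://blog.csdn.net/quqi99/article/details/97622336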

```bash
sudo ip l add name veth-br-eth0 type veth peer name veth-ex >/dev/null 2>&1
sudo ip l set dev veth-br-eth0 up
sudo ip l set dev veth-ex up
sudo ip l set veth-br-eth0 master br-eth0
sudo ovs-vsctl --may-exist add-br br-data
sudo ovs-vsctl --may-exist add-port br-data veth-ex
sudo ip addr add 10.0.1.1/24 dev br-data >/dev/null 2>&1
```

```bash
# devstack local.conf settings
PUBLIC_INTERFACE=veth-ex
OVS_PHYSICAL_BRIDGE=br-data
PUBLIC_BRIDGE=br-data
HOST_IP=10.0.1.1
FIXED_RANGE=10.0.1.0/24
NETWORK_GATEWAY=10.0.1.1
PUBLIC_NETWORK_GATEWAY=192.168.99.1
FLOATING_RANGE=192.168.99.0/24
Q_FLOATING_ALLOCATION_POOL=start=192.168.99.240,end=192.168.99.249
```

The following uses netplan to implement br-eth0 with WOL support (tested, it works). The drawback is that post scripts cannot easily be added.

```bash
# Create the Linux bridge br-eth0 on eno1 and enable WOL (netplan + systemd-networkd)
sudo systemctl stop NetworkManager.service
sudo systemctl disable NetworkManager.service
sudo systemctl stop NetworkManager-wait-online.service
sudo systemctl disable NetworkManager-wait-online.service
sudo systemctl stop NetworkManager-dispatcher.service
sudo systemctl disable NetworkManager-dispatcher.service
sudo apt install netplan.io openvswitch-switch -y
sudo apt install networkd-dispatcher -y
cat << EOF |sudo tee /etc/netplan/90-local.yaml
network:
  version: 2
  renderer: networkd
  ethernets:
    eno1:
      dhcp4: no
      match:
        macaddress: f8:32:e4:be:87:cd
      wakeonlan: true
  bridges:
    br-eth0:
      dhcp4: yes
      interfaces:
        - eno1
      # Use 'etherwake F8:32:E4:BE:87:CD' to wol in bridge
      macaddress: f8:32:e4:be:87:cd
      #addresses:
      #  - 192.168.99.235/24
      #gateway4: 192.168.99.1
      #nameservers:
      #  addresses:
      #    - 192.168.99.1
EOF
sudo netplan generate
sudo netplan apply
```
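The netplan config pins br-eth0's MAC to eno1's (f8:32:e4:be:87:cd), and the comment suggests waking the host with `etherwake F8:32:E4:BE:87:CD`. For reference, a Wake-on-LAN magic packet payload is 6 bytes of 0xFF followed by the target MAC repeated 16 times (102 bytes). The sketch below only builds that payload as hex; sending it is left to a tool such as etherwake. The function name is hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helper: build the Wake-on-LAN magic packet payload as hex:
# 6 bytes of 0xFF followed by the target MAC repeated 16 times (102 bytes).
wol_payload_hex() {
  local mac=${1//:/} out=ffffffffffff i
  mac=${mac,,}                                # lower-case, so F8:32:... == f8:32:...
  [[ $mac =~ ^[0-9a-f]{12}$ ]] || return 1    # reject anything that is not a MAC
  for (( i = 0; i < 16; i++ )); do out+=$mac; done
  echo "$out"
}

p=$(wol_payload_hex F8:32:E4:BE:87:CD)
echo "${#p} hex chars = $(( ${#p} / 2 )) bytes"    # 204 hex chars = 102 bytes
```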

The drawback of netplan is that hooks such as post scripts are not easy to add. The method below tries to use networkd-dispatcher for this, but it is untested.

```bash
sudo apt install netplan.io openvswitch-switch -y
sudo apt install networkd-dispatcher -y
cat << EOF |sudo tee /etc/networkd-dispatcher/off.d/start.sh
#!/bin/bash -e
#IFACE='eno1'
if [ "\$IFACE" = "eno1" -o "\$IFACE" = "br-data" ]; then
  if ip link show eno1 | grep "state DOWN" > /dev/null && ! (arp -ni br-data | grep "ether" > /dev/null); then
    date > /tmp/start.txt;
    /usr/bin/ovs-vsctl --may-exist add-port br-eth0 eno1
    ip l add name veth-br-eth0 type veth peer name veth-ex
    ip l set dev veth-br-eth0 up
    ip l set dev veth-ex up
    ip l set veth-br-eth0 master br-eth0
  fi
fi
EOF
cat << EOF |sudo tee /etc/networkd-dispatcher/routable.d/stop.sh
#!/bin/bash -e
if [ "\$IFACE" = "eno1" -o "\$IFACE" = "br-data" ]; then
  if ip link show eno1 | grep "state UP" > /dev/null || arp -ni br-data | grep "ether" > /dev/null; then
    date > /tmp/stop.txt;
    systemctl stop hostapd;
  fi
fi
EOF
sudo chmod +x /etc/networkd-dispatcher/off.d/start.sh
sudo chmod +x /etc/networkd-dispatcher/routable.d/stop.sh
```

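So it may be necessary to switch from netplan back to NetworkManager. Below is an untested way to implement an OVS bridge using the classic /etc/network/interfaces (ifupdown) syntax. It is still better to implement a Linux bridge with NetworkManager.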

auto br-eth0

allow-ovs br-eth0

iface br-eth0 inet static

pre-up /usr/bin/ovs-vsctl -- --may-exist add-br br-eth0

pre-up /usr/bin/ovs-vsctl -- --may-exist add-port br-eth0 eno1

address 192.168.99.125

gateway 192.168.99.1

network 192.168.99.0

netmask 255.255.255.0

broadcast 192.168.99.255

ovs_type OVSBridge

ovs_ports eno1

#sudo ip -6 addr add 2001:2:3:4500:fa32:e4ff:febe:87cd/64 dev br-eth0

iface br-phy inet6 static

pre-up modprobe ipv6

address 2001:2:3:4500:fa32:e4ff:febe:87cd

netmask 64

gateway 2001:2:3:4500::1

auto eno1

allow-br-eth0 eno1

iface eno1 inet manual

ovs_bridge br-eth0

ovs_type OVSPort
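The static IPv6 address used for br-eth0 above (2001:2:3:4500:fa32:e4ff:febe:87cd) appears to be the modified EUI-64 (SLAAC-style) address for the MAC f8:32:e4:be:87:cd used for eno1 earlier: flip the universal/local bit of the first octet (f8 becomes fa) and insert ff:fe in the middle. A small bash sketch of that derivation, following RFC 4291 Appendix A; the function name mac_to_eui64 is hypothetical and the input is not validated:

```shell
#!/usr/bin/env bash
# Derive the modified EUI-64 interface identifier (RFC 4291, Appendix A)
# from a 48-bit MAC address, e.g. f8:32:e4:be:87:cd -> fa32:e4ff:febe:87cd.
mac_to_eui64() {
  local mac=${1,,}          # lower-case the input (bash 4+)
  local IFS=:
  local -a b=($mac)         # split into the 6 octets
  local first
  # Flip the universal/local bit (0x02) of the first octet.
  first=$(printf '%02x' $(( 0x${b[0]} ^ 0x02 )))
  # Insert ff:fe between the OUI (first 3 octets) and the NIC-specific part.
  printf '%s%s:%sff:fe%s:%s%s\n' "$first" "${b[1]}" "${b[2]}" "${b[3]}" "${b[4]}" "${b[5]}"
}

# Usage: prepend the /64 prefix advertised on the link
#   echo "2001:2:3:4500:$(mac_to_eui64 f8:32:e4:be:87:cd)"
```

Leading zeros inside each 16-bit group are kept (e.g. 0200:00ff:fe00:0011), which is still valid IPv6 notation.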

Below is an example of a Linux bridge configured with ifupdown (from: https://blog.csdn.net/quqi99/article/details/86538603), for reference only:

root@node1:~# cat /etc/network/interfaces

auto lo

iface lo inet loopback

auto eth0

iface eth0 inet manual

auto br-eth0

iface br-eth0 inet static

address 192.168.99.124/24

gateway 192.168.99.1

bridge_ports eth0

dns-nameservers 192.168.99.1

bridge_stp on

bridge_fd 0

bridge_maxwait 0

up echo -n 0 > /sys/devices/virtual/net/$IFACE/bridge/multicast_snooping

# for stateless it's 'inet6 auto', for stateful it's 'inet6 dhcp'

iface br-eth0 inet6 auto

#iface eth0 inet6 static

#address 2001:192:168:99::135

#gateway 2001:192:168:99::1

#netmask 64

# use SLAAC to get a global IPv6 address from the router

# do not enable IPv6 forwarding, otherwise SLAAC gets disabled

# 'sleep 5' works around a bug, and 'dhcp 1' means that (stateless) info should be obtained from a DHCPv6 server

up echo 0 > /proc/sys/net/ipv6/conf/$IFACE/disable_ipv6

up sleep 5

autoconf 1

accept_ra 2

dhcp 1

20230321 - OpenStack OVN test

1, Use LXD to create two chassis

sudo snap install lxd --classic

sudo usermod -aG lxd $USER

sudo chown -R $USER ~/.config/

export EDITOR=vim

lxc network create br-ovntest

lxc network set br-ovntest ipv4.address=10.5.21.1/16

lxc network set br-ovntest ipv4.dhcp=false

lxc network set br-ovntest ipv4.nat=true

lxc network set br-ovntest ipv6.address none

lxc network show br-ovntest

lxc profile create ovntest 2>/dev/null || echo "ovntest profile already exists"

lxc profile device set ovntest root pool=default

cat << EOF | tee /tmp/ovntest.yaml
config:
  boot.autostart: "true"
  linux.kernel_modules: openvswitch,nbd,ip_tables,ip6_tables
  security.nesting: "true"
  security.privileged: "true"
description: ""
devices:
  #multiple NICs must come from different bridges, otherwise it will throw: Failed start validation for device "ens3"
  ens3:
    mtu: "9000"
    name: ens3
    nictype: bridged
    parent: br-ovntest
    type: nic
  ens4:
    mtu: "9000"
    name: ens4
    nictype: bridged
    parent: br-eth0
    type: nic
  kvm:
    path: /dev/kvm
    type: unix-char
  mem:
    path: /dev/mem
    type: unix-char
  root:
    path: /
    pool: default
    type: disk
  tun:
    path: /dev/net/tun
    type: unix-char
name: juju-default
used_by: []
EOF

cat /tmp/ovntest.yaml |lxc profile edit ovntest

cat << EOF | tee network.yml
version: 1
config:
  - type: physical
    name: ens3
    subnets:
      - type: static
        ipv4: true
        address: 10.5.21.11
        netmask: 255.255.0.0
        gateway: 10.5.21.1
        control: auto
  - type: nameserver
    address: 192.168.99.1
EOF

lxc launch jammy n1 -p ovntest --config=user.network-config="$(cat network.yml)"

#lxc network attach br-eth0 n1 ens4

cat << EOF | tee network.yml
version: 1
config:
  - type: physical
    name: ens3
    subnets:
      - type: static
        ipv4: true
        address: 10.5.21.12
        netmask: 255.255.0.0
        gateway: 10.5.21.1
        control: auto
  - type: nameserver
    address: 192.168.99.1
EOF

lxc launch jammy n2 -p ovntest --config=user.network-config="$(cat network.yml)"
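The two network.yml files differ only in the ens3 address. A small sketch that renders the same cloud-init (version 1) network config from a parameter, so each container can be launched without rewriting the file; the gateway and DNS defaults are taken from the files above, and the function name render_network_config is hypothetical:

```shell
#!/usr/bin/env bash
# Render the cloud-init network-config (version 1) used for n1/n2.
# $1 = ens3 address, $2 = gateway (default 10.5.21.1), $3 = DNS (default 192.168.99.1)
render_network_config() {
  local addr=$1 gw=${2:-10.5.21.1} dns=${3:-192.168.99.1}
  cat <<EOF
version: 1
config:
  - type: physical
    name: ens3
    subnets:
      - type: static
        ipv4: true
        address: ${addr}
        netmask: 255.255.0.0
        gateway: ${gw}
        control: auto
  - type: nameserver
    address: ${dns}
EOF
}

# Usage, same lxc launch command as above:
#   lxc launch jammy n1 -p ovntest --config=user.network-config="$(render_network_config 10.5.21.11)"
#   lxc launch jammy n2 -p ovntest --config=user.network-config="$(render_network_config 10.5.21.12)"
```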

2, Install ovn

#on n1

apt install ovn-central openvswitch-switch ovn-host net-tools -y

ovn-nbctl set-connection ptcp:6641

ovn-sbctl set-connection ptcp:6642

#on n2

apt install openvswitch-switch ovn-host net-tools -y

#on both n1 and n2

ovs-vsctl set open_vswitch . external_ids:ovn-remote=tcp:10.5.21.11:6642 \
  external_ids:ovn-encap-ip=$(ip addr show ens3| awk '$1 == "inet" {print $2}' | cut -f1 -d/) \
  external_ids:ovn-encap-type=geneve external_ids:system-id=$(hostname)
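The ovn-encap-ip value above comes from parsing `ip addr show ens3`. If ens3 ever carries a secondary address, that pipeline prints two lines and the ovs-vsctl command breaks. A minimal sketch of a helper that stops at the first IPv4 address; the name first_ipv4 is hypothetical:

```shell
#!/usr/bin/env bash
# Print the first IPv4 address (prefix length stripped) from `ip addr show`
# output read on stdin. `exit` stops after the first match, so an interface
# with secondary addresses still yields exactly one value for ovn-encap-ip.
first_ipv4() {
  awk '$1 == "inet" { split($2, a, "/"); print a[1]; exit }'
}

# Usage on a chassis (assumes ens3 carries the tunnel IP, as above):
#   ovs-vsctl set open_vswitch . external_ids:ovn-encap-ip=$(ip addr show ens3 | first_ipv4)
```

Lines starting with `inet6` are skipped because awk compares the whole first field with "inet".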

3, East-west (L2 only, same subnet)

#Create all datapaths (two vSwitches and one vRouter)

ovn-nbctl ls-add private

ovn-nbctl ls-add ext_net

ovn-nbctl lr-add provider-router

#Connect private to provider-router

ovn-nbctl lrp-add provider-router lrp-sg21 00:00:00:00:00:01 192.168.21.1/24

ovn-nbctl lsp-add private lrp-sg21-patch

ovn-nbctl lsp-set-type lrp-sg21-patch router

ovn-nbctl lsp-set-addresses lrp-sg21-patch 00:00:00:00:00:01

ovn-nbctl lsp-set-options lrp-sg21-patch router-port=lrp-sg21

# create 2 test VMs

ovn-nbctl lsp-add private vm1-ovn

ovn-nbctl lsp-set-addresses vm1-ovn "00:00:00:00:00:11 192.168.21.11"

ovn-nbctl lsp-set-port-security vm1-ovn "00:00:00:00:00:11 192.168.21.11"

ovn-nbctl lsp-add private vm2-ovn

ovn-nbctl lsp-set-addresses vm2-ovn "00:00:00:00:00:12 192.168.21.12"

ovn-nbctl lsp-set-port-security vm2-ovn "00:00:00:00:00:12 192.168.21.12"

#create dhcp

ovn-nbctl dhcp-options-create 192.168.21.0/24

DHCP_OPTION_ID=$(ovn-nbctl dhcp-options-list)

ovn-nbctl dhcp-options-set-options $DHCP_OPTION_ID server_id=192.168.21.1 server_mac=00:00:00:00:00:01 lease_time=72000 router=192.168.21.1

ovn-nbctl lsp-set-dhcpv4-options vm1-ovn $DHCP_OPTION_ID

ovn-nbctl lsp-get-dhcpv4-options vm1-ovn

ovn-nbctl lsp-set-dhcpv4-options vm2-ovn $DHCP_OPTION_ID

ovn-nbctl lsp-get-dhcpv4-options vm2-ovn

#on n1

#ip netns add vm1

#ip link add vm1-eth0 type veth peer name veth-vm1

#ip link set veth-vm1 up

#ip link set vm1-eth0 netns vm1

#ip netns exec vm1 ip link set vm1-eth0 address 00:00:00:00:00:11

#ip netns exec vm1 ip link set vm1-eth0 up

#ovs-vsctl add-port br-int veth-vm1 -- set Interface veth-vm1 external_ids:iface-id=vm1-ovn

ip netns add vm1

ovs-vsctl add-port br-int vm1 -- set interface vm1 type=internal

ip link set vm1 netns vm1

ovs-vsctl set Interface vm1 external_ids:iface-id=vm1-ovn

ip netns exec vm1 ip link set vm1 address 00:00:00:00:00:11

ip netns exec vm1 ip link set vm1 up

ip netns exec vm1 dhclient vm1

#ip netns exec vm1 ip addr add 192.168.21.11/24 dev vm1

#ip netns exec vm1 ip route add default via 192.168.21.1 dev vm1

ip netns exec vm1 ip addr show

#on n2

ip netns add vm2

ovs-vsctl add-port br-int vm2 -- set interface vm2 type=internal

ovs-vsctl set Interface vm2 external_ids:iface-id=vm2-ovn

ip link set vm2 netns vm2

ip netns exec vm2 ip link set vm2 address 00:00:00:00:00:12

ip netns exec vm2 ip link set vm2 up

ip netns exec vm2 dhclient vm2

#ip netns exec vm2 ip addr add 192.168.21.12/24 dev vm2

#ip netns exec vm2 ip route add default via 192.168.21.1 dev vm2

ip netns exec vm2 ip addr show
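The n1 and n2 steps above are the same sequence with different names, which is how a vm1/vm2 mix-up can slip in. A sketch of the same steps as one parameterised function, with a hypothetical DRY_RUN switch that prints the commands instead of running them (add_fake_vm and run are hypothetical names; setting iface-id in the same ovs-vsctl call as add-port is a small reordering of the steps above):

```shell
#!/usr/bin/env bash
# Run a command, or only print it when DRY_RUN is set.
run() {
  if [ -n "${DRY_RUN:-}" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Create a test "VM": a netns holding an OVS internal port bound to an OVN logical port.
# $1 = netns/port name, $2 = MAC (must match the LSP addresses), $3 = logical switch port
add_fake_vm() {
  local name=$1 mac=$2 lsp=$3
  run ip netns add "$name"
  run ovs-vsctl add-port br-int "$name" -- set interface "$name" type=internal external_ids:iface-id="$lsp"
  run ip link set "$name" netns "$name"
  run ip netns exec "$name" ip link set "$name" address "$mac"
  run ip netns exec "$name" ip link set "$name" up
  run ip netns exec "$name" dhclient "$name"
}

# On n1: add_fake_vm vm1 00:00:00:00:00:11 vm1-ovn
# On n2: add_fake_vm vm2 00:00:00:00:00:12 vm2-ovn
```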

#test it

ip netns exec vm1 ping 192.168.21.12

ip netns exec vm1 ping 192.168.21.1

#View the port number corresponding to vm1, here it's 2

ovs-ofctl dump-ports-desc br-int |grep addr

#View the mapping between port and datapath

# ovs-dpctl show |grep port

#ovn-trace arp request(arp.op==1) and icmp from vm1 to vm2

root@n1:~# ovn-nbctl show
switch 0d6b50df-c1c9-4787-9bd6-657eb5938178 (private)
    port vm1-ovn
        addresses: ["00:00:00:00:00:11 192.168.21.11"]
    port vm2-ovn
        addresses: ["00:00:00:00:00:12 192.168.21.12"]

ovn-trace private 'inport == "vm1-ovn" && eth.src == 00:00:00:00:00:11 && eth.dst == 00:00:00:00:00:12 && arp.op == 1 && arp.sha == 00:00:00:00:00:11 && arp.spa == 192.168.21.11 && arp.tha == 00:00:00:00:00:12 && arp.tpa == 192.168.21.12'

ovn-trace private 'inport == "vm1-ovn" && eth.src == 00:00:00:00:00:11 && eth.dst == 00:00:00:00:00:12 && ip4.src == 192.168.21.11 && ip4.dst == 192.168.21.12' --ct new

ovn-sbctl lflow-list |grep vm1-ovn

#ovs-trace arp request(arp_op=1) and icmp from vm1 to vm2

ovs-appctl ofproto/trace br-int in_port=2,arp,arp_op=1,arp_spa=192.168.21.11,arp_tpa=192.168.21.12,arp_sha=00:00:00:00:00:11,dl_src=00:00:00:00:00:11,dl_dst=ff:ff:ff:ff:ff:ff

ovs-appctl ofproto/trace br-int in_port=2,icmp,nw_src=192.168.21.11,nw_dst=192.168.21.12,dl_dst=00:00:00:00:00:12,dl_src=00:00:00:00:00:11
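Because vm1-ovn has port security set, a trace only passes if eth.src, arp.sha and arp.spa match its MAC/IP. A sketch of two hypothetical helpers that build ovn-trace microflows from parameters so those fields stay consistent; the ARP request uses the broadcast eth.dst, as a real ARP request would, and `ip.ttl == 64` is an assumption added so that traces crossing provider-router start with a realistic TTL:

```shell
#!/usr/bin/env bash
# Hypothetical helpers printing ovn-trace microflows for one logical port.

# ARP request: broadcast destination, sender fields = the port's own MAC/IP.
# $1 = inport, $2 = source MAC, $3 = source IP, $4 = target IP
arp_request_flow() {
  local inport=$1 smac=$2 sip=$3 tip=$4
  printf 'inport == "%s" && eth.src == %s && eth.dst == ff:ff:ff:ff:ff:ff && arp.op == 1 && arp.sha == %s && arp.spa == %s && arp.tpa == %s\n' \
    "$inport" "$smac" "$smac" "$sip" "$tip"
}

# IPv4 packet with an explicit TTL.
# $1 = inport, $2 = source MAC, $3 = destination MAC, $4 = source IP, $5 = destination IP
ip4_flow() {
  local inport=$1 smac=$2 dmac=$3 sip=$4 dip=$5
  printf 'inport == "%s" && eth.src == %s && eth.dst == %s && ip4.src == %s && ip4.dst == %s && ip.ttl == 64\n' \
    "$inport" "$smac" "$dmac" "$sip" "$dip"
}

# Usage on n1:
#   ovn-trace private "$(arp_request_flow vm1-ovn 00:00:00:00:00:11 192.168.21.11 192.168.21.12)"
#   ovn-trace private "$(ip4_flow vm1-ovn 00:00:00:00:00:11 00:00:00:00:00:12 192.168.21.11 192.168.21.12)" --ct new
```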

4, Access the qg port (the router's gateway port on ext_net)

#Connect ext_net to provider-router

ovn-nbctl lrp-add provider-router lrp-qg21 00:00:00:00:00:10 192.168.99.10/24

ovn-nbctl lsp-add ext_net lrp-qg21-patch

ovn-nbctl lsp-set-type lrp-qg21-patch router

ovn-nbctl lsp-set-addresses lrp-qg21-patch 00:00:00:00:00:10

ovn-nbctl lsp-set-options lrp-qg21-patch router-port=lrp-qg21

ip netns exec vm1 ping -c1 192.168.99.10

5, North-south - SNAT

#Connect ext_net to external network

ovn-nbctl lsp-add ext_net provnet-localnet

ovn-nbctl lsp-set-addresses provnet-localnet unknown

ovn-nbctl lsp-set-type provnet-localnet localnet

ovn-nbctl lsp-set-options provnet-localnet network_name=physnet1

#Only on n1 (the gateway chassis); n2 doesn't need it because our tenant network is a tunnel network

ovs-vsctl add-br br-data

ovs-vsctl add-port br-data ens4

ovs-vsctl set Open_vSwitch . external-ids:ovn-bridge-mappings=physnet1:br-data

#ovs-vsctl set Open_vSwitch . external-ids:ovn-cms-options=\"enable-chassis-as-gw\"

ip link set dev br-data up

ovs-vsctl get Open_vSwitch . external-ids

#Schedule the qg port onto a single chassis, or onto multiple chassis (ha-chassis-group)

ovn-nbctl lrp-set-gateway-chassis lrp-qg21 n1 2

#ovn-nbctl set logical_router_port lrp-qg21 options:redirect-chassis=n1

ovn-nbctl list logical_router_port

# create ha-chassis-group

#ovn-nbctl ha-chassis-group-add ha1

#ovn-nbctl ha-chassis-group-add-chassis ha1 n1 1

#ovn-nbctl ha-chassis-group-add-chassis ha1 n2 2

#ha1_uuid=`ovn-nbctl --bare --columns _uuid find ha_chassis_group name="ha1"`

#ovn-nbctl set Logical_Router_Port lrp-qg21 ha_chassis_group=$ha1_uuid

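#Default route via the upstream gateway 192.168.99.1 (the router's own qg address 192.168.99.10 cannot be its own next hop). NOTE: it is optional for this test, because 192.168.99.1 is in the directly connected 192.168.99.0/24 subnet of lrp-qg21

ovn-nbctl lr-route-add provider-router "0.0.0.0/0" 192.168.99.1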

#SNAT

ovn-nbctl -- --id=@nat create nat type="snat" logical_ip=192.168.21.0/24 external_ip=192.168.99.10 -- add logical_router provider-router nat @nat

#ovn-nbctl lr-nat-del provider-router snat 192.168.21.0/24

ovn-nbctl lr-nat-list provider-router

ip netns exec vm1 ping -c1 192.168.99.1

6, North-south - DNAT

#DNAT

ovn-nbctl -- --id=@nat create nat type="dnat" logical_ip=192.168.21.11 external_ip=192.168.99.11 -- add logical_router provider-router nat @nat

ping -c1 192.168.99.11

