PyTorch's DenseNet module lives in torchvision's models package (torchvision.models.densenet).

A DenseNet is built from several DenseBlocks.

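The DenseNet paper defines four variants: DenseNet-121, DenseNet-169, DenseNet-201 and DenseNet-264. (torchvision itself ships densenet121, densenet161, densenet169 and densenet201.)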

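Take DenseNet-121 as an example: 121 is the number of convolutional layers plus fully connected layers (120 convolutional layers and 1 fully connected layer).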

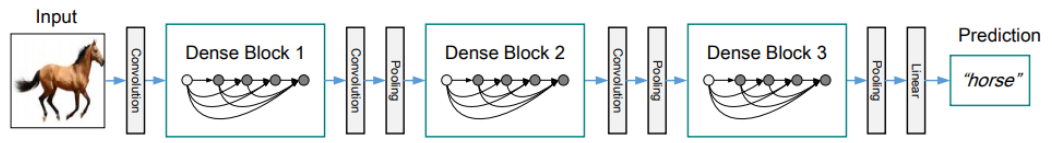

(Figure 1)

As Figure 1 shows, every DenseNet variant consists of 4 DenseBlocks.

DenseNet-121: the first DenseBlock has 6 DenseLayers, the second has 12, the third has 24 and the fourth has 16.

The configurations of DenseNet-169, 201 and 264 are also listed in Figure 1.
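The numbers in the variant names can be checked from the per-block DenseLayer counts. The sketch below assumes the usual way of counting (one stem convolution, two convolutions per DenseLayer, one convolution per transition between blocks, one fully connected layer). The helper name count_weighted_layers is hypothetical, and the configurations for 169 and 201 are the ones torchvision uses.

```python
def count_weighted_layers(block_config):
    """Count conv + fully connected layers for a DenseNet block configuration."""
    stem_conv = 1                             # the first 7x7 convolution
    dense_convs = 2 * sum(block_config)       # each DenseLayer has a 1x1 and a 3x3 conv
    transition_convs = len(block_config) - 1  # one 1x1 conv between consecutive blocks
    classifier = 1                            # the final fully connected layer
    return stem_conv + dense_convs + transition_convs + classifier


configs = {
    "DenseNet-121": (6, 12, 24, 16),
    "DenseNet-169": (6, 12, 32, 32),
    "DenseNet-201": (6, 12, 48, 32),
}
for name, cfg in configs.items():
    print(name, count_weighted_layers(cfg))
```

For DenseNet-121 this gives 1 + 116 + 3 + 1 = 121, matching the 120 convolutional layers plus 1 fully connected layer mentioned above.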

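Next, a closer look at the DenseBlock.

I. DenseBlock

(Figure 2)

As Figure 2 shows, a DenseBlock is made of several DenseLayers. The output of each earlier DenseLayer is fed to every DenseLayer that comes after it.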
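The wiring inside a DenseBlock can be sketched without any tensors. The snippet below is only an illustration: dense_block_inputs is a hypothetical helper that lists, for each DenseLayer, which earlier outputs it receives.

```python
def dense_block_inputs(num_layers):
    """For each DenseLayer, list the sources whose feature maps it receives."""
    sources = ["block_input"]
    wiring = {}
    for i in range(1, num_layers + 1):
        name = f"denselayer{i}"
        wiring[name] = list(sources)  # everything produced so far feeds this layer
        sources.append(name)          # this layer's output is visible to all later layers
    return wiring


wiring = dense_block_inputs(4)
for layer, inputs in wiring.items():
    print(layer, "<-", inputs)

# Total number of direct connections in a block of 4 layers: 1 + 2 + 3 + 4
total = sum(len(inputs) for inputs in wiring.values())
print(total)
```

In general a block with L layers has L(L+1)/2 such connections, which is where the name "dense" comes from.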

Below is a DenseNet made of 3 DenseBlocks:

Code structure:

Now let's look at the code of _densenet():

    def _densenet(arch, growth_rate, block_config, num_init_features, pretrained, progress,
                  **kwargs):
        model = DenseNet(growth_rate, block_config, num_init_features, **kwargs)
        if pretrained:
            _load_state_dict(model, model_urls[arch], progress)
        return model

As you can see, _densenet() mainly calls DenseNet() to build the network. It also does one more step: if a pretrained model is requested, _densenet() loads the pretrained weights for us.

Next is the code of DenseNet(). Let's see what it does:

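From the code below we can see that DenseNet() builds the first convolutional layer, the four DenseBlocks, a TransitionLayer after every DenseBlock except the last one, and the classifier. Note that the block_config parameter used to build the DenseBlocks depends on the DenseNet variant. For DenseNet-121 it is (6, 12, 24, 16), meaning the 4 DenseBlocks contain 6, 12, 24 and 16 DenseLayers respectively.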

    class DenseNet(nn.Module):
        def __init__(self, growth_rate=32, block_config=(6, 12, 24, 16),
                     num_init_features=64, bn_size=4, drop_rate=0, num_classes=1000, memory_efficient=False):
            super(DenseNet, self).__init__()

            # First convolutional layer
            self.features = nn.Sequential(OrderedDict([
                ('conv0', nn.Conv2d(3, num_init_features, kernel_size=7, stride=2,
                                    padding=3, bias=False)),
                ('norm0', nn.BatchNorm2d(num_init_features)),
                ('relu0', nn.ReLU(inplace=True)),
                ('pool0', nn.MaxPool2d(kernel_size=3, stride=2, padding=1)),
            ]))

            # Build the DenseBlocks
            num_features = num_init_features
            for i, num_layers in enumerate(block_config):  # one iteration per DenseBlock (4 in total)
                block = _DenseBlock(
                    num_layers=num_layers,
                    num_input_features=num_features,
                    bn_size=bn_size,
                    growth_rate=growth_rate,
                    drop_rate=drop_rate,
                    memory_efficient=memory_efficient
                )
                self.features.add_module('denseblock%d' % (i + 1), block)
                num_features = num_features + num_layers * growth_rate
                if i != len(block_config) - 1:
                    # A TransitionLayer follows every DenseBlock except the last one
                    trans = _Transition(num_input_features=num_features,
                                        num_output_features=num_features // 2)
                    self.features.add_module('transition%d' % (i + 1), trans)
                    num_features = num_features // 2

            # Final batch norm
            self.features.add_module('norm5', nn.BatchNorm2d(num_features))

            # Linear layer (the classifier)
            self.classifier = nn.Linear(num_features, num_classes)

            # Official init from torch repo.
            for m in self.modules():
                if isinstance(m, nn.Conv2d):
                    nn.init.kaiming_normal_(m.weight)
                elif isinstance(m, nn.BatchNorm2d):
                    nn.init.constant_(m.weight, 1)
                    nn.init.constant_(m.bias, 0)
                elif isinstance(m, nn.Linear):
                    nn.init.constant_(m.bias, 0)

        def forward(self, x):
            features = self.features(x)
            out = F.relu(features, inplace=True)
            out = F.adaptive_avg_pool2d(out, (1, 1))
            out = torch.flatten(out, 1)
            out = self.classifier(out)
            return out

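Since DenseNet() builds its DenseBlocks by calling _DenseBlock(), let's look at the code of _DenseBlock():

_DenseBlock() uses the num_layers argument to decide how many DenseLayers to build; num_layers is one of the numbers in (6, 12, 24, 16) above. _DenseBlock() also sets the number of input channels of every DenseLayer it builds: num_input_features + i * growth_rate for the i-th layer.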

    class _DenseBlock(nn.Module):
        def __init__(self, num_layers, num_input_features, bn_size, growth_rate, drop_rate, memory_efficient=False):
            super(_DenseBlock, self).__init__()
            for i in range(num_layers):
                layer = _DenseLayer(
                    num_input_features + i * growth_rate,
                    growth_rate=growth_rate,
                    bn_size=bn_size,
                    drop_rate=drop_rate,
                    memory_efficient=memory_efficient,
                )
                self.add_module('denselayer%d' % (i + 1), layer)

        def forward(self, init_features):
            features = [init_features]
            for name, layer in self.named_children():
                new_features = layer(*features)
                features.append(new_features)
            return torch.cat(features, 1)
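The channel bookkeeping in _DenseBlock can be reproduced with plain arithmetic. This is a minimal sketch, not part of torchvision; dense_block_channels is a hypothetical helper, and the example values (6 layers, 64 input channels, growth rate 32) are those of the first block of DenseNet-121.

```python
def dense_block_channels(num_layers, num_input_features, growth_rate):
    """Return the input channels of each DenseLayer and the block's output channels."""
    # Layer i sees the block input plus the outputs of the i layers before it
    in_channels = [num_input_features + i * growth_rate for i in range(num_layers)]
    # torch.cat(features, 1) stacks the block input and all layer outputs
    out_channels = num_input_features + num_layers * growth_rate
    return in_channels, out_channels


in_ch, out_ch = dense_block_channels(num_layers=6, num_input_features=64, growth_rate=32)
print(in_ch)   # [64, 96, 128, 160, 192, 224]
print(out_ch)  # 256
```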

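Then comes the code of _DenseLayer():

As the code shows, each DenseLayer contains two convolutions, one 1x1 and one 3x3. The 1x1 convolution adjusts the channel dimension to bn_size * growth_rate, and the 3x3 convolution then outputs growth_rate channels. growth_rate depends on the DenseNet variant, while bn_size is usually fixed at 4.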

    class _DenseLayer(nn.Sequential):
        def __init__(self, num_input_features, growth_rate, bn_size, drop_rate, memory_efficient=False):
            super(_DenseLayer, self).__init__()
            self.add_module('norm1', nn.BatchNorm2d(num_input_features)),
            self.add_module('relu1', nn.ReLU(inplace=True)),
            self.add_module('conv1', nn.Conv2d(num_input_features, bn_size *
                                               growth_rate, kernel_size=1, stride=1,
                                               bias=False)),
            self.add_module('norm2', nn.BatchNorm2d(bn_size * growth_rate)),
            self.add_module('relu2', nn.ReLU(inplace=True)),
            self.add_module('conv2', nn.Conv2d(bn_size * growth_rate, growth_rate,
                                               kernel_size=3, stride=1, padding=1,
                                               bias=False)),
            self.drop_rate = drop_rate
            self.memory_efficient = memory_efficient

        def forward(self, *prev_features):
            bn_function = _bn_function_factory(self.norm1, self.relu1, self.conv1)
            if self.memory_efficient and any(prev_feature.requires_grad for prev_feature in prev_features):
                bottleneck_output = cp.checkpoint(bn_function, *prev_features)
            else:
                bottleneck_output = bn_function(*prev_features)
            new_features = self.conv2(self.relu2(self.norm2(bottleneck_output)))
            if self.drop_rate > 0:
                new_features = F.dropout(new_features, p=self.drop_rate,
                                         training=self.training)
            return new_features

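The following uses DenseNet-121 as an example:

From the source code we can see that DenseNet-121 is configured like this

* In the red box in the image above, 32 is growth_rate. It plays two roles:

1. Within a DenseBlock, the number of input channels of each successive DenseLayer grows by growth_rate (32 for densenet-121).

2. Each DenseLayer uses its convolutions to set its final number of output channels, which always equals growth_rate.

* (6, 12, 24, 16) in the red box describes the four DenseBlocks, i.e. how many DenseLayers each DenseBlock contains.

* The next parameter in the red box, 64, is num_init_features:

It is the number of channels of the feature map that comes out of the first convolutional layer.

Details are shown in the figure below:

In the figure below, the numbers after input and output are feature-map channel counts:

As the figure above shows, a TransitionLayer sits between every two DenseBlocks. It takes the concatenated outputs of all DenseLayers in the preceding block and reduces the dimensionality by halving the number of channels.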
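The channel counts along the whole network follow directly from the loop in DenseNet.__init__. The sketch below mirrors that loop with integers only; trace_channels is a hypothetical helper and the defaults are the DenseNet-121 settings quoted above.

```python
def trace_channels(block_config=(6, 12, 24, 16), growth_rate=32, num_init_features=64):
    """Follow num_features through the stem, the DenseBlocks and the transitions."""
    trace = [("conv0", num_init_features)]
    num_features = num_init_features
    for i, num_layers in enumerate(block_config):
        # Every DenseLayer appends growth_rate channels to the running feature map
        num_features += num_layers * growth_rate
        trace.append((f"denseblock{i + 1}", num_features))
        if i != len(block_config) - 1:
            # The transition halves the channels (no transition after the last block)
            num_features //= 2
            trace.append((f"transition{i + 1}", num_features))
    return trace


trace = trace_channels()
for name, channels in trace:
    print(f"{name:12s} -> {channels} channels")
```

For DenseNet-121 the sequence is 64, 256, 128, 512, 256, 1024, 512, 1024, so the classifier receives 1024 features.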

Let's look at the TransitionLayer code:

    class _Transition(nn.Sequential):
        def __init__(self, num_input_features, num_output_features):
            super(_Transition, self).__init__()
            self.add_module('norm', nn.BatchNorm2d(num_input_features))
            self.add_module('relu', nn.ReLU(inplace=True))
            self.add_module('conv', nn.Conv2d(num_input_features, num_output_features,
                                              kernel_size=1, stride=1, bias=False))
            self.add_module('pool', nn.AvgPool2d(kernel_size=2, stride=2))  # halves the spatial size

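As we can see, part of its job is dimensionality reduction (the 1x1 convolution halves the number of channels) and part is average pooling, which halves the feature-map size.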
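The spatial size can be traced in the same way as the channels. The sketch below assumes the standard output-size formula for convolution and pooling with default dilation and floor rounding, floor((n + 2p - k) / s) + 1; conv_out and trace_spatial are hypothetical helpers.

```python
def conv_out(size, kernel, stride, padding=0):
    """Output size of a conv/pool layer (default dilation, floor rounding)."""
    return (size + 2 * padding - kernel) // stride + 1


def trace_spatial(size, num_blocks=4):
    """Follow the feature-map height/width through a DenseNet's feature extractor."""
    sizes = []
    size = conv_out(size, kernel=7, stride=2, padding=3)  # conv0
    sizes.append(("conv0", size))
    size = conv_out(size, kernel=3, stride=2, padding=1)  # pool0
    sizes.append(("pool0", size))
    for i in range(1, num_blocks + 1):
        sizes.append((f"denseblock{i}", size))            # DenseBlocks keep the size
        if i != num_blocks:
            size = conv_out(size, kernel=2, stride=2)     # AvgPool2d in the transition
            sizes.append((f"transition{i}", size))
    return sizes


print(trace_spatial(224))  # ImageNet-sized input
print(trace_spatial(32))   # CIFAR-10-sized input
```

A 224x224 input ends at 7x7 after the last DenseBlock, while a 32x32 input ends at 1x1.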

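II. DenseNet and ResNet:

Figures from: https://zhuanlan.zhihu.com/p/37189203

First, ResNet: feature maps are fused by element-wise addition.

DenseNet instead fuses feature maps by concatenation, i.e. the feature maps to be fused are stacked along the channel dimension.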
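The difference between the two fusion styles is easiest to see on channel counts. The toy sketch below represents a feature map as a list of channels (each channel a flat list of values); fuse_add and fuse_concat are hypothetical helpers, not library functions.

```python
def fuse_add(a, b):
    """ResNet-style fusion: element-wise sum, channel count unchanged."""
    if len(a) != len(b):
        raise ValueError("addition needs the same number of channels")
    return [[x + y for x, y in zip(ca, cb)] for ca, cb in zip(a, b)]


def fuse_concat(a, b):
    """DenseNet-style fusion: stack the channels of b after those of a."""
    return a + b


a = [[1, 2], [3, 4]]      # 2 channels, 2 values each
b = [[10, 20], [30, 40]]  # 2 channels, 2 values each

added = fuse_add(a, b)
stacked = fuse_concat(a, b)
print(len(added), added)      # still 2 channels
print(len(stacked), stacked)  # 4 channels, originals kept intact
```

Addition mixes the two inputs into one set of channels, while concatenation keeps every input channel available unchanged to later layers.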

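1. DenseNet uses more GPU memory than ResNet. In a single forward pass, the feature maps a network produces largely determine its memory footprint. Some frameworks optimize this by automatically freeing the feature maps of earlier layers, but DenseNet has to reuse those earlier feature maps, so they cannot be freed, which leads to high memory usage.

2. Thanks to the dense connections, DenseNet improves gradient backpropagation and is easier to train. Every layer has a direct path to the final error signal, which amounts to implicit "deep supervision".

3. It has fewer parameters and is more computationally efficient, which is somewhat counterintuitive. DenseNet implements its shortcut connections by concatenating features, so features are reused, and with a small growth rate each layer contributes only a small number of new feature maps.

4. Because of feature reuse, the final classifier also has access to low-level features.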
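The parameter claim in point 3 can be checked by counting learnable parameters from the layer definitions shown earlier. This is a minimal sketch under the assumption that only convolution weights (no bias), BatchNorm weight and bias, and the linear classifier carry parameters; densenet_param_count is a hypothetical helper.

```python
def densenet_param_count(block_config=(6, 12, 24, 16), growth_rate=32,
                         num_init_features=64, bn_size=4, num_classes=1000):
    """Count learnable parameters of a DenseNet built like the code above."""
    def bn(c):                 # BatchNorm2d: one weight and one bias per channel
        return 2 * c

    def conv(c_in, c_out, k):  # bias-free k x k convolution
        return c_in * c_out * k * k

    total = conv(3, num_init_features, 7) + bn(num_init_features)  # conv0 + norm0
    c = num_init_features
    for i, num_layers in enumerate(block_config):
        for _ in range(num_layers):
            mid = bn_size * growth_rate
            total += bn(c) + conv(c, mid, 1)              # norm1 + conv1 (1x1)
            total += bn(mid) + conv(mid, growth_rate, 3)  # norm2 + conv2 (3x3)
            c += growth_rate
        if i != len(block_config) - 1:
            total += bn(c) + conv(c, c // 2, 1)           # transition norm + conv
            c //= 2
    total += bn(c)                                        # norm5
    total += c * num_classes + num_classes                # classifier weight + bias
    return total


print(densenet_param_count())  # 7978856
```

That is roughly 8 million parameters for DenseNet-121. For comparison, torchvision's ResNet-50 has about 25.6 million.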

Code:

mydensenet.py

    from torchvision.models.densenet import densenet121
    import torch.nn as nn
    import torch.nn.functional as F
    import numpy as np
    import torch
    from torchvision.datasets import CIFAR10
    import torchvision.transforms as transforms


    class Net(nn.Module):
        def __init__(self, model):
            super(Net, self).__init__()
            # Drop the model's last layer (the 1000-class classifier); what is left is model.features
            self.features = nn.Sequential(*list(model.children())[:-1])
            # Add a new fully connected layer; CIFAR-10 has 10 classes, so the classifier outputs 10
            self.Linear_layer = nn.Linear(1024, 10)

        def forward(self, x):
            x = self.features(x)
            # Same head as torchvision's DenseNet.forward: ReLU, then global average pooling
            x = F.relu(x)
            x = F.adaptive_avg_pool2d(x, (1, 1))
            x = x.view(x.size(0), -1)
            x = self.Linear_layer(x)
            return x


    def data_tf(x):
        # Manual alternative to the transform below (not used in this script)
        x = np.array(x, dtype='float32') / 255
        x = (x - 0.5) / 0.5
        x = x.transpose((2, 0, 1))  # move the channel axis first, as PyTorch expects
        x = torch.from_numpy(x)
        return x


    transform = transforms.Compose([transforms.ToTensor(),
                                    transforms.Normalize(mean=(0.5, 0.5, 0.5), std=(0.5, 0.5, 0.5)),
                                    ])


    def get_acc(output, label):
        total = output.shape[0]
        _, pred_label = output.max(1)
        num_correct = (pred_label == label).sum().item()
        return num_correct / total


    def train(net, train_data, valid_data, num_epochs, optimizer, criterion):
        device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')
        net = net.to(device)
        for epoch in range(num_epochs):
            train_loss = 0
            train_acc = 0
            net.train()
            for im, label in train_data:
                im = im.to(device)
                label = label.to(device)
                # forward
                output = net(im)
                loss = criterion(output, label)
                # backward
                optimizer.zero_grad()
                loss.backward()
                optimizer.step()
                train_loss += loss.item()
                train_acc += get_acc(output, label)
            if valid_data is not None:
                valid_loss = 0
                valid_acc = 0
                net.eval()
                with torch.no_grad():  # the whole validation pass runs without gradients
                    for im, label in valid_data:
                        im = im.to(device)
                        label = label.to(device)
                        output = net(im)
                        loss = criterion(output, label)
                        valid_loss += loss.item()
                        valid_acc += get_acc(output, label)
                epoch_str = (
                    "Epoch %d. Train Loss: %f, Train Acc: %f, Valid Loss: %f, Valid Acc: %f, "
                    % (epoch, train_loss / len(train_data),
                       train_acc / len(train_data), valid_loss / len(valid_data),
                       valid_acc / len(valid_data)))
            else:
                epoch_str = ("Epoch %d. Train Loss: %f, Train Acc: %f, " %
                             (epoch, train_loss / len(train_data),
                              train_acc / len(train_data)))
            print(epoch_str)


    if __name__ == '__main__':
        # Newer torchvision releases deprecate pretrained=True in favor of the weights argument
        densenet = densenet121(pretrained=True)
        net = Net(densenet)
        train_set = CIFAR10('./data', train=True, transform=transform, download=True)
        train_data = torch.utils.data.DataLoader(train_set, batch_size=64, shuffle=True)
        test_set = CIFAR10('./data', train=False, transform=transform, download=True)
        test_data = torch.utils.data.DataLoader(test_set, batch_size=128, shuffle=False)
        optimizer = torch.optim.SGD(net.parameters(), lr=1e-1)
        criterion = nn.CrossEntropyLoss()  # cross-entropy loss
        train(net, train_data, test_data, 50, optimizer, criterion)
        torch.save(net, 'densenet.pth')  # same file name that test.py loads

Training results:

test.py

import torch

import cv2

import torch.nn.functional as F

from mydensenet import Net ##重要,虽然显示灰色(即在次代码中没用到),但若没有引入这个模型代码,加载模型时会找不到模型

from torch.autograd import Variable

from torchvision import datasets, transforms

import numpy as np

classes = ('plane', 'car', 'bird', 'cat',

'deer', 'dog', 'frog', 'horse', 'ship', 'truck')

if __name__ == '__main__':

device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')

model = torch.load('densenet.pth') # 加载模型

model = model.to(device)

model.eval() # 把模型转为test模式

img = cv2.imread(r"C:\Users\Administrator\Desktop\pic\bird.jpg") # 读取要预测的图片

trans = transforms.Compose(

[

transforms.ToTensor(),

transforms.Normalize(mean=(0.5, 0.5, 0.5), std=(0.5, 0.5, 0.5))

])

img = trans(img)

img = img.to(device)

img = img.unsqueeze(0) # 图片扩展多一维,因为输入到保存的模型中是4维的[batch_size,通道,长,宽],而普通图片只有三维,[通道,长,宽]

# 扩展后,为[1,1,28,28]

output = model(img)

prob = F.softmax(output, dim=1) # prob是10个分类的概率

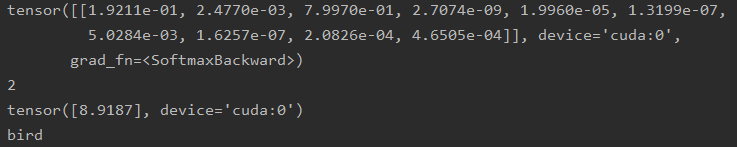

print(prob)

value, predicted = torch.max(output.data, 1)

print(predicted.item())

print(value)

pred_class = classes[predicted.item()]

print(pred_class)
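Note that test.py prints softmax probabilities but takes the maximum over the raw outputs (logits). The two agree on the predicted class because softmax preserves ordering, which the plain-Python sketch below illustrates. The logits are made-up numbers, not real model output.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    s = sum(exps)
    return [e / s for e in exps]


logits = [1.2, -0.3, 3.1, 0.5]  # hypothetical raw outputs for 4 classes
probs = softmax(logits)

pred_from_logits = max(range(len(logits)), key=logits.__getitem__)
pred_from_probs = max(range(len(probs)), key=probs.__getitem__)
print(probs, sum(probs))
print(pred_from_logits, pred_from_probs)  # same index either way
```

The value printed by test.py is therefore a logit, not a probability; to report a confidence, take the maximum of prob instead.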

Find a picture of a bird online, then resize it to 32x32 with an image editor:

Test result:
