import collections

import os

import pickle

import warnings

import hydra

import numpy as np

import torch

from nerf.dataset import get_nerf_datasets, trivial_collate

from nerf.nerf_renderer import RadianceFieldRenderer, visualize_nerf_outputs

from nerf.stats import Stats

from omegaconf import DictConfig

from visdom import Visdom

CONFIG_DIR = os.path.join(os.path.dirname(os.path.realpath(__file__)), "configs") ##获取configs的路径添加进configdir

if __name__ == "__main__":

main()

@hydra.main(config_path=CONFIG_DIR, config_name="lego") ##函数修饰器声明

def main(cfg: DictConfig):

# Set the relevant seeds for reproducibility.设置随机数种子

np.random.seed(cfg.seed)

torch.manual_seed(cfg.seed)

# Device on which to run.

if torch.cuda.is_available():

device = "cuda"

else:

warnings.warn(

"Please note that although executing on CPU is supported,"

+ "the training is unlikely to finish in reasonable time."

)

device = "cpu"

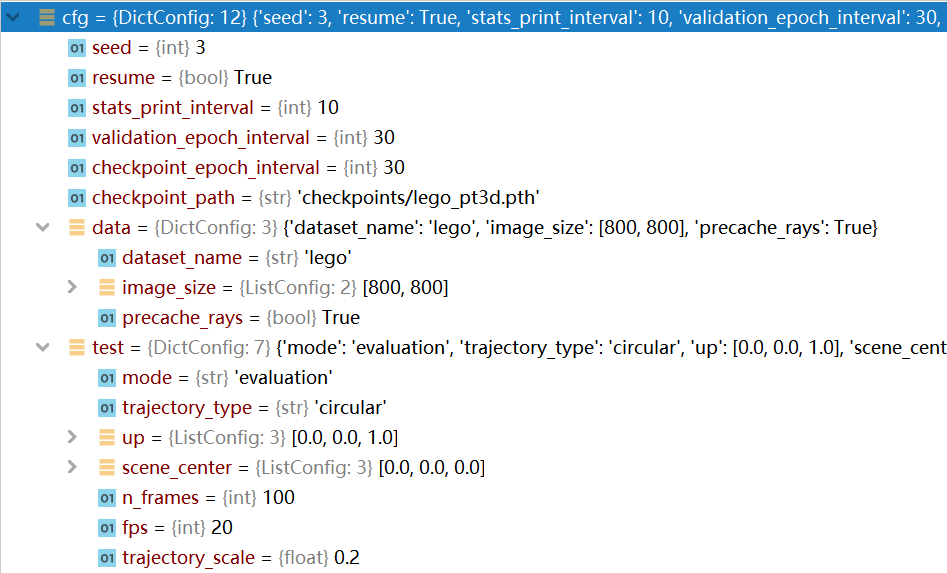

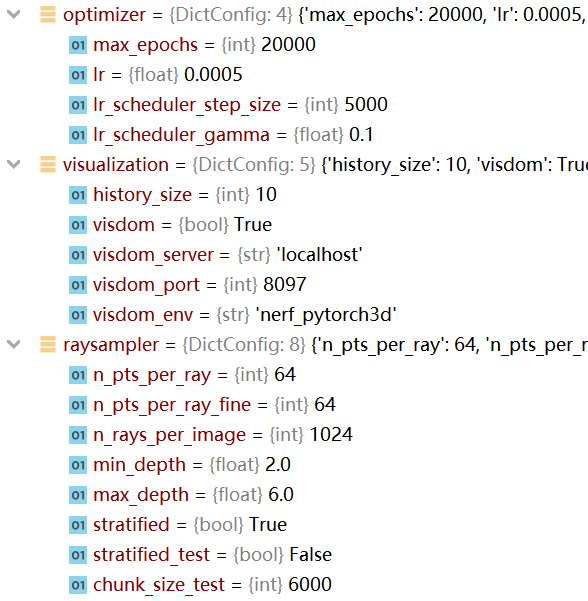

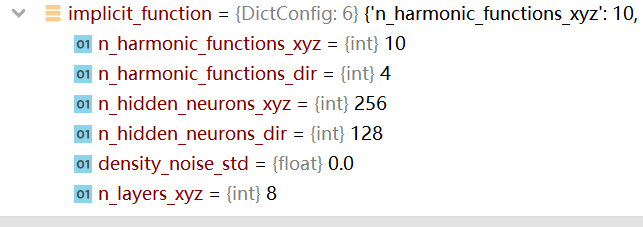

config值

{'seed': 3, 'resume': True, 'stats_print_interval': 10, 'validation_epoch_interval': 30, 'checkpoint_epoch_interval': 30, 'checkpoint_path': 'checkpoints/lego_pt3d.pth', 'data': {'dataset_name': 'lego', 'image_size': [800, 800], 'precache_rays': True}, 'test': {'mode': 'evaluation', 'trajectory_type': 'circular', 'up': [0.0, 0.0, 1.0], 'scene_center': [0.0, 0.0, 0.0], 'n_frames': 100, 'fps': 20, 'trajectory_scale': 0.2}, 'optimizer': {'max_epochs': 20000, 'lr': 0.0005, 'lr_scheduler_step_size': 5000, 'lr_scheduler_gamma': 0.1}, 'visualization': {'history_size': 10, 'visdom': True, 'visdom_server': 'localhost', 'visdom_port': 8097, 'visdom_env': 'nerf_pytorch3d'}, 'raysampler': {'n_pts_per_ray': 64, 'n_pts_per_ray_fine': 64, 'n_rays_per_image': 1024, 'min_depth': 2.0, 'max_depth': 6.0, 'stratified': True, 'stratified_test': False, 'chunk_size_test': 6000}, 'implicit_function': {'n_harmonic_functions_xyz': 10, 'n_harmonic_functions_dir': 4, 'n_hidden_neurons_xyz': 256, 'n_hidden_neurons_dir': 128, 'density_noise_std': 0.0, 'n_layers_xyz': 8}}

# Initialize the Radiance Field model.

model = RadianceFieldRenderer(

image_size=cfg.data.image_size,

n_pts_per_ray=cfg.raysampler.n_pts_per_ray, # 每一术光线采样的n个点

n_pts_per_ray_fine=cfg.raysampler.n_pts_per_ray, # 每一束光线采样的n个点 对于fine网络

n_rays_per_image=cfg.raysampler.n_rays_per_image, ##每个图像的n条光束

min_depth=cfg.raysampler.min_depth, # 最近的边界

max_depth=cfg.raysampler.max_depth, # 最远的边界

stratified=cfg.raysampler.stratified, # 分层

stratified_test=cfg.raysampler.stratified_test, # 分层测试

chunk_size_test=cfg.raysampler.chunk_size_test, # 块大小测试

n_harmonic_functions_xyz=cfg.implicit_function.n_harmonic_functions_xyz, # 坐标

n_harmonic_functions_dir=cfg.implicit_function.n_harmonic_functions_dir, # 方向

n_hidden_neurons_xyz=cfg.implicit_function.n_hidden_neurons_xyz, # xyz的隐藏神经元

n_hidden_neurons_dir=cfg.implicit_function.n_hidden_neurons_dir, # 方向的隐藏神经元

n_layers_xyz=cfg.implicit_function.n_layers_xyz, # 坐标n层

density_noise_std=cfg.implicit_function.density_noise_std, # 密度的噪音方差

visualization=cfg.visualization.visdom, # 可视化

)

# Move the model to the relevant device.

model.to(device)

class RadianceFieldRenderer(torch.nn.Module):

"""

Raybundler->class(orgin, direction, length, xys)

文件实现了 RadianceFieldRenderer类,集成torch.nn.Modulerendering的前向传播过程如下:

1.对于给定的输入 camera,rendering ray 通过“NeRFRaysampler” 产生。

2.在training mode下,射线是一组“n_rays_per_image”的图像网格的随机2D位置。

3.在evaluation mode下,光束对应的是整张图片的网格.光束被进一步分割为“chunk_size_test” 大小的块,以防止内存不足的错误.

渲染前向传递过程如下:

1) 对于给定的输入相机,渲染光线是用

`self._renderer['coarse']` 的 `NeRFRaysampler` 对象。

在训练模式下(`self.training==True`),光线是一组

图像网格的“n_rays_per_image”随机二维位置。

在评估模式下(`self.training==False`),光线对应

到完整的图像网格。光线进一步分裂为

`chunk_size_test`-大小的块,以防止内存不足错误。

2) 对于每个射线点,评估粗略的 `NeuralRadianceField` MLP。

指向此 MLP 的指针存储在 `self._implicit_function['coarse']` 中

3) 粗略的辐射场用`self._renderer['coarse']` 的 `EmissionAbsorptionNeRFRaymarcher` 对象。

4)粗raymarcher输出引导的概率分布用于精细渲染通道的重要性光线采样。这

`ProbabilisticRaysampler` 存储在 `self._renderer['fine'].raysampler` 中

实现重要性光线采样。

5) 类似于 2) `self._implicit_function['fine']` 中的精细 MLP

用占据和颜色标记射线点。

6) self._renderer['fine'].raymarcher` 生成最终的精细渲染。

7) 将精细渲染和粗糙渲染与地面实况输入图像进行比较与 PSNR 和 MSE 指标。

"""

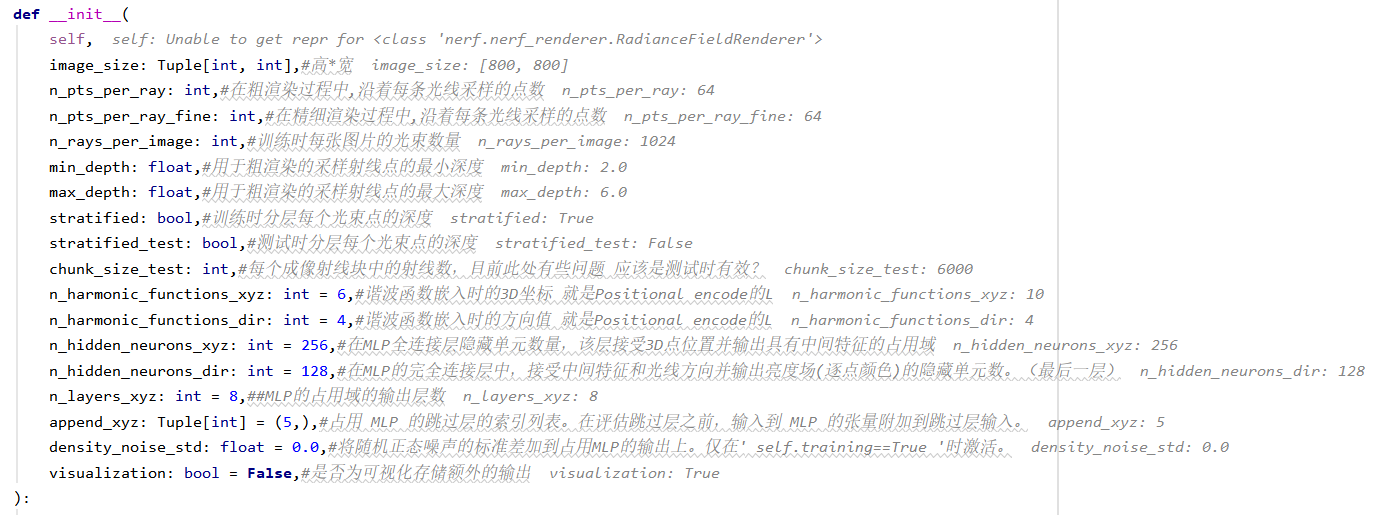

def __init__(

self,

image_size: Tuple[int, int],#高*宽

n_pts_per_ray: int,#在粗渲染过程中,沿着每条光线采样的点数

n_pts_per_ray_fine: int,#在精细渲染过程中,沿着每条光线采样的点数

n_rays_per_image: int,#训练时每张图片的光束数量

min_depth: float,#用于粗渲染的采样射线点的最小深度

max_depth: float,#用于粗渲染的采样射线点的最大深度

stratified: bool,#训练时分层每个光束点的深度

stratified_test: bool,#测试时分层每个光束点的深度

chunk_size_test: int,#每个成像射线块中的射线数,目前此处有些问题 应该是测试时有效?

n_harmonic_functions_xyz: int = 6,#谐波函数嵌入时的3D坐标 就是Positional encode的L

n_harmonic_functions_dir: int = 4,#谐波函数嵌入时的方向值 就是Positional encode的L

n_hidden_neurons_xyz: int = 256,#在MLP全连接层隐藏单元数量,该层接受3D点位置并输出具有中间特征的占用域

n_hidden_neurons_dir: int = 128,#在MLP的完全连接层中,接受中间特征和光线方向并输出亮度场(逐点颜色)的隐藏单元数。(最后一层)

n_layers_xyz: int = 8,##MLP的占用域的输出层数

append_xyz: Tuple[int] = (5,),#占用 MLP 的跳过层的索引列表。在评估跳过层之前,输入到 MLP 的张量附加到跳过层输入。

density_noise_std: float = 0.0,#将随机正态噪声的标准差加到占用MLP的输出上。仅在' self.training==True '时激活。

visualization: bool = False,#是否为可视化存储额外的输出

):

"""

Args:

image_size: The size of the rendered image (`[height, width]`).

n_pts_per_ray: The number of points sampled along each ray for the

coarse rendering pass.

n_pts_per_ray_fine: The number of points sampled along each ray for the

fine rendering pass.

n_rays_per_image: Number of Monte Carlo ray samples when training

(`self.training==True`).

min_depth: The minimum depth of a sampled ray-point for the coarse rendering.

max_depth: The maximum depth of a sampled ray-point for the coarse rendering.

stratified: If `True`, stratifies (=randomly offsets) the depths

of each ray point during training (`self.training==True`).

stratified_test: If `True`, stratifies (=randomly offsets) the depths

of each ray point during evaluation (`self.training==False`).

chunk_size_test: The number of rays in each chunk of image rays.

Active only when `self.training==True`.

n_harmonic_functions_xyz: The number of harmonic functions

used to form the harmonic embedding of 3D point locations.

n_harmonic_functions_dir: The number of harmonic functions

used to form the harmonic embedding of the ray directions.

n_hidden_neurons_xyz: The number of hidden units in the

fully connected layers of the MLP that accepts the 3D point

locations and outputs the occupancy field with the intermediate

features.

n_hidden_neurons_dir: The number of hidden units in the

fully connected layers of the MLP that accepts the intermediate

features and ray directions and outputs the radiance field

(per-point colors).

n_layers_xyz: The number of layers of the MLP that outputs the

occupancy field.

append_xyz: The list of indices of the skip layers of the occupancy MLP.

Prior to evaluating the skip layers, the tensor which was input to MLP

is appended to the skip layer input.

density_noise_std: The standard deviation of the random normal noise

added to the output of the occupancy MLP.

Active only when `self.training==True`.

visualization: whether to store extra output for visualization.

"""

super().__init__()

# The renderers and implicit functions are stored under the fine/coarse

# keys in ModuleDict PyTorch modules.

# 渲染器和隐式函数存储在fine/coarse下

# ModuleDict PyTorch 模块中的键

self._renderer = torch.nn.ModuleDict()##定义render(分coarse和fine) 两个key分别是coarse和fine

self._implicit_function = torch.nn.ModuleDict()##定义网络隐层函数(分coarse和fine), 结构和renderer一致,不过对应的是NeuralRadiencefunction

#self._implicit_function->n_harmonic_function_xyz_dir hidden_neurons_xyz_dir, n_layers, append_xyz

# Init the EA raymarcher used by both passes.

raymarcher = EmissionAbsorptionNeRFRaymarcher()##返回的是特征和权重

# Parse out image dimensions.

image_height, image_width = image_size#800 800

raymarcher 都是用 EmissionAbsorptionNeRFRaymarcher()

粗采样 均匀点采样 用 NeRFRaysampler

细采样 重要性采样 用 ProbabilisticRaysampler

for render_pass in ("coarse", "fine"):

if render_pass == "coarse":

# Initialize the coarse raysampler.

#正常的均匀点采样

raysampler = NeRFRaysampler(

n_pts_per_ray=n_pts_per_ray,##沿每条光线的采样点数

min_depth=min_depth,

max_depth=max_depth,

stratified=stratified,#训练时分层每个光束点的深度

stratified_test=stratified_test,

n_rays_per_image=n_rays_per_image,

image_height=image_height,

image_width=image_width,

)

elif render_pass == "fine":

#重要性采样

# Initialize the fine raysampler.

raysampler = ProbabilisticRaysampler(

n_pts_per_ray=n_pts_per_ray_fine,

stratified=stratified,

stratified_test=stratified_test,

)

else:

raise ValueError(f"No such rendering pass {render_pass}")

1、训练时 随机采样 。

2、测试时 采样整个图像网格。同时 采用 分块策略 可防止内存不足

3、对光线进行 预缓存 可加快 NeRF采样速度

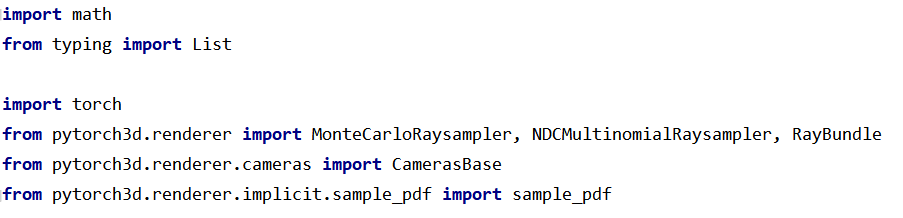

class NeRFRaysampler(torch.nn.Module):

"""

实现 NeRF 的光线采样器。

根据 `self.training` 标志,光线采样器要么采样一大块随机光线(`self.training==True`),或返回光线子集完整的图像网格(`self.training==False`)。

射线的分块允许有效地评估NeRF隐式表面函数,而不会遇到GPU内存错误。

此外,此光线采样器支持光线束的预缓存,对于一组输入相机(`self.precache_rays`),在训练之前预先缓存光线可以大大加快随后 训练 NeRF 迭代的射线采样步骤 的处理速度

"""

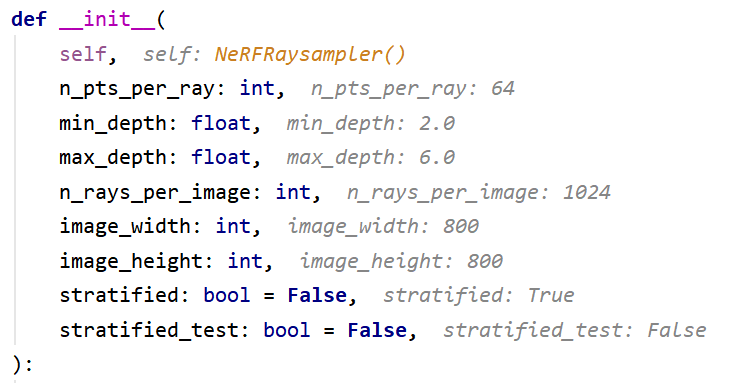

def __init__(

self,

n_pts_per_ray: int,

min_depth: float,

max_depth: float,

n_rays_per_image: int,

image_width: int,

image_height: int,

stratified: bool = False,

stratified_test: bool = False,

):

"""

Args:

n_pts_per_ray:沿每条射线采样的点数。

min_depth:射线点的最小深度。

max_depth:射线点的最大深度。

n_rays_per_image:训练时的蒙特卡洛射线样本数

(`self.training==True`)。

image_width:图像网格的水平尺寸。

image_height:图像网格的垂直尺寸。

stratified:如果“真”,分层(=随机偏移)深度

训练期间的每个射线点(`self.training==True`)。

stratified_test:如果为“真”,则分层(=随机偏移)深度

评估期间的每个射线点(`self.training==False`)。

"""

super().__init__()

self._stratified = stratified

self._stratified_test = stratified_test

# Initialize the grid ray sampler.初始化网格射线采样器

self._grid_raysampler = NDCMultinomialRaysampler(

image_width=image_width,

image_height=image_height,

n_pts_per_ray=n_pts_per_ray,

min_depth=min_depth,

max_depth=max_depth,

)

# Initialize the Monte Carlo ray sampler.

self._mc_raysampler = MonteCarloRaysampler(

min_x=-1.0,

max_x=1.0,

min_y=-1.0,

max_y=1.0,

n_rays_per_image=n_rays_per_image,

n_pts_per_ray=n_pts_per_ray,

min_depth=min_depth,

max_depth=max_depth,

)

# create empty ray cache 创建新的光线缓存

self._ray_cache = {}

初始化网格射线采样器

self._grid_raysampler = NDCMultinomialRaysampler

初始化Monte Carlo ray sampler.

self._mc_raysampler = MonteCarloRaysampler

NDCMultinomialRaysampler

MonteCarloRaysampler

见pytorch3D

ImplicitRenderer 见pytorch3D

# Initialize the fine/coarse renderer.初始化fine/coarse render

self._renderer[render_pass] = ImplicitRenderer(

raysampler=raysampler,

raymarcher=raymarcher,

)

# Instantiate the fine/coarse NeuralRadianceField module.网络实例化

self._implicit_function[render_pass] = NeuralRadianceField(

n_harmonic_functions_xyz=n_harmonic_functions_xyz,

n_harmonic_functions_dir=n_harmonic_functions_dir,

n_hidden_neurons_xyz=n_hidden_neurons_xyz,

n_hidden_neurons_dir=n_hidden_neurons_dir,

n_layers_xyz=n_layers_xyz,

append_xyz=append_xyz,

)

self._density_noise_std = density_noise_std

self._chunk_size_test = chunk_size_test

self._image_size = image_size

self.visualization = visualization

详细代码见下面implicit_function.py模块

HarmonicEmbedding 见pytorch3D

self.mlp_xyz = MLPWithInputSkips(##使用多层感知机

n_layers_xyz,

embedding_dim_xyz,

n_hidden_neurons_xyz,

embedding_dim_xyz,

n_hidden_neurons_xyz,

input_skips=append_xyz,

)

class MLPWithInputSkips

class MLPWithInputSkips(torch.nn.Module):

"""

实现神经辐射场的多层感知器架构。

因此,`MLPWithInputSkips` 是一个多层感知器,包括

具有 ReLU 激活的线性层序列。

Additionally, for a set of predefined layers `input_skips`, the forward pass

appends a skip tensor `z` to the output of the preceding layer.

此外,对于一组预定义层 `input_skips`,前向传递

在前一层的输出上附加一个跳过张量“z”。

Note that this follows the architecture described in the Supplementary

Material (Fig. 7) of [1].

References:

[1] Ben Mildenhall and Pratul P. Srinivasan and Matthew Tancik

and Jonathan T. Barron and Ravi Ramamoorthi and Ren Ng:

NeRF: Representing Scenes as Neural Radiance Fields for View

Synthesis, ECCV2020

"""

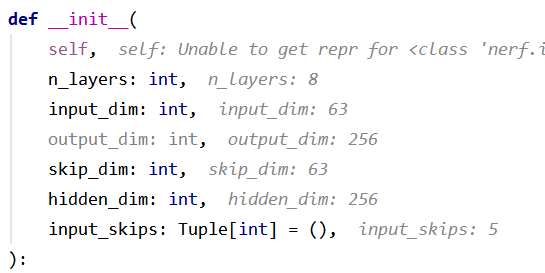

def __init__(

self,

n_layers: int,

input_dim: int,

output_dim: int,

skip_dim: int,

hidden_dim: int,

input_skips: Tuple[int] = (),

):

"""

Args:

n_layers: The number of linear layers of the MLP.

input_dim: The number of channels of the input tensor.

output_dim: The number of channels of the output.

skip_dim: The number of channels of the tensor `z` appended when

evaluating the skip layers.

hidden_dim: The number of hidden units of the MLP.

input_skips: The list of layer indices at which we append the skip

tensor `z`.

"""

super().__init__()

layers = []

for layeri in range(n_layers):

if layeri == 0:

dimin = input_dim

dimout = hidden_dim

elif layeri in input_skips:

dimin = hidden_dim + skip_dim

dimout = hidden_dim

else:

dimin = hidden_dim

dimout = hidden_dim

linear = torch.nn.Linear(dimin, dimout)

_xavier_init(linear)

layers.append(torch.nn.Sequential(linear, torch.nn.ReLU(True)))

self.mlp = torch.nn.ModuleList(layers)

self._input_skips = set(input_skips)

self.mlp_xyz的值

MLPWithInputSkips(

(mlp): ModuleList(

(0): Sequential(

(0): Linear(in_features=63, out_features=256, bias=True)

(1): ReLU(inplace=True)

)

(1): Sequential(

(0): Linear(in_features=256, out_features=256, bias=True)

(1): ReLU(inplace=True)

)

(2): Sequential(

(0): Linear(in_features=256, out_features=256, bias=True)

(1): ReLU(inplace=True)

)

(3): Sequential(

(0): Linear(in_features=256, out_features=256, bias=True)

(1): ReLU(inplace=True)

)

(4): Sequential(

(0): Linear(in_features=256, out_features=256, bias=True)

(1): ReLU(inplace=True)

)

(5): Sequential(

(0): Linear(in_features=319, out_features=256, bias=True)

(1): ReLU(inplace=True)

)

(6): Sequential(

(0): Linear(in_features=256, out_features=256, bias=True)

(1): ReLU(inplace=True)

)

(7): Sequential(

(0): Linear(in_features=256, out_features=256, bias=True)

(1): ReLU(inplace=True)

)

)

)

self.intermediate_linear = torch.nn.Linear(

n_hidden_neurons_xyz, n_hidden_neurons_xyz

)

_xavier_init(self.intermediate_linear)

self.density_layer = torch.nn.Linear(n_hidden_neurons_xyz, 1)#生成一个密度

_xavier_init(self.density_layer)

# Zero the bias of the density layer to avoid

# a completely transparent initialization.

# 将密度层的偏差归零以避免

# 一个完全透明的初始化。

self.density_layer.bias.data[:] = 0.0 # fixme: Sometimes this is not enough

self.color_layer = torch.nn.Sequential(

LinearWithRepeat(#输入是经过mlp全连接层后的中间特征和位置编码过后的direction

n_hidden_neurons_xyz + embedding_dim_dir, n_hidden_neurons_dir

),#输出是光线上每个点的特征以及该光线方向编码特征

torch.nn.ReLU(True),

torch.nn.Linear(n_hidden_neurons_dir, 3),

torch.nn.Sigmoid(),

)##获得一个rays_rgb图像

self.use_multiple_streams = use_multiple_streams

NeuralRadianceField(

(harmonic_embedding_xyz): HarmonicEmbedding()

(harmonic_embedding_dir): HarmonicEmbedding()

(mlp_xyz): MLPWithInputSkips(

(mlp): ModuleList(

(0): Sequential(

(0): Linear(in_features=63, out_features=256, bias=True)

(1): ReLU(inplace=True)

)

(1): Sequential(

(0): Linear(in_features=256, out_features=256, bias=True)

(1): ReLU(inplace=True)

)

(2): Sequential(

(0): Linear(in_features=256, out_features=256, bias=True)

(1): ReLU(inplace=True)

)

(3): Sequential(

(0): Linear(in_features=256, out_features=256, bias=True)

(1): ReLU(inplace=True)

)

(4): Sequential(

(0): Linear(in_features=256, out_features=256, bias=True)

(1): ReLU(inplace=True)

)

(5): Sequential(

(0): Linear(in_features=319, out_features=256, bias=True)

(1): ReLU(inplace=True)

)

(6): Sequential(

(0): Linear(in_features=256, out_features=256, bias=True)

(1): ReLU(inplace=True)

)

(7): Sequential(

(0): Linear(in_features=256, out_features=256, bias=True)

(1): ReLU(inplace=True)

)

)

)

(intermediate_linear): Linear(in_features=256, out_features=256, bias=True)

(density_layer): Linear(in_features=256, out_features=1, bias=True)

(color_layer): Sequential(

(0): LinearWithRepeat()

(1): ReLU(inplace=True)

(2): Linear(in_features=128, out_features=3, bias=True)

(3): Sigmoid()

)

)

elif render_pass == "fine":

#重要性采样

# Initialize the fine raysampler.

raysampler = ProbabilisticRaysampler(

n_pts_per_ray=n_pts_per_ray_fine,

stratified=stratified,

stratified_test=stratified_test,

)

RadianceFieldRenderer(

(_renderer): ModuleDict(

(coarse): ImplicitRenderer(

(raysampler): NeRFRaysampler(

(_grid_raysampler): NDCMultinomialRaysampler()

(_mc_raysampler): MonteCarloRaysampler()

)

(raymarcher): EmissionAbsorptionNeRFRaymarcher()

)

(fine): ImplicitRenderer(

(raysampler): ProbabilisticRaysampler()

(raymarcher): EmissionAbsorptionNeRFRaymarcher()

)

)

(_implicit_function): ModuleDict(

(coarse): NeuralRadianceField(

(harmonic_embedding_xyz): HarmonicEmbedding()

(harmonic_embedding_dir): HarmonicEmbedding()

(mlp_xyz): MLPWithInputSkips(

(mlp): ModuleList(

(0): Sequential(

(0): Linear(in_features=63, out_features=256, bias=True)

(1): ReLU(inplace=True)

)

(1): Sequential(

(0): Linear(in_features=256, out_features=256, bias=True)

(1): ReLU(inplace=True)

)

(2): Sequential(

(0): Linear(in_features=256, out_features=256, bias=True)

(1): ReLU(inplace=True)

)

(3): Sequential(

(0): Linear(in_features=256, out_features=256, bias=True)

(1): ReLU(inplace=True)

)

(4): Sequential(

(0): Linear(in_features=256, out_features=256, bias=True)

(1): ReLU(inplace=True)

)

(5): Sequential(

(0): Linear(in_features=319, out_features=256, bias=True)

(1): ReLU(inplace=True)

)

(6): Sequential(

(0): Linear(in_features=256, out_features=256, bias=True)

(1): ReLU(inplace=True)

)

(7): Sequential(

(0): Linear(in_features=256, out_features=256, bias=True)

(1): ReLU(inplace=True)

)

)

)

(intermediate_linear): Linear(in_features=256, out_features=256, bias=True)

(density_layer): Linear(in_features=256, out_features=1, bias=True)

(color_layer): Sequential(

(0): LinearWithRepeat()

(1): ReLU(inplace=True)

(2): Linear(in_features=128, out_features=3, bias=True)

(3): Sigmoid()

)

)

(fine): NeuralRadianceField(

(harmonic_embedding_xyz): HarmonicEmbedding()

(harmonic_embedding_dir): HarmonicEmbedding()

(mlp_xyz): MLPWithInputSkips(

(mlp): ModuleList(

(0): Sequential(

(0): Linear(in_features=63, out_features=256, bias=True)

(1): ReLU(inplace=True)

)

(1): Sequential(

(0): Linear(in_features=256, out_features=256, bias=True)

(1): ReLU(inplace=True)

)

(2): Sequential(

(0): Linear(in_features=256, out_features=256, bias=True)

(1): ReLU(inplace=True)

)

(3): Sequential(

(0): Linear(in_features=256, out_features=256, bias=True)

(1): ReLU(inplace=True)

)

(4): Sequential(

(0): Linear(in_features=256, out_features=256, bias=True)

(1): ReLU(inplace=True)

)

(5): Sequential(

(0): Linear(in_features=319, out_features=256, bias=True)

(1): ReLU(inplace=True)

)

(6): Sequential(

(0): Linear(in_features=256, out_features=256, bias=True)

(1): ReLU(inplace=True)

)

(7): Sequential(

(0): Linear(in_features=256, out_features=256, bias=True)

(1): ReLU(inplace=True)

)

)

)

(intermediate_linear): Linear(in_features=256, out_features=256, bias=True)

(density_layer): Linear(in_features=256, out_features=1, bias=True)

(color_layer): Sequential(

(0): LinearWithRepeat()

(1): ReLU(inplace=True)

(2): Linear(in_features=128, out_features=3, bias=True)

(3): Sigmoid()

)

)

)

)

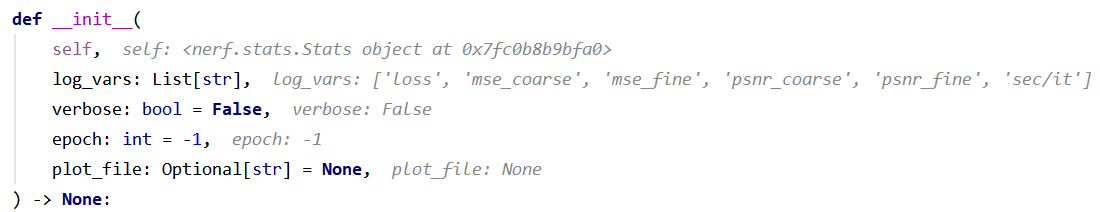

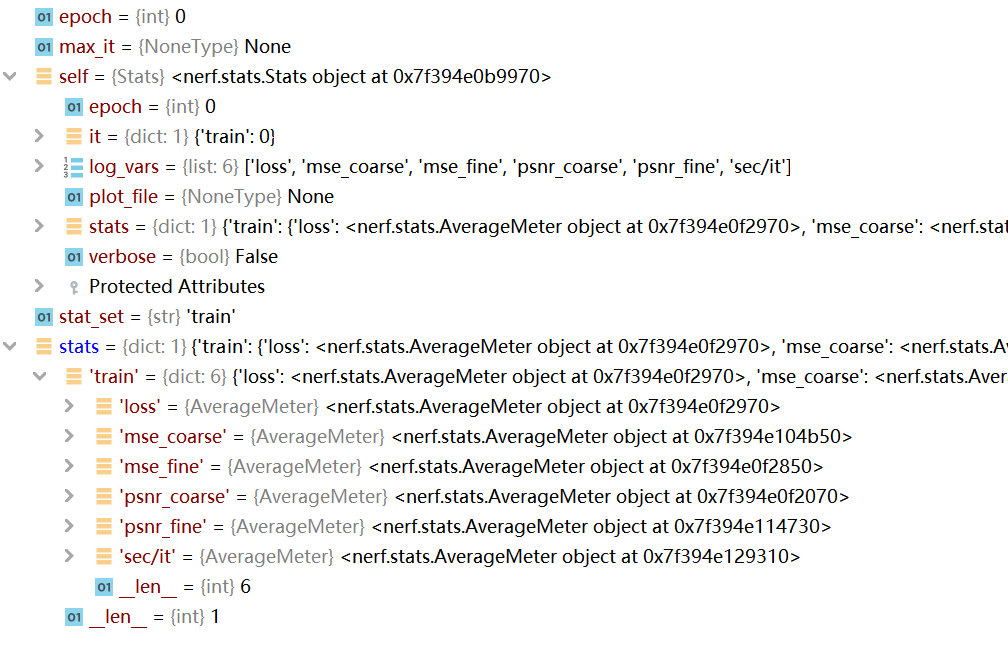

# Init the stats object.

if stats is None:

stats = Stats(

["loss", "mse_coarse", "mse_fine", "psnr_coarse", "psnr_fine", "sec/it"],

)

class Stats:

"""

统计日志对象,用于收集在PyTorch中训练深度网络的统计数据。

Example:

```

# 初始化统计数据结构,用于记录统计数据“objective”和“top1e”.

stats = Stats( ('objective','top1e') )

network = init_net() # init a pytorch module (=neural network)

dataloader = init_dataloader() # init a dataloader

for epoch in range(10):

# start of epoch -> call new_epoch

stats.new_epoch()

# Iterate over batches.

for batch in dataloader:

# 运行模型并将其保存到输出变量的“output”中。

output = network(batch)

# stats.update() 自动解析 the 'objective' and 'top1e'

# from the "output" dict 并把这个存储在数据库里。

stats.update(output)

stats.print() # 打印给定epoch的平均值

# Stores the training plots into '/tmp/epoch_stats.pdf'

# 并绘制成运行在localhost上的Visdom服务器(如果运行的话)。

stats.plot_stats(plot_file='/tmp/epoch_stats.pdf')

```

"""

def __init__(

self,

log_vars: List[str],

verbose: bool = False,

epoch: int = -1,

plot_file: Optional[str] = None,

) -> None:

"""

Args:

log_vars: 要记录的变量名列表。

verbose: 打印状态信息。

epoch: The initial epoch of the object.

plot_file: The path to the file that will hold the training plots.

"""

self.verbose = verbose

self.log_vars = log_vars

self.plot_file = plot_file

self.hard_reset(epoch=epoch)

self.hard_reset(epoch=epoch)

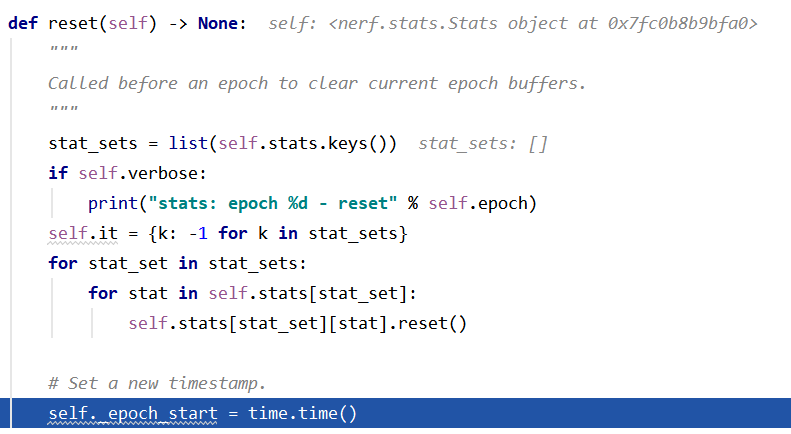

def hard_reset(self, epoch: int = -1) -> None:

"""

删除所有记录的数据。

"""

self._epoch_start = None

self.epoch = epoch

if self.verbose:

print("stats: epoch %d - hard reset" % self.epoch)

self.stats = {}

self.reset()

self.reset()

def reset(self) -> None:

"""

在一个epoch 之前调用以清除当前的epoch缓冲区。

"""

stat_sets = list(self.stats.keys())

if self.verbose:

print("stats: epoch %d - reset" % self.epoch)

self.it = {k: -1 for k in stat_sets}

for stat_set in stat_sets:

for stat in self.stats[stat_set]:

self.stats[stat_set][stat].reset()

# 设置一个新的时间戳。

self._epoch_start = time.time()

learning rate: current_lr = base_lr * gamma ** (epoch / step_size)

# 学习速率调度器设置.

# 在原始代码之后, we use exponential decay of the#指数衰减

# learning rate: current_lr = base_lr * gamma ** (epoch / step_size)

def lr_lambda(epoch): ##定义学习率指数衰减

return cfg.optimizer.lr_scheduler_gamma ** (

epoch / cfg.optimizer.lr_scheduler_step_size

)

# The learning rate scheduling is implemented with LambdaLR PyTorch scheduler.

# 利用学习率调度器实现lr——lambda的指数衰减学习率

lr_scheduler = torch.optim.lr_scheduler.LambdaLR(

optimizer, lr_lambda, last_epoch=start_epoch - 1, verbose=False

)

# 初始化缓存,以存储可视化所需的变量

visuals_cache = collections.deque(maxlen=cfg.visualization.history_size)

# Init the visualization visdom env.

if cfg.visualization.visdom:

viz = Visdom(

server=cfg.visualization.visdom_server,

port=cfg.visualization.visdom_port,

use_incoming_socket=False,

)

else:

viz = None

# Load the training/validation data.

train_dataset, val_dataset, _ = get_nerf_datasets(

dataset_name=cfg.data.dataset_name,

image_size=cfg.data.image_size, # 加载初始化中的训练集和验证集

)

##返回图像, 相机参数, 相机编号3个组成的数据结构

def get_nerf_datasets(

dataset_name: str, # 'lego | fern' 给定具体的场景

image_size: Tuple[int, int], #图像的尺寸(height,width)

data_root: str = DEFAULT_DATA_ROOT, #数据的网络连接

autodownload: bool = True, #根据网络连接对数据直接进行下载

) -> Tuple[Dataset, Dataset, Dataset]: ##返回训练集,验证集,测试集

"""

获取使用“dataset_name”参数指定的数据集的training and validation DataSet对象

Args:

dataset_name: 要加载的数据集的名称。

image_size: 表示加载的数据集图像大小的元组(高度、宽度)。

data_root: 存储数据的根文件夹。

autodownload: 自动下载数据集文件,以防它们丢失。

Returns:

train_dataset: The training dataset object.

val_dataset: The validation dataset object.

test_dataset: The testing dataset object.

"""

if dataset_name not in ALL_DATASETS:

raise ValueError(f"'{dataset_name}'' does not refer to a known dataset.")

print(f"Loading dataset {dataset_name}, image size={str(image_size)} ...")

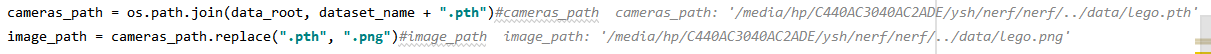

cameras_path = os.path.join(data_root, dataset_name + ".pth")#cameras_path

image_path = cameras_path.replace(".pth", ".png")#image_path

if autodownload and any(not os.path.isfile(p) for p in (cameras_path, image_path)):

# Automatically download the data files if missing.自动下载缺失的数据

download_data((dataset_name,), data_root=data_root)

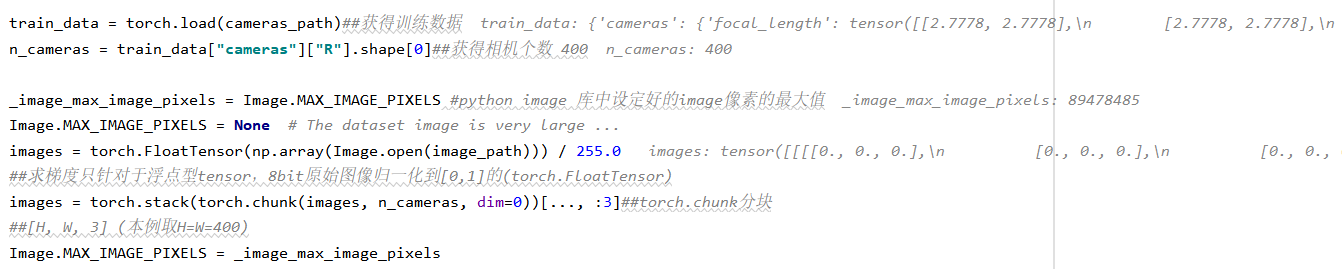

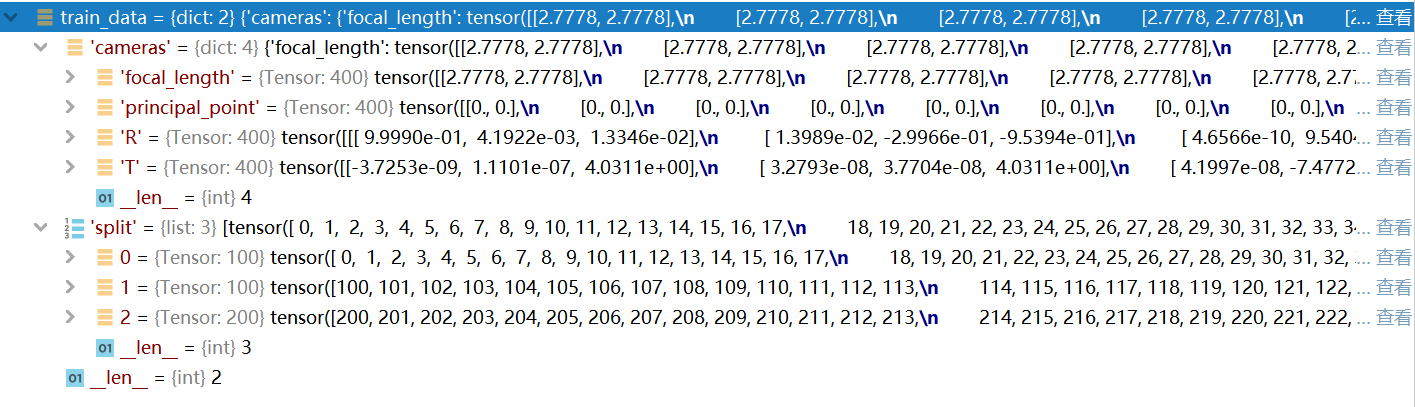

train_data = torch.load(cameras_path)##获得训练数据

n_cameras = train_data["cameras"]["R"].shape[0]##获得相机个数 400

_image_max_image_pixels = Image.MAX_IMAGE_PIXELS #python image 库中设定好的image像素的最大值

Image.MAX_IMAGE_PIXELS = None # The dataset image is very large ...

images = torch.FloatTensor(np.array(Image.open(image_path))) / 255.0

##求梯度只针对于浮点型tensor,8bit原始图像归一化到[0,1]的(torch.FloatTensor)

images = torch.stack(torch.chunk(images, n_cameras, dim=0))[..., :3]##torch.chunk分块

##[H, W, 3] (本例取H=W=400)

Image.MAX_IMAGE_PIXELS = _image_max_image_pixels

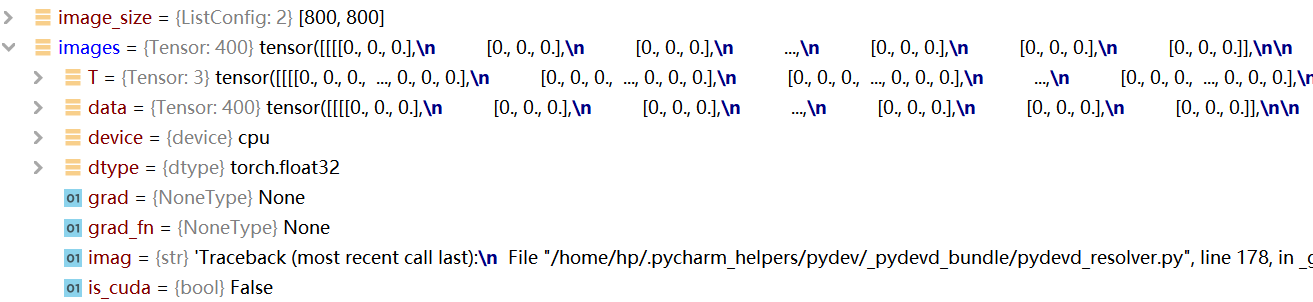

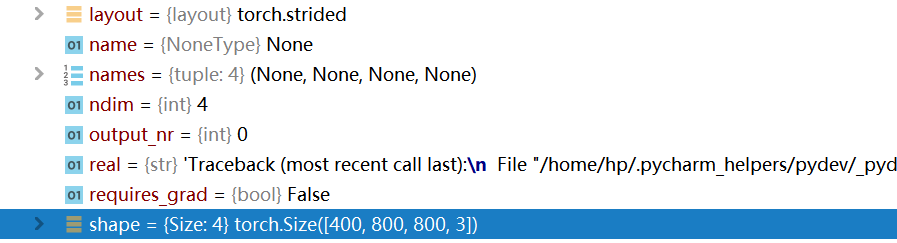

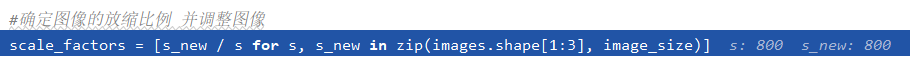

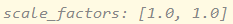

#确定图像的放缩比例 并调整图像

scale_factors = [s_new / s for s, s_new in zip(images.shape[1:3], image_size)]

if abs(scale_factors[0] - scale_factors[1]) > 1e-3:

raise ValueError(

"Non-isotropic scaling is not allowed. Consider changing the 'image_size' argument."

)

scale_factor = sum(scale_factors) * 0.5

if scale_factor != 1.0:

print(f"Rescaling dataset (factor={scale_factor})")

images = torch.nn.functional.interpolate(

images.permute(0, 3, 1, 2),

size=tuple(image_size),

mode="bilinear",

).permute(0, 2, 3, 1)##通过插值进行缩放

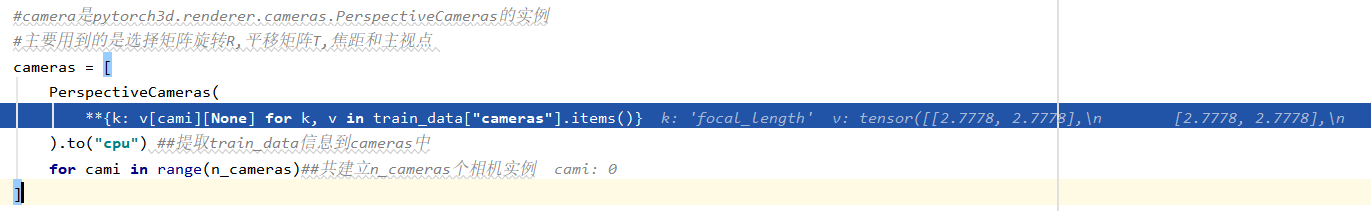

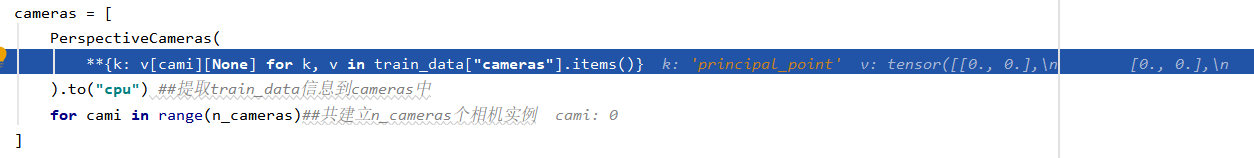

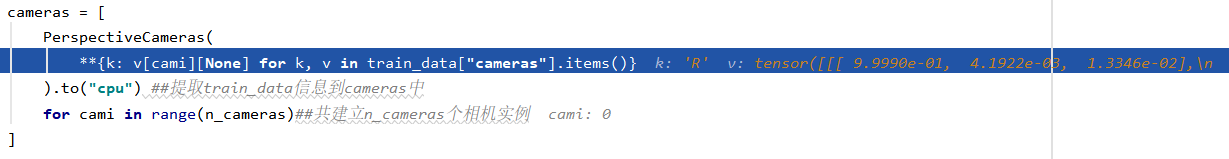

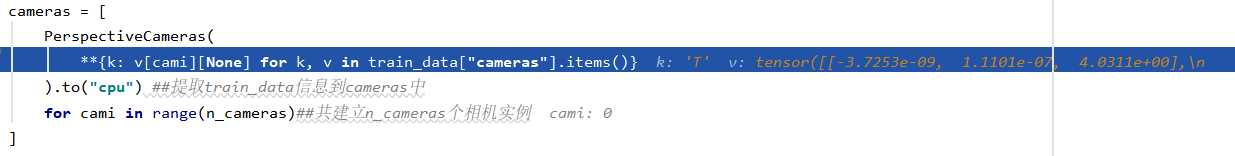

#camera是pytorch3d.renderer.cameras.PerspectiveCameras的实例

#主要用到的是旋转矩阵R,平移矩阵T,焦距和主视点

cameras = [

PerspectiveCameras(

**{k: v[cami][None] for k, v in train_data["cameras"].items()}

).to("cpu") ##提取train_data信息到cameras中

for cami in range(n_cameras)##共建立n_cameras个相机实例

]

##将train_data划分为train, val, test

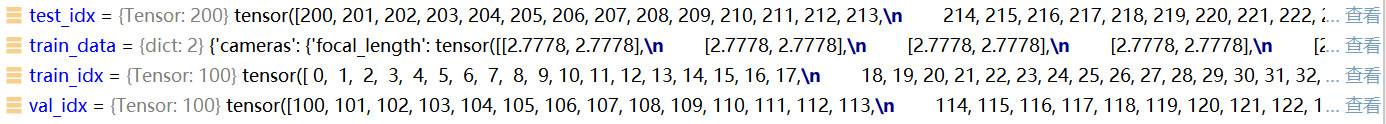

train_idx, val_idx, test_idx = train_data["split"]

train_dataset, val_dataset, test_dataset = [

ListDataset(

[

{"image": images[i], "camera": cameras[i], "camera_idx": int(i)}

for i in idx

]

)

for idx in [train_idx, val_idx, test_idx]

]

return train_dataset, val_dataset, test_dataset

class ListDataset(Dataset):

"""

由entries(条目)列表组成的简单数据集。

"""

def __init__(self, entries: List) -> None:

"""

Args:

entries: The list of dataset entries.

"""

self._entries = entries

def __len__(

self,

) -> int:

return len(self._entries)

def __getitem__(self, index):

return self._entries[index] ##直接获取该索引对应的列表内容

train_data

images = torch.stack(torch.chunk(images, n_cameras, dim=0))[…, :3]##torch.chunk分块

scale_factors

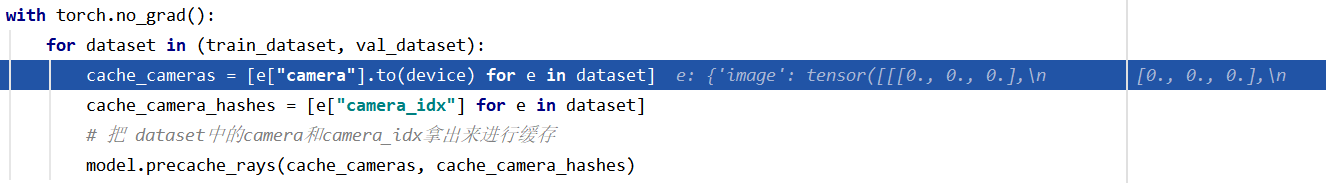

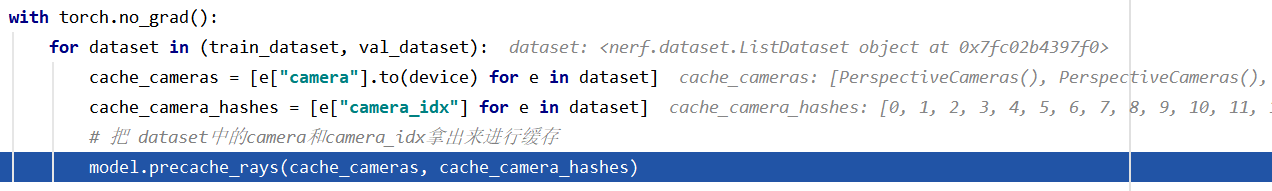

if cfg.data.precache_rays:

# Precache the projection rays.预缓存投影光线

model.eval() # 不启用 batchnorm and dropout

with torch.no_grad():

for dataset in (train_dataset, val_dataset):

cache_cameras = [e["camera"].to(device) for e in dataset]

cache_camera_hashes = [e["camera_idx"] for e in dataset]

# 把 dataset中的camera和camera_idx拿出来进行缓存

model.precache_rays(cache_cameras, cache_camera_hashes)

model.precache_rays(cache_cameras, cache_camera_hashes)

def precache_rays(

self,

cache_cameras: List[CamerasBase], #precache ray的n个camera的列表

cache_camera_hashes: List[str], #每个相机独特标识符的列表

):

"""

预缓存从相机“cache_cameras”列表中发出的光线,其中每个相机都用相应的哈希唯一标识来自`cache_camera_hashes`。

缓存的光线被移动到 cpu 并存储在 `self._renderer['coarse']._ray_cache`。

缓存具有相同哈希的两个相机时引发 `ValueError`。

参数:

cache_cameras:预先缓存了光线的“N”个摄像机的列表。

cache_camera_hashes:每个唯一标识符的“N”个列表

"""

self._renderer["coarse"].raysampler.precache_rays(##预缓存光线

cache_cameras,

cache_camera_hashes,

)

train_dataloader = torch.utils.data.DataLoader(

train_dataset,

batch_size=1,

shuffle=True,

num_workers=0,

collate_fn=trivial_collate,

)

# 验证数据器只是一个无休止的随机样本流。

val_dataloader = torch.utils.data.DataLoader(

val_dataset,

batch_size=1,

num_workers=0,

collate_fn=trivial_collate,

sampler=torch.utils.data.RandomSampler(

val_dataset,

replacement=True,

num_samples=cfg.optimizer.max_epochs,

),

)

# 接下来就是正常的训练过程

# Set the model to the training mode.

model.train()

# Run the main training loop.

for epoch in range(start_epoch, cfg.optimizer.max_epochs):

stats.new_epoch() # Init a new epoch.建立一个新的epoch训练

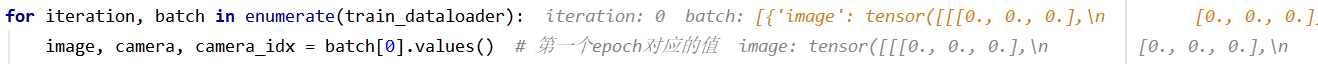

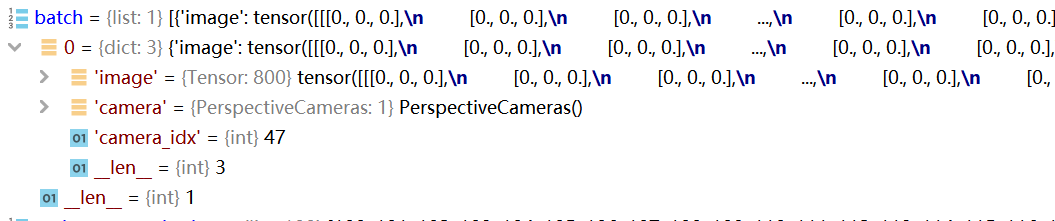

for iteration, batch in enumerate(train_dataloader):

image, camera, camera_idx = batch[0].values() # 第一个epoch对应的值

image = image.to(device)

camera = camera.to(device)

optimizer.zero_grad()

执行RadianceFieldRenderer模型的forword文件

代码在上面

# Run the forward pass of the model.

nerf_out, metrics = model(

camera_idx if cfg.data.precache_rays else None,

camera,

image,

)

RadianceFieldRenderer(

(_renderer): ModuleDict(

(coarse): ImplicitRenderer(

(raysampler): NeRFRaysampler(

(_grid_raysampler): NDCMultinomialRaysampler()

(_mc_raysampler): MonteCarloRaysampler()

)

(raymarcher): EmissionAbsorptionNeRFRaymarcher()

)

(fine): ImplicitRenderer(

(raysampler): ProbabilisticRaysampler()

(raymarcher): EmissionAbsorptionNeRFRaymarcher()

)

)

(_implicit_function): ModuleDict(

(coarse): NeuralRadianceField(

(harmonic_embedding_xyz): HarmonicEmbedding()

(harmonic_embedding_dir): HarmonicEmbedding()

(mlp_xyz): MLPWithInputSkips(

(mlp): ModuleList(

(0): Sequential(

(0): Linear(in_features=63, out_features=256, bias=True)

(1): ReLU(inplace=True)

)

(1): Sequential(

(0): Linear(in_features=256, out_features=256, bias=True)

(1): ReLU(inplace=True)

)

(2): Sequential(

(0): Linear(in_features=256, out_features=256, bias=True)

(1): ReLU(inplace=True)

)

(3): Sequential(

(0): Linear(in_features=256, out_features=256, bias=True)

(1): ReLU(inplace=True)

)

(4): Sequential(

(0): Linear(in_features=256, out_features=256, bias=True)

(1): ReLU(inplace=True)

)

(5): Sequential(

(0): Linear(in_features=319, out_features=256, bias=True)

(1): ReLU(inplace=True)

)

(6): Sequential(

(0): Linear(in_features=256, out_features=256, bias=True)

(1): ReLU(inplace=True)

)

(7): Sequential(

(0): Linear(in_features=256, out_features=256, bias=True)

(1): ReLU(inplace=True)

)

)

)

(intermediate_linear): Linear(in_features=256, out_features=256, bias=True)

(density_layer): Linear(in_features=256, out_features=1, bias=True)

(color_layer): Sequential(

(0): LinearWithRepeat()

(1): ReLU(inplace=True)

(2): Linear(in_features=128, out_features=3, bias=True)

(3): Sigmoid()

)

)

(fine): NeuralRadianceField(

(harmonic_embedding_xyz): HarmonicEmbedding()

(harmonic_embedding_dir): HarmonicEmbedding()

(mlp_xyz): MLPWithInputSkips(

(mlp): ModuleList(

(0): Sequential(

(0): Linear(in_features=63, out_features=256, bias=True)

(1): ReLU(inplace=True)

)

(1): Sequential(

(0): Linear(in_features=256, out_features=256, bias=True)

(1): ReLU(inplace=True)

)

(2): Sequential(

(0): Linear(in_features=256, out_features=256, bias=True)

(1): ReLU(inplace=True)

)

(3): Sequential(

(0): Linear(in_features=256, out_features=256, bias=True)

(1): ReLU(inplace=True)

)

(4): Sequential(

(0): Linear(in_features=256, out_features=256, bias=True)

(1): ReLU(inplace=True)

)

(5): Sequential(

(0): Linear(in_features=319, out_features=256, bias=True)

(1): ReLU(inplace=True)

)

(6): Sequential(

(0): Linear(in_features=256, out_features=256, bias=True)

(1): ReLU(inplace=True)

)

(7): Sequential(

(0): Linear(in_features=256, out_features=256, bias=True)

(1): ReLU(inplace=True)

)

)

)

(intermediate_linear): Linear(in_features=256, out_features=256, bias=True)

(density_layer): Linear(in_features=256, out_features=1, bias=True)

(color_layer): Sequential(

(0): LinearWithRepeat()

(1): ReLU(inplace=True)

(2): Linear(in_features=128, out_features=3, bias=True)

(3): Sigmoid()

)

)

)

)

coarse

fine

# The loss is a sum of coarse and fine MSEs metrics指标

loss = metrics["mse_coarse"] + metrics["mse_fine"]

# Take the training step.

loss.backward()

optimizer.step()

loss:

tensor(0.2945, device='cuda:0', grad_fn=<AddBackward0>)

# Update stats with the current metrics.

stats.update(

{"loss": float(loss), **metrics},

stat_set="train",

)

if iteration % cfg.stats_print_interval == 0:

stats.print(stat_set="train") ##每隔10print出来一次

stats

{'train':

{'loss': <nerf.stats.AverageMeter object at 0x7f394e0f2970>,

'mse_coarse': <nerf.stats.AverageMeter object at 0x7f394e104b50>,

'mse_fine': <nerf.stats.AverageMeter object at 0x7f394e0f2850>,

'psnr_coarse': <nerf.stats.AverageMeter object at 0x7f394e0f2070>,

'psnr_fine': <nerf.stats.AverageMeter object at 0x7f394e114730>,

'sec/it': <nerf.stats.AverageMeter object at 0x7f394e129310>}

}

# Update the visualization cache.

if viz is not None: ##可视化更新

visuals_cache.append(

{

"camera": camera.cpu(),

"camera_idx": camera_idx,

"image": image.cpu().detach(), ##detach不需要梯度

"rgb_fine": nerf_out["rgb_fine"].cpu().detach(),

"rgb_coarse": nerf_out["rgb_coarse"].cpu().detach(),

"rgb_gt": nerf_out["rgb_gt"].cpu().detach(),

"coarse_ray_bundle": nerf_out["coarse_ray_bundle"],

}

)

# Adjust the learning rate.

lr_scheduler.step()

# Validation

if epoch % cfg.validation_epoch_interval == 0 and epoch > 0:

# Sample a validation camera/image.

val_batch = next(val_dataloader.__iter__())

val_image, val_camera, camera_idx = val_batch[0].values()

val_image = val_image.to(device)

val_camera = val_camera.to(device)

# 激活模型的val模式(让我们完成一个完整的渲染传递)。

model.eval()

with torch.no_grad():

val_nerf_out, val_metrics = model(

camera_idx if cfg.data.precache_rays else None,

val_camera,

val_image,

)

# Update stats with the validation metrics. ##更新指标 即损失函数的加权

stats.update(val_metrics, stat_set="val")

stats.print(stat_set="val")

if viz is not None:

# Plot that loss curves into visdom.

stats.plot_stats(

viz=viz,

visdom_env=cfg.visualization.visdom_env,

plot_file=None,

)

# 可视化中间结果。

visualize_nerf_outputs(

val_nerf_out, visuals_cache, viz, cfg.visualization.visdom_env

)

# Set the model back to train mode.

model.train()

# Checkpoint.

if (

epoch % cfg.checkpoint_epoch_interval == 0

and len(cfg.checkpoint_path) > 0

and epoch > 0

):

print(f"Storing checkpoint {checkpoint_path}.")

data_to_store = {

"model": model.state_dict(),

"optimizer": optimizer.state_dict(),

"stats": pickle.dumps(stats),

}

torch.save(data_to_store, checkpoint_path) ##保存训练模型参数数据到checkpoint

def precache_rays(

self,

cache_cameras: List[CamerasBase], #precache ray的n个camera的列表

cache_camera_hashes: List[str], #每个相机独特标识符的列表

):

"""

预缓存从相机“cache_cameras”列表中发出的光线,其中每个相机都用相应的哈希唯一标识来自`cache_camera_hashes`。

缓存的光线被移动到 cpu 并存储在 `self._renderer['coarse']._ray_cache`。

缓存具有相同哈希的两个相机时引发 `ValueError`。

参数:

cache_cameras:预先缓存了光线的“N”个摄像机的列表。

cache_camera_hashes:每个唯一标识符的“N”个列表

Precaches the rays emitted from the list of cameras `cache_cameras`,

where each camera is uniquely identified with the corresponding hash

from `cache_camera_hashes`.

The cached rays are moved to cpu and stored in

`self._renderer['coarse']._ray_cache`.

Raises `ValueError` when caching two cameras with the same hash.

Args:

cache_cameras: A list of `N` cameras for which the rays are pre-cached.

cache_camera_hashes: A list of `N` unique identifiers for each

camera from `cameras`.

"""

self._renderer["coarse"].raysampler.precache_rays(##预缓存光线

cache_cameras,

cache_camera_hashes,

)

def _process_ray_chunk(

self,

camera_hash: Optional[str],# pre-cached camera的唯一标识符

camera: CamerasBase,# 一批场景被渲染的cameara

image: torch.Tensor,# ground truth , shape(batch_size,,3)

chunk_idx: int, # 当前射线块的索引。

) -> dict:

"""

Samples and renders a chunk of rays.

Args:

camera_hash: A unique identifier of a pre-cached camera.

If `None`, the cache is not searched and the sampled rays are

calculated from scratch.

camera: A batch of cameras from which the scene is rendered.

image: A batch of corresponding ground truth images of shape

('batch_size', ·, ·, 3).

chunk_idx: The index of the currently rendered ray chunk.

Returns:

out: `dict` containing the outputs of the rendering:

`rgb_coarse`: The result of the coarse rendering pass.

`rgb_fine`: The result of the fine rendering pass.

`rgb_gt`: The corresponding ground-truth RGB values.

return :

out: `dict` 包含渲染的输出:

`rgb_coarse`:粗略渲染过程的结果。

`rgb_fine`:精细渲染通道的结果。

`rgb_gt`:对应的ground-truth RGB值。

"""

# Initialize the outputs of the coarse rendering to None.

coarse_ray_bundle = None##orgin direction depth xy二维坐标

coarse_weights = None

# First evaluate the coarse rendering pass, then the fine one.

for renderer_pass in ("coarse", "fine"):

(rgb, weights), ray_bundle_out = self._renderer[renderer_pass](

cameras=camera,

volumetric_function=self._implicit_function[renderer_pass],

chunksize=self._chunk_size_test,

chunk_idx=chunk_idx,

density_noise_std=(self._density_noise_std if self.training else 0.0),

input_ray_bundle=coarse_ray_bundle,

ray_weights=coarse_weights,

camera_hash=camera_hash,

)

if renderer_pass == "coarse":

rgb_coarse = rgb

# Store the weights and the rays of the first rendering pass

# for the ensuing importance ray-sampling of the fine render.

#存储第一个渲染通道的权重和光线,用于精细渲染的后续重要性光线采样。

coarse_ray_bundle = ray_bundle_out##第一次粗渲染的结果保存用于精细渲染

coarse_weights = weights

if image is not None:

# Sample the ground truth images at the xy locations of the

# rendering ray pixels.

# 在xy位置对ground truth图像进行采样,渲染光线像素。

rgb_gt = sample_images_at_mc_locs(

image[..., :3][None],

ray_bundle_out.xys,

)

else:

rgb_gt = None

elif renderer_pass == "fine":

rgb_fine = rgb

else:

raise ValueError(f"No such rendering pass {renderer_pass}")

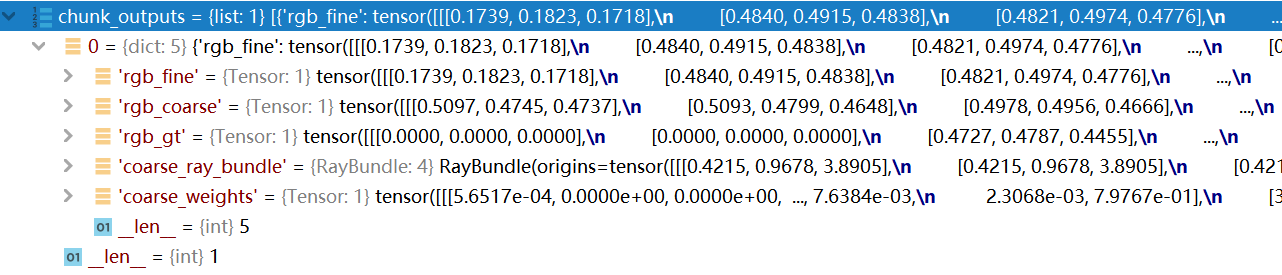

out = {"rgb_fine": rgb_fine, "rgb_coarse": rgb_coarse, "rgb_gt": rgb_gt}

if self.visualization:

# Store the coarse rays/weights only for visualization purposes.

# 存储粗射线/权重仅用于可视化目的。

out["coarse_ray_bundle"] = type(coarse_ray_bundle)(

*[v.detach().cpu() for k, v in coarse_ray_bundle._asdict().items()]

)

out["coarse_weights"] = coarse_weights.detach().cpu()

##切断梯度

return out

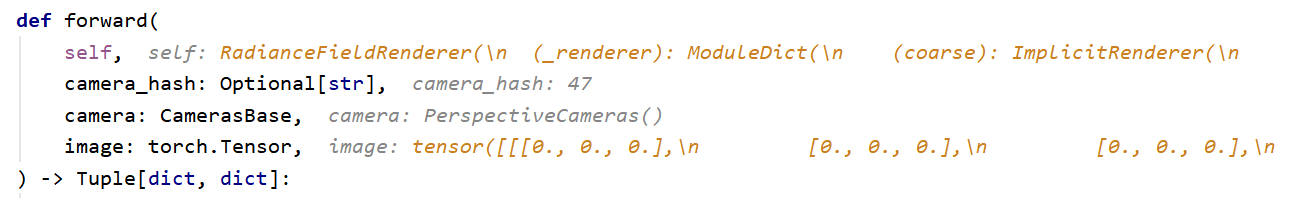

def forward(

self,

camera_hash: Optional[str],

camera: CamerasBase,

image: torch.Tensor,

) -> Tuple[dict, dict]:

"""

从输入的“camera”的角度执行辐射场的粗略和精细的渲染传递。

之后,两种渲染方式都与输入的地面真实“图像”相比较。

通过评估峰值信噪比和均方误差.

The rendering result depends on the `self.training` flag:

- In the training mode (`self.training==True`),

该函数renders图像射线的随机子集(MonteCarlo rendering)。

- In evaluation mode (`self.training==False`),

该函数renders整个图像。为了防止内存不足的错误,

当 `self.training==False` 时,对光线进行采样和渲染

批量大小为“chunksize”。

参数:

camera_hash:预缓存相机的唯一标识符。

如果为“None”,则不搜索缓存并且采样光线

从头计算。

camera:渲染场景的一批相机。

image: 一批对应形状为

('batch_size', ·, ·, 3)的ground truth图像

Returns:

out: `dict` 包含渲染的输出:

`rgb_coarse`:粗略渲染过程的结果。

`rgb_fine`:精细渲染通道的结果。

`rgb_gt`:对应的ground-truth RGB值。

The shape of `rgb_coarse`, `rgb_fine`, `rgb_gt` depends on the

`self.training` flag:

If `==True`, all 3 tensors are of shape

`(batch_size, n_rays_per_image, 3)` and contain the result

of the Monte Carlo training rendering pass.

If `==False`, all 3 tensors are of shape

`(batch_size, image_size[0], image_size[1], 3)` and contain

the result of the full image rendering pass.

metrics: `dict` 包含比较细和粗渲染到实际情况的误差度量:

`mse_coarse`:粗略渲染和渲染之间的均方误差

输入`图像`

`mse_fine`:精细渲染和渲染之间的均方误差

输入`图像`

`psnr_coarse`:粗略渲染和粗糙渲染之间的峰值信噪比

输入`图像`

`psnr_fine`:精细渲染和精细渲染之间的峰值信噪比

输入`图像`

"""

if not self.training:

# Full evaluation pass.

n_chunks = self._renderer["coarse"].raysampler.get_n_chunks(#获取chunk数即块数

self._chunk_size_test,

camera.R.shape[0],

)

else:

# 如果在测试的话完整的渲染.

n_chunks = 1

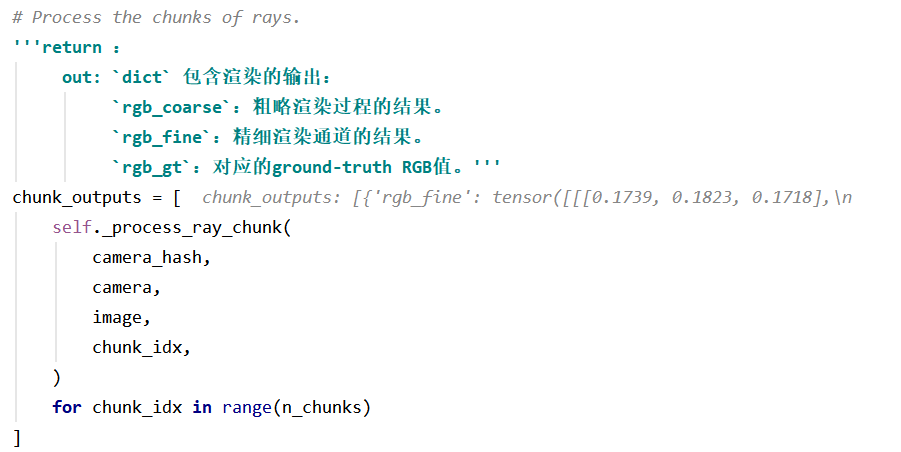

# Process the chunks of rays.

'''return :

out: `dict` 包含渲染的输出:

`rgb_coarse`:粗略渲染过程的结果。

`rgb_fine`:精细渲染通道的结果。

`rgb_gt`:对应的ground-truth RGB值。'''

chunk_outputs = [

self._process_ray_chunk(

camera_hash,

camera,

image,

chunk_idx,

)

for chunk_idx in range(n_chunks)

]

if not self.training:

# For a full render pass concatenate the output chunks,

# and reshape to image size.

# 对于完整的渲染过程,连接输出块,并重塑为图像大小。

out = {

k: torch.cat(

[ch_o[k] for ch_o in chunk_outputs],

dim=1,

).view(-1, *self._image_size, 3)

if chunk_outputs[0][k] is not None

else None

for k in ("rgb_fine", "rgb_coarse", "rgb_gt")

}

else:

out = chunk_outputs[0]

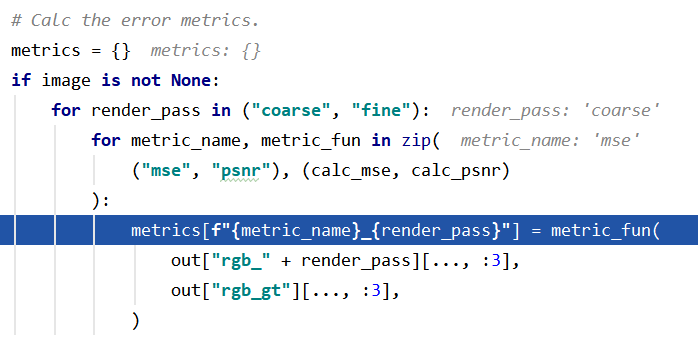

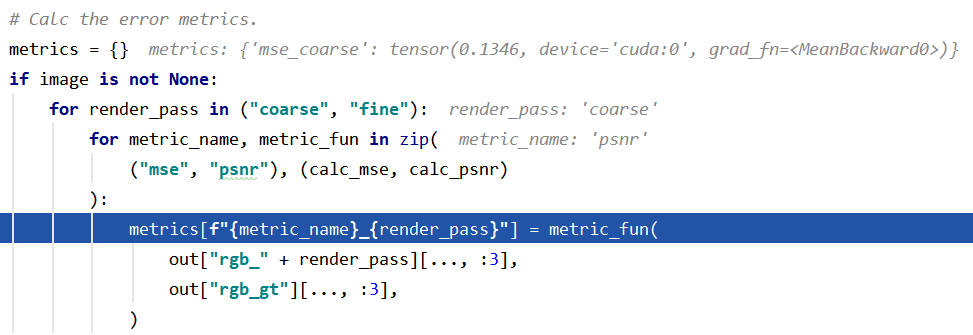

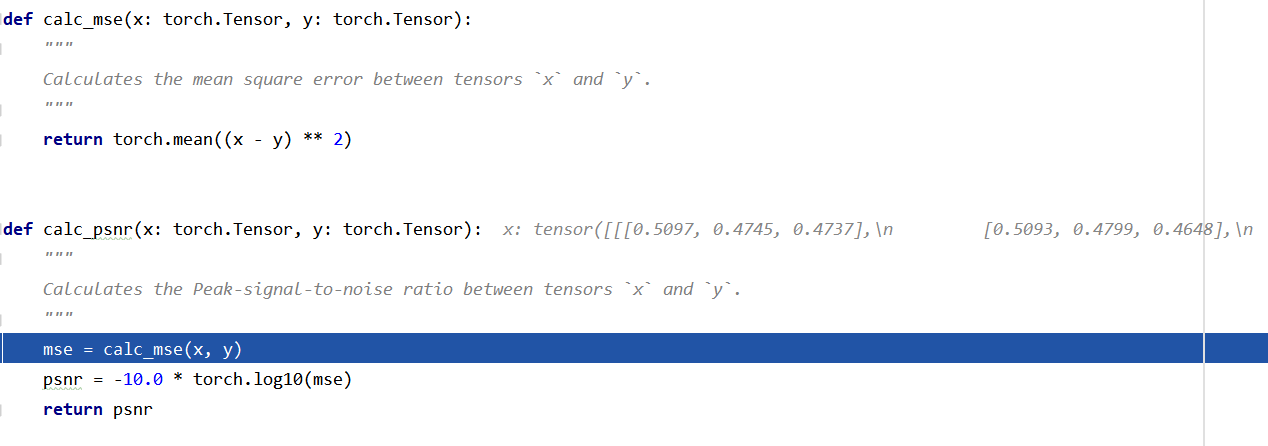

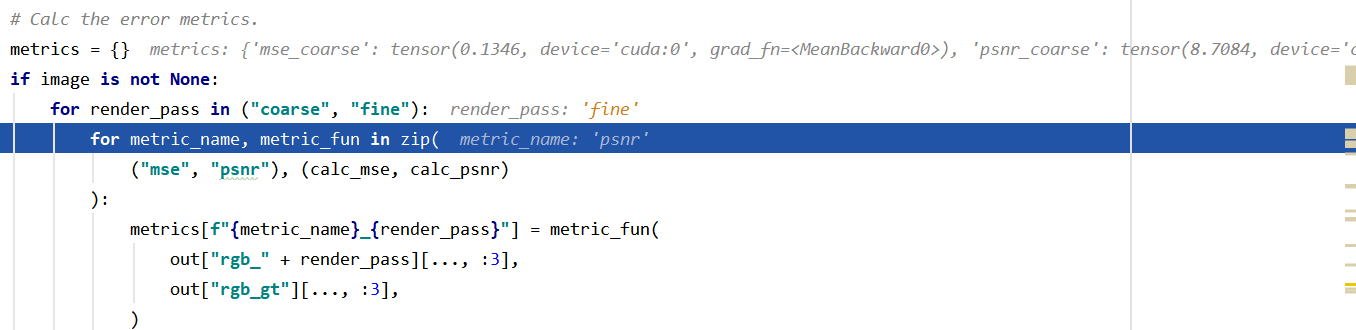

# Calc the error metrics.

metrics = {}

if image is not None:

for render_pass in ("coarse", "fine"):

for metric_name, metric_fun in zip(

("mse", "psnr"), (calc_mse, calc_psnr)

):

metrics[f"{metric_name}_{render_pass}"] = metric_fun(

out["rgb_" + render_pass][..., :3],

out["rgb_gt"][..., :3],

)

return out, metrics

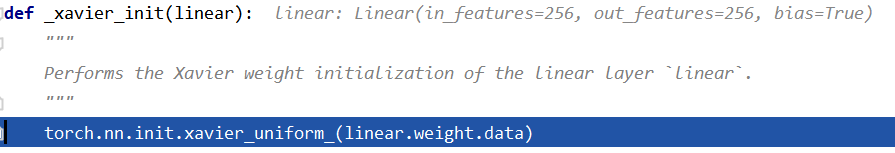

class MLPWithInputSkips(torch.nn.Module):

"""

实现神经辐射场的多层感知器架构。

因此,`MLPWithInputSkips` 是一个多层感知器,包括

具有 ReLU 激活的线性层序列。

Additionally, for a set of predefined layers `input_skips`, the forward pass

appends a skip tensor `z` to the output of the preceding layer.

此外,对于一组预定义层 `input_skips`,前向传递

在前一层的输出上附加一个跳过张量“z”。

Note that this follows the architecture described in the Supplementary

Material (Fig. 7) of [1].

References:

[1] Ben Mildenhall and Pratul P. Srinivasan and Matthew Tancik

and Jonathan T. Barron and Ravi Ramamoorthi and Ren Ng:

NeRF: Representing Scenes as Neural Radiance Fields for View

Synthesis, ECCV2020

"""

def __init__(

self,

n_layers: int,

input_dim: int,

output_dim: int,

skip_dim: int,

hidden_dim: int,

input_skips: Tuple[int] = (),

):

"""

Args:

n_layers: The number of linear layers of the MLP.

input_dim: The number of channels of the input tensor.

output_dim: The number of channels of the output.

skip_dim: The number of channels of the tensor `z` appended when

evaluating the skip layers.

hidden_dim: The number of hidden units of the MLP.

input_skips: The list of layer indices at which we append the skip

tensor `z`.

"""

super().__init__()

layers = []

for layeri in range(n_layers):

if layeri == 0:

dimin = input_dim

dimout = hidden_dim

elif layeri in input_skips:

dimin = hidden_dim + skip_dim

dimout = hidden_dim

else:

dimin = hidden_dim

dimout = hidden_dim

linear = torch.nn.Linear(dimin, dimout)

_xavier_init(linear)

layers.append(torch.nn.Sequential(linear, torch.nn.ReLU(True)))

self.mlp = torch.nn.ModuleList(layers)

self._input_skips = set(input_skips)

def forward(self, x: torch.Tensor, z: torch.Tensor) -> torch.Tensor:

"""

Args:

x: The input tensor of shape `(..., input_dim)`.

z: The input skip tensor of shape `(..., skip_dim)` which is appended

to layers whose indices are specified by `input_skips`.

Returns:

y: The output tensor of shape `(..., output_dim)`.

"""

y = x

for li, layer in enumerate(self.mlp):

if li in self._input_skips:

y = torch.cat((y, z), dim=-1)

y = layer(y)

return y

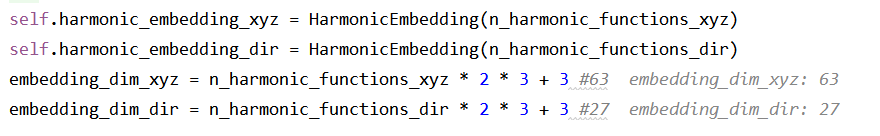

self.harmonic_embedding_xyz = HarmonicEmbedding(n_harmonic_functions_xyz)

谐波嵌入层将输入的三维坐标转换为更适合于深度神经网络处理的表示。

使用高频函数将输入映射到高维空间,可以更好地拟合包含高频变化的数据

class NeuralRadianceField(torch.nn.Module):

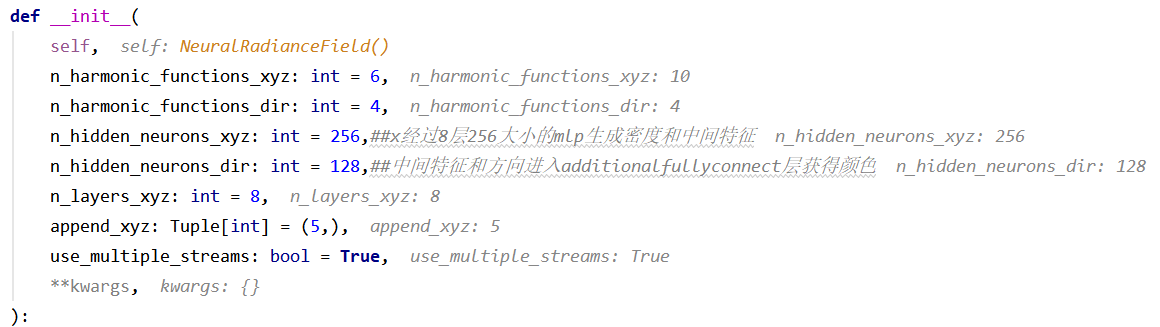

def __init__(

self,

n_harmonic_functions_xyz: int = 6,

n_harmonic_functions_dir: int = 4,

n_hidden_neurons_xyz: int = 256,##x经过8层256大小的mlp生成密度和中间特征

n_hidden_neurons_dir: int = 128,##中间特征和方向进入additionalfullyconnect层获得颜色

n_layers_xyz: int = 8,

append_xyz: Tuple[int] = (5,),

use_multiple_streams: bool = True,

**kwargs,

):

"""

Args:

n_harmonic_functions_xyz: 用于形成三维点位置谐波嵌入的调和函数的数目。

n_harmonic_functions_dir: 用于形成光线方向的谐波嵌入的调和函数的数目。

n_hidden_neurons_xyz: MLP的全连接层中接受3D点位置并输出具有中间特征的占用字段的隐藏单元数。

n_hidden_neurons_dir: MLP全连接层中接受中间特征和射线方向并输出辐射场(每点颜色)的隐藏单元数。

n_layers_xyz: 输出占用字段的MLP的层数。

append_xyz: 占用MLP的跳过层的索引列表。

use_multiple_streams: 是否应在单独的CUDA流上计算密度和颜色。

"""

super().__init__()

# 谐波嵌入层将输入的三维坐标转换为更适合于深度神经网络处理的表示。

# 使用高频函数将输入映射到高维空间,可以更好地拟合包含高频变化的数据

self.harmonic_embedding_xyz = HarmonicEmbedding(n_harmonic_functions_xyz)

self.harmonic_embedding_dir = HarmonicEmbedding(n_harmonic_functions_dir)

embedding_dim_xyz = n_harmonic_functions_xyz * 2 * 3 + 3 #63

embedding_dim_dir = n_harmonic_functions_dir * 2 * 3 + 3 #27

self.mlp_xyz = MLPWithInputSkips(##使用多层感知机

n_layers_xyz,

embedding_dim_xyz,

n_hidden_neurons_xyz,

embedding_dim_xyz,

n_hidden_neurons_xyz,

input_skips=append_xyz,

)

self.intermediate_linear = torch.nn.Linear(

n_hidden_neurons_xyz, n_hidden_neurons_xyz

)

_xavier_init(self.intermediate_linear)

self.density_layer = torch.nn.Linear(n_hidden_neurons_xyz, 1)#生成一个密度

_xavier_init(self.density_layer)

# Zero the bias of the density layer to avoid

# a completely transparent initialization.

# 将密度层的偏差归零以避免

# 一个完全透明的初始化。

self.density_layer.bias.data[:] = 0.0 # fixme: Sometimes this is not enough

self.color_layer = torch.nn.Sequential(

LinearWithRepeat(#输入是经过mlp全连接层后的中间特征和位置编码过后的direction

n_hidden_neurons_xyz + embedding_dim_dir, n_hidden_neurons_dir

),#输出是光线上每个点的特征以及该光线方向编码特征

torch.nn.ReLU(True),

torch.nn.Linear(n_hidden_neurons_dir, 3),

torch.nn.Sigmoid(),

)##获得一个rays_rgb图像

self.use_multiple_streams = use_multiple_streams

def _get_densities(

self,

features: torch.Tensor,

depth_values: torch.Tensor,

density_noise_std: float,

) -> torch.Tensor:

"""

This function takes `features` predicted by `self.mlp_xyz`

and converts them to `raw_densities` with `self.density_layer`.

`raw_densities` are later re-weighted using the depth step sizes

and mapped to [0-1] range with 1 - inverse exponential of `raw_densities`.

"""

raw_densities = self.density_layer(features)

deltas = torch.cat(

(

depth_values[..., 1:] - depth_values[..., :-1],

1e10 * torch.ones_like(depth_values[..., :1]),

),

dim=-1,

)[..., None]

if density_noise_std > 0.0:

raw_densities = (

raw_densities + torch.randn_like(raw_densities) * density_noise_std

)

densities = 1 - (-deltas * torch.relu(raw_densities)).exp()

return densities#(batchsize, 1024, 64, 1)

def _get_colors(

self, features: torch.Tensor, rays_directions: torch.Tensor

) -> torch.Tensor:

"""

This function takes per-point `features` predicted by `self.mlp_xyz`

and evaluates the color model in order to attach to each

point a 3D vector of its RGB color.

"""

# Normalize the ray_directions to unit l2 norm.先进行L2规则化

rays_directions_normed = torch.nn.functional.normalize(rays_directions, dim=-1)

# Obtain the harmonic embedding of the normalized ray directions.

rays_embedding = self.harmonic_embedding_dir(rays_directions_normed)#对其进行位置编码

return self.color_layer((self.intermediate_linear(features), rays_embedding))

#把特征和编码过后的direction输入到颜色层,得到(batchsize, 1024, 64, 3)

def _get_densities_and_colors(

self, features: torch.Tensor, ray_bundle: RayBundle, density_noise_std: float

) -> Tuple[torch.Tensor, torch.Tensor]:

"""

The second part of the forward calculation.

Args:

features: the output of the common mlp (the prior part of the

calculation), shape

(minibatch x ... x self.n_hidden_neurons_xyz).

ray_bundle: As for forward().

density_noise_std: As for forward().

Returns:

rays_densities: A tensor of shape `(minibatch, ..., num_points_per_ray, 1)`

denoting the opacity of each ray point.

rays_colors: A tensor of shape `(minibatch, ..., num_points_per_ray, 3)`

denoting the color of each ray point.

"""

if self.use_multiple_streams and features.is_cuda:

current_stream = torch.cuda.current_stream(features.device)

other_stream = torch.cuda.Stream(features.device)

other_stream.wait_stream(current_stream)

with torch.cuda.stream(other_stream):

rays_densities = self._get_densities(

features, ray_bundle.lengths, density_noise_std

)

# rays_densities.shape = [minibatch x ... x 1] in [0-1]

rays_colors = self._get_colors(features, ray_bundle.directions)

# rays_colors.shape = [minibatch x ... x 3] in [0-1]

current_stream.wait_stream(other_stream)

else:

# Same calculation as above, just serial.

rays_densities = self._get_densities(

features, ray_bundle.lengths, density_noise_std

)

rays_colors = self._get_colors(features, ray_bundle.directions)

return rays_densities, rays_colors

#两个输出,一个是rays_densities,shape (minibatch, ..., num_points_per_ray, 1),用于表示每个射线点的不透明度

#一个是rays_colors, shape (minibatch, …, num_points_per_ray, 3) 用于表示每个射线点的颜色

def forward(

self,

ray_bundle: RayBundle,

density_noise_std: float = 0.0,

**kwargs,

) -> Tuple[torch.Tensor, torch.Tensor]:

"""

The forward function accepts the parametrizations of

3D points sampled along projection rays. The forward

pass is responsible for attaching a 3D vector

and a 1D scalar representing the point's

RGB color and opacity respectively.

Args:

ray_bundle: A RayBundle object containing the following variables:

origins: A tensor of shape `(minibatch, ..., 3)` denoting the

origins of the sampling rays in world coords.

directions: A tensor of shape `(minibatch, ..., 3)`

containing the direction vectors of sampling rays in world coords.

lengths: A tensor of shape `(minibatch, ..., num_points_per_ray)`

containing the lengths at which the rays are sampled.

density_noise_std: A floating point value representing the

variance of the random normal noise added to the output of

the opacity function. This can prevent floating artifacts.

Returns:

rays_densities: A tensor of shape `(minibatch, ..., num_points_per_ray, 1)`

denoting the opacity of each ray point.

rays_colors: A tensor of shape `(minibatch, ..., num_points_per_ray, 3)`

denoting the color of each ray point.

"""

# We first convert the ray parametrizations to world

# coordinates with `ray_bundle_to_ray_points`.# 我们首先将光线参数化转换为世界与 `ray_bundle_to_ray_points` 坐标。

rays_points_world = ray_bundle_to_ray_points(ray_bundle)

# rays_points_world.shape = [minibatch x ... x 3]

# For each 3D world coordinate, we obtain its harmonic embedding.

embeds_xyz = self.harmonic_embedding_xyz(rays_points_world)

# embeds_xyz.shape = [minibatch x ... x self.n_harmonic_functions*6 + 3]

# self.mlp maps each harmonic embedding to a latent feature space.

features = self.mlp_xyz(embeds_xyz, embeds_xyz)

# features.shape = [minibatch x ... x self.n_hidden_neurons_xyz]

rays_densities, rays_colors = self._get_densities_and_colors(

features, ray_bundle, density_noise_std

)

return rays_densities, rays_colors

#两个输出,一个是rays_densities,shape (minibatch, ..., num_points_per_ray, 1),用于表示每个射线点的不透明度

#一个是rays_colors, shape (minibatch, …, num_points_per_ray, 3) 用于表示每个射线点的颜色

'''ray_bundle 包含了光束原点、方向、和长度等信息。

原点: shape (minibatch, ..., 3)

方向: shape (minibatch, ..., 3)

长度:shape (minibatch, ..., num_points_per_ray) 光线被采样的长度

return:

rays_densities : A tensor of shape (minibatch, ..., num_points_per_ray, 1) denoting the opacity of each ray point.

rays_colors: A tensor of shape (minibatch, ..., num_points_per_ray, 3) denoting the color of each ray point.'''

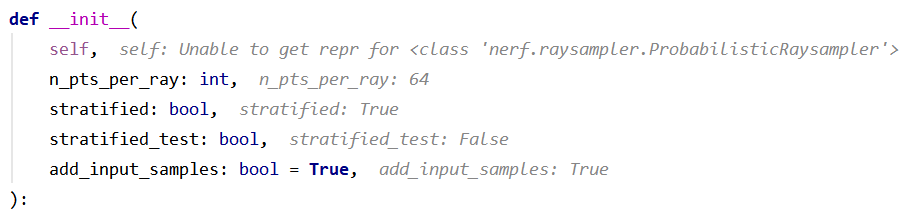

class ProbabilisticRaysampler(torch.nn.Module):

"""

实现沿光线的点的重要性采样。

输入是一个带有“ray_weights”张量的“RayBundle”对象,

该张量指定沿每条光线采样一个点的概率。

This raysampler is used for the fine rendering pass of NeRF.

As such, the forward pass accepts the RayBundle output by the

raysampling of the coarse rendering pass. Hence, it does not

take cameras as input.

"""

def __init__(

self,

n_pts_per_ray: int,

stratified: bool,

stratified_test: bool,

add_input_samples: bool = True,

):

"""

Args:

n_pts_per_ray: The number of points to sample along each ray.

stratified: If `True`, the input `ray_weights` are assumed to be

sampled at equidistant intervals.

stratified_test: Same as `stratified` with the difference that this

setting is applied when the module is in the `eval` mode

(`self.training==False`).

add_input_samples: Concatenates and returns the sampled values

together with the input samples.

"""

super().__init__()

self._n_pts_per_ray = n_pts_per_ray

self._stratified = stratified

self._stratified_test = stratified_test

self._add_input_samples = add_input_samples

def forward(

self,

input_ray_bundle: RayBundle,

ray_weights: torch.Tensor,

**kwargs,

) -> RayBundle:

"""

Args:

input_ray_bundle: An instance of `RayBundle` specifying the

source rays for sampling of the probability distribution.

ray_weights: A tensor of shape

`(..., input_ray_bundle.legths.shape[-1])` with non-negative

elements defining the probability distribution to sample

ray points from.

Returns:

ray_bundle: A new `RayBundle` instance containing the input ray

points together with `n_pts_per_ray` additional sampled

points per ray.

"""

# Calculate the mid-points between the ray depths.

z_vals = input_ray_bundle.lengths

batch_size = z_vals.shape[0]

# Carry out the importance sampling.

with torch.no_grad():

z_vals_mid = 0.5 * (z_vals[..., 1:] + z_vals[..., :-1])

z_samples = sample_pdf(

z_vals_mid.view(-1, z_vals_mid.shape[-1]),

ray_weights.view(-1, ray_weights.shape[-1])[..., 1:-1],

self._n_pts_per_ray,

det=not (

(self._stratified and self.training)

or (self._stratified_test and not self.training)

),

).view(batch_size, z_vals.shape[1], self._n_pts_per_ray)

if self._add_input_samples:

# Add the new samples to the input ones.

z_vals = torch.cat((z_vals, z_samples), dim=-1)

else:

z_vals = z_samples

# Resort by depth.

z_vals, _ = torch.sort(z_vals, dim=-1)

return RayBundle(

origins=input_ray_bundle.origins,

directions=input_ray_bundle.directions,

lengths=z_vals,

xys=input_ray_bundle.xys,

)

class NeRFRaysampler(torch.nn.Module):

"""

Implements the raysampler of NeRF.

Depending on the `self.training` flag, the raysampler either samples

a chunk of random rays (`self.training==True`), or returns a subset of rays

of the full image grid (`self.training==False`).

The chunking of rays allows for efficient evaluation of the NeRF implicit

surface function without encountering out-of-GPU-memory errors.

Additionally, this raysampler supports pre-caching of the ray bundles

for a set of input cameras (`self.precache_rays`).

Pre-caching the rays before training greatly speeds-up the ensuing

raysampling step of the training NeRF iterations.

实现 NeRF 的光线采样器。

根据 `self.training` 标志,光线采样器要么采样一大块随机光线(`self.training==True`),或返回光线子集完整的图像网格(`self.training==False`)。

光线的分块允许对 NeRF 隐式进行有效评估表面函数,而不会遇到 GPU 内存不足错误。

此外,此光线采样器支持光线束的预缓存,对于一组输入相机(`self.precache_rays`),在训练之前预先缓存光线可以大大加快随后的处理速度

训练 NeRF 迭代的射线采样步骤。

"""

def __init__(

self,

n_pts_per_ray: int,

min_depth: float,

max_depth: float,

n_rays_per_image: int,

image_width: int,

image_height: int,

stratified: bool = False,

stratified_test: bool = False,

):

"""

Args:

n_pts_per_ray: The number of points sampled along each ray.

min_depth: The minimum depth of a ray-point.

max_depth: The maximum depth of a ray-point.

n_rays_per_image: Number of Monte Carlo ray samples when training

(`self.training==True`).

image_width: The horizontal size of the image grid.

image_height: The vertical size of the image grid.

stratified: If `True`, stratifies (=randomly offsets) the depths

of each ray point during training (`self.training==True`).

stratified_test: If `True`, stratifies (=randomly offsets) the depths

of each ray point during evaluation (`self.training==False`).

n_pts_per_ray:沿每条射线采样的点数。

min_depth:射线点的最小深度。

max_depth:射线点的最大深度。

n_rays_per_image:训练时的蒙特卡洛射线样本数

(`self.training==True`)。

image_width:图像网格的水平尺寸。

image_height:图像网格的垂直尺寸。

分层:如果“真”,分层(=随机偏移)深度

训练期间的每个射线点(`self.training==True`)。

stratified_test:如果为“真”,则分层(=随机偏移)深度

评估期间的每个射线点(`self.training==False`)。

"""

super().__init__()

self._stratified = stratified

self._stratified_test = stratified_test

# Initialize the grid ray sampler.初始化网格射线采样器

self._grid_raysampler = NDCMultinomialRaysampler(

image_width=image_width,

image_height=image_height,

n_pts_per_ray=n_pts_per_ray,

min_depth=min_depth,

max_depth=max_depth,

)

# Initialize the Monte Carlo ray sampler.

self._mc_raysampler = MonteCarloRaysampler(

min_x=-1.0,

max_x=1.0,

min_y=-1.0,

max_y=1.0,

n_rays_per_image=n_rays_per_image,

n_pts_per_ray=n_pts_per_ray,

min_depth=min_depth,

max_depth=max_depth,

)

# create empty ray cache 创建新的光线缓存

self._ray_cache = {}

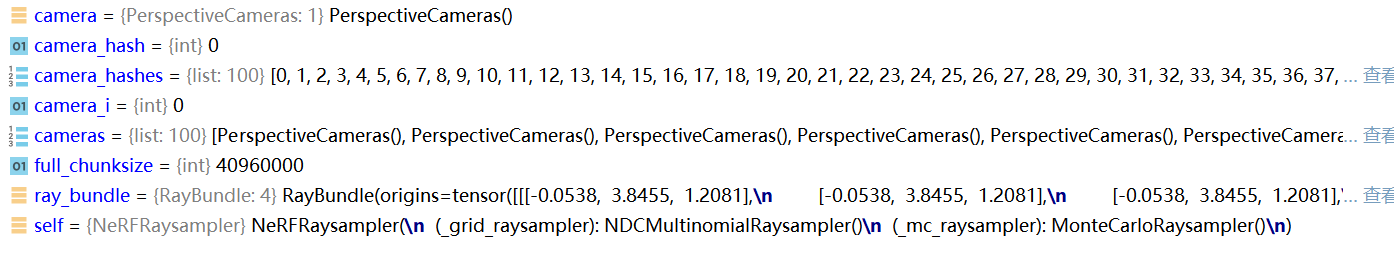

class NeRFRaysampler(torch.nn.Module)

def precache_rays(self, cameras: List[CamerasBase], camera_hashes: List):

self._renderer[“coarse”].raysampler.precache_rays

def precache_rays(self, cameras: List[CamerasBase], camera_hashes: List):

"""

预缓存从相机“cameras”列表中发出的光线,其中每个相机都用相应的哈希唯一标识来自`camera_hashes`。

缓存的光线被移动到 cpu 并存储在 `self._ray_cache` 中,缓存具有相同哈希的两个相机时引发 `ValueError`。

Args::

cameras:预先缓存了光线的“N”个相机的列表。

camera_hashes:每个的“N”个唯一标识符的列表,来自“cameras”的相机。

"""

print(f"Precaching {len(cameras)} ray bundles ...")

full_chunksize = ( #4096000

self._grid_raysampler._xy_grid.numel()

// 2

* self._grid_raysampler._n_pts_per_ray

)##每个chunk最多预缓存的光线数

if self.get_n_chunks(full_chunksize, 1) != 1:##只能是一个chunk来预缓存光线

raise ValueError("There has to be one chunk for precaching rays!")

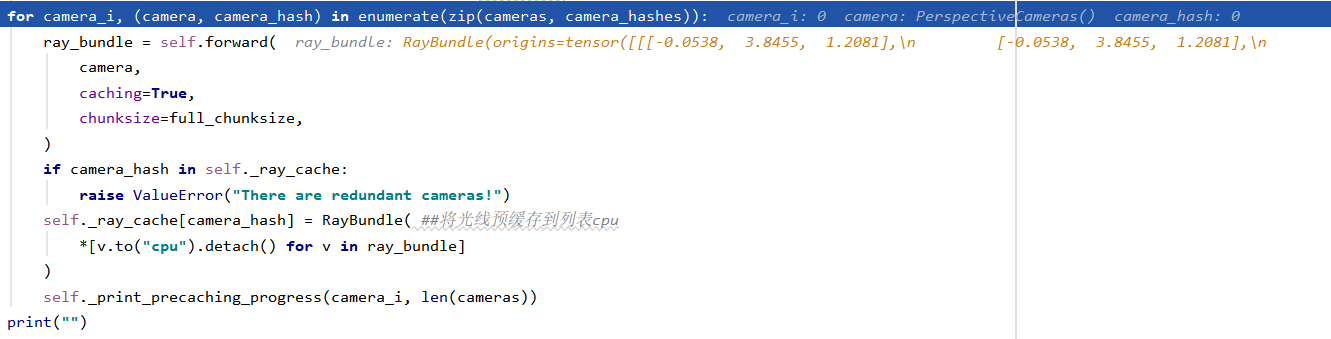

for camera_i, (camera, camera_hash) in enumerate(zip(cameras, camera_hashes)):

ray_bundle = self.forward(

camera,

caching=True,

chunksize=full_chunksize,

)

if camera_hash in self._ray_cache:

raise ValueError("There are redundant cameras!")

self._ray_cache[camera_hash] = RayBundle( ##将光线预缓存到列表cpu

*[v.to("cpu").detach() for v in ray_bundle]

)

self._print_precaching_progress(camera_i, len(cameras))

print("")

self

for camera_i, (camera, camera_hash) in enumerate(zip(cameras, camera_hashes)):

self.get_n_chunks(full_chunksize, 1)

def get_n_chunks(self, chunksize: int, batch_size: int):

"""

返回“chunksize”大小的光线采样器的光线块的总数。

参数:

chunksize:每个块的光线数量。

batch_size:光线采样器的批次大小。

return:

n_chunks:块的总数。

"""

return int(

math.ceil(#向上取整 numel->返回元素个数

(self._grid_raysampler._xy_grid.numel() * 0.5 * batch_size) / chunksize

)

)

ray_bundle = self.forward(

camera,

caching=True,

chunksize=full_chunksize,

)

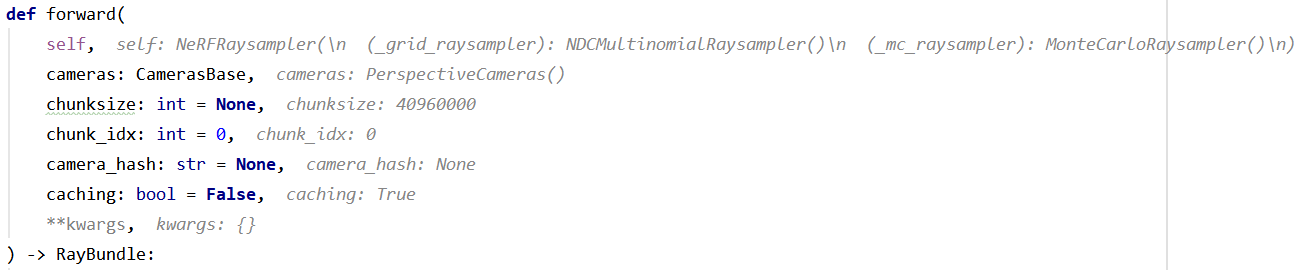

def forward(

self,

cameras: CamerasBase,

chunksize: int = None,

chunk_idx: int = 0,

camera_hash: str = None,

caching: bool = False,

**kwargs,

) -> RayBundle:

"""

Args:

cameras: 发出射线的一批“batch_size”照相机。

chunksize: 每块射线的数量。只在下列情况下激活 `self.training==False`.

chunk_idx: 射线块的索引。号码必须在

`[0, self.get_n_chunks(chunksize, batch_size)-1]`.

只在下列情况下激活 `self.training==False`.

camera_hash: 预缓存相机的唯一标识符。 If `None`,

缓存不被搜索,光线是从头开始计算的。

caching: If `True`, 激活缓存模式,该模式返回应该存储到缓存中的“RayBundle”。

Returns:

一个名为“RayBundle”的元组,具有以下字段:

origins: A tensor of shape

`(batch_size, n_rays_per_image, 3)`

在世界坐标中表示射线原点的位置。

directions: A tensor of shape

`(batch_size, n_rays_per_image, 3)`

表示世界坐标中每一条射线的方向。

lengths: A tensor of shape

`(batch_size, n_rays_per_image, n_pts_per_ray)`

包含世界单位中每条射线的z坐标(=depth).

xys: A tensor of shape

`(batch_size, n_rays_per_image, 2)`

包含每个射线的2D图像坐标。

"""

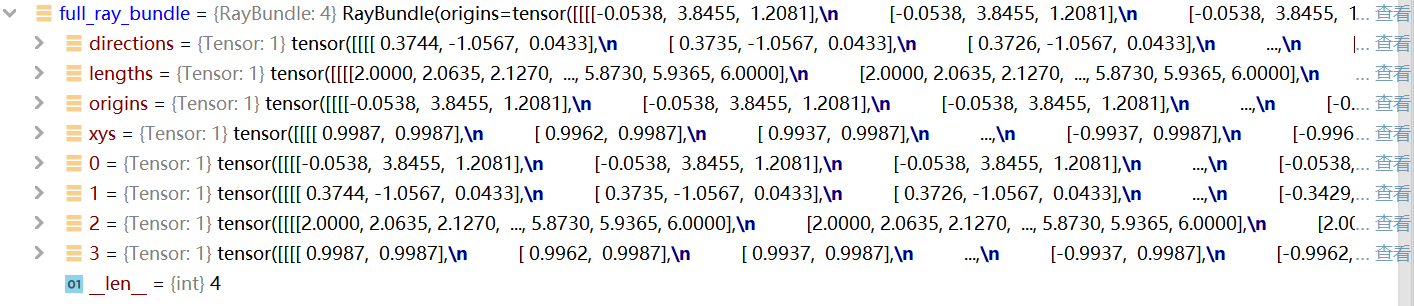

batch_size = cameras.R.shape[0] # pyre-ignore 1

device = cameras.device

if (camera_hash is None) and (not caching) and self.training:

# Sample random rays from scratch.从头开始采集光线

ray_bundle = self._mc_raysampler(cameras)

ray_bundle = self._normalize_raybundle(ray_bundle)

else:

if camera_hash is not None:

# 我们从缓存中取回相机的情况.

if batch_size != 1:

raise NotImplementedError(

"Ray caching works only for batches with a single camera!"

)

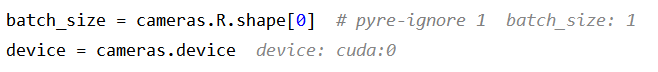

full_ray_bundle = self._ray_cache[camera_hash]#从光线缓存中取出光线

else:

# 我们从零开始生成一个完整的射线网格。

full_ray_bundle = self._grid_raysampler(cameras)

full_ray_bundle = self._normalize_raybundle(full_ray_bundle)

n_pixels = full_ray_bundle.directions.shape[:-1].numel() #640000

if self.training:

# During training we randomly subsample rays.对光线进行二次采样

sel_rays = torch.randperm(n_pixels, device=device)[

: self._mc_raysampler._n_rays_per_image

]

else:

# 如果我们进行测试,我们只接受所请求的块.直接取chunk

if chunksize is None:

chunksize = n_pixels * batch_size

start = chunk_idx * chunksize * batch_size #0

end = min(start + chunksize, n_pixels) #640000

sel_rays = torch.arange(

start,

end,

dtype=torch.long,

device=full_ray_bundle.lengths.device,

)

# 把整束射线中的“sel_rays”取出来。

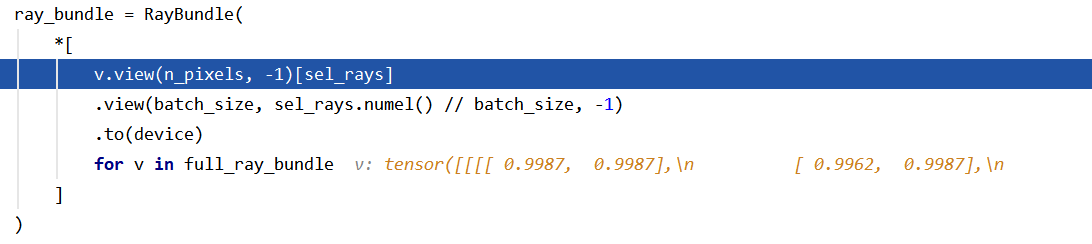

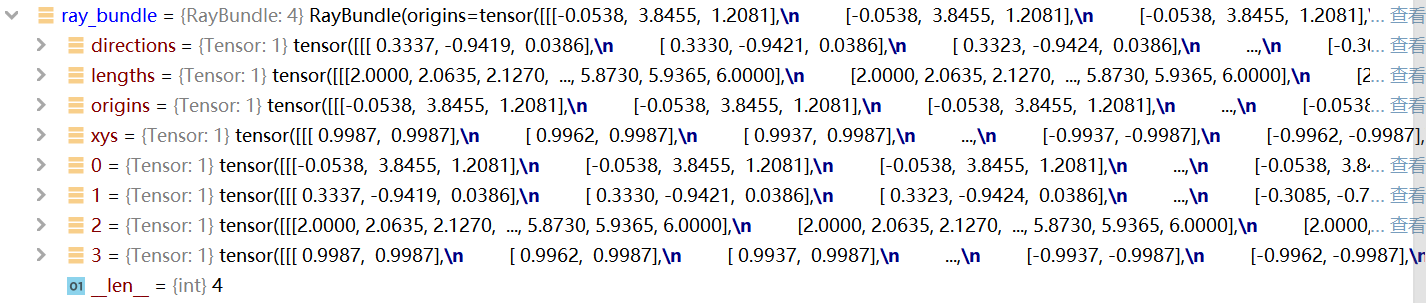

ray_bundle = RayBundle(

*[

v.view(n_pixels, -1)[sel_rays]

.view(batch_size, sel_rays.numel() // batch_size, -1)

.to(device)

for v in full_ray_bundle

]

)

if (

(self._stratified and self.training)

or (self._stratified_test and not self.training)

) and not caching: # 请确保缓存时不要分层!

ray_bundle = self._stratify_ray_bundle(ray_bundle)

return ray_bundle

self:

NeRFRaysampler(

(_grid_raysampler): NDCMultinomialRaysampler()

(_mc_raysampler): MonteCarloRaysampler()

)

full_ray_bundle = self._grid_raysampler(cameras)

ray_bundle = self._normalize_raybundle(ray_bundle)

def _normalize_raybundle(self, ray_bundle: RayBundle):

"""

将输入“RayBundle”的光线方向标准化为单位范数。

"""

ray_bundle = ray_bundle._replace(

directions=torch.nn.functional.normalize(ray_bundle.directions, dim=-1)

)

return ray_bundle

self._print_precaching_progress(camera_i, len(cameras))

def _print_precaching_progress(self, i, total, bar_len=30):

"""

打印光线缓存的进度条

"""

position = round((i + 1) / total * bar_len)

pbar = "[" + "█" * position + " " * (bar_len - position) + "]"

print(pbar, end="\r")

import torch

from pytorch3d.renderer import EmissionAbsorptionRaymarcher

from pytorch3d.renderer.implicit.raymarching import (

_check_density_bounds,

_check_raymarcher_inputs,

_shifted_cumprod,

)

class EmissionAbsorptionNeRFRaymarcher(EmissionAbsorptionRaymarcher):

"""

这本质上是`pytorch3d.renderer.EmissionAbsorptionRaymarcher`,返回渲染权重。

它也跳过返回 alpha-mask 的计算,在 NeRF 的情况下,到处等于 1

权重随后在NeRF管道中用于实现重要性 精细渲染过程的光线采样。

For more details about the EmissionAbsorptionRaymarcher please refer to

the documentation of `pytorch3d.renderer.EmissionAbsorptionRaymarcher`.

"""

def forward(

self,

rays_densities: torch.Tensor,

rays_features: torch.Tensor,

eps: float = 1e-10,

**kwargs,

) -> torch.Tensor:

"""

参数:

rays_densities:用张量表示的每射线密度值

形状为 `(..., n_points_per_ray, 1)`,其值范围为 [0, 1]。

rays_features:用张量表示的每条射线特征值

形状`(...,n_points_per_ray,feature_dim)`。

eps:计算前添加到“rays_densities”的下限,吸收函数(“1-rays_densities”的cumprod沿每条射线)。这可以防止 cumprod 产生精确的 0

这将抑制任何基于梯度的学习。

Return:

features:形状为 `(..., feature_dim)` 的张量,包含每条光线的渲染特征。

weights:形状为 `(..., n_points_per_ray)` 的张量,包含射线特定的发射吸收分布。每个射线分布 `(..., :)` 是一个有效概率分布,即它包含整合的非负值

到 1,这样 `weights.sum(dim=-1)==1).all()` 产生 `True`。

"""

_check_raymarcher_inputs(

rays_densities,

rays_features,

None,

z_can_be_none=True,

features_can_be_none=False,

density_1d=True,

)

_check_density_bounds(rays_densities)

##检查输入的ray_density and ray_color是否有误

rays_densities = rays_densities[..., 0]

absorption = _shifted_cumprod(

(1.0 + eps) - rays_densities, shift=self.surface_thickness

)

weights = rays_densities * absorption

#利用该式子计算weights(bs, 1024, 64) 当吸收系数大的时候,weights变小, 表明对当前颜色贡献小

features = (weights[..., None] * rays_features).sum(dim=-2)#rgb_putput

return features, weights

def new_epoch(self) -> None:

"""

Initializes a new epoch.

"""

if self.verbose:

print("stats: new epoch %d" % (self.epoch + 1))

self.epoch += 1 # increase epoch counter

self.reset() # zero the stats

# Update stats with the current metrics.

stats.update(

{"loss": float(loss), **metrics},

stat_set="train",

)

def update(self, preds: dict, stat_set: str = "train") -> None:

"""

Update the internal logs with metrics of a training step.

每个指标都存储为AverageMeter的实例。

Args:

preds: 要添加到日志中的值的Dict

stat_set: 待更新的统计数据集 (e.g. "train", "val").

"""

if self.epoch == -1: # uninitialized

warnings.warn(

"self.epoch==-1 means uninitialized stats structure"

" -> new_epoch() called"

)

self.new_epoch()

if stat_set not in self.stats:

self.stats[stat_set] = {}

self.it[stat_set] = -1

self.it[stat_set] += 1

epoch = self.epoch

it = self.it[stat_set]

for stat in self.log_vars:

if stat not in self.stats[stat_set]:

self.stats[stat_set][stat] = AverageMeter()

if stat == "sec/it": # compute speed

elapsed = time.time() - self._epoch_start

time_per_it = float(elapsed) / float(it + 1)

val = time_per_it

else:

if stat in preds:

val = self._gather_value(preds[stat])

else:

val = None

if val is not None:

self.stats[stat_set][stat].update(val, epoch=epoch, n=1)

def print(self, max_it: Optional[int] = None, stat_set: str = "train") -> None:

"""

Print the current values of all stored stats.

Args:

max_it: Maximum iteration number to be displayed.

If None, the maximum iteration number is not displayed.

stat_set: The set of statistics to be printed.

"""

epoch = self.epoch

stats = self.stats

str_out = ""

it = self.it[stat_set]

stat_str = ""

stats_print = sorted(stats[stat_set].keys())

for stat in stats_print:

if stats[stat_set][stat].count == 0:

continue

stat_str += " {0:.12}: {1:1.3f} |".format(stat, stats[stat_set][stat].avg)

head_str = f"[{stat_set}] | epoch {epoch} | it {it}"

if max_it:

head_str += f"/ {max_it}"

str_out = f"{head_str} | {stat_str}"

print(str_out)

def _gather_value(self, val):

if isinstance(val, float):

pass

else:

val = val.data.cpu().numpy()

val = float(val.sum())

return val

def plot_stats(

self,

viz: Visdom = None,

visdom_env: Optional[str] = None,

plot_file: Optional[str] = None,

) -> None:

"""

Plot the line charts of the history of the stats.

Args:

viz: The Visdom object holding the connection to a Visdom server.

visdom_env: The visdom environment for storing the graphs.

plot_file: The path to a file with training plots.

"""

stat_sets = list(self.stats.keys())

if viz is None:

withvisdom = False

elif not viz.check_connection():

warnings.warn("Cannot connect to the visdom server! Skipping visdom plots.")

withvisdom = False

else:

withvisdom = True

lines = []

for stat in self.log_vars:

vals = []

stat_sets_now = []

for stat_set in stat_sets:

val = self.stats[stat_set][stat].get_epoch_averages()

if val is None:

continue

else:

val = np.array(val).reshape(-1)

stat_sets_now.append(stat_set)

vals.append(val)

if len(vals) == 0:

continue

vals = np.stack(vals, axis=1)

x = np.arange(vals.shape[0])

lines.append((stat_sets_now, stat, x, vals))

if withvisdom:

for tmodes, stat, x, vals in lines:

title = "%s" % stat

opts = {"title": title, "legend": list(tmodes)}

for i, (tmode, val) in enumerate(zip(tmodes, vals.T)):

update = "append" if i > 0 else None

valid = np.where(np.isfinite(val))

if len(valid) == 0:

continue

viz.line(

Y=val[valid],

X=x[valid],

env=visdom_env,

opts=opts,

win=f"stat_plot_{title}",

name=tmode,

update=update,

)

if plot_file is None:

plot_file = self.plot_file

if plot_file is not None:

print("Exporting stats to %s" % plot_file)

ncol = 3

nrow = int(np.ceil(float(len(lines)) / ncol))

matplotlib.rcParams.update({"font.size": 5})

color = cycle(plt.cm.tab10(np.linspace(0, 1, 10)))

fig = plt.figure(1)

plt.clf()

for idx, (tmodes, stat, x, vals) in enumerate(lines):

c = next(color)

plt.subplot(nrow, ncol, idx + 1)

for vali, vals_ in enumerate(vals.T):

c_ = c * (1.0 - float(vali) * 0.3)

valid = np.where(np.isfinite(vals_))

if len(valid) == 0:

continue

plt.plot(x[valid], vals_[valid], c=c_, linewidth=1)

plt.ylabel(stat)

plt.xlabel("epoch")

plt.gca().yaxis.label.set_color(c[0:3] * 0.75)

plt.legend(tmodes)

gcolor = np.array(mcolors.to_rgba("lightgray"))

plt.grid(

b=True, which="major", color=gcolor, linestyle="-", linewidth=0.4

)

plt.grid(

b=True, which="minor", color=gcolor, linestyle="--", linewidth=0.2

)

plt.minorticks_on()

plt.tight_layout()

plt.show()

fig.savefig(plot_file)

class RayBundle(NamedTuple):

"""

RayBundle通过存储射线“origins”,沿投影射线参数化点,

`directions` vectors and `lengths` at which the ray-points are sampled.

此外,射线像素的XY位置(‘xys’)也被存储。

请注意,“directions”不必标准化;

它们在各自的一维坐标系中定义单位向量;

有关转换公式,请参见:func:“Ray_bundle_to_ray_points”的文档。

"""

origins: torch.Tensor

directions: torch.Tensor

lengths: torch.Tensor

xys: torch.Tensor

class NDCMultinomialRaysampler(MultinomialRaysampler):

"""

在一批矩形图像网格中有规律地分布的沿着射线的固定数目的点。

沿每条射线的点在预定义的最小深度和最大深度之间有均匀的z坐标.

`NDCMultinomialRaysampler‘遵循`Mesh’和‘PointClouds’渲染的屏幕惯例。

即像素坐标在[-1,1]x[-u,u]或[-u,u]x[-1,1]中,其中u>1是图像的纵横比。

有关参数的说明,请参阅给MultinomialRaySampler的文档。

"""

def __init__(

self,

*,

image_width: int,

image_height: int,

n_pts_per_ray: int,

min_depth: float,

max_depth: float,

n_rays_per_image: Optional[int] = None,

unit_directions: bool = False,

stratified_sampling: bool = False,

) -> None:

if image_width >= image_height:

range_x = image_width / image_height

range_y = 1.0

else:

range_x = 1.0

range_y = image_height / image_width

half_pix_width = range_x / image_width

half_pix_height = range_y / image_height

super().__init__(

min_x=range_x - half_pix_width,

max_x=-range_x + half_pix_width,

min_y=range_y - half_pix_height,

max_y=-range_y + half_pix_height,

image_width=image_width,

image_height=image_height,

n_pts_per_ray=n_pts_per_ray,

min_depth=min_depth,

max_depth=max_depth,

n_rays_per_image=n_rays_per_image,

unit_directions=unit_directions,

stratified_sampling=stratified_sampling,

)

class MultinomialRaysampler(torch.nn.Module):

"""

沿着规则分布的射线对固定数量的点进行采样

在一批矩形图像网格中.

每条射线上的点 在预定义的 最小和最大深度 之间.

raysampler首先生成以下形式的3D坐标网格:

```

/ min_x, min_y, max_depth -------------- / max_x, min_y, max_depth

/ /|

/ / | ^

/ min_depth min_depth / | |

min_x ----------------------------- max_x | | image

min_y min_y | | height

| | | |

| | | v

| | |

| | / max_x, max_y, ^

| | / max_depth /

min_x max_y / / n_pts_per_ray

max_y ----------------------------- max_x/ min_depth v

< --- image_width --- >

```

In order to generate ray points, `MultinomialRaysampler` takes each 3D point of

the grid (with coordinates `[x, y, depth]`) and unprojects it

with `cameras.unproject_points([x, y, depth])`, where `cameras` are an

additional input to the `forward` function.

Note that this is a generic implementation that can support any image grid

coordinate convention. For a raysampler which follows the PyTorch3D

coordinate conventions please refer to `NDCMultinomialRaysampler`.

As such, `NDCMultinomialRaysampler` is a special case of `MultinomialRaysampler`.

"""

def __init__(

self,

*,

min_x: float,

max_x: float,

min_y: float,

max_y: float,

image_width: int,

image_height: int,

n_pts_per_ray: int,

min_depth: float,

max_depth: float,

n_rays_per_image: Optional[int] = None,

unit_directions: bool = False,

stratified_sampling: bool = False,

) -> None:

"""

Args: