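The BufferManager class handles the allocation and deallocation of host and device buffers.

This RAII class handles host and device buffer allocation and deallocation, memcpy between host and device buffers to aid inference, and debugging dumps to validate inference. The BufferManager class is meant to simplify buffer management and the interaction between buffers and the engine.

The code is located at: TensorRT\samples\common\buffers.h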

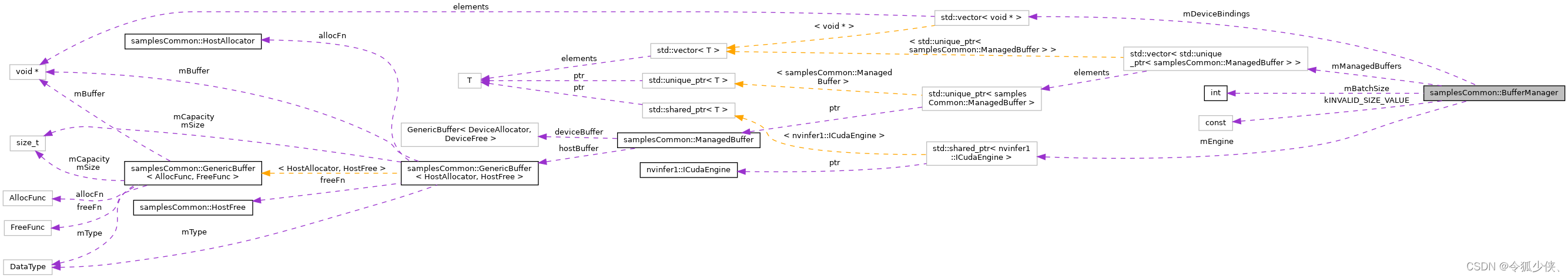

Collaboration diagram

Public Member

BufferManager

The BufferManager constructor allocates the host and device buffer memory, creating a BufferManager that handles buffer interactions with the engine. One host/device buffer pair is allocated per engine binding. The implementation is as follows:

    BufferManager(std::shared_ptr<nvinfer1::ICudaEngine> engine, const int batchSize = 0,
        const nvinfer1::IExecutionContext* context = nullptr)
        : mEngine(engine), mBatchSize(batchSize)
    {
        // A non-zero batch size is only accepted for implicit-batch engines.
        assert(engine->hasImplicitBatchDimension() || mBatchSize == 0);
        for (int i = 0; i < mEngine->getNbBindings(); i++)
        {
            // Use the context's dimensions when a context is supplied.
            auto dims = context ? context->getBindingDimensions(i) : mEngine->getBindingDimensions(i);
            size_t vol = context || !mBatchSize ? 1 : static_cast<size_t>(mBatchSize);
            nvinfer1::DataType type = mEngine->getBindingDataType(i);
            int vecDim = mEngine->getBindingVectorizedDim(i);
            if (-1 != vecDim)
            {
                // Vectorized binding: round the vectorized dimension up to whole vectors.
                int scalarsPerVec = mEngine->getBindingComponentsPerElement(i);
                dims.d[vecDim] = divUp(dims.d[vecDim], scalarsPerVec);
                vol *= scalarsPerVec;
            }
            vol *= samplesCommon::volume(dims);
            std::unique_ptr<ManagedBuffer> manBuf{new ManagedBuffer()};
            manBuf->deviceBuffer = DeviceBuffer(vol, type);
            manBuf->hostBuffer = HostBuffer(vol, type);
            mDeviceBindings.emplace_back(manBuf->deviceBuffer.data());
            mManagedBuffers.emplace_back(std::move(manBuf));
        }
    }
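The size arithmetic in the constructor can be tried out without TensorRT. The following is a minimal sketch, not the sample's own code: `divUp` and `volume` are stand-ins written here for the helpers in common.h, dimensions are held in a plain `std::vector`, and `elementCount` is a hypothetical helper that ignores the `context` argument (with a context, the constructor always starts from a volume of 1).

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Stand-in for the samples' divUp helper: integer division rounding up.
inline std::int64_t divUp(std::int64_t a, std::int64_t b)
{
    return (a + b - 1) / b;
}

// Stand-in for samplesCommon::volume: product of all dimensions.
inline std::size_t volume(const std::vector<std::int64_t>& dims)
{
    std::size_t v = 1;
    for (std::int64_t d : dims)
        v *= static_cast<std::size_t>(d);
    return v;
}

// Hypothetical helper mirroring the constructor's arithmetic.
// batchSize == 0 means explicit batch; vecDim == -1 means "not vectorized".
inline std::size_t elementCount(
    std::vector<std::int64_t> dims, int batchSize, int vecDim, int scalarsPerVec)
{
    std::size_t vol = batchSize ? static_cast<std::size_t>(batchSize) : 1;
    if (vecDim != -1)
    {
        assert(scalarsPerVec > 0);
        // Count whole vectors along vecDim, then multiply the scalars back in.
        dims[vecDim] = divUp(dims[vecDim], scalarsPerVec);
        vol *= static_cast<std::size_t>(scalarsPerVec);
    }
    return vol * volume(dims);
}
```

For example, `elementCount({1, 3, 224, 224}, 0, -1, 1)` gives 150528 elements, while the same shape vectorized along dimension 1 with 4 scalars per vector, `elementCount({1, 3, 224, 224}, 0, 1, 4)`, gives 200704, because the 3 channels are padded up to one full vector of 4.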

getDeviceBindings

Returns a vector of device buffers that can be passed directly as the bindings for the execute and enqueue methods of IExecutionContext.

    const std::vector<void*>& getDeviceBindings() const
    {
        return mDeviceBindings;
    }

getDeviceBuffer

Returns the device buffer corresponding to tensorName. Returns nullptr if no such tensor can be found.

    void* getDeviceBuffer(const std::string& tensorName) const
    {
        return getBuffer(false, tensorName);
    }

getHostBuffer

Returns the host buffer corresponding to tensorName. Returns nullptr if no such tensor can be found.

    void* getHostBuffer(const std::string& tensorName) const
    {
        return getBuffer(true, tensorName);
    }

size

Returns the size, in bytes, of the host and device buffers corresponding to tensorName (both have the same size). Returns kINVALID_SIZE_VALUE if no such tensor can be found.

    size_t size(const std::string& tensorName) const
    {
        int index = mEngine->getBindingIndex(tensorName.c_str());
        if (index == -1)
            return kINVALID_SIZE_VALUE;
        return mManagedBuffers[index]->hostBuffer.nbBytes();
    }
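Because `size` signals a missing tensor with a sentinel rather than an exception, callers are expected to compare the result against kINVALID_SIZE_VALUE before using it. The sketch below illustrates that pattern under stated assumptions: `SizeTable` and `makeExampleTable` are hypothetical, and a `std::map` replaces the engine's name-to-index lookup.

```cpp
#include <cassert>
#include <cstddef>
#include <limits>
#include <map>
#include <string>

// Same expression as BufferManager::kINVALID_SIZE_VALUE: all bits set.
constexpr std::size_t kINVALID_SIZE_VALUE = ~std::size_t(0);

// Hypothetical stand-in for the engine's name-to-buffer bookkeeping.
class SizeTable
{
public:
    void add(const std::string& tensorName, std::size_t nbBytes)
    {
        mSizes[tensorName] = nbBytes;
    }

    // Returns the byte size for tensorName, or the sentinel if it is unknown.
    std::size_t size(const std::string& tensorName) const
    {
        auto it = mSizes.find(tensorName);
        return it == mSizes.end() ? kINVALID_SIZE_VALUE : it->second;
    }

private:
    std::map<std::string, std::size_t> mSizes;
};

// Builds a table holding one known tensor, used for illustration only.
inline SizeTable makeExampleTable()
{
    SizeTable table;
    table.add("input", 150528 * sizeof(float));
    return table;
}
```

A lookup such as `makeExampleTable().size("missing")` returns the sentinel, which equals the largest value a `size_t` can hold, so it cannot be confused with a real buffer size.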

print

Templated print function that dumps a buffer of arbitrary type to a std::ostream. The rowCount parameter controls the number of elements printed on each line. A rowCount of 1 means that there is only one element per line.

    template <typename T>
    void print(std::ostream& os, void* buf, size_t bufSize, size_t rowCount)
    {
        assert(rowCount != 0);
        assert(bufSize % sizeof(T) == 0);
        T* typedBuf = static_cast<T*>(buf);
        size_t numItems = bufSize / sizeof(T);
        for (int i = 0; i < static_cast<int>(numItems); i++)
        {
            // Handle rowCount == 1 case
            if (rowCount == 1 && i != static_cast<int>(numItems) - 1)
                os << typedBuf[i] << std::endl;
            else if (rowCount == 1)
                os << typedBuf[i];
            // Handle rowCount > 1 case
            else if (i % rowCount == 0)
                os << typedBuf[i];
            else if (i % rowCount == rowCount - 1)
                os << " " << typedBuf[i] << std::endl;
            else
                os << " " << typedBuf[i];
        }
    }
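To see what the row layout looks like in practice, the print logic can be lifted into a free function and pointed at a string stream. This is a sketch under assumptions: `printBuffer` and `formatInts` are hypothetical names, the loop index is a `size_t` instead of an `int`, and `'\n'` is written instead of `std::endl`; the branching is otherwise the same.

```cpp
#include <cassert>
#include <cstddef>
#include <ostream>
#include <sstream>
#include <string>
#include <vector>

// Free-function copy of the print logic so it can run without TensorRT.
template <typename T>
void printBuffer(std::ostream& os, const void* buf, std::size_t bufSize, std::size_t rowCount)
{
    assert(rowCount != 0);
    assert(bufSize % sizeof(T) == 0);
    const T* typedBuf = static_cast<const T*>(buf);
    const std::size_t numItems = bufSize / sizeof(T);
    for (std::size_t i = 0; i < numItems; i++)
    {
        if (rowCount == 1 && i != numItems - 1)
            os << typedBuf[i] << '\n'; // one element per line
        else if (rowCount == 1)
            os << typedBuf[i]; // last element: no trailing newline
        else if (i % rowCount == 0)
            os << typedBuf[i]; // first element of a row
        else if (i % rowCount == rowCount - 1)
            os << " " << typedBuf[i] << '\n'; // last element of a full row
        else
            os << " " << typedBuf[i];
    }
}

// Hypothetical convenience wrapper: format a vector of ints into a string.
inline std::string formatInts(const std::vector<int>& values, std::size_t rowCount)
{
    std::ostringstream os;
    printBuffer<int>(os, values.data(), values.size() * sizeof(int), rowCount);
    return os.str();
}
```

With six values and a rowCount of 3, `formatInts({1, 2, 3, 4, 5, 6}, 3)` yields two lines, `1 2 3` and `4 5 6`, each ending in a newline. A final row that is not full, or any output with a rowCount of 1, ends without a trailing newline.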

copyInputToDevice

Copies the contents of the input host buffers to the input device buffers synchronously.

    void copyInputToDevice()
    {
        memcpyBuffers(true, false, false);
    }

copyOutputToHost

Copies the contents of the output device buffers to the output host buffers synchronously.

    void copyOutputToHost()
    {
        memcpyBuffers(false, true, false);
    }

copyInputToDeviceAsync

Copies the contents of the input host buffers to the input device buffers asynchronously, on the given CUDA stream.

    void copyInputToDeviceAsync(const cudaStream_t& stream = 0)
    {
        memcpyBuffers(true, false, true, stream);
    }

copyOutputToHostAsync

Copies the contents of the output device buffers to the output host buffers asynchronously, on the given CUDA stream.

    void copyOutputToHostAsync(const cudaStream_t& stream = 0)
    {
        memcpyBuffers(false, true, true, stream);
    }

~BufferManager

~BufferManager() = default;

Private Member

getBuffer

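Looks up the binding index for tensorName and returns the host buffer (isHost is true) or the device buffer (isHost is false) at that index, or nullptr if the engine has no binding with that name.

    void* getBuffer(const bool isHost, const std::string& tensorName) const
    {
        int index = mEngine->getBindingIndex(tensorName.c_str());
        if (index == -1)
            return nullptr;
        return (isHost ? mManagedBuffers[index]->hostBuffer.data() : mManagedBuffers[index]->deviceBuffer.data());
    }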

memcpyBuffers

Shared implementation behind the four copy functions. copyInput selects whether input or output bindings are copied, deviceToHost selects the copy direction, and async selects cudaMemcpyAsync on the given stream instead of cudaMemcpy.

    void memcpyBuffers(const bool copyInput, const bool deviceToHost, const bool async, const cudaStream_t& stream = 0)
    {
        for (int i = 0; i < mEngine->getNbBindings(); i++)
        {
            void* dstPtr
                = deviceToHost ? mManagedBuffers[i]->hostBuffer.data() : mManagedBuffers[i]->deviceBuffer.data();
            const void* srcPtr
                = deviceToHost ? mManagedBuffers[i]->deviceBuffer.data() : mManagedBuffers[i]->hostBuffer.data();
            const size_t byteSize = mManagedBuffers[i]->hostBuffer.nbBytes();
            const cudaMemcpyKind memcpyType = deviceToHost ? cudaMemcpyDeviceToHost : cudaMemcpyHostToDevice;
            // Copy only the bindings whose kind (input or output) matches copyInput.
            if ((copyInput && mEngine->bindingIsInput(i)) || (!copyInput && !mEngine->bindingIsInput(i)))
            {
                if (async)
                    CHECK(cudaMemcpyAsync(dstPtr, srcPtr, byteSize, memcpyType, stream));
                else
                    CHECK(cudaMemcpy(dstPtr, srcPtr, byteSize, memcpyType));
            }
        }
    }
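The selection logic of memcpyBuffers (which bindings are copied, and in which direction) can be exercised on the host alone. The sketch below is a mock, not the CUDA code path: `MockBinding`, `mockMemcpyBuffers` and `makeBindings` are hypothetical names, the "device" side is ordinary memory, and `std::memcpy` stands in for `cudaMemcpy`.

```cpp
#include <cassert>
#include <cstddef>
#include <cstring>
#include <vector>

// Hypothetical host-only stand-in for one engine binding.
struct MockBinding
{
    bool isInput;
    std::vector<unsigned char> host;
    std::vector<unsigned char> device; // ordinary memory in this mock
};

// Mirrors the control flow of memcpyBuffers: choose the direction from
// deviceToHost, then copy only the bindings whose kind matches copyInput.
inline void mockMemcpyBuffers(std::vector<MockBinding>& bindings, bool copyInput, bool deviceToHost)
{
    for (MockBinding& b : bindings)
    {
        if (copyInput != b.isInput)
            continue; // same test as the original (a && b) || (!a && !b)
        assert(b.host.size() == b.device.size());
        unsigned char* dst = deviceToHost ? b.host.data() : b.device.data();
        const unsigned char* src = deviceToHost ? b.device.data() : b.host.data();
        if (!b.host.empty())
            std::memcpy(dst, src, b.host.size());
    }
}

// One input whose data is still on the host, one output whose result is
// still on the "device".
inline std::vector<MockBinding> makeBindings()
{
    return {
        {true, {1, 2, 3}, {0, 0, 0}},
        {false, {0, 0}, {7, 8}},
    };
}
```

Calling `mockMemcpyBuffers(bindings, true, false)` corresponds to copyInputToDevice and touches only the input binding; `mockMemcpyBuffers(bindings, false, true)` corresponds to copyOutputToHost and touches only the output binding.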

Member Data

Static Public Attributes

static const size_t kINVALID_SIZE_VALUE = ~size_t(0)

Private Attributes

std::shared_ptr<nvinfer1::ICudaEngine> samplesCommon::BufferManager::mEngine

int samplesCommon::BufferManager::mBatchSize

std::vector<std::unique_ptr<ManagedBuffer>> samplesCommon::BufferManager::mManagedBuffers

std::vector<void*> samplesCommon::BufferManager::mDeviceBindings

Code

    #ifndef TENSORRT_BUFFERS_H
    #define TENSORRT_BUFFERS_H

    #include "NvInfer.h"
    #include "common.h"
    #include "half.h"
    #include <cassert>
    #include <cuda_runtime_api.h>
    #include <iostream>
    #include <iterator>
    #include <memory>
    #include <new>
    #include <numeric>
    #include <string>
    #include <vector>

    namespace samplesCommon
    {

    // Owns one typed buffer; AllocFunc and FreeFunc select host or device memory.
    template <typename AllocFunc, typename FreeFunc>
    class GenericBuffer
    {
    public:
        // Construct an empty buffer.
        GenericBuffer(nvinfer1::DataType type = nvinfer1::DataType::kFLOAT)
            : mSize(0)
            , mCapacity(0)
            , mType(type)
            , mBuffer(nullptr)
        {
        }

        // Construct a buffer holding `size` elements of the given type.
        GenericBuffer(size_t size, nvinfer1::DataType type)
            : mSize(size)
            , mCapacity(size)
            , mType(type)
        {
            if (!allocFn(&mBuffer, this->nbBytes()))
            {
                throw std::bad_alloc();
            }
        }

        GenericBuffer(GenericBuffer&& buf)
            : mSize(buf.mSize)
            , mCapacity(buf.mCapacity)
            , mType(buf.mType)
            , mBuffer(buf.mBuffer)
        {
            // Reset the moved-from object so its destructor frees nothing.
            buf.mSize = 0;
            buf.mCapacity = 0;
            buf.mType = nvinfer1::DataType::kFLOAT;
            buf.mBuffer = nullptr;
        }

        GenericBuffer& operator=(GenericBuffer&& buf)
        {
            if (this != &buf)
            {
                freeFn(mBuffer);
                mSize = buf.mSize;
                mCapacity = buf.mCapacity;
                mType = buf.mType;
                mBuffer = buf.mBuffer;
                // Reset buf.
                buf.mSize = 0;
                buf.mCapacity = 0;
                buf.mBuffer = nullptr;
            }
            return *this;
        }

        // Returns a pointer to the underlying array.
        void* data()
        {
            return mBuffer;
        }

        const void* data() const
        {
            return mBuffer;
        }

        // Returns the size (in number of elements) of the buffer.
        size_t size() const
        {
            return mSize;
        }

        // Returns the size (in bytes) of the buffer.
        size_t nbBytes() const
        {
            return this->size() * samplesCommon::getElementSize(mType);
        }

        // Resizes the buffer; reallocates only when the capacity is too small.
        void resize(size_t newSize)
        {
            mSize = newSize;
            if (mCapacity < newSize)
            {
                freeFn(mBuffer);
                if (!allocFn(&mBuffer, this->nbBytes()))
                {
                    throw std::bad_alloc{};
                }
                mCapacity = newSize;
            }
        }

        // Overload of resize that accepts Dims.
        void resize(const nvinfer1::Dims& dims)
        {
            return this->resize(samplesCommon::volume(dims));
        }

        ~GenericBuffer()
        {
            freeFn(mBuffer);
        }

    private:
        size_t mSize{0}, mCapacity{0};
        nvinfer1::DataType mType;
        void* mBuffer;
        AllocFunc allocFn;
        FreeFunc freeFn;
    };

    class DeviceAllocator
    {
    public:
        bool operator()(void** ptr, size_t size) const
        {
            return cudaMalloc(ptr, size) == cudaSuccess;
        }
    };

    class DeviceFree
    {
    public:
        void operator()(void* ptr) const
        {
            cudaFree(ptr);
        }
    };

    class HostAllocator
    {
    public:
        bool operator()(void** ptr, size_t size) const
        {
            *ptr = malloc(size);
            return *ptr != nullptr;
        }
    };

    class HostFree
    {
    public:
        void operator()(void* ptr) const
        {
            free(ptr);
        }
    };

    using DeviceBuffer = GenericBuffer<DeviceAllocator, DeviceFree>;
    using HostBuffer = GenericBuffer<HostAllocator, HostFree>;

    // Groups a pair of corresponding device and host buffers.
    class ManagedBuffer
    {
    public:
        DeviceBuffer deviceBuffer;
        HostBuffer hostBuffer;
    };

    class BufferManager
    {
    public:
        static const size_t kINVALID_SIZE_VALUE = ~size_t(0);

        BufferManager(std::shared_ptr<nvinfer1::ICudaEngine> engine, const int batchSize = 0,
            const nvinfer1::IExecutionContext* context = nullptr)
            : mEngine(engine)
            , mBatchSize(batchSize)
        {
            assert(engine->hasImplicitBatchDimension() || mBatchSize == 0);
            for (int i = 0; i < mEngine->getNbBindings(); i++)
            {
                auto dims = context ? context->getBindingDimensions(i) : mEngine->getBindingDimensions(i);
                size_t vol = context || !mBatchSize ? 1 : static_cast<size_t>(mBatchSize);
                nvinfer1::DataType type = mEngine->getBindingDataType(i);
                int vecDim = mEngine->getBindingVectorizedDim(i);
                if (-1 != vecDim)
                {
                    int scalarsPerVec = mEngine->getBindingComponentsPerElement(i);
                    dims.d[vecDim] = divUp(dims.d[vecDim], scalarsPerVec);
                    vol *= scalarsPerVec;
                }
                vol *= samplesCommon::volume(dims);
                std::unique_ptr<ManagedBuffer> manBuf{new ManagedBuffer()};
                manBuf->deviceBuffer = DeviceBuffer(vol, type);
                manBuf->hostBuffer = HostBuffer(vol, type);
                mDeviceBindings.emplace_back(manBuf->deviceBuffer.data());
                mManagedBuffers.emplace_back(std::move(manBuf));
            }
        }

        std::vector<void*>& getDeviceBindings()
        {
            return mDeviceBindings;
        }

        const std::vector<void*>& getDeviceBindings() const
        {
            return mDeviceBindings;
        }

        void* getDeviceBuffer(const std::string& tensorName) const
        {
            return getBuffer(false, tensorName);
        }

        void* getHostBuffer(const std::string& tensorName) const
        {
            return getBuffer(true, tensorName);
        }

        size_t size(const std::string& tensorName) const
        {
            int index = mEngine->getBindingIndex(tensorName.c_str());
            if (index == -1)
                return kINVALID_SIZE_VALUE;
            return mManagedBuffers[index]->hostBuffer.nbBytes();
        }

        template <typename T>
        void print(std::ostream& os, void* buf, size_t bufSize, size_t rowCount)
        {
            assert(rowCount != 0);
            assert(bufSize % sizeof(T) == 0);
            T* typedBuf = static_cast<T*>(buf);
            size_t numItems = bufSize / sizeof(T);
            for (int i = 0; i < static_cast<int>(numItems); i++)
            {
                if (rowCount == 1 && i != static_cast<int>(numItems) - 1)
                    os << typedBuf[i] << std::endl;
                else if (rowCount == 1)
                    os << typedBuf[i];
                else if (i % rowCount == 0)
                    os << typedBuf[i];
                else if (i % rowCount == rowCount - 1)
                    os << " " << typedBuf[i] << std::endl;
                else
                    os << " " << typedBuf[i];
            }
        }

        void copyInputToDevice()
        {
            memcpyBuffers(true, false, false);
        }

        void copyOutputToHost()
        {
            memcpyBuffers(false, true, false);
        }

        void copyInputToDeviceAsync(const cudaStream_t& stream = 0)
        {
            memcpyBuffers(true, false, true, stream);
        }

        void copyOutputToHostAsync(const cudaStream_t& stream = 0)
        {
            memcpyBuffers(false, true, true, stream);
        }

        ~BufferManager() = default;

    private:
        void* getBuffer(const bool isHost, const std::string& tensorName) const
        {
            int index = mEngine->getBindingIndex(tensorName.c_str());
            if (index == -1)
                return nullptr;
            return (isHost ? mManagedBuffers[index]->hostBuffer.data() : mManagedBuffers[index]->deviceBuffer.data());
        }

        void memcpyBuffers(const bool copyInput, const bool deviceToHost, const bool async, const cudaStream_t& stream = 0)
        {
            for (int i = 0; i < mEngine->getNbBindings(); i++)
            {
                void* dstPtr
                    = deviceToHost ? mManagedBuffers[i]->hostBuffer.data() : mManagedBuffers[i]->deviceBuffer.data();
                const void* srcPtr
                    = deviceToHost ? mManagedBuffers[i]->deviceBuffer.data() : mManagedBuffers[i]->hostBuffer.data();
                const size_t byteSize = mManagedBuffers[i]->hostBuffer.nbBytes();
                const cudaMemcpyKind memcpyType = deviceToHost ? cudaMemcpyDeviceToHost : cudaMemcpyHostToDevice;
                if ((copyInput && mEngine->bindingIsInput(i)) || (!copyInput && !mEngine->bindingIsInput(i)))
                {
                    if (async)
                        CHECK(cudaMemcpyAsync(dstPtr, srcPtr, byteSize, memcpyType, stream));
                    else
                        CHECK(cudaMemcpy(dstPtr, srcPtr, byteSize, memcpyType));
                }
            }
        }

        std::shared_ptr<nvinfer1::ICudaEngine> mEngine;              // The pointer to the engine
        int mBatchSize;                                              // The batch size for legacy networks, 0 otherwise
        std::vector<std::unique_ptr<ManagedBuffer>> mManagedBuffers; // One host/device buffer pair per binding
        std::vector<void*> mDeviceBindings;                          // Device pointers, in binding order
    };

    } // namespace samplesCommon

    #endif // TENSORRT_BUFFERS_H
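The ownership behaviour of GenericBuffer (allocate in the constructor, free in the destructor, transfer on move) is what makes BufferManager an RAII class. The sketch below illustrates it with a reduced, host-only version; it is an illustration under assumptions, not the sample code. `MiniBuffer`, `CountingAlloc`, `CountingFree` and `liveAllocations` are hypothetical names, sizes are in bytes rather than typed elements, and a counter tracks how many allocations are currently alive.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdlib>
#include <new>
#include <utility>

// Hypothetical counter of live allocations, only for this demonstration.
inline int& liveAllocations()
{
    static int count = 0;
    return count;
}

// Allocator functor with the same call shape as HostAllocator.
struct CountingAlloc
{
    bool operator()(void** ptr, std::size_t size) const
    {
        *ptr = std::malloc(size);
        if (*ptr != nullptr)
            ++liveAllocations();
        return *ptr != nullptr;
    }
};

// Free functor with the same call shape as HostFree.
struct CountingFree
{
    void operator()(void* ptr) const
    {
        if (ptr != nullptr)
            --liveAllocations();
        std::free(ptr);
    }
};

// Reduced GenericBuffer: byte-sized, move-only, frees in the destructor.
template <typename AllocFunc, typename FreeFunc>
class MiniBuffer
{
public:
    MiniBuffer() = default;

    explicit MiniBuffer(std::size_t nbBytes)
        : mSize(nbBytes)
    {
        if (!allocFn(&mBuffer, nbBytes))
            throw std::bad_alloc();
    }

    MiniBuffer(MiniBuffer&& other) noexcept
        : mSize(other.mSize)
        , mBuffer(other.mBuffer)
    {
        other.mSize = 0;
        other.mBuffer = nullptr; // the moved-from object no longer owns memory
    }

    MiniBuffer& operator=(MiniBuffer&& other) noexcept
    {
        if (this != &other)
        {
            freeFn(mBuffer); // release what this object owned before
            mSize = other.mSize;
            mBuffer = other.mBuffer;
            other.mSize = 0;
            other.mBuffer = nullptr;
        }
        return *this;
    }

    ~MiniBuffer()
    {
        freeFn(mBuffer);
    }

    void* data() { return mBuffer; }
    std::size_t size() const { return mSize; }

private:
    std::size_t mSize{0};
    void* mBuffer{nullptr};
    AllocFunc allocFn;
    FreeFunc freeFn;
};

using CountingBuffer = MiniBuffer<CountingAlloc, CountingFree>;
```

Creating a `CountingBuffer a(64)` raises the live count to 1, moving it into another buffer leaves the count unchanged, and once every buffer has gone out of scope the count returns to 0 without any explicit free call. This is the same pattern the constructor relies on when it move-assigns `DeviceBuffer(vol, type)` and `HostBuffer(vol, type)` into a ManagedBuffer.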

References:

- https://www.ccoderun.ca/programming/doxygen/tensorrt/classsamplesCommon_1_1BufferManager.html#aa64f0092469babe813db491696098eb0

- https://github.com/NVIDIA/TensorRT
