基本思想:幸亏会一点点ffmpeg的视频编解码进行了视频播放,本篇博客教你如何直接解码h265进行视频播放,还得立下flag 要学如何硬解码

cmakelists.txt

cmake_minimum_required(VERSION 3.16)

project(depthai)

set(CMAKE_CXX_STANDARD 11)

find_package(OpenCV REQUIRED)

#message(STATUS ${OpenCV_INCLUDE_DIRS})

#添加头文件

include_directories(${OpenCV_INCLUDE_DIRS})

include_directories(${CMAKE_SOURCE_DIR}/include)

include_directories(${CMAKE_SOURCE_DIR}/include/utility)

#链接Opencv库

find_package(depthai CONFIG REQUIRED)

add_executable(depthai main.cpp include/utility/utility.cpp)

target_link_libraries(depthai ${OpenCV_LIBS} depthai::opencv -lavformat -lavcodec -lswscale -lavutil -lz)

main.cpp

#include <stdio.h>

#include <string>

#include <iostream>

#include <opencv2/core/core.hpp>

#include <opencv2/highgui/highgui.hpp>

#include <opencv2/imgproc/imgproc.hpp>

extern "C"

{

#include <libavformat/avformat.h>

#include <libavcodec/avcodec.h>

#include <libavutil/imgutils.h>

#include <libswscale/swscale.h>

}

#include "utility.hpp"

#include "depthai/depthai.hpp"

using namespace std::chrono;

int main(int argc, char **argv) {

dai::Pipeline pipeline;

//定义

auto cam = pipeline.create<dai::node::ColorCamera>();

cam->setBoardSocket(dai::CameraBoardSocket::RGB);

cam->setResolution(dai::ColorCameraProperties::SensorResolution::THE_1080_P);

cam->setVideoSize(1920, 1080);

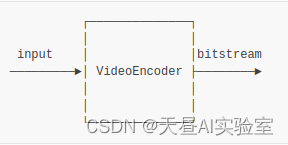

auto Encoder = pipeline.create<dai::node::VideoEncoder>();

Encoder->setDefaultProfilePreset(cam->getVideoSize(), cam->getFps(),

dai::VideoEncoderProperties::Profile::H265_MAIN);

cam->video.link(Encoder->input);

cam->setFps(60);

//定义输出

auto xlinkoutpreviewOut = pipeline.create<dai::node::XLinkOut>();

xlinkoutpreviewOut->setStreamName("out");

Encoder->bitstream.link(xlinkoutpreviewOut->input);

//结构推送相机

dai::Device device(pipeline);

//取帧显示

auto outqueue = device.getOutputQueue("out", cam->getFps(), false);//maxsize 代表缓冲数据

// auto videoFile = std::ofstream("video.h265", std::ios::binary);

int width = 1920;

int height = 1080;

AVCodec *pCodec = avcodec_find_decoder(AV_CODEC_ID_H265);

AVCodecContext *pCodecCtx = avcodec_alloc_context3(pCodec);

int ret = avcodec_open2(pCodecCtx, pCodec, NULL);

if (ret < 0) {//打开解码器

printf("Could not open codec.\n");

return -1;

}

AVFrame *picture = av_frame_alloc();

picture->width = width;

picture->height = height;

picture->format = AV_PIX_FMT_YUV420P;

ret = av_frame_get_buffer(picture, 1);

if (ret < 0) {

printf("av_frame_get_buffer error\n");

return -1;

}

AVFrame *pFrame = av_frame_alloc();

pFrame->width = width;

pFrame->height = height;

pFrame->format = AV_PIX_FMT_YUV420P;

ret = av_frame_get_buffer(pFrame, 1);

if (ret < 0) {

printf("av_frame_get_buffer error\n");

return -1;

}

AVFrame *pFrameRGB = av_frame_alloc();

pFrameRGB->width = width;

pFrameRGB->height = height;

pFrameRGB->format = AV_PIX_FMT_RGB24;

ret = av_frame_get_buffer(pFrameRGB, 1);

if (ret < 0) {

printf("av_frame_get_buffer error\n");

return -1;

}

int picture_size = av_image_get_buffer_size(AV_PIX_FMT_YUV420P, width, height,

1);//计算这个格式的图片,需要多少字节来存储

uint8_t *out_buff = (uint8_t *) av_malloc(picture_size * sizeof(uint8_t));

av_image_fill_arrays(picture->data, picture->linesize, out_buff, AV_PIX_FMT_YUV420P, width,

height, 1);

//这个函数 是缓存转换格式,可以不用 以为上面已经设置了AV_PIX_FMT_YUV420P

SwsContext *img_convert_ctx = sws_getContext(width, height, AV_PIX_FMT_YUV420P,

width, height, AV_PIX_FMT_RGB24, 4,

NULL, NULL, NULL);

AVPacket *packet = av_packet_alloc();

auto startTime = steady_clock::now();

int counter = 0;

float fps = 0;

while (true) {

auto h265Packet = outqueue->get<dai::ImgFrame>();

//videoFile.write((char *) (h265Packet->getData().data()), h265Packet->getData().size());

packet->data = (uint8_t *) h265Packet->getData().data(); //这里填入一个指向完整H264数据帧的指针

packet->size = h265Packet->getData().size(); //这个填入H265 数据帧的大小

packet->stream_index = 0;

ret = avcodec_send_packet(pCodecCtx, packet);

if (ret < 0) {

printf("avcodec_send_packet \n");

continue;

}

av_packet_unref(packet);

int got_picture = avcodec_receive_frame(pCodecCtx, pFrame);

av_frame_is_writable(pFrame);

if (got_picture < 0) {

printf("avcodec_receive_frame \n");

continue;

}

sws_scale(img_convert_ctx, pFrame->data, pFrame->linesize, 0,

height,

pFrameRGB->data, pFrameRGB->linesize);

cv::Mat mRGB(cv::Size(width, height), CV_8UC3);

mRGB.data = (unsigned char *) pFrameRGB->data[0];

cv::Mat mBGR;

cv::cvtColor(mRGB, mBGR, cv::COLOR_RGB2BGR);

counter++;

auto currentTime = steady_clock::now();

auto elapsed = duration_cast<duration<float>>(currentTime - startTime);

if (elapsed > seconds(1)) {

fps = counter / elapsed.count();

counter = 0;

startTime = currentTime;

}

std::stringstream fpsStr;

fpsStr << "NN fps: " << std::fixed << std::setprecision(2) << fps;

printf("fps %f\n",fps);

cv::putText(mBGR, fpsStr.str(), cv::Point(2, mBGR.rows - 4), cv::FONT_HERSHEY_TRIPLEX, 0.4,

cv::Scalar(0, 255, 0));

cv::imshow("demo", mBGR);

cv::waitKey(1);

}

return 0;

}

测试结果

补充一个测据的代码,如果不加测据可以在速度60fps,如果画面失真可以在降低帧率

#include <stdio.h>

#include <string>

#include <iostream>

#include <opencv2/core/core.hpp>

#include <opencv2/highgui/highgui.hpp>

#include <opencv2/imgproc/imgproc.hpp>

#include <opencv2/imgcodecs.hpp>

extern "C"

{

#include <libavformat/avformat.h>

#include <libavcodec/avcodec.h>

#include <libavutil/imgutils.h>

#include <libswscale/swscale.h>

}

#include "utility.hpp"

#include "depthai/depthai.hpp"

using namespace std::chrono;

int main(int argc, char **argv) {

dai::Pipeline pipeline;

//定义

auto cam = pipeline.create<dai::node::ColorCamera>();

cam->setBoardSocket(dai::CameraBoardSocket::RGB);

cam->setResolution(dai::ColorCameraProperties::SensorResolution::THE_1080_P);

cam->setVideoSize(1920, 1080);

cam->setFps(30);

auto Encoder = pipeline.create<dai::node::VideoEncoder>();

Encoder->setDefaultProfilePreset(cam->getVideoSize(), cam->getFps(),

dai::VideoEncoderProperties::Profile::H265_MAIN);

cam->video.link(Encoder->input);

auto monoLeft = pipeline.create<dai::node::MonoCamera>();

auto monoRight = pipeline.create<dai::node::MonoCamera>();

auto stereo = pipeline.create<dai::node::StereoDepth>();

auto spatialLocationCalculator = pipeline.create<dai::node::SpatialLocationCalculator>();

auto xoutDepth = pipeline.create<dai::node::XLinkOut>();

auto xoutSpatialData = pipeline.create<dai::node::XLinkOut>();

auto xinSpatialCalcConfig = pipeline.create<dai::node::XLinkIn>();

auto xoutRgb = pipeline.create<dai::node::XLinkOut>();

xoutDepth->setStreamName("depth");

xoutSpatialData->setStreamName("spatialData");

xinSpatialCalcConfig->setStreamName("spatialCalcConfig");

xoutRgb->setStreamName("rgb");

monoLeft->setResolution(dai::MonoCameraProperties::SensorResolution::THE_400_P);

monoLeft->setBoardSocket(dai::CameraBoardSocket::LEFT);

monoRight->setResolution(dai::MonoCameraProperties::SensorResolution::THE_400_P);

monoRight->setBoardSocket(dai::CameraBoardSocket::RIGHT);

stereo->setDefaultProfilePreset(dai::node::StereoDepth::PresetMode::HIGH_ACCURACY);

stereo->setLeftRightCheck(true);

stereo->setExtendedDisparity(true);

spatialLocationCalculator->inputConfig.setWaitForMessage(false);

dai::SpatialLocationCalculatorConfigData config;

config.depthThresholds.lowerThreshold = 200;

config.depthThresholds.upperThreshold = 10000;

config.roi = dai::Rect(dai::Point2f( 0.1, 0.45), dai::Point2f(( 1) * 0.1, 0.55));

spatialLocationCalculator->initialConfig.addROI(config);

// Linking

monoLeft->out.link(stereo->left);

monoRight->out.link(stereo->right);

spatialLocationCalculator->passthroughDepth.link(xoutDepth->input);

stereo->depth.link(spatialLocationCalculator->inputDepth);

spatialLocationCalculator->out.link(xoutSpatialData->input);

xinSpatialCalcConfig->out.link(spatialLocationCalculator->inputConfig);

//定义输出

auto xlinkoutpreviewOut = pipeline.create<dai::node::XLinkOut>();

xlinkoutpreviewOut->setStreamName("out");

Encoder->bitstream.link(xlinkoutpreviewOut->input);

//结构推送相机

dai::Device device(pipeline);

device.setIrLaserDotProjectorBrightness(1000);

//取帧显示

auto outqueue = device.getOutputQueue("out", cam->getFps(), false);//maxsize 代表缓冲数据

auto depthQueue = device.getOutputQueue("depth", 4, false);

auto spatialCalcQueue = device.getOutputQueue("spatialData", 4, false);

//auto videoFile = std::ofstream("video.h265", std::ios::binary);

int width = 1920;

int height = 1080;

AVCodec *pCodec = avcodec_find_decoder(AV_CODEC_ID_H265);

AVCodecContext *pCodecCtx = avcodec_alloc_context3(pCodec);

int ret = avcodec_open2(pCodecCtx, pCodec, NULL);

if (ret < 0) {//打开解码器

printf("Could not open codec.\n");

return -1;

}

AVFrame *picture = av_frame_alloc();

picture->width = width;

picture->height = height;

picture->format = AV_PIX_FMT_YUV420P;

ret = av_frame_get_buffer(picture, 1);

if (ret < 0) {

printf("av_frame_get_buffer error\n");

return -1;

}

AVFrame *pFrame = av_frame_alloc();

pFrame->width = width;

pFrame->height = height;

pFrame->format = AV_PIX_FMT_YUV420P;

ret = av_frame_get_buffer(pFrame, 1);

if (ret < 0) {

printf("av_frame_get_buffer error\n");

return -1;

}

AVFrame *pFrameRGB = av_frame_alloc();

pFrameRGB->width = width;

pFrameRGB->height = height;

pFrameRGB->format = AV_PIX_FMT_RGB24;

ret = av_frame_get_buffer(pFrameRGB, 1);

if (ret < 0) {

printf("av_frame_get_buffer error\n");

return -1;

}

int picture_size = av_image_get_buffer_size(AV_PIX_FMT_YUV420P, width, height,

1);//计算这个格式的图片,需要多少字节来存储

uint8_t *out_buff = (uint8_t *) av_malloc(picture_size * sizeof(uint8_t));

av_image_fill_arrays(picture->data, picture->linesize, out_buff, AV_PIX_FMT_YUV420P, width,

height, 1);

//这个函数 是缓存转换格式,可以不用 以为上面已经设置了AV_PIX_FMT_YUV420P

SwsContext *img_convert_ctx = sws_getContext(width, height, AV_PIX_FMT_YUV420P,

width, height, AV_PIX_FMT_RGB24, 4,

NULL, NULL, NULL);

AVPacket *packet = av_packet_alloc();

auto startTime = steady_clock::now();

int counter = 0;

float fps = 0;

auto spatialCalcConfigInQueue = device.getInputQueue("spatialCalcConfig");

while (true) {

counter++;

auto currentTime = steady_clock::now();

auto elapsed = duration_cast<duration<float>>(currentTime - startTime);

if (elapsed > seconds(1)) {

fps = counter / elapsed.count();

counter = 0;

startTime = currentTime;

}

auto h265Packet = outqueue->get<dai::ImgFrame>();

//videoFile.write((char *) (h265Packet->getData().data()), h265Packet->getData().size());

packet->data = (uint8_t *) h265Packet->getData().data(); //这里填入一个指向完整H264数据帧的指针

packet->size = h265Packet->getData().size(); //这个填入H265 数据帧的大小

packet->stream_index = 0;

ret = avcodec_send_packet(pCodecCtx, packet);

if (ret < 0) {

printf("avcodec_send_packet \n");

continue;

}

av_packet_unref(packet);

int got_picture = avcodec_receive_frame(pCodecCtx, pFrame);

av_frame_is_writable(pFrame);

if (got_picture < 0) {

printf("avcodec_receive_frame \n");

continue;

}

sws_scale(img_convert_ctx, pFrame->data, pFrame->linesize, 0,

height,

pFrameRGB->data, pFrameRGB->linesize);

cv::Mat mRGB(cv::Size(width, height), CV_8UC3);

mRGB.data = (unsigned char *) pFrameRGB->data[0];

cv::Mat mBGR;

cv::cvtColor(mRGB, mBGR, cv::COLOR_RGB2BGR);

std::stringstream fpsStr;

fpsStr << "NN fps: " << std::fixed << std::setprecision(2) << fps;

printf("fps %f\n",fps);

cv::putText(mBGR, fpsStr.str(), cv::Point(2, mBGR.rows - 4), cv::FONT_HERSHEY_TRIPLEX, 0.4,

cv::Scalar(0, 255, 0));

config.roi = dai::Rect(dai::Point2f(3 * 0.1, 0.45), dai::Point2f((3 + 1) * 0.1, 0.55));

dai::SpatialLocationCalculatorConfig cfg;

cfg.addROI(config);

spatialCalcConfigInQueue->send(cfg);

// auto inDepth = depthQueue->get<dai::ImgFrame>();

//cv::Mat depthFrame = inDepth->getFrame(); // depthFrame values are in millimeters

auto spatialData = spatialCalcQueue->get<dai::SpatialLocationCalculatorData>()->getSpatialLocations();

for(auto depthData : spatialData) {

auto roi = depthData.config.roi;

roi = roi.denormalize(mBGR.cols, mBGR.rows);

auto xmin = static_cast<int>(roi.topLeft().x);

auto ymin = static_cast<int>(roi.topLeft().y);

auto xmax = static_cast<int>(roi.bottomRight().x);

auto ymax = static_cast<int>(roi.bottomRight().y);

auto coords = depthData.spatialCoordinates;

auto distance = std::sqrt(coords.x * coords.x + coords.y * coords.y + coords.z * coords.z);

auto color = cv::Scalar(0, 200, 40);

auto fontType = cv::FONT_HERSHEY_TRIPLEX;

cv::rectangle(mBGR, cv::Rect(cv::Point(xmin, ymin), cv::Point(xmax, ymax)), color);

std::stringstream depthDistance;

depthDistance.precision(2);

depthDistance << std::fixed << static_cast<float>(distance / 1000.0f) << "m";

cv::putText(mBGR, depthDistance.str(), cv::Point(xmin + 10, ymin + 20), fontType, 0.5, color);

}

cv::imshow("demo", mBGR);

cv::waitKey(1);

}

return 0;

}

如果你想推流oak得话 c++提供代码

https://github.com/sxj731533730/RTSPServerOAK

本文内容由网友自发贡献,版权归原作者所有,本站不承担相应法律责任。如您发现有涉嫌抄袭侵权的内容,请联系:hwhale#tublm.com(使用前将#替换为@)