I was recently handed an ad-hoc task: write a panoramic (360°) video player in C++.

After some searching online, and building on work others had already done, I have it mostly working.

The test video source is an RTSP stream pulled with FFmpeg.

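The player will eventually be embedded in another video player module, so I don't need to worry much about decoding; only the rendering has to be implemented.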
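With decoding out of scope, the renderer's main per-pixel job is turning the decoded YUV planes back into RGB, which the post's fragment shaders (for example `fragmentyuv.glsl`, not shown) do on the GPU. As a CPU-side sketch of that math, assuming BT.601 limited-range coefficients (a common choice for camera streams; the real shader may use different coefficients, and the `YUVJ` formats are full range):

```cpp
#include <algorithm>
#include <cassert>
#include <cstdint>

struct Rgb { std::uint8_t r, g, b; };

// Clamp a float to [0, 255] and round to the nearest integer.
static std::uint8_t ToByte(float v)
{
    return static_cast<std::uint8_t>(std::min(std::max(v, 0.0f), 255.0f) + 0.5f);
}

// BT.601 limited-range ("TV range") YUV -> RGB for one pixel (assumed coefficients).
// Y is nominally in [16, 235]; U and V in [16, 240] with 128 meaning "no colour".
Rgb Bt601LimitedToRgb(std::uint8_t y, std::uint8_t u, std::uint8_t v)
{
    const float c = 1.164f * (static_cast<float>(y) - 16.0f);
    const float d = static_cast<float>(u) - 128.0f;
    const float e = static_cast<float>(v) - 128.0f;
    return Rgb{
        ToByte(c + 1.596f * e),
        ToByte(c - 0.392f * d - 0.813f * e),
        ToByte(c + 2.017f * d),
    };
}
```

On the GPU the same formula runs per fragment, with Y, U and V sampled from the three `GL_LUMINANCE` plane textures.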

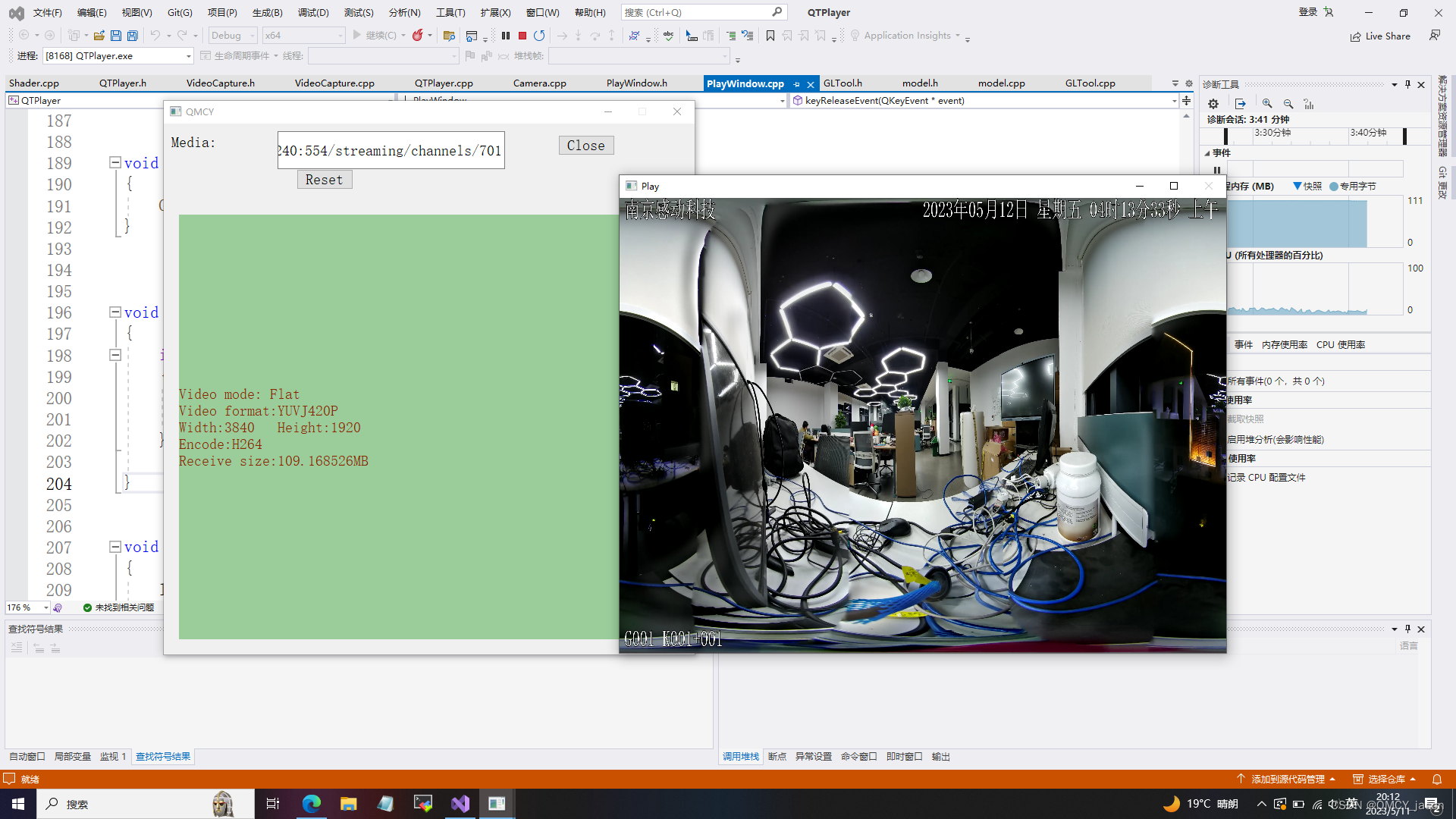

The result is shown above. The window on the left is used to enter the video source and to display information about the video.

Video demo: *Panoramic video player written with Qt + OpenGL + FFmpeg*

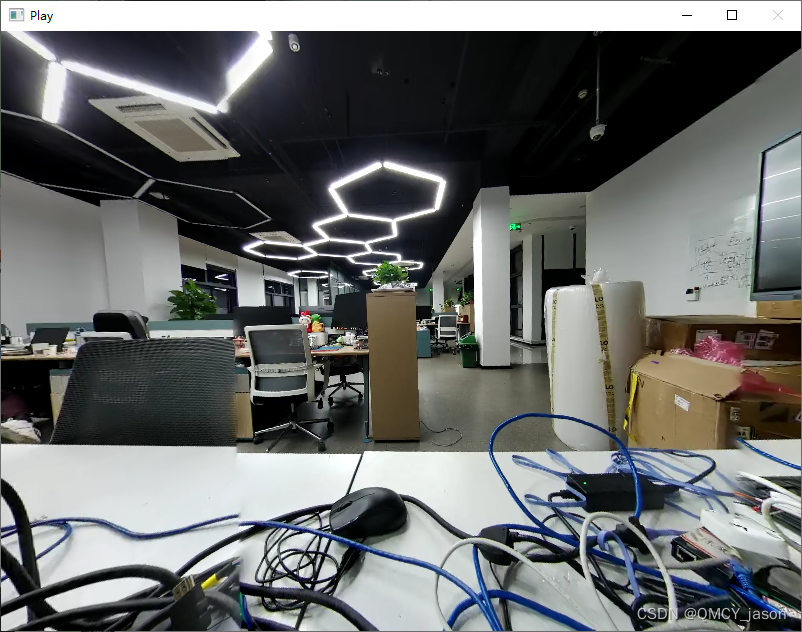

After you click Play, the video starts in flat (non-panoramic) mode; press the Space key to switch to panoramic mode.

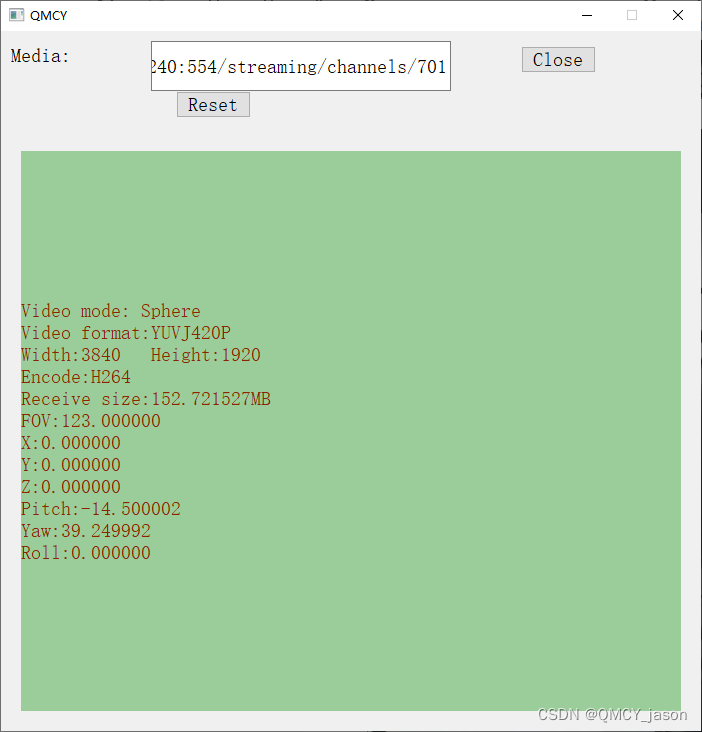
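In panoramic mode the frame is drawn onto `res/model/sphere.obj` instead of the flat quad and viewed from inside the sphere. Assuming the source is an equirectangular panorama and the sphere's UVs follow the usual longitude/latitude layout (the model file isn't shown), each viewing direction corresponds to a texture coordinate as in this sketch. The function name and the axis convention (-Z forward, +Y up) are assumptions:

```cpp
#include <cassert>
#include <cmath>

struct Vec3 { double x, y, z; };
struct Uv { double u, v; };

// Map a view direction to equirectangular texture coordinates.
// Assumed convention: +Y is up, -Z is the image centre (u = 0.5),
// u grows towards +X (longitude), v = 0 at the top (north pole).
Uv DirectionToEquirect(const Vec3& d)
{
    const double kPi = 3.14159265358979323846;
    const double len = std::sqrt(d.x * d.x + d.y * d.y + d.z * d.z);
    assert(len > 0.0);
    const double lon = std::atan2(d.x, -d.z);  // [-pi, pi], 0 = straight ahead
    const double lat = std::asin(d.y / len);   // [-pi/2, pi/2], +pi/2 = up
    return Uv{ 0.5 + lon / (2.0 * kPi), 0.5 - lat / kPi };
}
```

Looking straight ahead samples the centre of the frame (0.5, 0.5), and a 90-degree turn to the right moves a quarter of the image width (u = 0.75).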

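In panoramic mode, as you drag with the mouse, the window on the left shows the corresponding camera position and orientation (FOV, X/Y/Z, pitch/yaw/roll).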
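The drag handling in `PlayWindow::mouseMoveEvent` turns the pixel delta into rotation through `m_camera.Rotate(dy * rotate_speed, dx * rotate_speed, 0)`; `Camera3D` itself isn't shown. A hypothetical stand-alone version of that update, assuming the angles are in degrees, which also clamps pitch so the view can't flip over the poles and wraps yaw into [0, 360):

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

// Hypothetical look-around state for a camera inside the panorama sphere.
struct LookAngles
{
    double pitchDeg = 0.0;  // up/down, rotation about the X axis
    double yawDeg = 0.0;    // left/right, rotation about the Y axis
};

// Return the angles after a mouse drag of (dx, dy) pixels. degPerPixel plays
// the role of rotate_speed; pitch is clamped to [-89, 89] so the view never
// flips over a pole, and yaw is wrapped into [0, 360).
LookAngles ApplyDrag(LookAngles a, int dx, int dy, double degPerPixel)
{
    assert(degPerPixel > 0.0);
    a.pitchDeg = std::min(89.0, std::max(-89.0, a.pitchDeg + dy * degPerPixel));
    a.yawDeg = std::fmod(a.yawDeg + dx * degPerPixel, 360.0);
    if (a.yawDeg < 0.0)
    {
        a.yawDeg += 360.0;
    }
    return a;
}
```

With `degPerPixel = 0.05` (the value of `rotate_speed`), a 100-pixel horizontal drag turns the view by 5 degrees.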

Compared side by side, the result still differs from Insta360's player but matches Detu's player.

Because it uses FFmpeg, it can play live streams such as RTSP and RTMP.
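One consequence of accepting both files and live URLs: `Render` rewinds with `video->Seek(0)` when `Retrieve` reports end of stream, which only makes sense for files. A hypothetical helper that classifies a source by its URL scheme could gate that rewind; the scheme list is an assumption, not taken from the post:

```cpp
#include <algorithm>
#include <cassert>
#include <cctype>
#include <iterator>
#include <string>

// Hypothetical helper: true if the URL scheme denotes a live network stream,
// where "rewind to 0 at end of stream" is meaningless. Plain paths and
// file:// URLs count as seekable files; http(s) is also treated as a file
// here, although it can carry a live stream (e.g. HLS).
bool IsLiveStreamUrl(const std::string& url)
{
    const std::string::size_type pos = url.find("://");
    if (pos == std::string::npos)
    {
        return false;  // no scheme: a local path such as D:/videos/pano.mp4
    }
    std::string scheme = url.substr(0, pos);
    std::transform(scheme.begin(), scheme.end(), scheme.begin(),
                   [](unsigned char c) { return static_cast<char>(std::tolower(c)); });
    static const char* const kLiveSchemes[] = { "rtsp", "rtsps", "rtmp", "rtmps", "rtp", "udp", "srt" };
    return std::find(std::begin(kLiveSchemes), std::end(kLiveSchemes), scheme)
           != std::end(kLiveSchemes);
}
```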

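Decoding is done in software by FFmpeg, and no playback synchronization of any kind is implemented, but live video plays without problems.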
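Without synchronization, each frame is shown as soon as a redraw pulls it, so playback speed follows the decoding and arrival rate; for a live stream that roughly matches the sender's rate. If pacing were added, a minimal sketch would convert each frame's pts with the stream time base (FFmpeg expresses it as an `AVRational` num/den) and wait until the frame is due. Both function names are hypothetical:

```cpp
#include <cassert>
#include <cstdint>

// Convert a presentation timestamp in stream time-base units
// (tbNum/tbDen seconds per tick, like FFmpeg's AVRational) to milliseconds.
std::int64_t PtsToMs(std::int64_t pts, int tbNum, int tbDen)
{
    assert(tbDen > 0);
    return pts * 1000 * tbNum / tbDen;
}

// How many ms to wait before presenting a frame, given the pts of the first
// frame, the wall-clock time (ms) at which it was shown, and the current
// wall-clock time. Returns 0 if the frame is already late.
std::int64_t DelayBeforePresentMs(std::int64_t framePtsMs, std::int64_t firstPtsMs,
                                  std::int64_t startWallMs, std::int64_t nowWallMs)
{
    const std::int64_t due = startWallMs + (framePtsMs - firstPtsMs);
    return due > nowWallMs ? due - nowWallMs : 0;
}
```

With the 90 kHz clock RTSP video commonly uses, a pts step of 3600 ticks is 40 ms, i.e. 25 fps.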

The RTSP stream we tested is 4K, and software decoding keeps up with it. Noting it down here for reference.
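For scale, a rough worked estimate of how much data a 4K stream pushes through the texture uploads, assuming the decoder outputs YUV420P (typical for H.264/H.265 cameras) and 25 fps, since the stream's frame rate isn't stated:

```cpp
#include <cassert>
#include <cstdint>

// Bytes in one YUV420P frame: a full-resolution Y plane plus two chroma
// planes at half width and half height (rounded up for odd sizes).
std::uint64_t Yuv420pFrameBytes(std::uint32_t w, std::uint32_t h)
{
    assert(w > 0 && h > 0);
    const std::uint64_t cw = (std::uint64_t(w) + 1) / 2;
    const std::uint64_t ch = (std::uint64_t(h) + 1) / 2;
    return std::uint64_t(w) * h + 2 * cw * ch;
}

// Bytes per second that must be copied into textures at a given frame rate.
std::uint64_t UploadBytesPerSecond(std::uint32_t w, std::uint32_t h, std::uint32_t fps)
{
    return Yuv420pFrameBytes(w, h) * fps;
}
```

For 3840×2160 this gives 12,441,600 bytes per frame, or 311,040,000 bytes (about 311 MB) per second at 25 fps, all of which goes through `UpdateTexture2D` on the GUI thread.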

Header of the main (green) window, `QTPlayer.h`:

```cpp
#pragma once

#include <QtWidgets/QWidget>
#include "ui_QTPlayer.h"

// windows.h and glew.h must come before any other OpenGL header.
#include <windows.h>
#include "glew.h"

#include <QPushButton>
#include <QLineEdit>
#include <QLabel>
#include <QMessageBox>

#include "PlayWindow.h"

class QTPlayer : public QWidget
{
    Q_OBJECT

public:
    QTPlayer(QWidget* parent = nullptr);
    ~QTPlayer();

protected:
    void closeEvent(QCloseEvent* event) override;

private slots:
    void Refresh();  // polls PlayWindow for media info and shows it in label_info

private:
    Ui::QTPlayerClass ui;
    QLabel* label = nullptr;
    QLineEdit* input = nullptr;
    QPushButton* open = nullptr;
    QPushButton* reset = nullptr;
    QLabel* label_info = nullptr;
    PlayWindow* play = nullptr;  // parentless top-level window, deleted in ~QTPlayer
};
```

Main window implementation, `QTPlayer.cpp`:

```cpp
#include "QTPlayer.h"

#include <QCloseEvent>
#include <QTimer>
#include <string>

QTPlayer::QTPlayer(QWidget* parent)
    : QWidget(parent, Qt::MSWindowsOwnDC)
{
    ui.setupUi(this);

    // Default test source: an RTSP camera stream
    const std::string filename = "rtsp://admin:thinker13@192.168.0.240:554/streaming/channels/701";

    // Separate top-level window that does the OpenGL rendering
    play = new PlayWindow();

    QFont ft;
    ft.setPointSize(14);

    label = new QLabel("Media:", this);
    label->setFont(ft);
    label->move(10, 15);

    // Green panel showing media and camera information
    label_info = new QLabel(this);
    label_info->move(20, 120);
    label_info->setFont(ft);
    label_info->resize(660, 560);
    label_info->setStyleSheet("QLabel{background:#9BCD9B;color:#8B2500;}");

    input = new QLineEdit(this);
    input->move(150, 10);
    input->resize(300, 50);
    input->setText(QString::fromStdString(filename));
    input->setFont(ft);

    // Play/Close button
    open = new QPushButton("Play", this);
    open->setFont(ft);
    open->move(520, 15);

    // Reset button: restores the default camera orientation and FOV
    reset = new QPushButton("Reset", this);
    reset->setFont(ft);
    reset->move(175, 60);
    connect(reset, &QPushButton::clicked, play, [this]() {
        play->ResetCamera();
    });

    // Main window settings
    resize(700, 700);
    setWindowFlags(Qt::MSWindowsFixedSizeDialogHint);
    setWindowTitle("QMCY");

    // Refresh the info panel every 200 ms. The timer is parented to this
    // window (not to `parent`, which may be null) so it is destroyed with it.
    QTimer* timer_info = new QTimer(this);
    connect(timer_info, &QTimer::timeout, this, &QTPlayer::Refresh);
    timer_info->start(200);

    // "Play" opens the stream and turns the button into "Close";
    // "Close" releases the GL resources and closes the play window.
    connect(open, &QPushButton::clicked, play, [this]() {
        if (open->text() == QString("Close"))
        {
            play->DeInitGL();
            play->close();
            open->setText("Play");
        }
        else
        {
            play->hide();
            if (play->OpenFile(input->text().toStdString()))
            {
                play->show();
                open->setText("Close");
            }
            else
            {
                QMessageBox::about(this, "ERROR", "Input file is not valid");
                open->setText("Play");
            }
        }
    });
}

QTPlayer::~QTPlayer()
{
    // The labels, line edit and buttons are children of this widget and are
    // deleted by Qt; only the parentless play window needs an explicit delete.
    delete play;
}

void QTPlayer::closeEvent(QCloseEvent* event)
{
    // Closing the main window also closes the separate play window.
    if (play)
    {
        play->close();
    }
    QWidget::closeEvent(event);
}

void QTPlayer::Refresh()
{
    std::string info;
    play->GetMediaInfo(info);
    label_info->setText(QString::fromStdString(info));
}
```

Playback window header, `PlayWindow.h`:

```cpp
#pragma once

#include <windows.h>
#include "glew.h"
#include "camera.h"
#include "shader.h"
#include "texture2d.h"
#include "model.h"
#include "videocapture.h"

#include <QtWidgets/QWidget>
#include <iostream>
#include <memory>
#include <string>

class PlayWindow : public QWidget
{
    Q_OBJECT

public:
    PlayWindow(QWidget* parent = nullptr);
    ~PlayWindow();

    // Drawing goes through a raw WGL/OpenGL context, so Qt must not paint here.
    QPaintEngine* paintEngine() const override { return nullptr; }

    void GetMediaInfo(std::string& out);
    void ResetCamera();
    bool CreateGLContext();
    void Render();
    void GLUpdate();
    bool OpenFile(const std::string& url);
    void InitModel();
    void InitGL();
    void DeInitGL();

protected:
    void resizeEvent(QResizeEvent* event) override;
    bool event(QEvent* event) override;
    void showEvent(QShowEvent* event) override;
    void keyReleaseEvent(QKeyEvent* event) override;
    void mousePressEvent(QMouseEvent* event) override;
    void mouseMoveEvent(QMouseEvent* event) override;
    void wheelEvent(QWheelEvent* event) override;

private slots:
    void Tick();

private:
    std::string play_url;
    HDC dc = nullptr;
    HGLRC rc = nullptr;
    HWND hwnd = nullptr;
    bool hasVideo = false;
    Camera3D m_camera;
    float speed = 0.1f;
    float rotate_speed = 0.05f;          // rotation applied per pixel of mouse movement
    QPoint last_pos;
    std::shared_ptr<Model> flatModel;    // quad used in flat mode
    std::shared_ptr<Model> sphereModel;  // sphere used in 360 mode
    std::shared_ptr<Shader> shader;
    std::shared_ptr<VideoCapture> video;
    Texture2D* Y = nullptr;              // plane textures, owned by this class
    Texture2D* U = nullptr;
    Texture2D* V = nullptr;
    bool isVR360 = false;
    float fov = 60;                      // vertical field of view in degrees
};
```

Playback window implementation, `PlayWindow.cpp`:

```cpp
#include "PlayWindow.h"
#include "QtEvent.h"

#include <QApplication>
#include <QKeyEvent>
#include <QMatrix4x4>
#include <QMouseEvent>
#include <QResizeEvent>
#include <QTimer>
#include <QWheelEvent>
#include <QtGlobal>

#include <stdexcept>
#include <string>

PlayWindow::PlayWindow(QWidget* parent)
    : QWidget(parent, Qt::MSWindowsOwnDC)
{
    // Qt must not paint this widget: all drawing goes through our own WGL context.
    setAttribute(Qt::WA_PaintOnScreen);
    setAttribute(Qt::WA_NoSystemBackground);
    setAutoFillBackground(true);

    // setWindowFlags() replaces every flag, so MSWindowsOwnDC is passed again
    // to keep the private device context the GL context is created on.
    setWindowFlags(Qt::MSWindowsOwnDC | Qt::CustomizeWindowHint | Qt::WindowMinMaxButtonsHint);
    resize(800, 600);
    setWindowTitle("Play");

    hwnd = (HWND)winId();
    CreateGLContext();
    if (!wglMakeCurrent(dc, rc))
    {
        throw std::runtime_error("wglMakeCurrent failed");
    }
    if (glewInit() != GLEW_OK)
    {
        throw std::runtime_error("glewInit failed");
    }

    // A zero-interval timer fires whenever the event loop is idle, so a new
    // frame is requested as often as possible. Parented to this window so it
    // is destroyed with it.
    QTimer* timer = new QTimer(this);
    connect(timer, &QTimer::timeout, this, &PlayWindow::Tick);
    timer->start();

    m_camera.SetRotation(0, 0, 0);
    InitModel();
}

PlayWindow::~PlayWindow()
{
    // Release every GL object while the context is still current.
    DeInitGL();
    flatModel.reset();
    sphereModel.reset();
    wglMakeCurrent(nullptr, nullptr);
    if (rc) wglDeleteContext(rc);
    if (dc) ReleaseDC(hwnd, dc);
}

bool PlayWindow::CreateGLContext()
{
    dc = GetDC(hwnd);

    // 32-bit RGBA, double-buffered, with a 24-bit depth and 8-bit stencil buffer.
    PIXELFORMATDESCRIPTOR pfd;
    ZeroMemory(&pfd, sizeof(pfd));
    pfd.nSize = sizeof(pfd);
    pfd.nVersion = 1;
    pfd.cColorBits = 32;
    pfd.cDepthBits = 24;
    pfd.cStencilBits = 8;
    pfd.iPixelType = PFD_TYPE_RGBA;
    pfd.dwFlags = PFD_DRAW_TO_WINDOW | PFD_SUPPORT_OPENGL | PFD_DOUBLEBUFFER;

    const int format = ChoosePixelFormat(dc, &pfd);
    if (!format)
    {
        throw std::runtime_error("ChoosePixelFormat failed");
    }
    if (!SetPixelFormat(dc, format, &pfd))
    {
        throw std::runtime_error("SetPixelFormat failed");
    }
    rc = wglCreateContext(dc);
    if (!rc)
    {
        throw std::runtime_error("wglCreateContext failed");
    }
    return true;
}

void PlayWindow::ResetCamera()
{
    fov = 60;
    m_camera.Reset();
}

void PlayWindow::GetMediaInfo(std::string& out)
{
    if (!video)
    {
        out.append("Video is not available");
        return;
    }

    out.append("Video mode:");
    out.append(isVR360 ? " Sphere\n" : " Flat\n");
    out.append("Video format:");
    out.append(video->format);
    out.append("\nWidth:" + std::to_string(video->width));
    out.append(" Height:" + std::to_string(video->height));
    out.append("\nEncode:");
    out.append(video->encoder);

    // Amount of data received so far, in a human-readable unit
    out.append("\nReceive size:");
    const double size = static_cast<double>(video->recv_size);
    if (size >= 1024.0 * 1024 * 1024)
        out.append(std::to_string(size / (1024.0 * 1024 * 1024)) + "GB");
    else if (size >= 1024.0 * 1024)
        out.append(std::to_string(size / (1024.0 * 1024)) + "MB");
    else if (size >= 1024.0)
        out.append(std::to_string(size / 1024.0) + "KB");
    else
        out.append(std::to_string(video->recv_size) + "B");

    if (isVR360)
    {
        const auto pos = m_camera.GetPosition();
        const auto rot = m_camera.GetRotation();
        out.append("\nFOV:" + std::to_string(fov));
        out.append("\nX:" + std::to_string(pos.x()));
        out.append("\nY:" + std::to_string(pos.y()));
        out.append("\nZ:" + std::to_string(pos.z()));
        out.append("\nPitch:" + std::to_string(rot.x()));
        out.append("\nYaw:" + std::to_string(rot.y()));
        out.append("\nRoll:" + std::to_string(rot.z()));
    }
}

void PlayWindow::resizeEvent(QResizeEvent* event)
{
    glViewport(0, 0, event->size().width(), event->size().height());
    GLUpdate();
    QWidget::resizeEvent(event);
}

bool PlayWindow::event(QEvent* event)
{
    // Custom redraw request posted by GLUpdate()
    if (event->type() == QtEvent::GL_UPDATE && hasVideo)
    {
        Render();
    }
    return QWidget::event(event);
}

void PlayWindow::GLUpdate()
{
    QApplication::postEvent(this, new QtEvent(QtEvent::GL_UPDATE));
}

void PlayWindow::showEvent(QShowEvent* event)
{
    GLUpdate();
    QWidget::showEvent(event);
}

void PlayWindow::keyReleaseEvent(QKeyEvent* event)
{
    // Space toggles between flat and 360 (sphere) rendering; auto-repeat is ignored.
    if (event->key() == Qt::Key_Space && !event->isAutoRepeat())
    {
        isVR360 = !isVR360;
        GLUpdate();
    }
    else
    {
        QWidget::keyReleaseEvent(event);
    }
}

void PlayWindow::mousePressEvent(QMouseEvent* event)
{
    last_pos = event->pos();
}

void PlayWindow::mouseMoveEvent(QMouseEvent* event)
{
    // Vertical drag changes pitch, horizontal drag changes yaw.
    const QPoint dis = event->pos() - last_pos;
    last_pos = event->pos();
    m_camera.Rotate(dis.y() * rotate_speed, dis.x() * rotate_speed, 0);
    GLUpdate();
}

void PlayWindow::wheelEvent(QWheelEvent* event)
{
    // One wheel notch (angleDelta 120) changes the FOV by 1 degree and
    // scrolling forward zooms in. Clamping after the change keeps the FOV
    // inside [45, 120].
    fov = qBound(45.0f, fov - event->angleDelta().y() / 120.0f, 120.0f);
    GLUpdate();
}

void PlayWindow::Tick()
{
    // Request a redraw; Render() pulls the next decoded frame.
    GLUpdate();
}

void PlayWindow::DeInitGL()
{
    // The plane textures are owning raw pointers: delete them (the GL context
    // is current on this thread) instead of only clearing the pointers.
    delete Y;
    Y = nullptr;
    delete U;
    U = nullptr;
    delete V;
    V = nullptr;
    shader.reset();
    video.reset();
    hasVideo = false;
}

void PlayWindow::InitModel()
{
    flatModel = std::make_shared<Model>("res/model/quad.obj");
    sphereModel = std::make_shared<Model>("res/model/sphere.obj");
}

void PlayWindow::InitGL()
{
    ResetCamera();

    const int w = video->width;
    const int h = video->height;
    const auto fmt = video->formatType;
    const char* vs = "res/glsl/vertexshader.glsl";

    // Pick the fragment shader for the pixel format and allocate one texture
    // per plane: LUMINANCE = 1 byte/texel, LUMINANCE_ALPHA = 2, RGB = 3, RGBA = 4.
    if (fmt == PIX_FMT_YUV420P || fmt == PIX_FMT_YUVJ420P)
    {
        shader = std::make_shared<Shader>(vs, "res/glsl/fragmentyuv.glsl");
        Y = new Texture2D(w, h, GL_LUMINANCE, GL_LUMINANCE, nullptr);
        U = new Texture2D(w / 2, h / 2, GL_LUMINANCE, GL_LUMINANCE, nullptr);
        V = new Texture2D(w / 2, h / 2, GL_LUMINANCE, GL_LUMINANCE, nullptr);
    }
    else if (fmt == PIX_FMT_YUV422P || fmt == PIX_FMT_YUVJ422P)
    {
        shader = std::make_shared<Shader>(vs, "res/glsl/fragmentyuv.glsl");
        Y = new Texture2D(w, h, GL_LUMINANCE, GL_LUMINANCE, nullptr);
        U = new Texture2D(w / 2, h, GL_LUMINANCE, GL_LUMINANCE, nullptr);
        V = new Texture2D(w / 2, h, GL_LUMINANCE, GL_LUMINANCE, nullptr);
    }
    else if (fmt == PIX_FMT_YUV444P || fmt == PIX_FMT_YUVJ444P)
    {
        shader = std::make_shared<Shader>(vs, "res/glsl/fragmentyuv.glsl");
        Y = new Texture2D(w, h, GL_LUMINANCE, GL_LUMINANCE, nullptr);
        U = new Texture2D(w, h, GL_LUMINANCE, GL_LUMINANCE, nullptr);
        V = new Texture2D(w, h, GL_LUMINANCE, GL_LUMINANCE, nullptr);
    }
    else if (fmt == PIX_FMT_YUYV422)
    {
        // Packed 4:2:2: the same buffer is read as 2-byte and as 4-byte texels.
        shader = std::make_shared<Shader>(vs, "res/glsl/fragment_yuyv422.glsl");
        Y = new Texture2D(w, h, GL_LUMINANCE_ALPHA, GL_LUMINANCE_ALPHA, nullptr);
        U = new Texture2D(w / 2, h, GL_RGBA, GL_RGBA, nullptr);
    }
    else if (fmt == PIX_FMT_UYVY422)
    {
        shader = std::make_shared<Shader>(vs, "res/glsl/fragment_uyvy422.glsl");
        Y = new Texture2D(w, h, GL_LUMINANCE_ALPHA, GL_LUMINANCE_ALPHA, nullptr);
        U = new Texture2D(w / 2, h, GL_RGBA, GL_RGBA, nullptr);
    }
    else if (fmt == PIX_FMT_NV12)
    {
        // Semi-planar: the interleaved UV plane is read as LUMINANCE_ALPHA.
        shader = std::make_shared<Shader>(vs, "res/glsl/fragment_nv12.glsl");
        Y = new Texture2D(w, h, GL_LUMINANCE, GL_LUMINANCE, nullptr);
        U = new Texture2D(w / 2, h / 2, GL_LUMINANCE_ALPHA, GL_LUMINANCE_ALPHA, nullptr);
    }
    else if (fmt == PIX_FMT_NV21)
    {
        shader = std::make_shared<Shader>(vs, "res/glsl/fragment_nv21.glsl");
        Y = new Texture2D(w, h, GL_LUMINANCE, GL_LUMINANCE, nullptr);
        U = new Texture2D(w / 2, h / 2, GL_LUMINANCE_ALPHA, GL_LUMINANCE_ALPHA, nullptr);
    }
    else if (fmt == PIX_FMT_GRAY)
    {
        shader = std::make_shared<Shader>(vs, "res/glsl/fragment_gray.glsl");
        Y = new Texture2D(w, h, GL_LUMINANCE, GL_LUMINANCE, nullptr);
    }
    else if (fmt == PIX_FMT_RGB)
    {
        shader = std::make_shared<Shader>(vs, "res/glsl/fragment_rgb.glsl");
        Y = new Texture2D(w, h, GL_RGB, GL_RGB, nullptr);
    }
    else if (fmt == PIX_FMT_BGR)
    {
        shader = std::make_shared<Shader>(vs, "res/glsl/fragment_bgr.glsl");
        Y = new Texture2D(w, h, GL_RGB, GL_RGB, nullptr);
    }
    else if (fmt == PIX_FMT_RGBA)
    {
        shader = std::make_shared<Shader>(vs, "res/glsl/fragment_rgba.glsl");
        Y = new Texture2D(w, h, GL_RGBA, GL_RGBA, nullptr);
    }
    else if (fmt == PIX_FMT_BGRA)
    {
        shader = std::make_shared<Shader>(vs, "res/glsl/fragment_bgra.glsl");
        Y = new Texture2D(w, h, GL_RGBA, GL_RGBA, nullptr);
    }
    else if (fmt == PIX_FMT_ARGB)
    {
        shader = std::make_shared<Shader>(vs, "res/glsl/fragment_argb.glsl");
        Y = new Texture2D(w, h, GL_RGBA, GL_RGBA, nullptr);
    }
    else if (fmt == PIX_FMT_ABGR)
    {
        shader = std::make_shared<Shader>(vs, "res/glsl/fragment_abgr.glsl");
        Y = new Texture2D(w, h, GL_RGBA, GL_RGBA, nullptr);
    }

    glEnable(GL_DEPTH_TEST);
    glEnable(GL_CULL_FACE);
    glCullFace(GL_BACK);
    glPolygonMode(GL_FRONT, GL_FILL);
    CheckError();
}

void PlayWindow::Render()
{
    QMatrix4x4 projectMat;
    projectMat.perspective(fov, width() / float(qMax(1, height())), 0.1f, 100.0f);

    glClearColor(0.0f, 0.0f, 0.0f, 1.0f);
    glClear(GL_COLOR_BUFFER_BIT | GL_DEPTH_BUFFER_BIT);

    // As used here, Retrieve() returns < 0 on error, 0 at end of stream and
    // > 0 when a frame is available.
    AVFrame* frame = nullptr;
    int ret = -1;
    if (video)
    {
        ret = video->Retrieve(frame);
        if (ret == 0)
        {
            // End of a file: rewind and try once more (loop playback).
            video->Seek(0);
            ret = video->Retrieve(frame);
        }
    }

    if (ret > 0 && frame && shader && Y)
    {
        Model* model = isVR360 ? sphereModel.get() : flatModel.get();

        // Flat mode draws the quad with identity matrices. The matrices are
        // kept in locals so the pointers passed to Blit() stay valid.
        QMatrix4x4 viewMat;
        QMatrix4x4 projMat;
        if (isVR360)
        {
            viewMat = m_camera.GetViewMat();
            projMat = projectMat;
        }

        // Upload each plane. The third argument is the row length in texels:
        // FFmpeg's linesize (bytes, possibly padded) divided by bytes per texel.
        const auto fmt = video->formatType;
        const int w = frame->width;
        const int h = frame->height;
        if (fmt == PIX_FMT_YUV420P || fmt == PIX_FMT_YUVJ420P)
        {
            Y->UpdateTexture2D(w, h, frame->linesize[0], frame->data[0]);
            U->UpdateTexture2D(w / 2, h / 2, frame->linesize[1], frame->data[1]);
            V->UpdateTexture2D(w / 2, h / 2, frame->linesize[2], frame->data[2]);
        }
        else if (fmt == PIX_FMT_YUV422P || fmt == PIX_FMT_YUVJ422P)
        {
            Y->UpdateTexture2D(w, h, frame->linesize[0], frame->data[0]);
            U->UpdateTexture2D(w / 2, h, frame->linesize[1], frame->data[1]);
            V->UpdateTexture2D(w / 2, h, frame->linesize[2], frame->data[2]);
        }
        else if (fmt == PIX_FMT_YUV444P || fmt == PIX_FMT_YUVJ444P)
        {
            Y->UpdateTexture2D(w, h, frame->linesize[0], frame->data[0]);
            U->UpdateTexture2D(w, h, frame->linesize[1], frame->data[1]);
            V->UpdateTexture2D(w, h, frame->linesize[2], frame->data[2]);
        }
        else if (fmt == PIX_FMT_YUYV422 || fmt == PIX_FMT_UYVY422)
        {
            Y->UpdateTexture2D(w, h, frame->linesize[0] / 2, frame->data[0]);
            U->UpdateTexture2D(w / 2, h, frame->linesize[0] / 4, frame->data[0]);
        }
        else if (fmt == PIX_FMT_NV12 || fmt == PIX_FMT_NV21)
        {
            Y->UpdateTexture2D(w, h, frame->linesize[0], frame->data[0]);
            U->UpdateTexture2D(w / 2, h / 2, frame->linesize[1] / 2, frame->data[1]);
        }
        else if (fmt == PIX_FMT_GRAY)
        {
            Y->UpdateTexture2D(w, h, frame->linesize[0], frame->data[0]);
        }
        else if (fmt == PIX_FMT_RGB || fmt == PIX_FMT_BGR)
        {
            Y->UpdateTexture2D(w, h, frame->linesize[0] / 3, frame->data[0]);
        }
        else if (fmt == PIX_FMT_RGBA || fmt == PIX_FMT_BGRA
                 || fmt == PIX_FMT_ARGB || fmt == PIX_FMT_ABGR)
        {
            Y->UpdateTexture2D(w, h, frame->linesize[0] / 4, frame->data[0]);
        }

        // Bind the shader and whichever plane textures exist for this format.
        model->ApplyShader(shader.get());
        model->SetTexture2D("VIDEO_Y", Y);
        if (U) model->SetTexture2D("VIDEO_U", U);
        if (V) model->SetTexture2D("VIDEO_V", V);
        model->Blit(viewMat.constData(), projMat.constData());
    }

    SwapBuffers(dc);
    if (frame)
    {
        av_frame_unref(frame);
    }
}

bool PlayWindow::OpenFile(const std::string& url)
{
    DeInitGL();
    video = std::make_shared<VideoCapture>();
    if (!video->Open(url.c_str(), PIX_FMT_AUTO))
    {
        video.reset();
        hasVideo = false;
        hide();
        return false;
    }
    play_url = url;
    hasVideo = true;
    InitGL();
    return true;
}
```
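`InitGL` allocates one texture per plane, and `Render` uploads each plane with `linesize / bytesPerTexel` as the row length. The sketch below restates that layout for a few formats using a hypothetical `Fmt` enum and `PlanesFor` helper (the post's `PIX_FMT_*` values come from its own `videocapture.h`). The divisibility check in `RowLengthInTexels` flags padded RGB24 rows, where FFmpeg's byte linesize need not be a multiple of 3:

```cpp
#include <cassert>
#include <string>
#include <vector>

// One texture the renderer would allocate for a pixel format.
struct PlaneTexture
{
    std::string name;   // sampler name used by the shader
    int width;          // texture size in texels
    int height;
    int bytesPerTexel;  // 1 = LUMINANCE, 2 = LUMINANCE_ALPHA, 3 = RGB, 4 = RGBA
};

// Hypothetical subset of the formats handled in InitGL.
enum class Fmt { YUV420P, YUV422P, YUV444P, NV12, YUYV422, GRAY, RGB24, RGBA };

std::vector<PlaneTexture> PlanesFor(Fmt f, int w, int h)
{
    switch (f)
    {
    case Fmt::YUV420P: return { {"VIDEO_Y", w, h, 1}, {"VIDEO_U", w / 2, h / 2, 1}, {"VIDEO_V", w / 2, h / 2, 1} };
    case Fmt::YUV422P: return { {"VIDEO_Y", w, h, 1}, {"VIDEO_U", w / 2, h, 1}, {"VIDEO_V", w / 2, h, 1} };
    case Fmt::YUV444P: return { {"VIDEO_Y", w, h, 1}, {"VIDEO_U", w, h, 1}, {"VIDEO_V", w, h, 1} };
    case Fmt::NV12:    return { {"VIDEO_Y", w, h, 1}, {"VIDEO_U", w / 2, h / 2, 2} };
    case Fmt::YUYV422: return { {"VIDEO_Y", w, h, 2}, {"VIDEO_U", w / 2, h, 4} };
    case Fmt::GRAY:    return { {"VIDEO_Y", w, h, 1} };
    case Fmt::RGB24:   return { {"VIDEO_Y", w, h, 3} };
    case Fmt::RGBA:    return { {"VIDEO_Y", w, h, 4} };
    }
    return {};
}

// FFmpeg's linesize is in bytes and may include padding; an upload that wants
// a row length in texels divides by the texel size, which must divide evenly.
int RowLengthInTexels(int linesizeBytes, int bytesPerTexel)
{
    assert(bytesPerTexel > 0 && linesizeBytes % bytesPerTexel == 0);
    return linesizeBytes / bytesPerTexel;
}
```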

The rest is the camera movement code and the OpenGL model and shader code.
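The camera code isn't included, but the projection side is visible in `Render`: `QMatrix4x4::perspective(fov, aspect, 0.1f, 100)`, where `fov` is the vertical angle the mouse wheel adjusts. A stand-alone sketch of the matrix that call builds (the standard `gluPerspective` form), stored column-major as OpenGL and `QMatrix4x4::constData()` use; the `Perspective` name and `Mat4` alias are illustrative:

```cpp
#include <array>
#include <cassert>
#include <cmath>

// Column-major 4x4 matrix: element (row r, column c) is at index c * 4 + r.
using Mat4 = std::array<float, 16>;

// gluPerspective-style projection; fovYDeg is the vertical field of view.
Mat4 Perspective(float fovYDeg, float aspect, float zNear, float zFar)
{
    assert(aspect > 0.0f && zNear > 0.0f && zFar > zNear);
    const float kPi = 3.14159265358979f;
    const float f = 1.0f / std::tan(fovYDeg * kPi / 360.0f);  // cot(fov / 2)
    Mat4 m{};                                        // all zeros
    m[0] = f / aspect;                               // row 0, column 0
    m[5] = f;                                        // row 1, column 1
    m[10] = (zFar + zNear) / (zNear - zFar);         // row 2, column 2
    m[11] = -1.0f;                                   // row 3, column 2
    m[14] = 2.0f * zFar * zNear / (zNear - zFar);    // row 2, column 3
    return m;
}
```

Narrowing `fov` from 60 toward 45 increases `f`, which magnifies the image (zoom in); widening it toward 120 does the opposite.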

The complete code is available for a fee.
