BPNN

```python
import math
import random

random.seed(0)


def rand(a, b):
    """Uniform random number in [a, b)."""
    return (b - a) * random.random() + a


def make_matrix(m, n, fill=0.0):
    """Return an m x n matrix (list of lists) filled with `fill`."""
    return [[fill] * n for _ in range(m)]


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def sigmoid_derivative(y):
    # y is the sigmoid *output*: sigma'(x) = sigma(x) * (1 - sigma(x)) = y * (1 - y)
    return y * (1 - y)


class BPNeuralNetwork:
    def __init__(self):
        self.input_n = 0
        self.hidden_n = 0
        self.output_n = 0
        self.input_cells = []
        self.hidden_cells = []
        self.output_cells = []
        self.input_weights = []
        self.output_weights = []
        self.input_correction = []   # previous changes, used as a momentum term
        self.output_correction = []

    def setup(self, ni, nh, no):
        self.input_n = ni + 1  # one extra input cell, fixed at 1.0, acts as the bias
        self.hidden_n = nh
        self.output_n = no
        # init cells
        self.input_cells = [1.0] * self.input_n
        self.hidden_cells = [1.0] * self.hidden_n
        self.output_cells = [1.0] * self.output_n
        # init weights
        self.input_weights = make_matrix(self.input_n, self.hidden_n)
        self.output_weights = make_matrix(self.hidden_n, self.output_n)
        # random initialization
        for i in range(self.input_n):
            for h in range(self.hidden_n):
                self.input_weights[i][h] = rand(-0.2, 0.2)
        for h in range(self.hidden_n):
            for o in range(self.output_n):
                self.output_weights[h][o] = rand(-2.0, 2.0)
        # init correction (momentum) matrices
        self.input_correction = make_matrix(self.input_n, self.hidden_n)
        self.output_correction = make_matrix(self.hidden_n, self.output_n)

    def predict(self, inputs):
        # activate input layer (the last cell stays 1.0 as the bias)
        for i in range(self.input_n - 1):
            self.input_cells[i] = inputs[i]
        # activate hidden layer
        for j in range(self.hidden_n):
            total = 0.0
            for i in range(self.input_n):
                total += self.input_cells[i] * self.input_weights[i][j]
            self.hidden_cells[j] = sigmoid(total)
        # activate output layer
        for k in range(self.output_n):
            total = 0.0
            for j in range(self.hidden_n):
                total += self.hidden_cells[j] * self.output_weights[j][k]
            self.output_cells[k] = sigmoid(total)
        return self.output_cells[:]

    def back_propagate(self, case, label, learn, correct):
        # feed forward
        self.predict(case)
        # output layer deltas
        output_deltas = [0.0] * self.output_n
        for o in range(self.output_n):
            error = label[o] - self.output_cells[o]
            output_deltas[o] = sigmoid_derivative(self.output_cells[o]) * error
        # hidden layer deltas: output deltas propagated back through output_weights
        hidden_deltas = [0.0] * self.hidden_n
        for h in range(self.hidden_n):
            error = 0.0
            for o in range(self.output_n):
                error += output_deltas[o] * self.output_weights[h][o]
            hidden_deltas[h] = sigmoid_derivative(self.hidden_cells[h]) * error
        # update output weights: learning-rate step plus momentum
        for h in range(self.hidden_n):
            for o in range(self.output_n):
                change = output_deltas[o] * self.hidden_cells[h]
                self.output_weights[h][o] += learn * change + correct * self.output_correction[h][o]
                self.output_correction[h][o] = change
        # update input weights
        for i in range(self.input_n):
            for h in range(self.hidden_n):
                change = hidden_deltas[h] * self.input_cells[i]
                self.input_weights[i][h] += learn * change + correct * self.input_correction[i][h]
                self.input_correction[i][h] = change
        # squared error for this sample
        error = 0.0
        for o in range(len(label)):
            error += 0.5 * (label[o] - self.output_cells[o]) ** 2
        return error

    def train(self, cases, labels, limit=10000, learn=0.05, correct=0.1):
        error = 0.0
        for _ in range(limit):
            error = 0.0
            for case, label in zip(cases, labels):
                error += self.back_propagate(case, label, learn, correct)
        return error  # total error of the last epoch

    def test(self):
        # XOR
        cases = [
            [0, 0],
            [0, 1],
            [1, 0],
            [1, 1],
        ]
        labels = [[0], [1], [1], [0]]
        self.setup(2, 5, 1)
        self.train(cases, labels, 10000, 0.05, 0.1)
        for case in cases:
            print(self.predict(case))


if __name__ == '__main__':
    nn = BPNeuralNetwork()
    nn.test()
```
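The nested loops in `predict` amount to two matrix-vector products, each followed by an element-wise sigmoid. A minimal NumPy sketch of the same forward pass, assuming the weight layout used above (`input_weights` of shape `(ni + 1, nh)` with the bias as the last input row, `output_weights` of shape `(nh, no)`); the helper names `forward_loops` and `forward_numpy` and the random weights are illustrative only:

```python
import math
import random

import numpy as np


def forward_loops(inputs, input_weights, output_weights):
    # same computation as BPNeuralNetwork.predict, written with explicit loops
    cells = list(inputs) + [1.0]  # bias cell fixed at 1.0
    hidden = []
    for j in range(len(input_weights[0])):
        total = sum(cells[i] * input_weights[i][j] for i in range(len(cells)))
        hidden.append(1.0 / (1.0 + math.exp(-total)))
    outputs = []
    for k in range(len(output_weights[0])):
        total = sum(hidden[j] * output_weights[j][k] for j in range(len(hidden)))
        outputs.append(1.0 / (1.0 + math.exp(-total)))
    return outputs


def forward_numpy(inputs, input_weights, output_weights):
    # vectorized: append the bias input, then two matrix products with a sigmoid after each
    x = np.append(np.asarray(inputs, dtype=float), 1.0)
    hidden = 1.0 / (1.0 + np.exp(-(x @ np.asarray(input_weights))))
    return 1.0 / (1.0 + np.exp(-(hidden @ np.asarray(output_weights))))


random.seed(1)
w_in = [[random.uniform(-0.2, 0.2) for _ in range(5)] for _ in range(3)]  # (2 inputs + bias) x 5 hidden
w_out = [[random.uniform(-2.0, 2.0)] for _ in range(5)]                  # 5 hidden x 1 output
for case in ([0, 0], [0, 1], [1, 0], [1, 1]):
    print(forward_loops(case, w_in, w_out), forward_numpy(case, w_in, w_out))
```

The loop version is easier to map onto the formulas; the matrix version is what scales to larger layers and mini-batches.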

CNN

```python
# 2D convolution as used in CNNs (no kernel flip, stride 1, no padding)
import numpy as np

image = np.array([[1, 1, 1, 0, 0],
                  [0, 1, 1, 1, 0],
                  [0, 0, 1, 1, 1],
                  [0, 0, 1, 1, 0],
                  [0, 1, 1, 0, 0]])
kernel = np.array([[1, 0, 1],
                   [0, 1, 0],
                   [1, 0, 1]])
# print(image.shape, kernel.shape)


def my_conv(image, kernel):
    out_h = image.shape[0] - kernel.shape[0] + 1
    out_w = image.shape[1] - kernel.shape[1] + 1
    res = np.zeros((out_h, out_w), np.float32)
    for i in range(out_h):
        for j in range(out_w):
            res[i][j] = compute_conv(image, kernel, i, j)
    return res


def compute_conv(image, kernel, i, j):
    res = 0
    for kk in range(kernel.shape[0]):
        for k in range(kernel.shape[1]):
            # key line: multiply the window element-wise with the kernel and accumulate
            res += image[i + kk][j + k] * kernel[kk][k]
    return res


print(my_conv(image, kernel))
```
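The double loop in `my_conv` slides the kernel over every valid window. Assuming NumPy 1.20 or later (which provides `numpy.lib.stride_tricks.sliding_window_view`), the same stride-1, no-padding operation can be sketched without Python loops; `conv2d_valid` is a hypothetical helper name. Like `my_conv`, this is cross-correlation (no kernel flip), which is what CNN layers compute under the name "convolution"; for this symmetric kernel, flipping would not change the result anyway.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def conv2d_valid(image, kernel):
    # all kh x kw windows, shape (H - kh + 1, W - kw + 1, kh, kw)
    windows = sliding_window_view(image, kernel.shape)
    # multiply each window element-wise with the kernel and sum over the last two axes
    return np.einsum('ijkl,kl->ij', windows, kernel)


image = np.array([[1, 1, 1, 0, 0],
                  [0, 1, 1, 1, 0],
                  [0, 0, 1, 1, 1],
                  [0, 0, 1, 1, 0],
                  [0, 1, 1, 0, 0]])
kernel = np.array([[1, 0, 1],
                   [0, 1, 0],
                   [1, 0, 1]])
print(conv2d_valid(image, kernel))
# [[4 3 4]
#  [2 4 3]
#  [2 3 4]]
```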

RNN

```python
import numpy as np


def rand(lower, upper):
    return (upper - lower) * np.random.random() + lower


def sigmoid(x):
    return 1 / (1 + np.exp(-x))


def sigmoid_derivative(y):
    # y is the sigmoid output
    return y * (1 - y)


def make_mat(m, n, fill=0.0):
    # m x n matrix filled with `fill`
    return np.array([[fill] * n for _ in range(m)])


def make_rand_mat(m, n, lower=-1, upper=1):
    # m x n matrix with one independent uniform draw per entry
    return np.random.uniform(lower, upper, (m, n))


def int_to_bin(x, dim=0):
    # binary string of x, left-padded with zeros to `dim` digits
    return bin(x)[2:].zfill(dim)


class RNN:
    def __init__(self):
        self.input_n = 0
        self.hidden_n = 0
        self.output_n = 0
        self.input_weights = []   # (input, hidden)
        self.output_weights = []  # (hidden, output)
        self.hidden_weights = []  # (hidden, hidden)

    def setup(self, ni, nh, no):
        self.input_n = ni
        self.hidden_n = nh
        self.output_n = no
        self.input_weights = make_rand_mat(self.input_n, self.hidden_n)
        self.output_weights = make_rand_mat(self.hidden_n, self.output_n)
        self.hidden_weights = make_rand_mat(self.hidden_n, self.hidden_n)

    def predict(self, case, dim=0):
        guess = np.zeros(dim)
        hidden_layer_history = [np.zeros(self.hidden_n)]
        for i in range(dim):
            # feed the bits starting from the least significant end
            x = np.array([[c[dim - i - 1] for c in case]])
            hidden_layer = sigmoid(np.dot(x, self.input_weights) + np.dot(hidden_layer_history[-1], self.hidden_weights))
            output_layer = sigmoid(np.dot(hidden_layer, self.output_weights))
            guess[dim - i - 1] = np.round(output_layer[0][0])  # drop np.round to get raw probabilities
            hidden_layer_history.append(hidden_layer.copy())
        return guess

    def do_train(self, case, label, dim=0, learn=0.1):
        input_updates = np.zeros_like(self.input_weights)
        output_updates = np.zeros_like(self.output_weights)
        hidden_updates = np.zeros_like(self.hidden_weights)
        guess = np.zeros_like(label)
        error = 0.0
        output_deltas = []
        hidden_layer_history = [np.zeros(self.hidden_n)]
        # forward pass through time, least significant bit first
        for i in range(dim):
            x = np.array([[c[dim - i - 1] for c in case]])
            y = np.array([[label[dim - i - 1]]])
            hidden_layer = sigmoid(np.dot(x, self.input_weights) + np.dot(hidden_layer_history[-1], self.hidden_weights))
            output_layer = sigmoid(np.dot(hidden_layer, self.output_weights))
            output_error = y - output_layer
            output_deltas.append(output_error * sigmoid_derivative(output_layer))
            error += np.abs(output_error[0][0])
            guess[dim - i - 1] = np.round(output_layer[0][0])
            hidden_layer_history.append(hidden_layer.copy())
        # backpropagation through time, most significant bit first
        future_hidden_layer_delta = np.zeros(self.hidden_n)
        for i in range(dim):
            x = np.array([[c[i] for c in case]])
            hidden_layer = hidden_layer_history[-i - 1]
            prev_hidden_layer = hidden_layer_history[-i - 2]
            output_delta = output_deltas[-i - 1]
            hidden_delta = (future_hidden_layer_delta.dot(self.hidden_weights.T) +
                            output_delta.dot(self.output_weights.T)) * sigmoid_derivative(hidden_layer)
            output_updates += np.atleast_2d(hidden_layer).T.dot(output_delta)
            hidden_updates += np.atleast_2d(prev_hidden_layer).T.dot(hidden_delta)
            input_updates += x.T.dot(hidden_delta)
            future_hidden_layer_delta = hidden_delta
        self.input_weights += input_updates * learn
        self.output_weights += output_updates * learn
        self.hidden_weights += hidden_updates * learn
        return guess, error

    def train(self, cases, labels, dim=0, learn=0.1, limit=1000):
        for _ in range(limit):
            for case, label in zip(cases, labels):
                self.do_train(case, label, dim=dim, learn=learn)

    def test(self):
        # learn 8-bit binary addition: inputs are bit pairs (a_k, b_k), target is c_k
        self.setup(2, 16, 1)
        for i in range(20000):
            a_int = int(rand(0, 127))
            a = np.array([int(t) for t in int_to_bin(a_int, dim=8)])
            b_int = int(rand(0, 127))
            b = np.array([int(t) for t in int_to_bin(b_int, dim=8)])
            c_int = a_int + b_int  # at most 252, so it fits in 8 bits
            c = np.array([int(t) for t in int_to_bin(c_int, dim=8)])
            guess, error = self.do_train([a, b], c, dim=8)
            if i % 1000 == 0:
                print("Error:" + str(error))
                print("Predict:" + str(guess))
                print("True:" + str(c))
                out = 0
                for index, x in enumerate(reversed(guess)):
                    out += x * pow(2, index)
                print(str(a_int) + " + " + str(b_int) + " = " + str(out))
                result = str(self.predict([a, b], dim=8))
                print(result)
                print("===============")


if __name__ == '__main__':
    nn = RNN()
    nn.test()
```
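The RNN is trained to reproduce ordinary binary addition: at each step it sees one bit of `a` and one bit of `b`, starting from the least significant end, and must emit the sum bit, so its hidden state in effect has to track the carry. For reference, a plain (non-learned) sketch of that target computation, visiting bits in the same order and using the same most-significant-bit-first arrays that `int_to_bin` produces; `int_to_bits`, `add_bits`, and `bits_to_int` are hypothetical helper names:

```python
def int_to_bits(x, dim=8):
    # most significant bit first, zero-padded to dim digits
    return [int(t) for t in bin(x)[2:].zfill(dim)]


def add_bits(a_bits, b_bits):
    # walk from the least significant bit (the end of the list), carrying as we go
    dim = len(a_bits)
    out = [0] * dim
    carry = 0
    for i in range(dim):
        pos = dim - i - 1  # same index order as RNN.predict / RNN.do_train
        s = a_bits[pos] + b_bits[pos] + carry
        out[pos] = s % 2
        carry = s // 2
    return out  # a carry out of the top bit is dropped


def bits_to_int(bits):
    # same decoding as the `out` loop in RNN.test
    return sum(bit * 2 ** index for index, bit in enumerate(reversed(bits)))


a_int, b_int = 45, 73
c_bits = add_bits(int_to_bits(a_int), int_to_bits(b_int))
print(c_bits, bits_to_int(c_bits))
```

A well-trained network should agree with this reference on every pair with `a + b < 256`.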

LR

```python
import numpy as np


# data loading: each line is assumed to be "x1,x2,label" (label in column 2)
def loadData(fileName):
    dataList = []
    labelList = []
    with open(fileName, 'r') as fr:
        for line in fr:
            if not line.strip():
                continue  # skip blank lines
            curLine = line.strip().split(',')
            labelList.append(float(curLine[2]))
            dataList.append([float(num) for num in curLine[0:2]])  # features are columns 0 and 1
    return dataList, labelList


# LR prediction: P(y=1|x) = exp(wx) / (1 + exp(wx))
def predict(w, x):
    wx = np.dot(w, x)
    P1 = np.exp(wx) / (1 + np.exp(wx))
    if P1 >= 0.5:
        return 1
    return 0


# training: per-sample (stochastic) gradient ascent on the log-likelihood
def GD(trainDataList, trainLabelList, n_iter=30):
    for i in range(len(trainDataList)):
        trainDataList[i].append(1)  # constant feature for the bias
    trainDataList = np.array(trainDataList)
    w = np.zeros(trainDataList.shape[1])
    alpha = 0.001
    for _ in range(n_iter):
        for j in range(trainDataList.shape[0]):
            wx = np.dot(w, trainDataList[j])
            yi = trainLabelList[j]
            xi = trainDataList[j]
            w += alpha * (yi - np.exp(wx) / (1 + np.exp(wx))) * xi
    return w


# evaluation: accuracy on the test set
def test(testDataList, testLabelList, w):
    for i in range(len(testDataList)):
        testDataList[i].append(1)  # same bias feature as in training
    errorCnt = 0
    for i in range(len(testDataList)):
        if testLabelList[i] != predict(w, testDataList[i]):
            errorCnt += 1
    return 1 - errorCnt / len(testDataList)


# print the accuracy
if __name__ == '__main__':
    trainData, trainLabel = loadData('../data/train.txt')
    testData, testLabel = loadData('../data/test.txt')
    w = GD(trainData, trainLabel)
    accuracy = test(testData, testLabel, w)
    print('the accuracy is:', accuracy)
```
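`predict` and `GD` evaluate exp(wx) / (1 + exp(wx)) directly, which overflows for large positive wx (above about 709 in float64): NumPy warns and the result becomes nan. A common remedy, sketched here as the hypothetical helper `stable_sigmoid`, is to evaluate the same function through exp(-|z|), which never exceeds 1; `sgd_step` is likewise a hypothetical name for the per-sample update used in `GD`:

```python
import numpy as np


def stable_sigmoid(z):
    # P(y=1|x) = exp(z) / (1 + exp(z)); exp(-|z|) <= 1, so nothing overflows
    z = np.asarray(z, dtype=float)
    e = np.exp(-np.abs(z))
    # z >= 0: 1 / (1 + exp(-z));  z < 0: exp(z) / (1 + exp(z))
    return np.where(z >= 0, 1.0 / (1.0 + e), e / (1.0 + e))


def sgd_step(w, xi, yi, alpha=0.001):
    # the same per-sample update as in GD: w += alpha * (y - P(y=1|x)) * x
    return w + alpha * (yi - stable_sigmoid(np.dot(w, xi))) * xi


print(stable_sigmoid([-1000.0, 0.0, 1000.0]))
print(sgd_step(np.zeros(3), np.array([1.0, 2.0, 1.0]), 1))
```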

```python
import numpy as np


# sigmoid function
def sigmoid(x):
    return 1 / (1 + np.exp(-x))


# logistic regression model
class LogisticRegression:
    def __init__(self, lr=0.01, num_iters=1000):
        self.lr = lr
        self.num_iters = num_iters
        self.w = None
        self.b = None

    # training
    def fit(self, X, y):
        n_samples, n_features = X.shape
        # initialize parameters w and b
        self.w = np.zeros(n_features)
        self.b = 0.0
        # full-batch gradient descent on the mean cross-entropy loss
        for _ in range(self.num_iters):
            z = np.dot(X, self.w) + self.b
            h = sigmoid(z)
            dw = (1 / n_samples) * np.dot(X.T, (h - y))
            db = (1 / n_samples) * np.sum(h - y)
            self.w -= self.lr * dw
            self.b -= self.lr * db

    # prediction: round P(y=1|x) to a 0/1 label
    def predict(self, X):
        z = np.dot(X, self.w) + self.b
        h = sigmoid(z)
        y_pred = np.round(h)
        return y_pred
```
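In `fit`, `dw = X.T (h - y) / n` and `db = mean(h - y)` are the gradients of the mean binary cross-entropy with respect to `w` and `b`. A quick finite-difference sketch that checks this claim on random data; `mean_bce` and the data are illustrative, not part of the original code:

```python
import numpy as np


def sigmoid(x):
    return 1 / (1 + np.exp(-x))


def mean_bce(w, b, X, y):
    # mean binary cross-entropy of the logistic model sigmoid(Xw + b)
    h = sigmoid(X @ w + b)
    return -np.mean(y * np.log(h) + (1 - y) * np.log(1 - h))


rng = np.random.default_rng(0)
X = rng.normal(size=(20, 3))
y = (rng.random(20) > 0.5).astype(float)
w = rng.normal(size=3)
b = 0.1

# analytic gradients, computed as in LogisticRegression.fit
h = sigmoid(X @ w + b)
dw = X.T @ (h - y) / len(y)
db = np.sum(h - y) / len(y)

# central finite differences of the loss
eps = 1e-6
num_dw = np.array([(mean_bce(w + eps * e, b, X, y) - mean_bce(w - eps * e, b, X, y)) / (2 * eps)
                   for e in np.eye(3)])
num_db = (mean_bce(w, b + eps, X, y) - mean_bce(w, b - eps, X, y)) / (2 * eps)
print(np.max(np.abs(dw - num_dw)), abs(db - num_db))
```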

Linear Regression

```python
import numpy as np
import matplotlib.pyplot as plt

x = np.array([[1, 5.56], [2, 5.70], [3, 5.91], [4, 6.40], [5, 6.80],
              [6, 7.05], [7, 8.90], [8, 8.70], [9, 9.00], [10, 9.05]])
m, n = np.shape(x)
x_data = np.ones((m, n))
x_data[:, :-1] = x[:, :-1]  # design matrix [x, 1]; the constant column gives the intercept
y_data = x[:, -1]
m, n = np.shape(x_data)
theta = np.ones(n)


def gradientDescent(n_iter, x, y, w, alpha):
    m = x.shape[0]
    x_train = x.transpose()
    for i in range(n_iter):
        pre = np.dot(x, w)
        loss = pre - y
        gradient = np.dot(x_train, loss) / m
        w = w - alpha * gradient
        # cost J(w) = 1 / (2m) * sum((Xw - y)^2)
        cost = np.sum(np.square(np.dot(x, w) - y)) / (2 * m)
        print("Iteration {}: gradient-descent loss = {}".format(i, round(cost, 2)))
    return w


result = gradientDescent(1000, x_data, y_data, theta, 0.01)
y_pre = np.dot(x_data, result)
print("Linear regression model w:", result)

plt.rc('font', size=14)
plt.scatter(x[:, 0], x[:, 1], color='b', label='Training data')
plt.plot(x[:, 0], y_pre, color='r', label='Predictions')
plt.xlabel('x')
plt.ylabel('y')
plt.title('Linear regression (gradient descent)')
plt.legend()
plt.show()
```
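Because the cost is a least-squares objective, gradient descent moves toward the closed-form solution of the normal equations X^T X w = X^T y. As a cross-check, a sketch that solves the same problem directly with `np.linalg.lstsq` on the same `[x, 1]` design matrix:

```python
import numpy as np

x = np.array([[1, 5.56], [2, 5.70], [3, 5.91], [4, 6.40], [5, 6.80],
              [6, 7.05], [7, 8.90], [8, 8.70], [9, 9.00], [10, 9.05]])
X = np.column_stack([x[:, 0], np.ones(len(x))])  # [x, 1], same layout as x_data
y = x[:, 1]

# least-squares solution of X w = y (equivalently X^T X w = X^T y)
w_exact, *_ = np.linalg.lstsq(X, y, rcond=None)
print("closed-form w (slope, intercept):", w_exact)

# the cost gradient X^T (X w - y) / m vanishes at the optimum
print("gradient at the optimum:", X.T @ (X @ w_exact - y) / len(y))
```

Comparing `w_exact` with the `result` printed by `gradientDescent` shows how far 1000 steps with `alpha = 0.01` get toward the optimum.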

K-Means

```python
import numpy as np
from matplotlib import pyplot

# %matplotlib inline  (Jupyter magic; uncomment only when running in a notebook)


class K_Means(object):
    # k: number of clusters; tolerance: allowed relative center shift, in percent;
    # max_iter: maximum number of iterations
    def __init__(self, k=2, tolerance=0.0001, max_iter=300):
        self.k_ = k
        self.tolerance_ = tolerance
        self.max_iter_ = max_iter

    def fit(self, data):
        self.centers_ = {}  # cluster index -> cluster center
        for i in range(self.k_):
            self.centers_[i] = data[i]  # the first k samples are the initial centers (no random choice)
        for _ in range(self.max_iter_):
            self.clf_ = {i: [] for i in range(self.k_)}  # cluster index -> samples in that cluster
            # assignment step: iterate over the training set
            for feature in data:
                # Euclidean distance to every center
                distances = [np.linalg.norm(feature - self.centers_[center]) for center in self.centers_]
                classification = distances.index(min(distances))  # index of the nearest center
                self.clf_[classification].append(feature)  # add the sample to that cluster
            prev_centers = dict(self.centers_)  # keep the previous centers
            # update step: each center becomes the mean of its cluster's samples
            for c in self.clf_:
                if self.clf_[c]:  # leave the center unchanged if its cluster is empty
                    self.centers_[c] = np.average(self.clf_[c], axis=0)
            # converged when every center moved less than the tolerance
            optimized = True
            for center in self.centers_:
                org_centers = prev_centers[center]
                cur_centers = self.centers_[center]
                shift = np.sum(np.abs(cur_centers - org_centers) / (np.abs(org_centers) + 1e-12)) * 100.0
                if shift > self.tolerance_:
                    optimized = False
            if optimized:
                break

    def predict(self, p_data):
        distances = [np.linalg.norm(np.asarray(p_data) - self.centers_[center]) for center in self.centers_]
        return distances.index(min(distances))


if __name__ == '__main__':
    x = np.array([[1, 2], [1.5, 1.8], [5, 8], [8, 8], [1, 0.6], [9, 11]])
    k_means = K_Means(k=2)
    k_means.fit(x)
    print(k_means.centers_)
    for center in k_means.centers_:
        pyplot.scatter(k_means.centers_[center][0], k_means.centers_[center][1], marker='*', s=350)
    for cat in k_means.clf_:
        for point in k_means.clf_[cat]:
            pyplot.scatter(point[0], point[1], c=('r' if cat == 0 else 'b'))
    new_points = [[2, 1], [6, 9]]
    for feature in new_points:
        cat = k_means.predict(feature)
        pyplot.scatter(feature[0], feature[1], c=('r' if cat == 0 else 'b'), marker='x')
    pyplot.show()
```

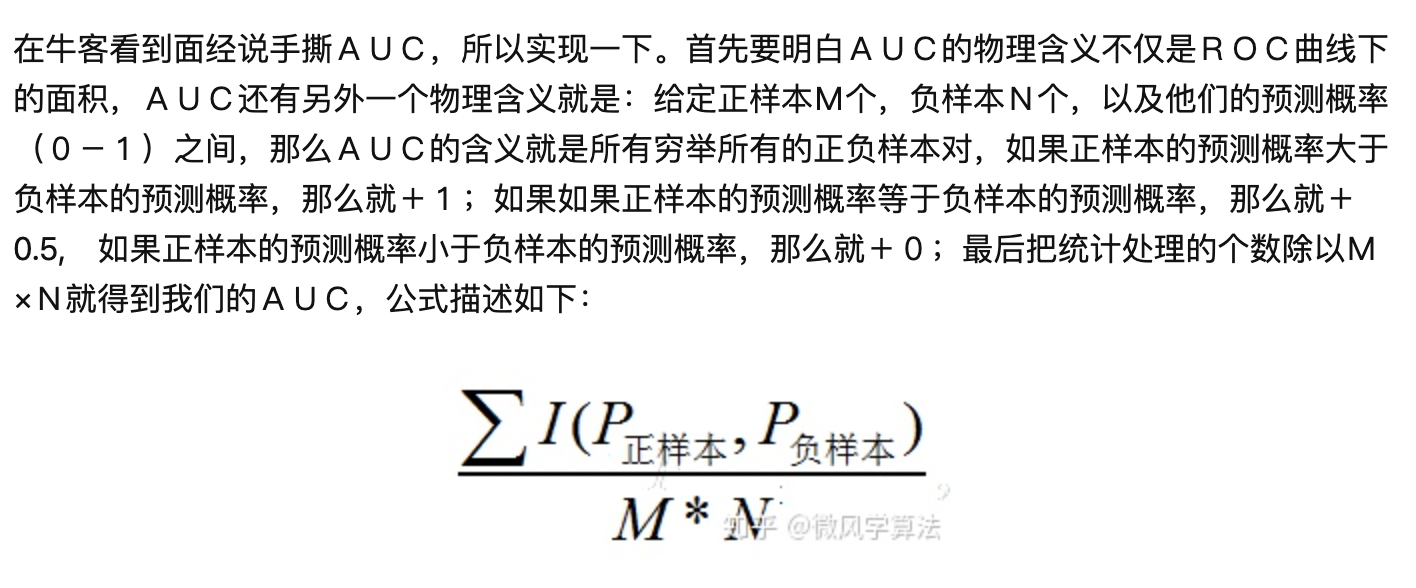
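The assignment step in `fit` loops over samples and centers in Python. With NumPy broadcasting, all sample-to-center distances can be computed at once. A sketch of the same K-means iteration under that approach (the helper name `kmeans_step` is hypothetical, and it stops when the centers stop changing, rather than using the percentage tolerance of `K_Means`):

```python
import numpy as np


def kmeans_step(data, centers):
    # distances[i, c] = ||data[i] - centers[c]||, via broadcasting (n, 1, d) - (1, k, d)
    distances = np.linalg.norm(data[:, None, :] - centers[None, :, :], axis=2)
    labels = distances.argmin(axis=1)  # nearest center for every sample
    new_centers = centers.copy()
    for c in range(len(centers)):
        members = data[labels == c]
        if len(members):  # keep the old center if the cluster is empty
            new_centers[c] = members.mean(axis=0)
    return labels, new_centers


x = np.array([[1, 2], [1.5, 1.8], [5, 8], [8, 8], [1, 0.6], [9, 11]])
centers = x[:2].astype(float)  # first k samples as initial centers, as in K_Means.fit
for _ in range(300):
    labels, new_centers = kmeans_step(x, centers)
    if np.allclose(new_centers, centers):
        break
    centers = new_centers
print(labels, centers)
```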

AUC

- AUC by comparing every positive/negative pair (O(n^2))

```python
from sklearn.metrics import roc_curve, auc


def AUC(label, pre):
    """
    Requires Python 3 (true division in the return statement).
    """
    # indices of the positive and negative samples, used to look up their scores
    pos = [i for i in range(len(label)) if label[i] == 1]
    neg = [i for i in range(len(label)) if label[i] == 0]
    auc_sum = 0
    for i in pos:
        for j in neg:
            if pre[i] > pre[j]:
                auc_sum += 1
            elif pre[i] == pre[j]:
                auc_sum += 0.5  # a tie counts as half a correctly ordered pair
    return auc_sum / (len(pos) * len(neg))


if __name__ == '__main__':
    label = [1, 0, 0, 0, 1, 0, 1, 1]
    pre = [0.9, 0.8, 0.3, 0.1, 0.4, 0.9, 0.66, 0.7]
    print(AUC(label, pre))
    fpr, tpr, th = roc_curve(label, pre, pos_label=1)
    print('sklearn', auc(fpr, tpr))
```

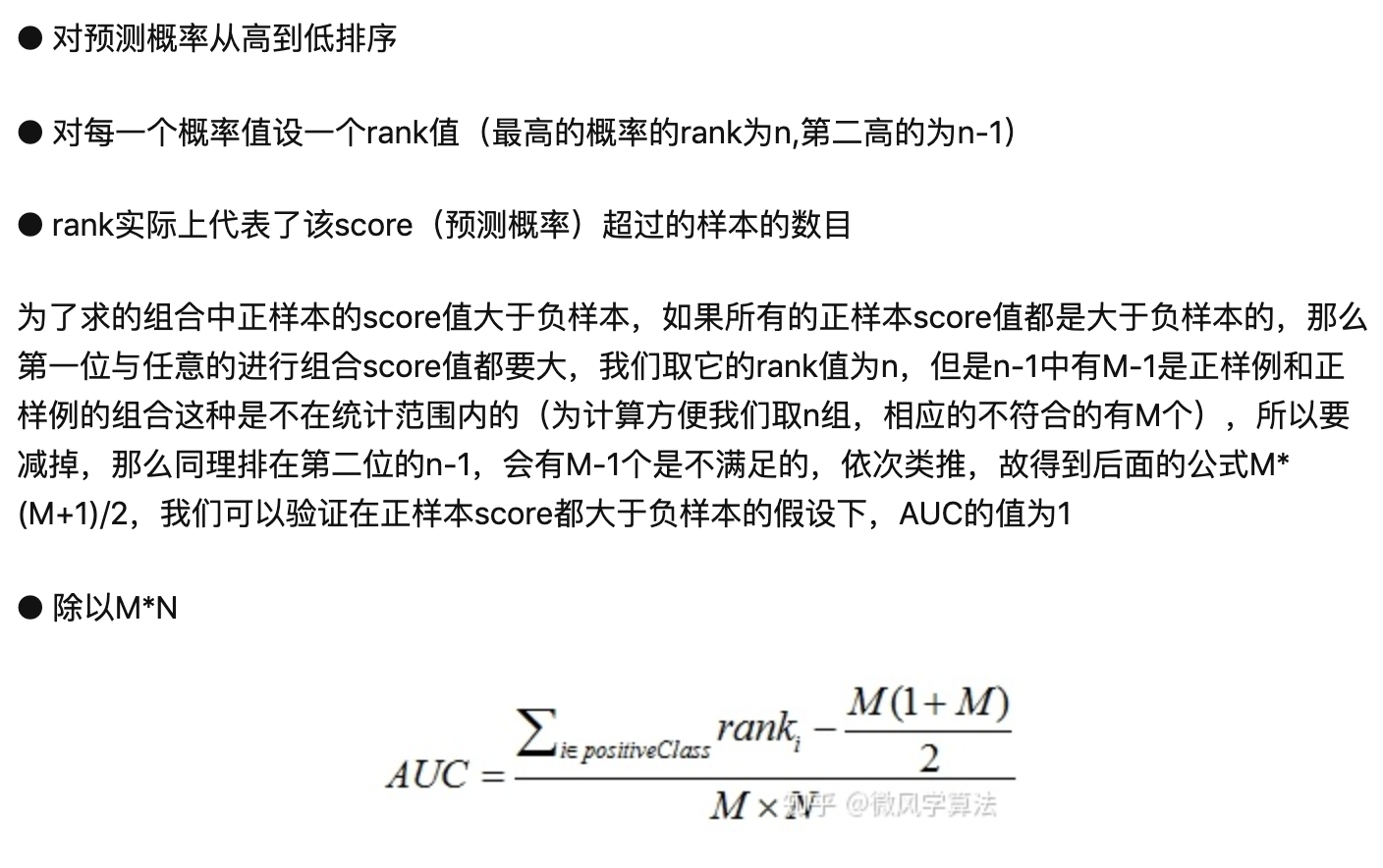
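The double loop in `AUC` computes the fraction of (positive, negative) pairs in which the positive sample scores higher, with ties counting one half (the Mann-Whitney statistic). The same O(P x N) comparison can be sketched with NumPy broadcasting; `pairwise_auc` is a hypothetical name:

```python
import numpy as np


def pairwise_auc(label, pre):
    label = np.asarray(label)
    pre = np.asarray(pre, dtype=float)
    pos = pre[label == 1][:, None]  # shape (P, 1)
    neg = pre[label == 0][None, :]  # shape (1, N)
    # 1 for each correctly ordered pair, 0.5 for each tie, averaged over all P * N pairs
    return np.mean((pos > neg) + 0.5 * (pos == neg))


label = [1, 0, 0, 0, 1, 0, 1, 1]
pre = [0.9, 0.8, 0.3, 0.1, 0.4, 0.9, 0.66, 0.7]
print(pairwise_auc(label, pre))  # 0.59375 (9.5 of the 16 pairs)
```

The broadcast comparison builds a P x N matrix, so memory grows with the product of the class sizes; for large inputs the rank-based approach avoids that.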

- AUC from the rank formula (O(n log n))

```python
# AUC via the rank formula
import numpy as np
from scipy.stats import rankdata
from sklearn.metrics import roc_auc_score


def calc_auc(y_labels, y_scores):
    # 1-based ranks of the scores in ascending order; tied scores get their average rank
    ranks = rankdata(y_scores)
    pos_cnt = np.sum(y_labels == 1)
    neg_cnt = np.sum(y_labels == 0)
    # AUC = (sum of positive ranks - P(P+1)/2) / (P * N)
    auc = (np.sum(ranks[y_labels == 1]) - pos_cnt * (pos_cnt + 1) / 2) / (pos_cnt * neg_cnt)
    return auc


def get_score():
    # randomly generate 100 labels and scores
    y_labels = np.random.choice([0, 1], size=100)
    y_scores = np.random.random(100)
    return y_labels, y_scores


if __name__ == '__main__':
    y_labels, y_scores = get_score()
    # AUC from sklearn, for comparison with the hand-written version
    print('sklearn AUC:', roc_auc_score(y_labels, y_scores))
    print('rank AUC:', calc_auc(y_labels, y_scores))
```
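The rank formula needs average ranks when scores tie (like the two 0.9 scores in the pairwise example): giving every tied sample the mean of the ranks it spans reproduces the 0.5 credit that the pairwise version gives to a tie. A NumPy-only sketch of average ranking, with the hypothetical helper names `average_ranks` and `rank_auc`:

```python
import numpy as np


def average_ranks(scores):
    # 1-based ascending ranks; tied scores share the mean of the ranks they occupy
    scores = np.asarray(scores, dtype=float)
    order = np.argsort(scores, kind='mergesort')
    sorted_scores = scores[order]
    ranks = np.empty(len(scores))
    i = 0
    while i < len(scores):
        j = i
        while j + 1 < len(scores) and sorted_scores[j + 1] == sorted_scores[i]:
            j += 1
        ranks[order[i:j + 1]] = (i + j) / 2 + 1  # mean of ranks i+1 .. j+1
        i = j + 1
    return ranks


def rank_auc(label, pre):
    label = np.asarray(label)
    ranks = average_ranks(pre)
    pos_cnt = np.sum(label == 1)
    neg_cnt = np.sum(label == 0)
    return (ranks[label == 1].sum() - pos_cnt * (pos_cnt + 1) / 2) / (pos_cnt * neg_cnt)


label = [1, 0, 0, 0, 1, 0, 1, 1]
pre = [0.9, 0.8, 0.3, 0.1, 0.4, 0.9, 0.66, 0.7]
print(average_ranks(pre))
print(rank_auc(label, pre))
```

Here the positives hold ranks 7.5, 3, 4 and 5, so the formula gives (19.5 - 10) / 16, the same value as counting pairs.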

- AUC from score histograms (approximate; scores that fall into the same bin count as ties)

```python
import numpy as np
from sklearn.metrics import roc_curve
from sklearn.metrics import auc


# --- hand-written version; scores are assumed to lie in [0, 1]
def auc_calculate(labels, preds, n_bins=100):
    positive_len = sum(labels)
    negative_len = len(labels) - positive_len
    total_case = positive_len * negative_len
    pos_histogram = [0 for _ in range(n_bins)]
    neg_histogram = [0 for _ in range(n_bins)]
    bin_width = 1.0 / n_bins
    for i in range(len(labels)):
        nth_bin = min(int(preds[i] / bin_width), n_bins - 1)  # a score of exactly 1.0 goes to the last bin
        if labels[i] == 1:
            pos_histogram[nth_bin] += 1
        else:
            neg_histogram[nth_bin] += 1
    accumulated_neg = 0
    satisfied_pair = 0
    for i in range(n_bins):
        # positives in bin i beat every negative in lower bins and tie with the negatives in bin i
        satisfied_pair += (pos_histogram[i] * accumulated_neg + pos_histogram[i] * neg_histogram[i] * 0.5)
        accumulated_neg += neg_histogram[i]
    return satisfied_pair / float(total_case)


if __name__ == '__main__':
    y = np.array([1, 0, 0, 0, 1, 0, 1, 0])
    pred = np.array([0.9, 0.8, 0.3, 0.1, 0.4, 0.9, 0.66, 0.7])
    fpr, tpr, thresholds = roc_curve(y, pred, pos_label=1)
    print("-----sklearn:", auc(fpr, tpr))
    print("-----py script:", auc_calculate(y, pred))
```

CrossEntropy / Softmax

```python
import numpy as np


def cross_entropy(y, y_hat, eps=1e-12):
    # binary cross-entropy summed over all samples; y_hat is clipped away from 0 and 1
    # so that log() stays finite
    assert y.shape == y_hat.shape
    y_hat = np.clip(y_hat, eps, 1 - eps)
    res = -np.sum(y * np.log(y_hat) + (1 - y) * np.log(1 - y_hat))
    return round(res, 3)


def softmax(y):
    # subtract the row max first for numerical stability (softmax is shift-invariant)
    y_shift = y - np.max(y, axis=1, keepdims=True)
    y_exp = np.exp(y_shift)
    y_exp_sum = np.sum(y_exp, axis=1, keepdims=True)
    return y_exp / y_exp_sum


if __name__ == "__main__":
    y = np.array([1, 0, 0, 1]).reshape(-1, 1)
    y_hat = np.array([1, 0.4, 0.5, 0.1]).reshape(-1, 1)
    print(cross_entropy(y, y_hat))
    # y = np.array([[1, 2, 3, 4], [1, 3, 4, 5], [3, 4, 5, 6]])
    # print(softmax(y))
```
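`cross_entropy` above is the binary form. For multi-class outputs, `softmax` is usually paired with the categorical cross-entropy -sum_k y_k log p_k over one-hot labels; with integer class labels this reduces to minus the log-probability of the true class. A short sketch of that pairing (`softmax_cross_entropy` is a hypothetical name), which also shows why the row-max shift matters: without it, `np.exp(1000.0)` would overflow to inf.

```python
import numpy as np


def softmax(y):
    # same as above: shift by the row max, exponentiate, normalize each row
    y_shift = y - np.max(y, axis=1, keepdims=True)
    y_exp = np.exp(y_shift)
    return y_exp / np.sum(y_exp, axis=1, keepdims=True)


def softmax_cross_entropy(logits, labels, eps=1e-12):
    # mean categorical cross-entropy; labels are integer class indices
    p = softmax(logits)
    picked = p[np.arange(len(labels)), labels]  # probability assigned to the true class
    return -np.mean(np.log(np.clip(picked, eps, 1.0)))


logits = np.array([[1.0, 2.0, 3.0, 4.0],
                   [1000.0, 1000.0, 0.0, 0.0]])  # the second row would overflow without the shift
labels = np.array([3, 0])
print(softmax(logits))
print(softmax_cross_entropy(logits, labels))
```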