An assignment I was given while looking for an internship:

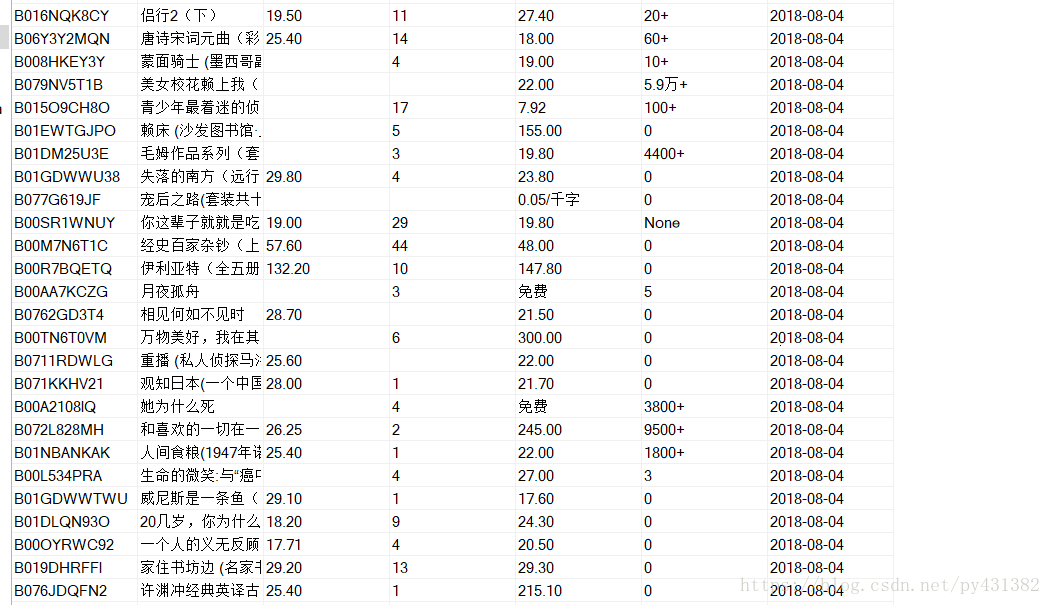

Final result:

The implementation has two parts: collecting the book IDs (ASINs), then scraping the detailed data for each book.

1:

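import requests
import re
from pyquery import PyQuery as pq

# Fetch one proxy address from the local proxy pool
def get_proxy():
    # .text decodes the response body, replacing the str(bytes)[2:-1] slicing trick
    return requests.get("http://127.0.0.1:5010/get/").text.strip()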

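proxy = None  # proxy currently in use; None means a direct connection

# requests call that goes through the current proxy and switches proxy on failure
def url_open(url, retries=5):
    global proxy
    header = {'User-Agent': 'Mozilla/5.0 ', 'X-Requested-With': 'XMLHttpRequest'}
    if retries <= 0:  # give up instead of recursing forever
        print('Too many retries, giving up:', url)
        return None
    try:
        if proxy:
            print('Using proxy', proxy)
            # The target URLs are https, so the proxy has to be registered for both schemes
            proxies = {'http': 'http://' + proxy, 'https': 'http://' + proxy}
            #print(proxies)
            response = requests.get(url=url, headers=header, proxies=proxies, timeout=10)
        else:
            response = requests.get(url=url, headers=header, timeout=10)
        if response.status_code == 200:
            return response.text
        if response.status_code == 503:  # blocked: fetch a new proxy and try again
            print('503')
            proxy = get_proxy()
            if proxy:
                return url_open(url, retries - 1)
            print('Failed to get a proxy')
        return None
    except Exception:
        proxy = get_proxy()
        return url_open(url, retries - 1)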
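The retry logic of url_open can also be written as a loop instead of recursion. What follows is only a minimal sketch, not the code used for the assignment: `fetch_with_rotation`, `fetch` and `next_proxy` are hypothetical names, and the network call is passed in as a function so the control flow can be tried without Amazon or a proxy pool.

```python
from typing import Callable, Optional, Tuple

# Hypothetical signatures used for illustration only:
#   fetch(url, proxy) -> (status_code, body)
#   next_proxy()      -> "host:port", or None when no proxy is available
Fetch = Callable[[str, Optional[str]], Tuple[int, str]]


def fetch_with_rotation(
    url: str,
    fetch: Fetch,
    next_proxy: Callable[[], Optional[str]],
    max_attempts: int = 5,
) -> Optional[str]:
    """Try up to max_attempts times, switching proxy after a 503 or an exception."""
    proxy = None  # the first attempt goes out without a proxy
    for _ in range(max_attempts):
        try:
            status, body = fetch(url, proxy)
        except Exception:
            status, body = None, ""  # treat a network error like a blocked request
        if status == 200:
            return body
        if status is not None and status != 503:
            return None  # other status codes are not retried, as in url_open
        proxy = next_proxy()
        if not proxy:
            return None  # no proxy left to try
    return None
```

In the script above, `fetch` would wrap `requests.get` and `next_proxy` would be `get_proxy`. The bounded loop guarantees that a dead proxy pool cannot cause unlimited recursion.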

########### Extract the entry links of the literature subcategories ################

# HTML fragment copied from the category sidebar; triple quotes let the string span several lines
html = '''href="/s/ref=lp_144180071_nr_n_0?fst=as%3Aoff&rh=n%3A116087071%2Cn%3A%21116088071%2Cn%3A116169071%2Cn%3A144180071%2Cn%3A144201071&bbn=144180071&ie=UTF8&qid=1533176532&rnid=144180071"><span class="a-size-small a-color-base">文学名家</span></a></span></li>
<li><span class="a-list-item"><a class="a-link-normal s-ref-text-link" href="/s/ref=lp_144180071_nr_n_1?fst=as%3Aoff&rh=n%3A116087071%2Cn%3A%21116088071%2Cn%3A116169071%2Cn%3A144180071%2Cn%3A144206071&bbn=144180071&ie=UTF8&qid=1533176532&rnid=144180071"><span class="a-size-small a-color-base">作品集</span></a></span></li>
<li><span class="a-list-item"><a class="a-link-normal s-ref-text-link" href="/s/ref=lp_144180071_nr_n_2?fst=as%3Aoff&rh=n%3A116087071%2Cn%3A%21116088071%2Cn%3A116169071%2Cn%3A144180071%2Cn%3A144212071&bbn=144180071&ie=UTF8&qid=1533176532&rnid=144180071"><span class="a-size-small a-color-base">散文随笔</span></a></span></li>
<li><span class="a-list-item"><a class="a-link-normal s-ref-text-link" href="/s/ref=lp_144180071_nr_n_3?fst=as%3Aoff&rh=n%3A116087071%2Cn%3A%21116088071%2Cn%3A116169071%2Cn%3A144180071%2Cn%3A144222071&bbn=144180071&ie=UTF8&qid=1533176532&rnid=144180071"><span class="a-size-small a-color-base">诗歌词曲</span></a></span></li>
<li><span class="a-list-item"><a class="a-link-normal s-ref-text-link" href="/s/ref=lp_144180071_nr_n_4?fst=as%3Aoff&rh=n%3A116087071%2Cn%3A%21116088071%2Cn%3A116169071%2Cn%3A144180071%2Cn%3A144235071&bbn=144180071&ie=UTF8&qid=1533176532&rnid=144180071"><span class="a-size-small a-color-base">民间文学</span></a></span></li>
<li><span class="a-list-item"><a class="a-link-normal s-ref-text-link" href="/s/ref=lp_144180071_nr_n_5?fst=as%3Aoff&rh=n%3A116087071%2Cn%3A%21116088071%2Cn%3A116169071%2Cn%3A144180071%2Cn%3A144228071&bbn=144180071&ie=UTF8&qid=1533176532&rnid=144180071"><span class="a-size-small a-color-base">纪实文学</span></a></span></li>
<li><span class="a-list-item"><a class="a-link-normal s-ref-text-link" href="/s/ref=lp_144180071_nr_n_6?fst=as%3Aoff&rh=n%3A116087071%2Cn%3A%21116088071%2Cn%3A116169071%2Cn%3A144180071%2Cn%3A144218071&bbn=144180071&ie=UTF8&qid=1533176532&rnid=144180071"><span class="a-size-small a-color-base">影视文学</span></a></span></li>
<li><span class="a-list-item"><a class="a-link-normal s-ref-text-link" href="/s/ref=lp_144180071_nr_n_7?fst=as%3Aoff&rh=n%3A116087071%2Cn%3A%21116088071%2Cn%3A116169071%2Cn%3A144180071%2Cn%3A144234071&bbn=144180071&ie=UTF8&qid=1533176532&rnid=144180071"><span class="a-size-small a-color-base">戏剧与曲艺</span></a></span></li>
<li><span class="a-list-item"><a class="a-link-normal s-ref-text-link" href="/s/ref=lp_144180071_nr_n_8?fst=as%3Aoff&rh=n%3A116087071%2Cn%3A%21116088071%2Cn%3A116169071%2Cn%3A144180071%2Cn%3A144200071&bbn=144180071&ie=UTF8&qid=1533176532&rnid=144180071"><span class="a-size-small a-color-base">文学史</span></a></span></li>
<li><span class="a-list-item"><a class="a-link-normal s-ref-text-link" href="/s/ref=lp_144180071_nr_n_9?fst=as%3Aoff&rh=n%3A116087071%2Cn%3A%21116088071%2Cn%3A116169071%2Cn%3A144180071%2Cn%3A144181071&bbn=144180071&ie=UTF8&qid=1533176532&rnid=144180071"><span class="a-size-small a-color-base">文学理论</span></a></span></li>
<li><span class="a-list-item"><a class="a-link-normal s-ref-text-link" href="/s/ref=lp_144180071_nr_n_10?fst=as%3Aoff&rh=n%3A116087071%2Cn%3A%21116088071%2Cn%3A116169071%2Cn%3A144180071%2Cn%3A144187071&bbn=144180071&ie=UTF8&qid=1533176532&rnid=144180071"><span class="a-size-small a-color-base">文学评论与鉴赏</span></a></span></li>
<li><span class="a-list-item"><a class="a-link-normal s-ref-text-link" href="/s/ref=lp_144180071_nr_n_11?fst=as%3Aoff&rh=n%3A116087071%2Cn%3A%21116088071%2Cn%3A116169071%2Cn%3A144180071%2Cn%3A144242071&bbn=144180071&ie=UTF8&qid=1533176532&rnid=144180071"><span class="a-size-small a-color-base">期刊杂志</span></a></span></li>
<li><span class="a-list-item"><a class="a-link-normal s-ref-text-link" href="/s/ref=lp_144180071_nr_n_12?fst=as%3Aoff&rh=n%3A116087071%2Cn%3A%21116088071%2Cn%3A116169071%2Cn%3A144180071%2Cn%3A144243071&bbn=144180071&ie=UTF8&qid=1533176532&rnid=144180071"><span class="a-size-small a-color-base">文学作品导读'''

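doc = pq(html)
pages_list = []
# Each rh=... value identifies one subcategory; build its listing URL from it
for each in re.findall('rh=(.*?)&', html):
    pages_list.append('https://www.amazon.cn/s/rh=' + each)

count = 0  # used as the txt file name
asin_re = re.compile('data-asin="(.*?)" class')  # regex that extracts book_asin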
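To see what the two regular expressions pick out, here is a small self-contained sketch. The HTML snippets and IDs in it are made up for illustration (they only imitate the shape of the real markup), and `category_urls` and `extract_asins` are hypothetical helper names.

```python
import re

# Made-up fragments that imitate the sidebar links and the search result items
sample_links = (
    '<a href="/s/ref=x_0?fst=as%3Aoff&rh=n%3A1%2Cn%3A2&bbn=1">A</a>'
    '<a href="/s/ref=x_1?fst=as%3Aoff&rh=n%3A1%2Cn%3A3&bbn=1">B</a>'
)
sample_results = (
    '<li data-asin="B00ABC1234" class="s-result-item">...</li>'
    '<li data-asin="B00XYZ5678" class="s-result-item">...</li>'
)


def category_urls(html, base='https://www.amazon.cn/s/rh='):
    """Build one listing URL per rh=... value found in the HTML."""
    # The lazy group stops at the first '&' after 'rh='
    return [base + rh for rh in re.findall(r'rh=(.*?)&', html)]


def extract_asins(html):
    """Return every data-asin attribute value that is followed by a class attribute."""
    return re.findall(r'data-asin="(.*?)" class', html)


print(category_urls(sample_links))
print(extract_asins(sample_results))
```

Both patterns depend on attribute order in the page source, so they would need adjusting if the markup changes.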

for page_url in pages_list:
    print(page_url)
    html = url_open(page_url)
    # Read how many pages this category has; fall back to 400 pages if that cannot be parsed
    page_text = pq(html)('#pagn > span.pagnDisabled').text() if html else ''
    page_count = int(page_text) if page_text.isdigit() else 400
    count += 1
    with open(str(count) + '.txt', 'a', encoding='utf-8') as f:  # create the txt file
        err_count = 0
        for i in range(1, page_count + 1):
            print('Scraping book_asin from page %d' % i)
            url = page_url + '&page=' + str(i)
            html = url_open(url)
            print(url)
            data_asin = re.findall(asin_re, html) if html is not None else []
            print(data_asin)
            if data_asin:
                err_count = 0
                for each in data_asin:  # write to the file
                    f.write(each)
                    f.write('\n')
            else:
                err_count += 1
                # If the page count was parsed wrongly we end up requesting empty pages;
                # after 20 misses in a row, treat the category as finished and leave the loop
                if err_count >= 20:
                    break
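The stopping rule of the loop above (give up on a category after 20 empty pages in a row) can be isolated and tested without any network access. This is a sketch under assumptions: `crawl_pages` and `fetch_page` are hypothetical names, and `fetch_page(page)` stands for "download page N and return the list of ASINs found, or None on failure".

```python
def crawl_pages(fetch_page, max_pages, max_misses=20):
    """Collect items page by page; stop after max_misses consecutive empty pages."""
    collected = []
    misses = 0
    for page in range(1, max_pages + 1):
        items = fetch_page(page) or []  # None (failed request) counts as an empty page
        if items:
            collected.extend(items)
            misses = 0  # a successful page resets the counter
        else:
            misses += 1
            if misses >= max_misses:
                break  # assume the category has no more pages
    return collected
```

The important detail is that the counter is checked in the branch where it grows. If it is reset and checked in the same branch, the limit can never be reached.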

2:

import requests
from fake_useragent import UserAgent
import pymysql
from multiprocessing import Process, Queue, Lock
from pyquery import PyQuery as pq
import time
import random

ua = UserAgent()  # instantiated once; used below to generate random browser User-Agent headers

# # Used only for debugging and troubleshooting
# def get(url, i=2):
#     headers = {
#         'Accept': 'text/html,*/*',
#         'Accept-Encoding': 'gzip, deflate, br',
#         'Accept-Language': 'zh-CN,zh;q=0.9',
#         'Connection': 'keep-alive',
#         'Host': 'www.amazon.cn',
#         'Referer': 'https://www.amazon.cn/gp/aw/s/ref=is_pn_1?rh=n%3A658390051%2Cn%3A%21658391051%2Cn%3A658394051%2Cn%3A658509051&page=1',
#         'User-Agent': ua.random,
#         'X-Requested-With': 'XMLHttpRequest'
#     }
#     if i > 0:
#         try:
#             response = requests.get(url=url, headers=headers, timeout=1)
#             print(response.status_code)
#             response.encoding = 'utf-8'
#             return response.text
#         except:
#             return get(url, i=i - 1)
#     else:
#         return None

# Fetch one proxy address from the local proxy pool
def get_proxy():
    return requests.get("http://127.0.0.1:5010/get/").text.strip()

def title_parse(title):  # book titles scraped from Amazon are too long, so keep only the part before the first "("
    return title.split("(", 1)[0]

def price_parse(price):  # clean up the Amazon price: keep only the part before the first "¥"
    return price.split("¥", 1)[0]

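proxy = None  # proxy currently in use; None means a direct connection

# Request function for Amazon
def url_open1(url, retries=5):
    global proxy
    header = {
        'Accept': 'text/html,*/*',
        'Accept-Encoding': 'gzip, deflate, br',
        'Accept-Language': 'zh-CN,zh;q=0.9',
        'Connection': 'keep-alive',
        'Host': 'www.amazon.cn',
        'Referer': 'https://www.amazon.cn/',
        'User-Agent': ua.random,
        'X-Requested-With': 'XMLHttpRequest'
    }
    if retries <= 0:  # give up instead of recursing forever
        print('Too many retries, giving up:', url)
        return None
    try:
        if proxy:
            print('Using proxy', proxy)
            # The target URLs are https, so the proxy has to be registered for both schemes
            proxies = {'http': 'http://' + proxy, 'https': 'http://' + proxy}
            #print(proxies)
            response = requests.get(url=url, headers=header, proxies=proxies, timeout=10)
        else:
            response = requests.get(url=url, headers=header, timeout=10)
        if response.status_code == 200:
            response.encoding = 'utf-8'
            return response.text
        if response.status_code == 503:  # blocked: fetch a new proxy and try again
            print('503')
            proxy = get_proxy()
            if proxy:
                return url_open1(url, retries - 1)
            print('Failed to get a proxy')
        return None
    except Exception:
        proxy = get_proxy()
        return url_open1(url, retries - 1)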

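# Request function for JD
def url_open2(url, retries=5):
    global proxy
    header = {
        'User-Agent': ua.random,
    }
    if retries <= 0:  # give up instead of recursing forever
        print('Too many retries, giving up:', url)
        return None
    try:
        if proxy:
            print('Using proxy', proxy)
            # The target URLs are https, so the proxy has to be registered for both schemes
            proxies = {'http': 'http://' + proxy, 'https': 'http://' + proxy}
            # print(proxies)
            response = requests.get(url=url, headers=header, proxies=proxies, timeout=10)
        else:
            response = requests.get(url=url, headers=header, timeout=10)
        if response.status_code == 200:
            response.encoding = 'utf-8'
            return response.text
        if response.status_code == 503:  # blocked: fetch a new proxy and try again
            print('503')
            proxy = get_proxy()
            if proxy:
                return url_open2(url, retries - 1)
            print('Failed to get a proxy')
        return None
    except Exception:
        proxy = get_proxy()
        return url_open2(url, retries - 1)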

from queue import Empty  # raised by q.get() when no item arrives in time

# The core spider: parses the Amazon and JD pages and then stores the data
def spider(q, lock):
    # MySQL connection
    conn = pymysql.connect(host='localhost', port=3306, user='root', password='******', db='amazon', charset='utf8')
    cursor = conn.cursor()
    while True:
        try:
            with lock:  # the lock is released even if get() raises
                asin = q.get(timeout=1).strip()  # strip the trailing newline
        except Empty:  # queue drained: leave the loop so the connection gets closed
            break
        url = 'https://www.amazon.cn/gp/product/{a}'.format(a=asin)
        print(url)
        html = url_open1(url)
        if html is None:  # the request sometimes returns None; skip the item instead of crashing
            continue
        doc = pq(html)
        title = doc('#ebooksProductTitle.a-size-extra-large').text()  # book title
        amazon_price = doc('a .a-size-small.a-color-price').text()[1:]  # paperback price (CNY)
        amazon_price = price_parse(amazon_price)
        #e_price = doc('#tmmSwatches > ul > li.swatchElement.selected > span > span:nth-child(4) > span > a').text()[1:-2]  # e-book price
        amazon_comments = doc('#acrCustomerReviewText.a-size-base').text()[:-5]  # number of reviews
        jd_search_title = title_parse(title)
        url = 'https://search.jd.com/Search?keyword={a}&enc=utf-8'.format(a=jd_search_title)
        html = url_open2(url)
        if html is None:
            continue
        doc = pq(html)
        jd_price = doc('#J_goodsList > ul > li:nth-child(1) > div > div.p-price > strong > i').text()  # price
        its = doc('.gl-warp.clearfix li div .p-commit strong a').items()  # the review count is a bit more awkward
        try:  # next() raises StopIteration when the generator runs out
            next(its)  # the value we need is the second item, so skip the first one
            jd_comments = next(its).text()
        except StopIteration:
            jd_comments = None
        print(amazon_comments, amazon_price, title)
        print(jd_price, jd_comments)
        date = time.strftime("%Y-%m-%d", time.localtime())  # scrape date
        # Store in MySQL; %s placeholders let the driver escape quotes that appear in titles
        cursor.execute(
            "INSERT INTO data(book_asin,title,amazon_price,amazon_comments,jd_price,jd_comments,update_date) "
            "VALUES (%s,%s,%s,%s,%s,%s,%s)",
            (asin, title, amazon_price, amazon_comments, jd_price, jd_comments, date))
        conn.commit()
        time.sleep(random.random())  # wait 0 to 1 seconds
    conn.close()
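Book titles often contain quotes, and a query assembled with string formatting breaks on them (and is open to SQL injection). The sketch below shows the placeholder approach. It uses the standard library's sqlite3 with an in-memory database purely as a stand-in so it runs without a MySQL server; the table is a reduced, made-up version of the `data` table and `save_row` is a hypothetical helper. With pymysql the idea is the same, but the placeholder is `%s` instead of `?`.

```python
import sqlite3


def save_row(conn, row):
    """Insert one scraped record using placeholders instead of string formatting."""
    conn.execute(
        "INSERT INTO data(book_asin, title, amazon_price, jd_price) VALUES (?, ?, ?, ?)",
        row,
    )
    conn.commit()


conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE data(book_asin TEXT, title TEXT, amazon_price TEXT, jd_price TEXT)"
)

# The single quote in the title is passed as data, so it cannot break the statement;
# None is stored as NULL rather than as the string 'None'
save_row(conn, ("B000000000", "It's a test title", "29.80", None))
stored = conn.execute("SELECT title, jd_price FROM data").fetchone()
print(stored)
```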

if __name__ == '__main__':
    q = Queue()  # multiple processes; the data volume is small, so a queue is enough for communication
    lock = Lock()
    # asin.txt is assumed to hold the ASINs collected in part 1, one per line
    with open('asin.txt', 'r') as f:
        AsinList = f.readlines()
    for each in AsinList[6000:]:  # kept getting 503s; adjust this slice to avoid scraping the same ASINs again
        q.put(each)
    # Several processes are very fast at first but get blocked after a short while,
    # so only one worker is started here (up to 8 were tried); change the range to run more
    workers = [Process(target=spider, args=(q, lock)) for _ in range(1)]
    for p in workers:
        p.start()
    for p in workers:
        p.join()
