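Table of contents

- Deep Learning Frameworks in Julia

- Flux

- Performance Comparison

- LeNet5

- Evaluation Results

- Test results on GPU (CUDA 11)

- Test results on GPU (CUDA 10)

- CPU, single thread

- CPU, multithreaded (18 threads)

- Matlab implementation

- PyTorch implementation

- Flux implementation

- Reading the MNIST dataset

- References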

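Deep Learning Frameworks in Julia

Recent versions of MATLAB come with the Deep Learning Toolbox; for an introductory tutorial, see my blog post "A First Look at Deep Learning in Matlab" (Matlab深度学习上手初探). The most popular deep learning frameworks for Python are PyTorch and TensorFlow; their environment setup is described here. Julia currently has three commonly used deep learning frameworks, of which Flux has the most stars: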

Flux is a 100% pure-Julia stack and provides lightweight abstractions on top of Julia’s native GPU and AD support. It makes the easy things easy while remaining fully hackable.

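A brief introduction follows.

Later testing showed that the batch size has a large effect on efficiency. Apart from Flux not supporting multithreaded CPU execution, Flux is fairly efficient overall. No further experimental results will be added.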

Flux

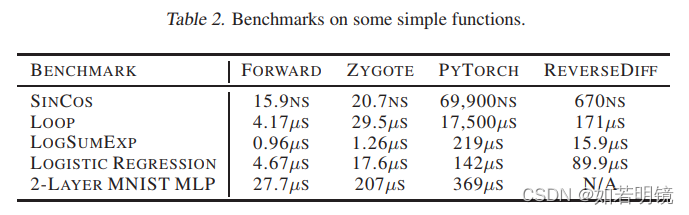

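Flux uses Zygote for automatic differentiation. The comparison results given in the paper are as follows.

Flux’s Model Zoo contains implementations of common models.

Performance Comparison

This section compares the training and testing performance of deep learning models in MATLAB, Python and Julia. Because the latest version of Flux recommends CUDA 11.2, the GPU tests were run under both CUDA 10.2 and CUDA 11.3.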

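- OS: Ubuntu 16.04 LTS

- CUDA: 10.2 / 11.3

- cuDNN: 8.2.0.53 / 8.4.0.27

- Matlab: 2020a

- PyTorch: 1.10+cu10.2 / 1.11.0 (version 1.10 is used only for the CUDA 10 GPU tests; all other tests use 1.11)

- Flux: 0.13.1

- Hardware: 2699v3 CPU (single core / multiple cores), 1080 Ti GPU

In CPU mode, Matlab sets the number of threads with maxNumCompThreads(10) (if unset, it defaults to the number of CPU cores). PyTorch sets it with torch.set_num_threads() (likewise defaulting to the number of CPU cores if unset). Flux does not support this yet.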

LeNet5

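The model used for the comparison is the relatively simple LeNet5, which was originally designed for classifying handwritten digits (0~9). An introduction to the dataset and download links are available here. The network structure is shown below. Note that the images in the dataset are $28\times 28$, not the $32\times 32$ shown in the figure below.

The network contains three convolutional layers (C1, C3, C5), two pooling layers (S2, S4, also called subsampling layers), one fully connected layer (F6) and one output layer (OUTPUT). The parameters are listed below. Convolutional layers are given as output channels × input channels × kernel height × kernel width, stride, padding (for how the convolution output size is computed, see my blog post "Convolution in Deep Neural Networks"). Pooling layers are given as window height × window width, stride, padding. Fully connected layers are given as output neurons × input neurons.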

| Layer | Parameters | Input size | Output size | Notes |
|---|---|---|---|---|
| C1 | $6\times 1\times 5\times 5$, 1, 0 | $N\times 1\times 32\times 32$ | $N\times 6\times 28\times 28$ | $\frac{32+2\times 0-5}{1}+1=28$ |
| S2 | $2\times 2$, 2, 0 | $N\times 6\times 28\times 28$ | $N\times 6\times 14\times 14$ | $\frac{28+2\times 0-2}{2}+1=14$ |
| C3 | $16\times 6\times 5\times 5$, 1, 0 | $N\times 6\times 14\times 14$ | $N\times 16\times 10\times 10$ | $\frac{14+2\times 0-5}{1}+1=10$ |
| S4 | $2\times 2$, 2, 0 | $N\times 16\times 10\times 10$ | $N\times 16\times 5\times 5$ | $\frac{10+2\times 0-2}{2}+1=5$ |
| C5 | $120\times 16\times 5\times 5$, 1, 0 | $N\times 16\times 5\times 5$ | $N\times 120\times 1\times 1$ | Can be implemented as a fully connected layer |
| F6 | $84\times 120$ | $N\times 120$ | $N\times 84$ | - |
| OUT | $10\times 84$ | $N\times 84$ | $N\times 10$ | 10 classes (0~9) |
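The output sizes in the table follow the usual rule $\lfloor (W + 2P - K)/S \rfloor + 1$ for input size $W$, kernel size $K$, stride $S$ and padding $P$. The following is a minimal sketch of that arithmetic; the helper names `out_size` and `trace` are made up for this illustration and do not come from any library.

```python
def out_size(size, kernel, stride=1, padding=0):
    """Spatial output size of a convolution or pooling layer."""
    return (size + 2 * padding - kernel) // stride + 1


def trace(size, layers):
    """Return the spatial size after each (name, kernel, stride, padding) layer."""
    sizes = []
    for name, kernel, stride, padding in layers:
        size = out_size(size, kernel, stride, padding)
        sizes.append((name, size))
    return sizes


# Convolution and pooling layers of the original LeNet5, as listed in the table.
LENET5 = [("C1", 5, 1, 0), ("S2", 2, 2, 0), ("C3", 5, 1, 0),
          ("S4", 2, 2, 0), ("C5", 5, 1, 0)]

if __name__ == "__main__":
    print(trace(32, LENET5))      # 32x32 input of the original network
    print(trace(28, LENET5[:4]))  # 28x28 MNIST input, up to and including S4
```

With a 28×28 input the feature map after S4 is 4×4 rather than 5×5, which is consistent with the 4×4 C5 kernel used in the implementations later in this article.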

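The LeNet5 commonly used today differs slightly from the original, mainly in the activation functions: the original output layer used radial basis functions (RBF), whereas Softmax is now the usual choice, and ReLU is commonly used after the convolutions.

Because the original LeNet5 involves relatively little computation and cannot fully exploit a GPU, the output channels of C1, C3 and C5 are changed here from 6, 16, 120 to 32, 64, 128. Since the MNIST images are 28×28, the code below uses a 4×4 kernel for C5 so that its output is 1×1. The experiment code and detailed results follow.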
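To give a rough sense of how much larger the widened network is, the sketch below counts trainable parameters (weights plus biases) for both channel configurations. It assumes the layer layout of the implementations in this article (28×28 single-channel input, 5×5 kernels for C1 and C3, a 4×4 kernel for C5, F6 with 84 units); the function names are hypothetical.

```python
def conv_params(c_out, c_in, kh, kw):
    """Weights plus biases of a 2-D convolution."""
    return c_out * c_in * kh * kw + c_out


def dense_params(n_out, n_in):
    """Weights plus biases of a fully connected layer."""
    return n_out * n_in + n_out


def lenet_params(c1, c3, c5, k5=4, f6=84, nclass=10):
    """Parameter count of a LeNet-style network on 28x28 single-channel input."""
    return (conv_params(c1, 1, 5, 5)
            + conv_params(c3, c1, 5, 5)
            + conv_params(c5, c3, k5, k5)
            + dense_params(f6, c5)
            + dense_params(nclass, f6))


original = lenet_params(6, 16, 120)
widened = lenet_params(32, 64, 128)
print(original, widened, round(widened / original, 2))
```

Under these assumptions the widened network has about 195 thousand parameters against about 44 thousand for the original channel counts.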

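- Training epochs: 100

- Batch size: 600

- Validation once per training epoch (that is, after every 60000/600 = 100 parameter updates)

- Learning rate: initially 0.001, decayed every 30 epochs by a factor of 0.1

- No weight regularization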
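The settings above can be written out as a small sketch. Note that in the listings later in this article the schedule is switched off (`'LearnRateSchedule', 'none'` in Matlab, a commented-out `Step` in Flux, no scheduler in PyTorch) and the Matlab logs report a constant base learning rate of 0.0010, so the function below only illustrates what the described step decay would look like. The function names are illustrative.

```python
def iterations_per_epoch(num_samples, batch_size):
    """Number of parameter updates in one pass over the data (ceiling division)."""
    return -(-num_samples // batch_size)


def step_lr(epoch, base_lr=1e-3, drop_factor=0.1, drop_period=30):
    """Learning rate for a 0-based epoch under piecewise-constant (step) decay."""
    return base_lr * drop_factor ** (epoch // drop_period)


if __name__ == "__main__":
    print(iterations_per_epoch(60000, 600))
    for epoch in (0, 29, 30, 60, 99):
        print(epoch, step_lr(epoch))
```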

Evaluation Results

Test results on GPU (CUDA 11)

Matlab output

Initializing input data normalization.

|======================================================================================================================|

| Epoch | Iteration | Time Elapsed | Mini-batch | Validation | Mini-batch | Validation | Base Learning |

| | | (hh:mm:ss) | Accuracy | Accuracy | Loss | Loss | Rate |

|======================================================================================================================|

| 1 | 1 | 00:00:06 | 11.50% | 9.82% | 9.5761 | 14.3017 | 0.0010 |

| 1 | 100 | 00:00:10 | 95.00% | 95.59% | 0.1730 | 0.1381 | 0.0010 |

| 2 | 200 | 00:00:13 | 97.50% | 97.43% | 0.0882 | 0.0800 | 0.0010 |

| 3 | 300 | 00:00:16 | 97.33% | 98.03% | 0.1136 | 0.0626 | 0.0010 |

| 4 | 400 | 00:00:18 | 99.00% | 98.43% | 0.0290 | 0.0502 | 0.0010 |

| 5 | 500 | 00:00:21 | 99.33% | 98.37% | 0.0302 | 0.0535 | 0.0010 |

...

| 95 | 9500 | 00:04:36 | 100.00% | 98.94% | 0.0005 | 0.0824 | 0.0010 |

| 96 | 9600 | 00:04:39 | 99.50% | 98.79% | 0.0378 | 0.0858 | 0.0010 |

| 97 | 9700 | 00:04:42 | 100.00% | 99.06% | 0.0003 | 0.0794 | 0.0010 |

| 98 | 9800 | 00:04:45 | 100.00% | 98.94% | 0.0002 | 0.0840 | 0.0010 |

| 99 | 9900 | 00:04:48 | 100.00% | 98.92% | 7.0254e-05 | 0.0735 | 0.0010 |

| 100 | 10000 | 00:04:51 | 100.00% | 98.88% | 0.0011 | 0.0761 | 0.0010 |

|======================================================================================================================|

ans =

"--->Total time: 309.35"

0.9888

PyTorch output

--->Train epoch 0, loss: 0.0031, time: 1.38

--->Valid epoch 0, loss: 0.0176, accuracy: 0.6933, time: 0.38

--->Train epoch 1, loss: 0.0028, time: 0.93

--->Valid epoch 1, loss: 0.0151, accuracy: 0.9573, time: 0.34

--->Train epoch 2, loss: 0.0025, time: 0.91

--->Valid epoch 2, loss: 0.0149, accuracy: 0.9766, time: 0.35

--->Train epoch 3, loss: 0.0025, time: 0.94

--->Valid epoch 3, loss: 0.0148, accuracy: 0.9814, time: 0.38

--->Train epoch 4, loss: 0.0025, time: 1.00

--->Valid epoch 4, loss: 0.0148, accuracy: 0.9835, time: 0.35

--->Train epoch 5, loss: 0.0025, time: 1.00

--->Valid epoch 5, loss: 0.0148, accuracy: 0.9849, time: 0.36

...

--->Train epoch 95, loss: 0.0024, time: 1.01

--->Valid epoch 95, loss: 0.0147, accuracy: 0.9916, time: 0.40

--->Train epoch 96, loss: 0.0024, time: 1.02

--->Valid epoch 96, loss: 0.0147, accuracy: 0.9894, time: 0.34

--->Train epoch 97, loss: 0.0024, time: 0.99

--->Valid epoch 97, loss: 0.0147, accuracy: 0.9917, time: 0.34

--->Train epoch 98, loss: 0.0024, time: 0.98

--->Valid epoch 98, loss: 0.0147, accuracy: 0.9924, time: 0.35

--->Train epoch 99, loss: 0.0024, time: 1.06

--->Valid epoch 99, loss: 0.0147, accuracy: 0.9921, time: 0.39

--->Total time: 135.69, training time: 99.68, validation time: 35.97

Flux output

--->Train, epoch: 1, loss: 35.2755, time: 127.61

--->Valid, epoch: 1, loss: 8.3011, accuracy: 97.5300, time: 22.58

--->Train, epoch: 2, loss: 6.9521, time: 1.47

--->Valid, epoch: 2, loss: 4.6231, accuracy: 98.6100, time: 0.16

--->Train, epoch: 3, loss: 4.7919, time: 1.17

--->Valid, epoch: 3, loss: 3.7656, accuracy: 98.8400, time: 0.14

--->Train, epoch: 4, loss: 3.6395, time: 1.15

--->Valid, epoch: 4, loss: 3.3123, accuracy: 98.9400, time: 0.14

--->Train, epoch: 5, loss: 2.8881, time: 1.15

--->Valid, epoch: 5, loss: 2.9827, accuracy: 99.0700, time: 0.15

...

--->Train, epoch: 95, loss: 0.0266, time: 1.21

--->Valid, epoch: 95, loss: 4.0840, accuracy: 99.3600, time: 0.15

--->Train, epoch: 96, loss: 0.0266, time: 1.23

--->Valid, epoch: 96, loss: 4.0877, accuracy: 99.3600, time: 0.15

--->Train, epoch: 97, loss: 0.0266, time: 1.29

--->Valid, epoch: 97, loss: 4.0956, accuracy: 99.3600, time: 0.16

--->Train, epoch: 98, loss: 0.0266, time: 1.27

--->Valid, epoch: 98, loss: 4.1022, accuracy: 99.3600, time: 0.15

--->Train, epoch: 99, loss: 0.0266, time: 1.27

--->Valid, epoch: 99, loss: 4.1118, accuracy: 99.3600, time: 0.16

--->Train, epoch: 100, loss: 0.0266, time: 1.40

--->Valid, epoch: 100, loss: 4.1137, accuracy: 99.3600, time: 0.16

--->Total time: 287.96, training time: 250.84, validation time: 36.52

Test results on GPU (CUDA 10)

Matlab output

Initializing input data normalization.

|======================================================================================================================|

| Epoch | Iteration | Time Elapsed | Mini-batch | Validation | Mini-batch | Validation | Base Learning |

| | | (hh:mm:ss) | Accuracy | Accuracy | Loss | Loss | Rate |

|======================================================================================================================|

| 1 | 1 | 00:00:00 | 11.50% | 9.82% | 9.5761 | 14.3017 | 0.0010 |

| 1 | 100 | 00:00:02 | 95.00% | 95.59% | 0.1730 | 0.1381 | 0.0010 |

| 2 | 200 | 00:00:05 | 97.50% | 97.43% | 0.0882 | 0.0800 | 0.0010 |

| 3 | 300 | 00:00:08 | 97.33% | 98.03% | 0.1136 | 0.0626 | 0.0010 |

| 4 | 400 | 00:00:11 | 99.00% | 98.43% | 0.0290 | 0.0502 | 0.0010 |

| 5 | 500 | 00:00:14 | 99.33% | 98.37% | 0.0302 | 0.0535 | 0.0010 |

...

| 97 | 9700 | 00:04:34 | 100.00% | 99.06% | 0.0003 | 0.0794 | 0.0010 |

| 98 | 9800 | 00:04:37 | 100.00% | 98.94% | 0.0002 | 0.0840 | 0.0010 |

| 99 | 9900 | 00:04:40 | 100.00% | 98.92% | 7.0254e-05 | 0.0735 | 0.0010 |

| 100 | 10000 | 00:04:43 | 100.00% | 98.88% | 0.0011 | 0.0761 | 0.0010 |

|======================================================================================================================|

--->Total time: 285.16

PyTorch (Python) output

--->Train epoch 0, loss: 0.0029, time: 1.08

--->Valid epoch 0, loss: 0.0159, accuracy: 0.8679, time: 0.34

--->Train epoch 1, loss: 0.0025, time: 1.00

--->Valid epoch 1, loss: 0.0149, accuracy: 0.9781, time: 0.30

--->Train epoch 2, loss: 0.0025, time: 0.97

--->Valid epoch 2, loss: 0.0148, accuracy: 0.9781, time: 0.31

--->Train epoch 3, loss: 0.0025, time: 0.99

--->Valid epoch 3, loss: 0.0148, accuracy: 0.9831, time: 0.31

--->Train epoch 4, loss: 0.0025, time: 0.99

--->Valid epoch 4, loss: 0.0148, accuracy: 0.9835, time: 0.30

--->Train epoch 5, loss: 0.0025, time: 1.00

--->Valid epoch 5, loss: 0.0148, accuracy: 0.9859, time: 0.32

...

--->Train epoch 97, loss: 0.0024, time: 1.05

--->Valid epoch 97, loss: 0.0147, accuracy: 0.9920, time: 0.35

--->Train epoch 98, loss: 0.0024, time: 1.09

--->Valid epoch 98, loss: 0.0147, accuracy: 0.9930, time: 0.38

--->Train epoch 99, loss: 0.0024, time: 1.03

--->Valid epoch 99, loss: 0.0147, accuracy: 0.9922, time: 0.31

--->Total time: 140.93, training time: 106.05, validation time: 34.84

Flux (Julia) output

--->Train, epoch: 1, loss: 36.5890, time: 126.84

--->Valid, epoch: 1, loss: 8.8314, accuracy: 97.2600, time: 23.30

--->Train, epoch: 2, loss: 7.7986, time: 2.35

--->Valid, epoch: 2, loss: 5.7305, accuracy: 98.2200, time: 0.61

--->Train, epoch: 3, loss: 5.4430, time: 2.73

--->Valid, epoch: 3, loss: 4.1453, accuracy: 98.6600, time: 0.28

--->Train, epoch: 4, loss: 3.8629, time: 2.60

--->Valid, epoch: 4, loss: 4.2376, accuracy: 98.6400, time: 0.27

--->Train, epoch: 5, loss: 3.2275, time: 2.76

--->Valid, epoch: 5, loss: 3.1914, accuracy: 98.8300, time: 0.27

...

--->Train, epoch: 97, loss: 0.0000, time: 2.82

--->Valid, epoch: 97, loss: 4.0628, accuracy: 99.4500, time: 0.24

--->Train, epoch: 98, loss: 0.0000, time: 2.71

--->Valid, epoch: 98, loss: 4.0782, accuracy: 99.4500, time: 0.30

--->Train, epoch: 99, loss: 0.0000, time: 2.98

--->Valid, epoch: 99, loss: 4.0863, accuracy: 99.4400, time: 0.32

--->Train, epoch: 100, loss: 0.0000, time: 2.82

--->Valid, epoch: 100, loss: 4.0961, accuracy: 99.4400, time: 0.26

--->Total time: 456.28, training time: 397.09, validation time: 58.31

CPU, single thread

Matlab output

Initializing input data normalization.

|======================================================================================================================|

| Epoch | Iteration | Time Elapsed | Mini-batch | Validation | Mini-batch | Validation | Base Learning |

| | | (hh:mm:ss) | Accuracy | Accuracy | Loss | Loss | Rate |

|======================================================================================================================|

| 1 | 1 | 00:00:04 | 11.50% | 9.82% | 9.5761 | 14.3017 | 0.0010 |

| 1 | 100 | 00:01:12 | 94.67% | 95.71% | 0.1699 | 0.1346 | 0.0010 |

| 2 | 200 | 00:02:20 | 97.67% | 97.52% | 0.0820 | 0.0788 | 0.0010 |

| 3 | 300 | 00:03:27 | 97.17% | 98.02% | 0.1127 | 0.0630 | 0.0010 |

| 4 | 400 | 00:04:35 | 99.00% | 98.41% | 0.0288 | 0.0516 | 0.0010 |

| 5 | 500 | 00:05:43 | 99.33% | 98.43% | 0.0283 | 0.0526 | 0.0010 |

...

| 95 | 9500 | 01:48:14 | 100.00% | 99.06% | 0.0011 | 0.0717 | 0.0010 |

| 96 | 9600 | 01:49:22 | 99.67% | 99.06% | 0.0104 | 0.0818 | 0.0010 |

| 97 | 9700 | 01:50:31 | 99.67% | 98.93% | 0.0130 | 0.0944 | 0.0010 |

| 98 | 9800 | 01:51:39 | 100.00% | 98.84% | 0.0003 | 0.1067 | 0.0010 |

| 99 | 9900 | 01:52:47 | 99.83% | 99.10% | 0.0015 | 0.0778 | 0.0010 |

| 100 | 10000 | 01:53:56 | 100.00% | 99.06% | 3.7527e-05 | 0.0829 | 0.0010 |

|======================================================================================================================|

ans =

"--->Total time: 6840.93"

0.9906

PyTorch (Python) output

--->Train epoch 0, loss: 0.0029, time: 47.59

--->Valid epoch 0, loss: 0.0160, accuracy: 0.8669, time: 2.71

--->Train epoch 1, loss: 0.0026, time: 45.91

--->Valid epoch 1, loss: 0.0158, accuracy: 0.8837, time: 2.66

--->Train epoch 2, loss: 0.0025, time: 44.87

--->Valid epoch 2, loss: 0.0148, accuracy: 0.9837, time: 2.66

--->Train epoch 3, loss: 0.0025, time: 44.76

--->Valid epoch 3, loss: 0.0148, accuracy: 0.9845, time: 2.50

--->Train epoch 4, loss: 0.0025, time: 44.88

--->Valid epoch 4, loss: 0.0148, accuracy: 0.9833, time: 2.47

--->Train epoch 5, loss: 0.0025, time: 45.72

--->Valid epoch 5, loss: 0.0147, accuracy: 0.9877, time: 2.45

...

--->Train epoch 95, loss: 0.0024, time: 130.88

--->Valid epoch 95, loss: 0.0147, accuracy: 0.9934, time: 2.52

--->Train epoch 96, loss: 0.0024, time: 134.49

--->Valid epoch 96, loss: 0.0147, accuracy: 0.9927, time: 2.39

--->Train epoch 97, loss: 0.0024, time: 126.99

--->Valid epoch 97, loss: 0.0147, accuracy: 0.9938, time: 2.36

--->Train epoch 98, loss: 0.0024, time: 122.21

--->Valid epoch 98, loss: 0.0147, accuracy: 0.9924, time: 2.37

--->Train epoch 99, loss: 0.0024, time: 123.44

--->Valid epoch 99, loss: 0.0147, accuracy: 0.9913, time: 2.36

--->Total time: 9354.78, training time: 9101.38, validation time: 253.35

Flux (Julia) output

--->Train, epoch: 1, loss: 35.5091, time: 119.56

--->Valid, epoch: 1, loss: 9.4518, accuracy: 97.2300, time: 4.28

--->Train, epoch: 2, loss: 7.4576, time: 76.00

--->Valid, epoch: 2, loss: 4.7343, accuracy: 98.6300, time: 3.04

--->Train, epoch: 3, loss: 5.1258, time: 75.96

--->Valid, epoch: 3, loss: 3.8123, accuracy: 98.8300, time: 3.03

--->Train, epoch: 4, loss: 3.7617, time: 75.83

--->Valid, epoch: 4, loss: 3.5273, accuracy: 98.9600, time: 3.04

--->Train, epoch: 5, loss: 3.0759, time: 76.15

--->Valid, epoch: 5, loss: 3.3042, accuracy: 98.9400, time: 3.04

...

--->Train, epoch: 95, loss: 0.0266, time: 76.17

--->Valid, epoch: 95, loss: 3.8931, accuracy: 99.4600, time: 3.03

--->Train, epoch: 96, loss: 0.0266, time: 76.34

--->Valid, epoch: 96, loss: 3.9110, accuracy: 99.4500, time: 3.02

--->Train, epoch: 97, loss: 0.0266, time: 75.35

--->Valid, epoch: 97, loss: 3.9068, accuracy: 99.4600, time: 3.01

--->Train, epoch: 98, loss: 0.0266, time: 75.35

--->Valid, epoch: 98, loss: 3.8989, accuracy: 99.4600, time: 3.01

--->Train, epoch: 99, loss: 0.0266, time: 75.37

--->Valid, epoch: 99, loss: 3.9219, accuracy: 99.4600, time: 3.01

--->Train, epoch: 100, loss: 0.0266, time: 76.12

--->Valid, epoch: 100, loss: 3.9460, accuracy: 99.4500, time: 3.02

--->Total time: 7966.81, training time: 7661.05, validation time: 305.31

CPU, multithreaded (18 threads)

Matlab output

Initializing input data normalization.

|======================================================================================================================|

| Epoch | Iteration | Time Elapsed | Mini-batch | Validation | Mini-batch | Validation | Base Learning |

| | | (hh:mm:ss) | Accuracy | Accuracy | Loss | Loss | Rate |

|======================================================================================================================|

| 1 | 1 | 00:00:01 | 11.50% | 9.82% | 9.5761 | 14.3017 | 0.0010 |

| 1 | 100 | 00:00:21 | 94.83% | 95.70% | 0.1705 | 0.1385 | 0.0010 |

| 2 | 200 | 00:00:40 | 97.67% | 97.59% | 0.0859 | 0.0782 | 0.0010 |

| 3 | 300 | 00:00:59 | 97.17% | 97.97% | 0.1025 | 0.0633 | 0.0010 |

| 4 | 400 | 00:01:18 | 99.00% | 98.38% | 0.0275 | 0.0516 | 0.0010 |

| 5 | 500 | 00:01:37 | 98.83% | 98.50% | 0.0292 | 0.0504 | 0.0010 |

...

| 95 | 9500 | 00:30:29 | 99.83% | 99.10% | 0.0019 | 0.0729 | 0.0010 |

| 96 | 9600 | 00:30:48 | 99.83% | 98.96% | 0.0049 | 0.0770 | 0.0010 |

| 97 | 9700 | 00:31:08 | 99.50% | 98.85% | 0.0422 | 0.0908 | 0.0010 |

| 98 | 9800 | 00:31:27 | 100.00% | 98.98% | 8.1082e-05 | 0.0748 | 0.0010 |

| 99 | 9900 | 00:31:46 | 100.00% | 99.04% | 9.2632e-05 | 0.0724 | 0.0010 |

| 100 | 10000 | 00:32:06 | 100.00% | 99.01% | 0.0007 | 0.0853 | 0.0010 |

|======================================================================================================================|

ans =

"--->Total time: 1929.06"

0.9901

PyTorch (Python) output

--->Train epoch 0, loss: 0.0028, time: 8.96

--->Valid epoch 0, loss: 0.0151, accuracy: 0.9541, time: 0.54

--->Train epoch 1, loss: 0.0025, time: 9.09

--->Valid epoch 1, loss: 0.0149, accuracy: 0.9723, time: 0.53

--->Train epoch 2, loss: 0.0025, time: 9.03

--->Valid epoch 2, loss: 0.0148, accuracy: 0.9800, time: 0.54

--->Train epoch 3, loss: 0.0025, time: 9.04

--->Valid epoch 3, loss: 0.0148, accuracy: 0.9847, time: 0.55

--->Train epoch 4, loss: 0.0025, time: 9.43

--->Valid epoch 4, loss: 0.0147, accuracy: 0.9872, time: 0.55

--->Train epoch 5, loss: 0.0025, time: 9.60

--->Valid epoch 5, loss: 0.0147, accuracy: 0.9871, time: 0.59

...

--->Train epoch 95, loss: 0.0024, time: 16.00

--->Valid epoch 95, loss: 0.0147, accuracy: 0.9934, time: 0.58

--->Train epoch 96, loss: 0.0024, time: 15.19

--->Valid epoch 96, loss: 0.0147, accuracy: 0.9917, time: 0.59

--->Train epoch 97, loss: 0.0024, time: 15.36

--->Valid epoch 97, loss: 0.0147, accuracy: 0.9927, time: 0.53

--->Train epoch 98, loss: 0.0024, time: 15.47

--->Valid epoch 98, loss: 0.0147, accuracy: 0.9887, time: 0.51

--->Train epoch 99, loss: 0.0024, time: 15.53

--->Valid epoch 99, loss: 0.0147, accuracy: 0.9913, time: 0.53

--->Total time: 1388.82, training time: 1333.49, validation time: 55.26

Flux (Julia) output

Flux does not support multithreading.
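The total times reported in the logs above can be put side by side with a few lines of Python. This is only a bookkeeping sketch over the numbers already shown; the dictionary layout and helper names are made up for this illustration.

```python
# Total wall-clock times in seconds, copied from the "Total time" lines above.
TOTAL_SECONDS = {
    "GPU (CUDA 11)": {"Matlab": 309.35, "PyTorch": 135.69, "Flux": 287.96},
    "GPU (CUDA 10)": {"Matlab": 285.16, "PyTorch": 140.93, "Flux": 456.28},
    "CPU, 1 thread": {"Matlab": 6840.93, "PyTorch": 9354.78, "Flux": 7966.81},
    "CPU, 18 threads": {"Matlab": 1929.06, "PyTorch": 1388.82},  # no Flux run
}


def fastest(times):
    """Name of the framework with the smallest total time."""
    return min(times, key=times.get)


def relative_to_fastest(times):
    """Each total time divided by the best one, rounded to two decimals."""
    best = min(times.values())
    return {name: round(t / best, 2) for name, t in times.items()}


# First epoch (training + validation) of the Flux run under CUDA 11.
flux_first_epoch = 127.61 + 22.58
flux_remaining = TOTAL_SECONDS["GPU (CUDA 11)"]["Flux"] - flux_first_epoch

for setting, times in TOTAL_SECONDS.items():
    print(setting, fastest(times), relative_to_fastest(times))
print(round(flux_first_epoch, 2), round(flux_remaining, 2))
```

In the CUDA 11 Flux log the first epoch alone accounts for roughly 150 s of the 288 s total. That is probably one-off compilation and warm-up cost rather than steady-state speed, although this is an interpretation and not something the logs state.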

Matlab implementation

    device = 'gpu';  % execution environment passed to trainingOptions
    mnist_folder = './mnist/';
    rng(2022);       % fix the random seed
    nclass = 10;
    epochs = 100;
    batch_size = 600;

    [Xtrain, Ytrain, Xvalid, Yvalid] = read_mnist(mnist_folder);
    [H, W, C, Ntrain] = size(Xtrain);
    Nvalid = size(Xvalid, 4);
    Ytrain = categorical(Ytrain);
    Yvalid = categorical(Yvalid);

    % LeNet5 variant with 32/64/128 output channels in C1/C3/C5
    newlenet = [
        imageInputLayer([28 28 1],"Name","imageinput")
        convolution2dLayer([5 5],32,"Name","C1")
        reluLayer("Name","relu1")
        maxPooling2dLayer([2 2],"Name","S2","Stride",[2 2])
        convolution2dLayer([5 5],64,"Name","C3")
        reluLayer("Name","relu2")
        maxPooling2dLayer([2 2],"Name","S4","Stride",[2 2])
        convolution2dLayer([4 4],128,"Name","C5")
        reluLayer("Name","relu3")
        fullyConnectedLayer(84,"Name","F6")
        reluLayer("Name","relu4")
        fullyConnectedLayer(nclass,"Name","OUT")
        softmaxLayer("Name","softmax")
        classificationLayer("Name","classoutput")];

    plot(layerGraph(newlenet));

    % Validate and print once per epoch (Ntrain/batch_size iterations)
    options = trainingOptions('adam', ...
        'ExecutionEnvironment', device, ...
        'ValidationData', {Xvalid, Yvalid}, ...
        'ValidationFrequency', Ntrain/batch_size, ...
        'Plots', 'none', ... % 'training-progress' or 'none'
        'Verbose', true, ...
        'VerboseFrequency', Ntrain/batch_size, ...
        'WorkerLoad', 1, ...
        'MaxEpochs', epochs, ...
        'Shuffle', 'every-epoch', ...
        'InitialLearnRate', 1e-3, ...
        'LearnRateSchedule', 'none', ... % 'piecewise' would enable the step decay
        'LearnRateDropFactor', 0.1, ...
        'LearnRateDropPeriod', 30, ...
        'L2Regularization', 0, ...
        'MiniBatchSize', batch_size);

    % Time the whole training run
    tstart = tic;
    net = trainNetwork(Xtrain, Ytrain, newlenet, options);
    tend = toc(tstart);
    sprintf("--->Total time: %.2f", tend)

    % Accuracy on the validation set
    Pvalid = classify(net, Xvalid);
    precision = sum(Pvalid==Yvalid) / numel(Pvalid);
    disp(precision)

PyTorch implementation

    import time
    from collections import OrderedDict

    import torch as th

    from read_mnist import read_mnist

    mnist_folder = './mnist/'
    device = 'cuda:0'
    nclass = 10
    epochs = 100
    batch_size = 600
    num_workers = 1
    benchmark = True
    deterministic = True
    cudaTF32 = False
    cudnnTF32 = False

    Xtrain, Ytrain, Xvalid, Yvalid = read_mnist(mnist_folder)
    Ntrain, C, H, W = Xtrain.shape
    Nvalid = Xvalid.shape[0]
    print(Xtrain.shape, Ytrain.shape)
    print(Xvalid.shape, Yvalid.shape)

    Xtrain, Ytrain = th.from_numpy(Xtrain.astype('float32')), th.from_numpy(Ytrain.astype('int64'))
    Xvalid, Yvalid = th.from_numpy(Xvalid.astype('float32')), th.from_numpy(Yvalid.astype('int64'))

    # Per-image standardization: zero mean and unit variance for every sample
    Xtrain = (Xtrain - Xtrain.mean(dim=(1, 2, 3), keepdim=True)) / Xtrain.std(dim=(1, 2, 3), keepdim=True)
    Xvalid = (Xvalid - Xvalid.mean(dim=(1, 2, 3), keepdim=True)) / Xvalid.std(dim=(1, 2, 3), keepdim=True)


    class NewLeNet(th.nn.Module):

        def __init__(self, nclass=10):
            super(NewLeNet, self).__init__()
            self.nclass = nclass
            self.net = th.nn.Sequential(OrderedDict([
                ('C1', th.nn.Conv2d(1, 32, (5, 5))),
                ('relu1', th.nn.ReLU()),
                ('S2', th.nn.MaxPool2d((2, 2), (2, 2))),
                ('C3', th.nn.Conv2d(32, 64, (5, 5))),
                ('relu2', th.nn.ReLU()),
                ('S4', th.nn.MaxPool2d((2, 2), (2, 2))),
                ('C5', th.nn.Conv2d(64, 128, (4, 4))),
                ('relu3', th.nn.ReLU()),
                ('flatten', th.nn.Flatten()),
                ('F6', th.nn.Linear(128, 84)),
                ('relu4', th.nn.ReLU()),
                ('OUT', th.nn.Linear(84, self.nclass)),
                # NOTE: CrossEntropyLoss already applies log-softmax to its input,
                # so this layer applies softmax twice. It is kept because the logs
                # above were produced with it; drop it to feed raw logits to the loss.
                ('softmax', th.nn.Softmax(dim=1)),
            ]))

        def forward(self, x):
            output = self.net(x)
            return output


    def train(model, traindl, lossfn, optimizer, epoch, device):
        model.train()
        tstart = time.time()
        train_loss = 0.
        for batch_idx, (data, target) in enumerate(traindl):
            data, target = data.to(device), target.to(device)
            optimizer.zero_grad()
            output = model(data)
            loss = lossfn(output, target)
            loss.backward()
            optimizer.step()
            train_loss += loss.item()
        # Sum of per-batch mean losses divided by the number of samples,
        # i.e. the mean loss scaled by 1 / batch size
        train_loss /= len(traindl.dataset)
        tend = time.time()
        print('--->Train epoch %d, loss: %.4f, time: %.2f' % (epoch, train_loss, tend - tstart))
        return train_loss, tend - tstart


    def valid(model, validdl, lossfn, epoch, device):
        model.eval()
        tstart = time.time()
        valid_loss, correct = 0., 0.
        with th.no_grad():
            for data, target in validdl:
                data, target = data.to(device), target.to(device)
                output = model(data)
                loss = lossfn(output, target)
                valid_loss += loss.item()
                pred = output.argmax(dim=1, keepdim=True)
                correct += pred.eq(target.view_as(pred)).sum().item()
        accuracy = correct / len(validdl.dataset)
        valid_loss /= len(validdl.dataset)
        tend = time.time()
        print('--->Valid epoch %d, loss: %.4f, accuracy: %.4f, time: %.2f' % (epoch, valid_loss, accuracy, tend - tstart))
        return valid_loss, tend - tstart


    th.backends.cudnn.benchmark = benchmark
    th.backends.cudnn.deterministic = deterministic
    th.backends.cuda.matmul.allow_tf32 = cudaTF32
    th.backends.cudnn.allow_tf32 = cudnnTF32

    trainds = th.utils.data.TensorDataset(Xtrain, Ytrain)
    validds = th.utils.data.TensorDataset(Xvalid, Yvalid)
    traindl = th.utils.data.DataLoader(trainds, num_workers=num_workers, batch_size=batch_size, shuffle=True)
    validdl = th.utils.data.DataLoader(validds, num_workers=num_workers, batch_size=100, shuffle=False)

    model = NewLeNet(nclass=nclass).to(device)
    optimizer = th.optim.Adam(model.parameters(), lr=0.001)
    lossfn = th.nn.CrossEntropyLoss()

    total_time = time.time()
    train_loss, valid_loss, train_time, valid_time = 0., 0., 0., 0.
    for epoch in range(epochs):
        _, t = train(model, traindl, lossfn, optimizer, epoch, device)
        train_time += t
        _, t = valid(model, validdl, lossfn, epoch, device)
        valid_time += t

    total_time = time.time() - total_time
    print('--->Total time: %.2f, training time: %.2f, validation time: %.2f' % (total_time, train_time, valid_time))
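One detail of the PyTorch listing is worth spelling out: the model ends in `Softmax`, and `th.nn.CrossEntropyLoss` applies log-softmax to its input again. The loss then cannot fall below the value reached when the first softmax outputs a perfect one-hot vector. The pure-Python sketch below (no PyTorch required; the helper names are illustrative) computes that floor and applies the same scaling the listing uses for printing.

```python
import math


def softmax(values):
    """Numerically stable softmax of a list of floats."""
    top = max(values)
    exps = [math.exp(v - top) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy_from_logits(logits, target):
    """Per-sample cross-entropy that treats its input as logits."""
    return -math.log(softmax(logits)[target])


nclass = 10
# Best case for the model: its Softmax layer outputs probability 1 for class 0.
perfect_probs = [1.0] + [0.0] * (nclass - 1)
floor = cross_entropy_from_logits(perfect_probs, 0)

# The listing divides the sum of per-batch means by the dataset size, which
# scales the mean loss by 1 / batch size (600 for training, 100 for validation).
print("%.4f %.4f %.4f" % (floor, floor / 600, floor / 100))
```

The floor is about 1.4612, which gives about 0.0024 after the training scaling and about 0.0146 after the validation scaling. This matches the plateau seen in the PyTorch logs (0.0024 and 0.0147), so those loss values are not directly comparable with the Matlab or Flux ones.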

Flux implementation

    include("read_mnist.jl")

    using Flux
    using Printf
    using Flux.Data: DataLoader
    using Statistics, Random
    using ParameterSchedulers: Step
    # using ProgressMeter: @showprogress

    device = gpu;
    seed = 202;
    mnist_folder = "./mnist/";
    nclass = 10;
    epochs = 100;
    batch_size = 600;

    Xtrain, Ytrain, Xvalid, Yvalid = read_mnist(mnist_folder);

    # Per-image standardization: zero mean and unit variance for every sample
    Xtrain, Xvalid = Xtrain .- mean(Xtrain, dims=(1, 2, 3)), Xvalid .- mean(Xvalid, dims=(1, 2, 3))
    Xtrain, Xvalid = Xtrain ./ std(Xtrain, dims=(1, 2, 3)), Xvalid ./ std(Xvalid, dims=(1, 2, 3))
    Ytrain, Yvalid = Flux.onehotbatch(Ytrain, 0:9), Flux.onehotbatch(Yvalid, 0:9)

    # NOTE: the arrays are H×W×C×N, so size(X)[1] is the image height (28), not
    # the number of samples. nbtrain and nbvalid below therefore both equal 1,
    # and the logged losses and accuracies are sums over the batches of an epoch.
    # size(X, 4) would give per-batch averages instead.
    Ntrain, Nvalid = size(Xtrain)[1], size(Xvalid)[1]
    println(size(Xtrain), size(Ytrain), size(Xvalid), size(Yvalid))

    function NewLeNet(nclass=10)
        return Chain(
            Conv((5, 5), 1=>32, relu),
            MaxPool((2, 2), stride=(2, 2)),
            Conv((5, 5), 32=>64, relu),
            MaxPool((2, 2), stride=(2, 2)),
            Conv((4, 4), 64=>128, relu),
            Flux.flatten,
            Dense(128=>84, relu),
            Dense(84=>nclass),
            softmax,
        )
    end

    train_loader = DataLoader((Xtrain, Ytrain), batchsize=batch_size, shuffle=true)
    valid_loader = DataLoader((Xvalid, Yvalid), batchsize=100, shuffle=false)
    nbtrain = ceil(Ntrain / batch_size)
    nbvalid = ceil(Nvalid / 100)

    model = NewLeNet(nclass) |> device
    ps = Flux.params(model)
    Random.seed!(seed)

    # The model ends in softmax, so crossentropy receives probabilities
    loss(ŷ, y) = Flux.crossentropy(ŷ, y)
    accuracy(ŷ, y) = mean(Flux.onecold(ŷ) .== Flux.onecold(y))

    opt = Flux.Optimiser(ADAM(0.001), WeightDecay(0.))
    # opt = Flux.Optimiser(ADAM(0.001), WeightDecay(0.), Step(0.001, 0.1, nbtrain))

    total_time, train_time, valid_time = time(), 0., 0.
    for i in 1:epochs
        # Training pass
        lossv, tstart = 0., time()
        # Flux.train!(loss, Flux.params(model), train_loader, opt)
        # @showprogress for (x, y) in train_loader
        for (x, y) in train_loader
            x, y = x |> device, y |> device
            gs = Flux.gradient(ps) do
                ŷ = model(x)
                lossv += loss(ŷ, y)
            end
            Flux.Optimise.update!(opt, ps, gs)
        end
        lossv, tend = lossv / nbtrain, time()
        global train_time += tend - tstart
        @printf("--->Train, epoch: %d, loss: %.4f, time: %.2f\n", i, lossv, tend - tstart)

        # Validation pass
        lossv, accv, tstart = 0., 0., time()
        for (x, y) in valid_loader
            x, y = x |> device, y |> device
            ŷ = model(x)
            lossv += loss(ŷ, y)
            accv += accuracy(ŷ, y)
        end
        lossv, accv, tend = lossv / nbvalid, accv / nbvalid, time()
        global valid_time += tend - tstart
        @printf("--->Valid, epoch: %d, loss: %.4f, accuracy: %.4f, time: %.2f\n", i, lossv, accv, tend - tstart)
    end

    total_time = time() - total_time
    @printf("--->Total time: %.2f, training time: %.2f, validation time: %.2f\n", total_time, train_time, valid_time)
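The Flux logs report accuracies such as 99.44 where the PyTorch logs report 0.9922. A plausible reading of the listing is that `size(Xtrain)[1]` returns the image height (28) of the H×W×C×N array rather than the number of samples, so the batch counts used for averaging come out as 1. The sketch below reproduces that arithmetic in Python; the variable names are made up, and the explanation is an inference from the code rather than something the original states.

```python
import math


def num_batches(num_items, batch_size):
    """Number of mini-batches needed to cover num_items samples."""
    return math.ceil(num_items / batch_size)


valid_shape = (28, 28, 1, 10000)  # H, W, C, N layout of the Julia arrays
valid_batch_size = 100

nb_from_height = num_batches(valid_shape[0], valid_batch_size)   # what size(X)[1] yields
nb_from_samples = num_batches(valid_shape[3], valid_batch_size)  # what size(X, 4) would yield

logged_accuracy = 99.44  # last validation line of the CUDA 10 Flux log
mean_batch_accuracy = logged_accuracy * nb_from_height / nb_from_samples

print(nb_from_height, nb_from_samples, round(mean_batch_accuracy, 4))
```

Under this reading the logged Flux accuracy is a sum over 100 validation batches, which happens to look like a percentage, and the logged Flux losses are sums over the batches of an epoch.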

Reading the MNIST dataset

Reading MNIST in MATLAB

    function [Xtrain, Ytrain, Xtest, Ytest] = read_mnist(mnist_folder)
    %read_mnist - read the original format MNIST data
    %
    % Syntax: [Xtrain, Ytrain, Xtest, Ytest] = read_mnist(mnist_folder)
    %
    % Images are returned as 28x28x1xN arrays and labels as Nx1 vectors.

        % read train images (skip the 16-byte header)
        fid = fopen([mnist_folder, 'train-images.idx3-ubyte'], 'rb');
        Xtrain = fread(fid, inf, 'uint8', 'l');
        Xtrain = Xtrain(17:end);
        fclose(fid);
        Xtrain = reshape(Xtrain, 28, 28, 1, size(Xtrain,1) / 784);

        % read train labels (skip the 8-byte header)
        fid = fopen([mnist_folder, 'train-labels.idx1-ubyte'], 'rb');
        Ytrain = fread(fid, inf, 'uint8', 'l');
        Ytrain = Ytrain(9:end);
        fclose(fid);

        % read test images
        fid = fopen([mnist_folder, 't10k-images.idx3-ubyte'], 'rb');
        Xtest = fread(fid, inf, 'uint8', 'l');
        Xtest = Xtest(17:end);
        fclose(fid);
        Xtest = reshape(Xtest, 28, 28, 1, size(Xtest,1) / 784);

        % read test labels
        fid = fopen([mnist_folder, 't10k-labels.idx1-ubyte'], 'rb');
        Ytest = fread(fid, inf, 'uint8', 'l');
        Ytest = Ytest(9:end);
        fclose(fid);

    end

Reading MNIST in Python

    import struct

    import numpy as np


    def read_mnist(mnist_folder):
        # Image files: 16-byte big-endian header (magic, count, rows, cols), then pixels
        with open(mnist_folder + 'train-images.idx3-ubyte', 'rb') as f:
            magic, num, rows, cols = struct.unpack('>IIII', f.read(16))
            Xtrain = np.fromfile(f, dtype=np.uint8).reshape(num, 1, 28, 28)

        # Label files: 8-byte big-endian header (magic, count), then one byte per label
        with open(mnist_folder + 'train-labels.idx1-ubyte', 'rb') as f:
            magic, num = struct.unpack('>II', f.read(8))
            Ytrain = np.fromfile(f, dtype=np.uint8)

        with open(mnist_folder + 't10k-images.idx3-ubyte', 'rb') as f:
            magic, num, rows, cols = struct.unpack('>IIII', f.read(16))
            Xtest = np.fromfile(f, dtype=np.uint8).reshape(num, 1, 28, 28)

        with open(mnist_folder + 't10k-labels.idx1-ubyte', 'rb') as f:
            magic, num = struct.unpack('>II', f.read(8))
            Ytest = np.fromfile(f, dtype=np.uint8)

        return Xtrain, Ytrain, Xtest, Ytest
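The readers in this section all rely on the same IDX layout: a big-endian header followed by raw bytes. The sketch below parses that layout from an in-memory buffer using only the standard library, so it can be tried without downloading the dataset. The function names and the tiny synthetic "dataset" are invented for this example; the magic numbers 2051 (images) and 2049 (labels) are those of the MNIST files.

```python
import io
import struct

IMAGE_MAGIC = 2051  # 0x00000803: unsigned bytes, 3 dimensions
LABEL_MAGIC = 2049  # 0x00000801: unsigned bytes, 1 dimension


def parse_idx_images(stream):
    """Read an IDX image file; return (count, rows, cols, raw pixel bytes)."""
    magic, num, rows, cols = struct.unpack(">IIII", stream.read(16))
    if magic != IMAGE_MAGIC:
        raise ValueError("unexpected magic number %d" % magic)
    pixels = stream.read(num * rows * cols)
    if len(pixels) != num * rows * cols:
        raise ValueError("file is truncated")
    return num, rows, cols, pixels


def parse_idx_labels(stream):
    """Read an IDX label file; return the labels as a list of ints."""
    magic, num = struct.unpack(">II", stream.read(8))
    if magic != LABEL_MAGIC:
        raise ValueError("unexpected magic number %d" % magic)
    labels = stream.read(num)
    if len(labels) != num:
        raise ValueError("file is truncated")
    return list(labels)


# Synthetic data: two 2x2 "images" and their two labels.
fake_images = struct.pack(">IIII", IMAGE_MAGIC, 2, 2, 2) + bytes(range(8))
fake_labels = struct.pack(">II", LABEL_MAGIC, 2) + bytes([7, 3])

num, rows, cols, pixels = parse_idx_images(io.BytesIO(fake_images))
labels = parse_idx_labels(io.BytesIO(fake_labels))
print(num, rows, cols, list(pixels), labels)
```

Checking the magic number and the payload length is a cheap way to catch a file that is still gzip-compressed or only partially downloaded.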

Reading MNIST in Julia

    function read_mnist(mnist_folder)
        # Image files: skip the 4-byte magic number, then read the big-endian
        # header fields (count, rows, cols) followed by the pixel bytes
        fid = open(mnist_folder * "train-images.idx3-ubyte")
        seek(fid, 4)
        N = bswap(read(fid, Int32))
        H = bswap(read(fid, Int32))
        W = bswap(read(fid, Int32))
        Xtrain = read(fid, N*H*W)
        Xtrain = Int.(Xtrain)
        Xtrain = reshape(Xtrain, (H, W, 1, N))
        close(fid)

        # Label files: skip the magic number, read the count, then one byte per label
        fid = open(mnist_folder * "train-labels.idx1-ubyte")
        seek(fid, 4)
        N = bswap(read(fid, Int32))
        Ytrain = read(fid, N)
        Ytrain = Int.(Ytrain)
        Ytrain = reshape(Ytrain, (N,))
        close(fid)

        fid = open(mnist_folder * "t10k-images.idx3-ubyte")
        seek(fid, 4)
        N = bswap(read(fid, Int32))
        H = bswap(read(fid, Int32))
        W = bswap(read(fid, Int32))
        Xtest = read(fid, N*H*W)
        Xtest = Int.(Xtest)
        Xtest = reshape(Xtest, (H, W, 1, N))
        close(fid)

        fid = open(mnist_folder * "t10k-labels.idx1-ubyte")
        seek(fid, 4)
        N = bswap(read(fid, Int32))
        Ytest = read(fid, N)
        Ytest = Int.(Ytest)
        Ytest = reshape(Ytest, (N,))
        close(fid)

        Xtrain, Xtest = Float32.(Xtrain), Float32.(Xtest)
        return Xtrain, Ytrain, Xtest, Ytest
    end

    # Quick check when the file is run as a script
    if abspath(PROGRAM_FILE) == @__FILE__
        Xtrain, Ytrain, Xtest, Ytest = read_mnist("./mnist/");
        print(size(Xtrain), size(Ytrain))
        print(typeof(Xtrain), typeof(Ytrain))
    end

References
