基本思想:帮助好友部署一个人脸检测模型,也是自己业务需求,部分代码来自好友陈同学,自己改了改c++的代码可以部署rk3399pro上了,其它资料见附录吧,官方的代码并未将landmark的点打印出来,rknn将这个信息补上了~

链接: https://pan.baidu.com/s/1MSZG-XeIFmBfYJwUMThzDQ?pwd=1ujq 提取码: 1ujq

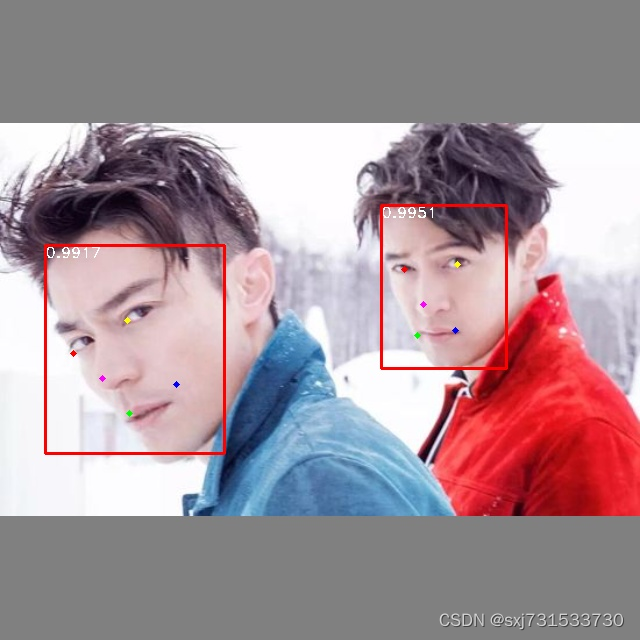

测试图片

一、测试mnn效果,其中mnn直接搬运了大佬参考附录一

cmakelists.txt

cmake_minimum_required(VERSION 3.16)

project(untitled10)

set(CMAKE_CXX_FLAGS "-std=c++11")

set(CMAKE_CXX_STANDARD 11)

include_directories(${CMAKE_SOURCE_DIR})

include_directories(${CMAKE_SOURCE_DIR}/include)

include_directories(${CMAKE_SOURCE_DIR}/include/MNN)

find_package(OpenCV REQUIRED)

#message(STATUS ${OpenCV_INCLUDE_DIRS})

#添加头文件

include_directories(${OpenCV_INCLUDE_DIRS})

#链接Opencv库

add_library(libmnn SHARED IMPORTED)

#set_target_properties(libncnn PROPERTIES IMPORTED_LOCATION ${CMAKE_SOURCE_DIR}/lib/libncnn.a)

set_target_properties(libmnn PROPERTIES IMPORTED_LOCATION ${CMAKE_SOURCE_DIR}/lib/libMNN.so)

add_executable(untitled10 main.cpp retinaface.cpp)

target_link_libraries(untitled10 ${OpenCV_LIBS} libmnn )

main.cpp

#include <iostream>

#include "retinaface.h"

int main() {

RetinaFace *item=new RetinaFace();

item->Initial("../FaceDetector8.mnn");

cv::Mat img=cv::imread("../0.jpeg");

vector<FaceObject> faces;

item->DetectFace(img,faces);

for(auto &face:faces){

cv::rectangle(img, cv::Point(face.rect.x, face.rect.y),

cv::Point(face.rect.x + face.rect.width, face.rect.y + face.rect.height),

cv::Scalar(0, 255, 0), 2);

string score = to_string(face.prob);

cv::putText(img, score, cv::Point(face.rect.x, face.rect.y),cv::FONT_HERSHEY_DUPLEX, 0.6, (0, 255, 255));

}

cv::imshow("image",img);

cv::waitKey(0);

delete item;

return 0;

}

retinaface.h

#include <MNN/ImageProcess.hpp>

#include <MNN/Interpreter.hpp>

#include <memory>

#include <iostream>

#include <vector>

#include <math.h>

#include "opencv2/opencv.hpp"

#include "opencv2/highgui.hpp"

using namespace std;

struct FaceObject {

cv::Rect_<float> rect;

float prob;

};

struct Anchor{

float cx;

float cy;

float s_kx;

float s_ky;

};

class RetinaFace {

public:

RetinaFace ();

int Initial(std::string model_path);

~RetinaFace();

int DetectFace(cv::Mat img,vector<FaceObject> &faces);

private:

MNN::Interpreter *net_ = nullptr;

MNN::Session *session_ = nullptr;

MNN::CV::ImageProcess::Config preProcessConfig_;

MNN::Tensor* input_;

MNN::Tensor* loc_;

MNN::Tensor* cls_;

vector<Anchor> Anchors_;

int width_;

int height_;

float mean_vals_[3];

float conf_;

int top_k_;

};

retinaface.cpp

#include "retinaface.h"

#include <string.h>

#include <errno.h>

#include <string>

#include <vector>

#include <sys/time.h>

int32_t NowMicros() {

struct timeval tv;

gettimeofday(&tv,nullptr);

return static_cast<int32_t>(tv.tv_sec)*1000+tv.tv_usec/1000;

}

float intersection_area(const FaceObject& a, const FaceObject& b) {

cv::Rect_<float> inter = a.rect & b.rect;

return inter.area();

}

void generate_anchors(int height,int width,vector<Anchor> &Anchors) {

int steps[3] = {8,16,32};

int min_sizes[3][2] = {{16,32},{64,128},{256,512}};

int feature_maps[3][2] = {0};

for(int i=0;i<3;i++) {

feature_maps[i][0] = ceil(height*1.0/steps[i]);

feature_maps[i][1] = ceil(width*1.0/steps[i]);

}

Anchors.clear();

for(int i=0;i<3;i++) {

int *min_size = min_sizes[i];

for(int id_y=0;id_y<feature_maps[i][0];id_y++) {

for(int id_x=0;id_x<feature_maps[i][1];id_x++)

for(int k = 0;k<2;k++) {

float s_kx = min_size[k]*1.0/width;

float s_ky = min_size[k]*1.0/height;

float dense_cx = (id_x + 0.5)*steps[i]/width;

float dense_cy = (id_y + 0.5)*steps[i]/height;

Anchor a;

a.cx = dense_cx;

a.cy = dense_cy;

a.s_kx = s_kx;

a.s_ky = s_ky;

Anchors.push_back(a);

}

}

}

}

void decode(float *loc,vector<Anchor> &Anchors, float *out_loc, int width, int height) {

float variance[2] = {0.1,0.2};

for (int q=0; q<1; q++) {

for (int y=0; y<16800; y++) {

out_loc[y*4] = Anchors[y].cx + loc[0]*variance[0]*Anchors[y].s_kx;

out_loc[y*4+1] = Anchors[y].cy + loc[1]*variance[0]*Anchors[y].s_ky;

out_loc[y*4+2] = (Anchors[y].s_kx*exp(loc[2]*variance[1]))*width; //width

out_loc[y*4+3] = (Anchors[y].s_ky*exp(loc[3]*variance[1]))*height; //height

out_loc[y*4] = out_loc[y*4]*width - out_loc[y*4+2]/2; //x0

out_loc[y*4+1] = out_loc[y*4+1]*height - out_loc[y*4+3]/2; //y0

loc += 4;

}

}

}

void nms_sorted_bboxes(const std::vector<FaceObject>& faceobjects, std::vector<int>& picked, float nms_threshold) {

picked.clear();

const int n = faceobjects.size();

vector<float> areas(n);

for (int i = 0; i < n; i++) {

areas[i] = faceobjects[i].rect.area();

}

for (int i = 0; i < n; i++) {

const FaceObject& a = faceobjects[i];

int keep = 1;

for (int j = 0; j < (int)picked.size(); j++) {

const FaceObject& b = faceobjects[picked[j]];

// intersection over union

float inter_area = intersection_area(a, b);

float union_area = areas[i] + areas[picked[j]] - inter_area;

//float IoU = inter_area / union_area

if (inter_area / union_area > nms_threshold)

keep = 0;

}

if (keep)

picked.push_back(i);

}

}

bool FaceLargerScore(FaceObject a, FaceObject b) {

if ( a.prob > b.prob) {

return true;

} else {

return false;

}

}

RetinaFace::RetinaFace() {

width_ = 640;

height_ = 640;

mean_vals_[0] = 104.0f;

mean_vals_[1] = 117.0f;

mean_vals_[2] = 123.0f;

conf_ = 0.7;

top_k_ = 100;

generate_anchors(width_,height_,Anchors_);

}

RetinaFace::~RetinaFace() {

if(net_ != nullptr) {

net_->releaseModel();

net_->releaseSession(session_);

}

Anchors_.clear();

}

int RetinaFace::Initial(std::string model_path) {

std::string modelpath = model_path ;

net_ = MNN::Interpreter::createFromFile(modelpath.c_str());

MNN::ScheduleConfig netConfig;

netConfig.type = MNN_FORWARD_CPU;

netConfig.numThread = 4;

session_ = net_->createSession(netConfig);

input_ = net_->getSessionInput(session_, "input0");

net_->resizeTensor(input_, {1, 3, width_, height_});

net_->resizeSession(session_);

cls_ = net_->getSessionOutput(session_, "576");

loc_ = net_->getSessionOutput(session_, "output0");

::memcpy(preProcessConfig_.mean, mean_vals_, sizeof(mean_vals_));

preProcessConfig_.sourceFormat = MNN::CV::BGR;

preProcessConfig_.destFormat = MNN::CV::BGR;

return 0;

}

int RetinaFace::DetectFace(const cv::Mat img,vector<FaceObject> &faces) {

//先pad将图像填补为正方形,pre-process

cv::Mat pad_img;

int long_size = max(img.cols,img.rows);

if (long_size == img.cols) {

int top = (long_size - img.rows)/2;

int bottom = long_size - top - img.rows;

cv::copyMakeBorder(img,pad_img,top,bottom,0,0,cv::BORDER_CONSTANT,0);

} else {

int left = (long_size - img.cols)/2;

int right = long_size - left - img.cols;

cv::copyMakeBorder(img,pad_img,0,0,left,right,cv::BORDER_CONSTANT,0);

}

//pad为正方形后,再resize为模型的固定输入大小(640,640)

cv::Mat bgr;

cv::resize(pad_img,bgr,cv::Size(width_,height_));

net_->resizeTensor(input_, {1, 3, width_, height_});

net_->resizeSession(session_);

//mnn 输入

MNN::CV::ImageProcess *pretreat_ = MNN::CV::ImageProcess::create(preProcessConfig_);

pretreat_->convert(bgr.data, width_, height_, bgr.step[0], input_);

net_->runSession(session_);

MNN::Tensor clsHost(cls_, MNN::Tensor::CAFFE);

MNN::Tensor locHost(loc_, MNN::Tensor::CAFFE);

cls_->copyToHostTensor(&clsHost);

loc_->copyToHostTensor(&locHost);

std::vector<int> shape0 = clsHost.shape();

std::vector<int> shape1 = locHost.shape();

int c = shape0[0];

int length = shape0[1];

std::cout<<c<<" "<<length<<std::endl;

float *out_loc = new float[length*4];

auto cls_data = clsHost.host<float>();

auto loc_data = locHost.host<float>();

decode(loc_data, Anchors_, out_loc, width_, height_);

vector<FaceObject> tempfaces;

//将大于阈值的框存进temfaces,准备下一步的nms

for (int q=0; q<c; q++) {

for (int y=0; y<length;y++) {

if(cls_data[2*y+1] > conf_) {

FaceObject face;

face.prob = cls_data[2*y+1];

face.rect.x = out_loc[4*y];

face.rect.y = out_loc[4*y+1];

face.rect.width = out_loc[4*y+2];

face.rect.height = out_loc[4*y+3];

tempfaces.push_back(face);

}

}

}

//根据分数排序,保留top_k

std::sort(tempfaces.begin(), tempfaces.end(),FaceLargerScore);

if (tempfaces.size() > top_k_)

tempfaces.resize(top_k_);

//执行nms

std::vector<int> picked;

int start = NowMicros();

nms_sorted_bboxes(tempfaces, picked, 0.4);

int end = NowMicros();

std::cout<<"nms:"<<(end-start)<<"ms"<<std::endl;

//上面的操作是在640×640的图片上做的(先pad图片到正方形,再resize到640*640)

//因此下面的操作是将检测框,反映射回到原图像的尺寸

float scale = long_size*1.0/width_; //width_与height_相等

for (int i = 0; i < picked.size(); i++) {

FaceObject face = tempfaces[picked[i]];

//反映射回pad_img

int real_x = face.rect.x*scale;

int real_y = face.rect.y*scale;

int real_width = face.rect.width*scale;

int real_height = face.rect.height*scale;

//反映射回实际原图尺寸

real_x = real_x - (long_size - img.cols)/2;

real_y = real_y - (long_size - img.rows)/2;

//如果检测框超出了原始图像的边界,则将检测框拉回边界

real_x = (real_x > 0) ? real_x :0;

real_y = (real_y > 0) ? real_y :0;

real_width = ((real_x + real_width) < img.cols ) ? real_width : (img.cols - real_x);

real_height = ((real_y + real_height) < img.rows ) ? real_height : (img.rows - real_y);

face.rect.x = real_x;

face.rect.y = real_y;

face.rect.width = real_width;

face.rect.height = real_height;

faces.push_back(face);

}

return 0;

}

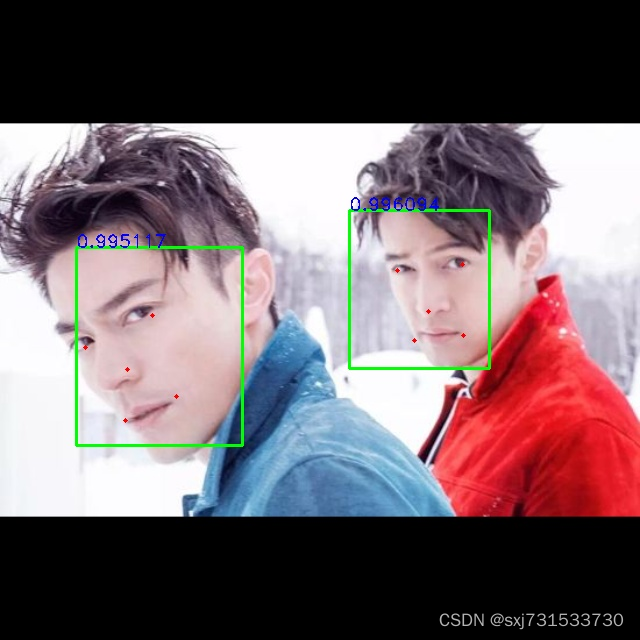

二、测试rknn效果

py代码

from rknn.api import RKNN

import numpy as np

import cv2

import time

from math import ceil

from itertools import product as product

ONNX_MODEL = 'FaceDetector8.onnx'

RKNN_MODEL = 'retinaface.rknn'

img_path = '0.jpeg'

IMG_SIZE = 640

cfg_mnet = {

'min_sizes': [[16, 32], [64, 128], [256, 512]],

'steps': [8, 16, 32],

'variance': [0.1, 0.2],

}

# 得到anchor

class Anchors(object):

def __init__(self, cfg, image_size=None):

super(Anchors, self).__init__()

self.min_sizes = cfg['min_sizes']

self.steps = cfg['steps']

#---------------------------#

# 图片的尺寸

#---------------------------#

self.image_size = image_size

#---------------------------#

# 三个有效特征层高和宽

#---------------------------#

self.feature_maps = [[ceil(self.image_size[0]/step), ceil(self.image_size[1]/step)] for step in self.steps]

def get_anchors(self):

anchors = []

for k, f in enumerate(self.feature_maps):

min_sizes = self.min_sizes[k]

#-----------------------------------------#

# 对特征层的高和宽进行循环迭代

#-----------------------------------------#

for i, j in product(range(f[0]), range(f[1])):

for min_size in min_sizes:

s_kx = min_size / self.image_size[1]

s_ky = min_size / self.image_size[0]

dense_cx = [x * self.steps[k] / self.image_size[1] for x in [j + 0.5]]

dense_cy = [y * self.steps[k] / self.image_size[0] for y in [i + 0.5]]

for cy, cx in product(dense_cy, dense_cx):

anchors += [cx, cy, s_kx, s_ky]

output_np=np.array(anchors).reshape(-1,4)

return output_np

# 填充灰条,实现resize

def letterbox_image (image, size):

ih, iw, _ = np.shape(image)

w, h = size

scale = min(w/iw, h/ih)

nw = int(iw*scale)

nh = int(ih*scale)

image = cv2.resize(image, (nw, nh))

new_image = np.ones([size[1], size[0], 3]) * 128

new_image[(h-nh)//2:nh+(h-nh)//2, (w-nw)//2:nw+(w-nw)//2] = image

return new_image

# 人脸框坐标解码

def decode(loc, priors, variances):

boxes = np.concatenate((priors[:, :2] + loc[:, :2] * variances[0] * priors[:, 2:],

priors[:, 2:] * np.exp(loc[:, 2:] * variances[1])), 1)

boxes[:, :2] -= boxes[:, 2:] / 2

boxes[:, 2:] += boxes[:, :2]

return boxes

# 人脸关键点解码

def decode_landm(pre, priors, variances):

landms = np.concatenate((priors[:, :2] + pre[:, :2] * variances[0] * priors[:, 2:],

priors[:, :2] + pre[:, 2:4] * variances[0] * priors[:, 2:],

priors[:, :2] + pre[:, 4:6] * variances[0] * priors[:, 2:],

priors[:, :2] + pre[:, 6:8] * variances[0] * priors[:, 2:],

priors[:, :2] + pre[:, 8:10] * variances[0] * priors[:, 2:],

), 1)

return landms

def pynms(dets, thresh): #非极大抑制

x1 = dets[:, 0]

y1 = dets[:, 1]

x2 = dets[:, 2]

y2 = dets[:, 3]

areas = (y2 - y1) * (x2 - x1)

scores = dets[:, 4]

keep = []

index = scores.argsort()[::-1] #置信度从大到小排序(下标)

while index.size > 0:

i = index[0]

keep.append(i)

x11 = np.maximum(x1[i], x1[index[1:]]) # 计算相交面积

y11 = np.maximum(y1[i], y1[index[1:]])

x22 = np.minimum(x2[i], x2[index[1:]])

y22 = np.minimum(y2[i], y2[index[1:]])

w = np.maximum(0, x22 - x11) # 当两个框不想交时x22 - x11或y22 - y11 为负数,

# 两框不相交时把相交面积置0

h = np.maximum(0, y22 - y11) #

overlaps = w * h

ious = overlaps / (areas[i] + areas[index[1:]] - overlaps)#计算IOU

idx = np.where(ious <= thresh)[0] #IOU小于thresh的框保留下来

index = index[idx + 1]

return keep

def filter_box(org_box,conf_thres,iou_thres): #过滤掉无用的框

conf = org_box[..., 4] > conf_thres #删除置信度小于conf_thres的BOX

# print(conf)

box = org_box[conf == True]

output = []

curr_cls_box = np.array(box)

curr_cls_box[:,:4]=curr_cls_box[:,:4]*640

curr_cls_box[:,5:]=curr_cls_box[:,5:]*640

curr_out_box = pynms(curr_cls_box,iou_thres) #经过非极大抑制后输出的BOX下标

for k in curr_out_box:

output.append(curr_cls_box[k]) #利用下标取出非极大抑制后的BOX

output = np.array(output)

return output

# 输入图片处理

def procss_img(img_path):

img=cv2.imread(img_path)

img=cv2.cvtColor(img,cv2.COLOR_BGR2RGB)

img=letterbox_image(img,(IMG_SIZE,IMG_SIZE))

or_img=np.array(img,np.uint8)

or_img=cv2.cvtColor(or_img,cv2.COLOR_RGB2BGR)

img=img.astype(dtype=np.float32)

img-=np.array((104,117,123),np.float32)

# img = img.transpose(2, 0, 1)

img=np.expand_dims(img,axis=0)

return img,or_img #img为模型输入,or_img用于画人脸框

# 画人脸框和5个关键点

def draw_img(boxes_conf_landms,old_image):

for b in boxes_conf_landms:

text = "{:.4f}".format(b[4])

b = list(map(int, b))

# b[0]-b[3]为人脸框的坐标,b[4]为得分

cv2.rectangle(old_image, (b[0], b[1]), (b[2], b[3]), (0, 0, 255), 2)

cx = b[0]

cy = b[1] + 12

cv2.putText(old_image, text, (cx, cy),cv2.FONT_HERSHEY_DUPLEX, 0.5, (255, 255, 255))

# b[5]-b[14]为人脸关键点的坐标

cv2.circle(old_image, (b[5], b[6]), 1, (0, 0, 255), 4)

cv2.circle(old_image, (b[7], b[8]), 1, (0, 255, 255), 4)

cv2.circle(old_image, (b[9], b[10]), 1, (255, 0, 255), 4)

cv2.circle(old_image, (b[11], b[12]), 1, (0, 255, 0), 4)

cv2.circle(old_image, (b[13], b[14]), 1, (255, 0, 0), 4)

return old_image

if __name__ == '__main__':

# # Create RKNN object

rknn = RKNN(verbose=True)

# Load rknn model

print('--> Loading model')

# pre-process config

print('--> config model')

rknn.config(mean_values=[[104, 117, 123]],

target_platform='rk3399pro')

print('done')

print('--> Loading model')

ret = rknn.load_onnx(model=ONNX_MODEL)

if ret != 0:

print('Load model failed!')

exit(ret)

print('done')

# Build model

print('--> Building model')

t1 = time.time()

ret = rknn.build(do_quantization=True, dataset='dataset.txt') # ,pre_compile=True buzuo lianghua gaicheng False zheyang jieguo xiangtong he pythondaima

t2 = time.time() - t1

print("cost time = ", t2)

if ret != 0:

print('Build retainface failed!')

exit(ret)

print('done')

# Export rknn model

print('--> Export RKNN model')

ret = rknn.export_rknn(RKNN_MODEL)

img, or_img = procss_img(img_path)

# Init runtime environment

print('--> Init runtime environment')

ret = rknn.init_runtime()

print("151561515")

if ret != 0:

print('Init runtime environment failed!')

exit(ret)

print('done')

# Inference

print('--> Running model')

outputs = rknn.inference(inputs=[img])

#输出数据转为numpy数据格式

output_1 = np.array(outputs[0]).squeeze()

output_2 = np.array(outputs[1]).squeeze()

output_3 = np.array(outputs[2]).squeeze()

#生成anchor , 人脸框关键点解码

anchors = Anchors(cfg_mnet, image_size=(640, 640)).get_anchors()

print(anchors)

boxes = decode(output_1, anchors, cfg_mnet['variance'])

print(boxes)

landms = decode_landm(output_3, anchors, cfg_mnet['variance'])

print(landms)

conf = output_2[:, 1:2]

#非极大抑制,得到最终输出

boxs_conf = np.concatenate((boxes, conf, landms), -1)

boxs_conf = filter_box(boxs_conf, 0.5, 0.45)

#画出人类框和5个人脸关键并保存图片

if boxs_conf is not None:

draw_img(boxs_conf, or_img)

print(img)

cv2.imwrite("dog1.jpg",or_img)

# cv2.imshow('re', or_img)

# cv2.waitKey(0)

rknn.release()

测试结果

c++代码

cmakelists.txt

cmake_minimum_required(VERSION 3.16)

project(retainface_rknn)

set(CMAKE_CXX_FLAGS "-std=c++11")

set(CMAKE_CXX_STANDARD 11)

include_directories(${CMAKE_SOURCE_DIR})

include_directories(${CMAKE_SOURCE_DIR}/include)

find_package(OpenCV REQUIRED)

#message(STATUS ${OpenCV_INCLUDE_DIRS})

#添加头文件

include_directories(${OpenCV_INCLUDE_DIRS})

#链接Opencv库

add_library(librknn_api SHARED IMPORTED)

set_target_properties(librknn_api PROPERTIES IMPORTED_LOCATION ${CMAKE_SOURCE_DIR}/lib/librknn_api.so)

add_executable(retainface_rknn main.cpp)

target_link_libraries(retainface_rknn ${OpenCV_LIBS} librknn_api )

main.cpp

#include <stdio.h>

#include <stdint.h>

#include <stdlib.h>

#include <queue>

#include "rknn_api.h"

#include "opencv2/core/core.hpp"

#include "opencv2/imgproc/imgproc.hpp"

#include "opencv2/highgui/highgui.hpp"

#include <chrono>

using namespace cv;

using namespace std;

struct FaceObject {

cv::Rect_<float> rect;

cv::Point landmark[5];

float prob;

};

struct Anchor{

float cx;

float cy;

float s_kx;

float s_ky;

};

void printRKNNTensor(rknn_tensor_attr *attr) {

printf("index=%d name=%s n_dims=%d dims=[%d %d %d %d] n_elems=%d size=%d "

"fmt=%d type=%d qnt_type=%d fl=%d zp=%d scale=%f\n",

attr->index, attr->name, attr->n_dims, attr->dims[3], attr->dims[2],

attr->dims[1], attr->dims[0], attr->n_elems, attr->size, 0, attr->type,

attr->qnt_type, attr->fl, attr->zp, attr->scale);

}

void decode(float *loc,float *land_mark,vector<Anchor> &Anchors, float *out_loc, float *out_landmark,int width, int height) {

float variance[2] = {0.1,0.2};

for (int q=0; q<1; q++) {

for (int y=0; y<16800; y++) {

out_loc[y*4] = Anchors[y].cx + loc[0]*variance[0]*Anchors[y].s_kx;

out_loc[y*4+1] = Anchors[y].cy + loc[1]*variance[0]*Anchors[y].s_ky;

out_loc[y*4+2] = (Anchors[y].s_kx*exp(loc[2]*variance[1]))*width; //width

out_loc[y*4+3] = (Anchors[y].s_ky*exp(loc[3]*variance[1]))*height; //height

out_loc[y*4] = out_loc[y*4]*width - out_loc[y*4+2]/2; //x0

out_loc[y*4+1] = out_loc[y*4+1]*height - out_loc[y*4+3]/2; //y0

loc += 4;

out_landmark[y*10] = Anchors[y].cx + land_mark[0]*variance[0]*Anchors[y].s_kx;

out_landmark[y*10+1] = Anchors[y].cy + land_mark[1]*variance[0]*Anchors[y].s_ky;

out_landmark[y*10+2] = Anchors[y].cx + land_mark[2]*variance[0]*Anchors[y].s_kx;

out_landmark[y*10+3] = Anchors[y].cy + land_mark[3]*variance[0]*Anchors[y].s_ky;

out_landmark[y*10+4] = Anchors[y].cx + land_mark[4]*variance[0]*Anchors[y].s_kx;

out_landmark[y*10+5] = Anchors[y].cy + land_mark[5]*variance[0]*Anchors[y].s_ky;

out_landmark[y*10+6] = Anchors[y].cx + land_mark[6]*variance[0]*Anchors[y].s_kx;

out_landmark[y*10+7] = Anchors[y].cy + land_mark[7]*variance[0]*Anchors[y].s_ky;

out_landmark[y*10+8] = Anchors[y].cx + land_mark[8]*variance[0]*Anchors[y].s_kx;

out_landmark[y*10+9] = Anchors[y].cy + land_mark[9]*variance[0]*Anchors[y].s_ky;

land_mark+=10;

}

}

}

bool FaceLargerScore(FaceObject a, FaceObject b) {

if ( a.prob > b.prob) {

return true;

} else {

return false;

}

}

float intersection_area(const FaceObject& a, const FaceObject& b) {

cv::Rect_<float> inter = a.rect & b.rect;

return inter.area();

}

void nms_sorted_bboxes(const std::vector<FaceObject>& faceobjects, std::vector<int>& picked, float nms_threshold) {

picked.clear();

const int n = faceobjects.size();

vector<float> areas(n);

for (int i = 0; i < n; i++) {

areas[i] = faceobjects[i].rect.area();

}

for (int i = 0; i < n; i++) {

const FaceObject& a = faceobjects[i];

int keep = 1;

for (int j = 0; j < (int)picked.size(); j++) {

const FaceObject& b = faceobjects[picked[j]];

// intersection over union

float inter_area = intersection_area(a, b);

float union_area = areas[i] + areas[picked[j]] - inter_area;

//float IoU = inter_area / union_area

if (inter_area / union_area > nms_threshold)

keep = 0;

}

if (keep)

picked.push_back(i);

}

}

int post_process_u8(float *input0,float *input1,float *input2,cv::Mat img,int w,int h,std::vector<Anchor> Anchors_,int long_size,std::vector<FaceObject> &faces){

float *loc_data=input0;

float *cls_data=input1;

float *land_mark=input2;

int length=16800;

int c=1;

float conf_=0.7;

int top_k_=100;

float *out_loc = new float[length*4];

float *out_landmark = new float[length*10];

decode(loc_data, land_mark,Anchors_, out_loc,out_landmark, w, h);

vector<FaceObject> tempfaces;

//将大于阈值的框存进temfaces,准备下一步的nms

for (int q=0; q<c; q++) {

for (int y=0; y<length;y++) {

if(cls_data[2*y+1] > conf_) {

FaceObject face;

face.prob = cls_data[2*y+1];

face.rect.x = out_loc[4*y];

face.rect.y = out_loc[4*y+1];

face.rect.width = out_loc[4*y+2];

face.rect.height = out_loc[4*y+3];

face.landmark[0].x=out_landmark[10*y]*w;

face.landmark[0].y=out_landmark[10*y+1]*h;

face.landmark[1].x=out_landmark[10*y+2]*w;

face.landmark[1].y=out_landmark[10*y+3]*h;

face.landmark[2].x=out_landmark[10*y+4]*w;

face.landmark[2].y=out_landmark[10*y+5]*h;

face.landmark[3].x=out_landmark[10*y+6]*w;

face.landmark[3].y=out_landmark[10*y+7]*h;

face.landmark[4].x=out_landmark[10*y+8]*w;

face.landmark[4].y=out_landmark[10*y+9]*h;

tempfaces.push_back(face);

}

}

}

//根据分数排序,保留top_k

std::sort(tempfaces.begin(), tempfaces.end(),FaceLargerScore);

if (tempfaces.size() > top_k_)

tempfaces.resize(top_k_);

//执行nms

std::vector<int> picked;

nms_sorted_bboxes(tempfaces, picked, 0.4);

//上面的操作是在640×640的图片上做的(先pad图片到正方形,再resize到640*640)

//因此下面的操作是将检测框,反映射回到原图像的尺寸

float scale = long_size*1.0/w; //width_与height_相等

for (int i = 0; i < picked.size(); i++) {

FaceObject face = tempfaces[picked[i]];

//反映射回pad_img

int real_x = face.rect.x*scale;

int real_y = face.rect.y*scale;

int real_width = face.rect.width*scale;

int real_height = face.rect.height*scale;

//反映射回实际原图尺寸

real_x = real_x - (long_size - img.cols)/2;

real_y = real_y - (long_size - img.rows)/2;

//如果检测框超出了原始图像的边界,则将检测框拉回边界

real_x = (real_x > 0) ? real_x :0;

real_y = (real_y > 0) ? real_y :0;

real_width = ((real_x + real_width) < img.cols ) ? real_width : (img.cols - real_x);

real_height = ((real_y + real_height) < img.rows ) ? real_height : (img.rows - real_y);

face.rect.x = real_x;

face.rect.y = real_y;

face.rect.width = real_width;

face.rect.height = real_height;

faces.push_back(face);

}

delete []out_loc;

delete []out_landmark;

return 0;

}

void generate_anchors(int height,int width,vector<Anchor> &Anchors) {

int steps[3] = {8,16,32};

int min_sizes[3][2] = {{16,32},{64,128},{256,512}};

int feature_maps[3][2] = {0};

for(int i=0;i<3;i++) {

feature_maps[i][0] = ceil(height*1.0/steps[i]);

feature_maps[i][1] = ceil(width*1.0/steps[i]);

}

Anchors.clear();

for(int i=0;i<3;i++) {

int *min_size = min_sizes[i];

for(int id_y=0;id_y<feature_maps[i][0];id_y++) {

for(int id_x=0;id_x<feature_maps[i][1];id_x++)

for(int k = 0;k<2;k++) {

float s_kx = min_size[k]*1.0/width;

float s_ky = min_size[k]*1.0/height;

float dense_cx = (id_x + 0.5)*steps[i]/width;

float dense_cy = (id_y + 0.5)*steps[i]/height;

Anchor a;

a.cx = dense_cx;

a.cy = dense_cy;

a.s_kx = s_kx;

a.s_ky = s_ky;

Anchors.push_back(a);

}

}

}

}

int main(int argc, char **argv) {

const char *img_path = "../0.jpeg";

const char *model_path = "../FaceDetector8.rknn";

const char *post_process_type = "fp";//fp

const int target_width = 640;

const int target_height = 640;

vector<Anchor> Anchors_;

vector<FaceObject> faces;

generate_anchors(target_width,target_height,Anchors_);

// Load image

cv::Mat bgr = cv::imread(img_path);

if (!bgr.data) {

printf("cv::imread %s fail!\n", img_path);

return -1;

}

//BGR->RGB

//cv::cvtColor(rgb, bgr, cv::COLOR_BGR2RGB);

cv::Mat pad_img;

int long_size = max(bgr.cols,bgr.rows);

if (long_size == bgr.cols) {

int top = (long_size - bgr.rows)/2;

int bottom = long_size - top - bgr.rows;

cv::copyMakeBorder(bgr,pad_img,top,bottom,0,0,cv::BORDER_CONSTANT,0);

} else {

int left = (long_size - bgr.cols)/2;

int right = long_size - left - bgr.cols;

cv::copyMakeBorder(bgr,pad_img,0,0,left,right,cv::BORDER_CONSTANT,0);

}

//pad为正方形后,再resize为模型的固定输入大小(640,640)

cv::Mat img_resize;

cv::resize(pad_img,img_resize,cv::Size(target_width,target_height));

// Load model

FILE *fp = fopen(model_path, "rb");

if (fp == NULL) {

printf("fopen %s fail!\n", model_path);

return -1;

}

fseek(fp, 0, SEEK_END);

int model_len = ftell(fp);

void *model = malloc(model_len);

fseek(fp, 0, SEEK_SET);

if (model_len != fread(model, 1, model_len, fp)) {

printf("fread %s fail!\n", model_path);

free(model);

return -1;

}

rknn_context ctx = 0;

int ret = rknn_init(&ctx, model, model_len, 0);

if (ret < 0) {

printf("rknn_init fail! ret=%d\n", ret);

return -1;

}

/* Query sdk version */

rknn_sdk_version version;

ret = rknn_query(ctx, RKNN_QUERY_SDK_VERSION, &version,

sizeof(rknn_sdk_version));

if (ret < 0) {

printf("rknn_init error ret=%d\n", ret);

return -1;

}

printf("sdk version: %s driver version: %s\n", version.api_version,

version.drv_version);

/* Get input,output attr */

rknn_input_output_num io_num;

ret = rknn_query(ctx, RKNN_QUERY_IN_OUT_NUM, &io_num, sizeof(io_num));

if (ret < 0) {

printf("rknn_init error ret=%d\n", ret);

return -1;

}

printf("model input num: %d, output num: %d\n", io_num.n_input,

io_num.n_output);

rknn_tensor_attr input_attrs[io_num.n_input];

memset(input_attrs, 0, sizeof(input_attrs));

for (int i = 0; i < io_num.n_input; i++) {

input_attrs[i].index = i;

ret = rknn_query(ctx, RKNN_QUERY_INPUT_ATTR, &(input_attrs[i]),

sizeof(rknn_tensor_attr));

if (ret < 0) {

printf("rknn_init error ret=%d\n", ret);

return -1;

}

printRKNNTensor(&(input_attrs[i]));

}

rknn_tensor_attr output_attrs[io_num.n_output];

memset(output_attrs, 0, sizeof(output_attrs));

for (int i = 0; i < io_num.n_output; i++) {

output_attrs[i].index = i;

ret = rknn_query(ctx, RKNN_QUERY_OUTPUT_ATTR, &(output_attrs[i]),

sizeof(rknn_tensor_attr));

printRKNNTensor(&(output_attrs[i]));

}

int input_channel = 3;

int input_width = 0;

int input_height = 0;

if (input_attrs[0].fmt == RKNN_TENSOR_NCHW) {

printf("model is NCHW input fmt\n");

input_width = input_attrs[0].dims[0];

input_height = input_attrs[0].dims[1];

printf("input_width=%d input_height=%d\n", input_width, input_height);

} else {

printf("model is NHWC input fmt\n");

input_width = input_attrs[0].dims[1];

input_height = input_attrs[0].dims[2];

printf("input_width=%d input_height=%d\n", input_width, input_height);

}

printf("model input height=%d, width=%d, channel=%d\n", input_height, input_width,

input_channel);

/* Init input tensor */

rknn_input inputs[1];

memset(inputs, 0, sizeof(inputs));

inputs[0].index = 0;

inputs[0].buf = img_resize.data;

inputs[0].type = RKNN_TENSOR_UINT8;

inputs[0].size = input_width * input_height * input_channel;

inputs[0].fmt = RKNN_TENSOR_NHWC;

inputs[0].pass_through = 0;

/* Init output tensor */

rknn_output outputs[io_num.n_output];

memset(outputs, 0, sizeof(outputs));

for (int i = 0; i < io_num.n_output; i++) {

outputs[i].want_float = 1;

}

printf("img.cols: %d, img.rows: %d\n", img_resize.cols, img_resize.rows);

auto t1=std::chrono::steady_clock::now();

rknn_inputs_set(ctx, io_num.n_input, inputs);

ret = rknn_run(ctx, NULL);

if (ret < 0) {

printf("ctx error ret=%d\n", ret);

return -1;

}

ret = rknn_outputs_get(ctx, io_num.n_output, outputs, NULL);

if (ret < 0) {

printf("outputs error ret=%d\n", ret);

return -1;

}

/* Post process */

std::vector<float> out_scales;

std::vector<uint8_t> out_zps;

for (int i = 0; i < io_num.n_output; ++i) {

out_scales.push_back(output_attrs[i].scale);

out_zps.push_back(output_attrs[i].zp);

}

if (strcmp(post_process_type, "fp") == 0) {

post_process_u8((float *) outputs[0].buf,(float *) outputs[1].buf,(float*) outputs[2].buf,img_resize,

target_height, target_width,Anchors_, long_size,faces);

}

//毫秒级

auto t2=std::chrono::steady_clock::now();

double dr_ms=std::chrono::duration<double,std::milli>(t2-t1).count();

printf("%lf ms\n",dr_ms);

for(auto &face:faces){

cv::rectangle(img_resize, cv::Point(face.rect.x, face.rect.y),

cv::Point(face.rect.x + face.rect.width, face.rect.y + face.rect.height),

cv::Scalar(0, 255, 0), 2);

string score = to_string(face.prob);

cv::putText(img_resize, score, cv::Point(face.rect.x, face.rect.y),cv::FONT_HERSHEY_DUPLEX, 0.6, (0, 255, 255));

cv::circle(img_resize, face.landmark[0], 2, cv::Scalar(0, 0, 255), -1);

cv::circle(img_resize, face.landmark[1], 2, cv::Scalar(0, 0, 255), -1);

cv::circle(img_resize, face.landmark[2], 2, cv::Scalar(0, 0, 255), -1);

cv::circle(img_resize, face.landmark[3], 2, cv::Scalar(0, 0, 255), -1);

cv::circle(img_resize, face.landmark[4], 2, cv::Scalar(0, 0, 255), -1);

}

cv::imwrite("../image.jpg",img_resize);

cv::waitKey(0);

ret = rknn_outputs_release(ctx, io_num.n_output, outputs);

if (ret < 0) {

printf("rknn_query fail! ret=%d\n", ret);

goto Error;

}

Error:

if (ctx > 0)

rknn_destroy(ctx);

if (model)

free(model);

if (fp)

fclose(fp);

return 0;

}

运行数据

/tmp/tmp.q0kaCsDGvt/cmake-build-debug/untitled10

D RKNNAPI: ==============================================

D RKNNAPI: RKNN VERSION:

D RKNNAPI: API: 1.6.1 (00c4d8b build: 2021-03-15 16:31:37)

D RKNNAPI: DRV: 1.7.1 (0cfd4a1 build: 2021-12-10 09:43:11)

D RKNNAPI: ==============================================

sdk version: 1.6.1 (00c4d8b build: 2021-03-15 16:31:37) driver version: 1.7.1 (0cfd4a1 build: 2021-12-10 09:43:11)

model input num: 1, output num: 3

index=0 name=input0_129 n_dims=4 dims=[1 3 640 640] n_elems=1228800 size=1228800 fmt=0 type=3 qnt_type=2 fl=0 zp=0 scale=1.000000

index=0 name=Concat_Concat_130/out0_0 n_dims=3 dims=[0 1 16800 4] n_elems=67200 size=67200 fmt=0 type=3 qnt_type=2 fl=0 zp=130 scale=0.040004

index=1 name=Softmax_Softmax_171/out0_1 n_dims=3 dims=[0 1 16800 2] n_elems=33600 size=67200 fmt=0 type=1 qnt_type=0 fl=0 zp=0 scale=1.000000

index=2 name=Concat_Concat_170/out0_2 n_dims=3 dims=[0 1 16800 10] n_elems=168000 size=168000 fmt=0 type=3 qnt_type=2 fl=0 zp=122 scale=0.086914

model is NCHW input fmt

input_width=640 input_height=640

model input height=640, width=640, channel=3

img.cols: 640, img.rows: 640

79.234952 ms

Process finished with exit code 0

测试结果

参考:

GitHub - OpenFirework/Retinaface_CPP: Retinaface人脸检测网络 MNN、NCNN嵌入式,Linux端推理源码,包含一个卡通人脸检测的模型,脚本一键构建可执行程序

本文内容由网友自发贡献,版权归原作者所有,本站不承担相应法律责任。如您发现有涉嫌抄袭侵权的内容,请联系:hwhale#tublm.com(使用前将#替换为@)