Deep Learning

Implementing basic to advanced operations on the fundamental units of deep learning.

> Excerpts

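I often create new deep learning architectures for different problems, but choosing which framework to use (Keras, PyTorch, TensorFlow) is usually the harder part.

Because of that uncertainty, it is good to know the basic operations on the fundamental units of these frameworks (NumPy arrays, Torch tensors, TensorFlow tensors).

In this post, I perform several of the same operations across the 3 frameworks and also try to visualize most of them.

This is a beginner-friendly post, so let's get started.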

1. Installation

pip install numpy

pip install tensorflow

pip install torch

2. Version check

import numpy as np

import tensorflow as tf

import torch

print(np.__version__)

print(tf.__version__)

print(torch.__version__)

### OUTPUT ###

1.18.5

2.3.0

1.6.0+cu101
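If one of the three packages is missing, the imports above fail before anything is printed. A minimal sketch of a more forgiving check, assuming Python 3.8+ and using only the standard library (the helper name `installed_versions` is made up for this illustration):

```python
from importlib import metadata


def installed_versions(packages):
    """Return {package name: version string, or None if it is not installed}."""
    versions = {}
    for name in packages:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            versions[name] = None
    return versions


report = installed_versions(["numpy", "tensorflow", "torch"])
for name, version in report.items():
    print(f"{name}: {version or 'not installed'}")
```

This reads the installed package metadata instead of importing the libraries, so it also runs on a machine where only some of them are present.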


3. Array initialization: 1-D, 2-D, 3-D

Scalars and 1-D arrays

> Scalar, 1-D, 2-D arrays

Numpy

# Numpy

a = np.array(10)

print(a)

print(a.shape, a.dtype) #shape of the array and type of the elements

a = np.array([10])

print(a)

print(a.shape, a.dtype) #shape of the array and type of the elements

a = np.array([10], dtype=np.float32)

print(a)

print(a.shape, a.dtype) #shape of the array and type of the elements

############################# OUTPUT ##############################

10 () int64

[10] (1,) int64

[10.] (1,) float32

TensorFlow

# TensorFlow

b = tf.constant(10) # As Scalar

print(b)

b = tf.constant(10, shape=(1,1)) # As a 2-D tensor of shape (1, 1); use shape=(1,) for a 1-D vector

print(b)

b = tf.constant(10, shape=(1,1), dtype=tf.float32) # with Data-type

print(b)

############################# OUTPUT ##############################

tf.Tensor(10, shape=(), dtype=int32)

tf.Tensor([[10]], shape=(1, 1), dtype=int32)

tf.Tensor([[10.]], shape=(1, 1), dtype=float32)

Torch

# Torch

c = torch.tensor(10, ) # As Scalar

print(c)

c = torch.tensor([10]) # As 1-D Vector

print(c, c.shape, c.dtype)

c = torch.tensor([10], dtype=torch.float32) # With Data-type

print(c)

############################# OUTPUT ##############################

tensor(10)

tensor([10]) torch.Size([1]) torch.int64

tensor([10.])
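The difference between a scalar, a 1-D vector and a (1, 1) matrix is easy to miss because all three hold the single value 10. A small NumPy-only sketch that makes the number of dimensions explicit (the variable names are illustrative):

```python
import numpy as np

scalar = np.array(10)           # shape ()     -> 0 dimensions
vector = np.array([10])         # shape (1,)   -> 1 dimension
matrix = np.full((1, 1), 10)    # shape (1, 1) -> 2 dimensions

for name, arr in [("scalar", scalar), ("vector", vector), ("matrix", matrix)]:
    print(name, arr.shape, arr.ndim)
```

The same shapes appear in the TensorFlow and Torch outputs above: `shape=()` is a scalar, while `shape=(1, 1)` is already two-dimensional.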

2-D arrays

> 2-D Array

Numpy

# Numpy

a = np.array([[1,2,3], [4,5,6]])

print(a)

print(a.shape, a.dtype)

############################# OUTPUT ##############################

[[1 2 3]

[4 5 6]]

(2, 3) int64

TensorFlow

# TensorFlow

b = tf.constant([[1,2,3], [4,5,6]])

print(b)

print(b.shape)

############################# OUTPUT ##############################

tf.Tensor([[1 2 3]

[4 5 6]], shape=(2, 3), dtype=int32)

(2, 3)

Torch

# Torch

c = torch.tensor([[1,2,3], [4,5,6]])

print(c)

print(c.shape)

############################# OUTPUT ##############################

tensor([[1, 2, 3],

[4, 5, 6]])

torch.Size([2, 3])

4. Generating data

> Zeros and Ones

> Diagonal & Same element fill

Numpy

# Numpy

a = np.zeros((3,3))

print(a, a.shape, a.dtype)

a = np.ones((3,3))

print(a, a.shape, a.dtype)

a = np.eye(3)

print(a, a.shape, a.dtype)

a = np.full((3,3),10.0)

print(a, a.shape, a.dtype)

############################# OUTPUT ##############################

[[0. 0. 0.]

[0. 0. 0.]

[0. 0. 0.]] (3, 3) float64

[[1. 1. 1.]

[1. 1. 1.]

[1. 1. 1.]] (3, 3) float64

[[1. 0. 0.]

[0. 1. 0.]

[0. 0. 1.]] (3, 3) float64

[[10. 10. 10.]

[10. 10. 10.]

[10. 10. 10.]] (3, 3) float64

Tensorflow

# TensorFlow

b = tf.zeros((3,3))

print(b)

b = tf.ones((3,3))

print(b)

b = tf.eye(3)

print(b)

b = tf.fill([3,3], 10)

print(b)

############################# OUTPUT ##############################

tf.Tensor( [[0. 0. 0.]

[0. 0. 0.]

[0. 0. 0.]], shape=(3, 3), dtype=float32)

tf.Tensor( [[1. 1. 1.]

[1. 1. 1.]

[1. 1. 1.]], shape=(3, 3), dtype=float32)

tf.Tensor( [[1. 0. 0.]

[0. 1. 0.]

[0. 0. 1.]], shape=(3, 3), dtype=float32)

tf.Tensor( [[10 10 10]

[10 10 10]

[10 10 10]], shape=(3, 3), dtype=int32)

Torch

# Torch

c = torch.zeros((3,3))

print(c)

c = torch.ones((3,3))

print(c)

c = torch.eye(3)

print(c)

c = c.new_full([3,3], 10)

print(c)

############################# OUTPUT ##############################

tensor([[0., 0., 0.],

[0., 0., 0.],

[0., 0., 0.]])

tensor([[1., 1., 1.],

[1., 1., 1.],

[1., 1., 1.]])

tensor([[1., 0., 0.],

[0., 1., 0.],

[0., 0., 1.]])

tensor([[10., 10., 10.],

[10., 10., 10.],

[10., 10., 10.]])
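In the outputs above, `np.full((3,3), 10.0)` is float64 while `tf.fill([3,3], 10)` is int32: the fill value decides the element type unless a dtype is given. A short NumPy sketch of that rule (only NumPy is used here; the other two frameworks are assumed to follow their own defaults as shown in the outputs):

```python
import numpy as np

int_fill = np.full((3, 3), 10)                      # integer fill value -> integer array
float_fill = np.full((3, 3), 10.0)                  # float fill value   -> float64 array
explicit = np.full((3, 3), 10, dtype=np.float32)    # dtype given explicitly

print(int_fill.dtype, float_fill.dtype, explicit.dtype)
```

Passing `dtype` explicitly is the safest option when the array will later be mixed with float tensors.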

Drawing random samples from a normal distribution

> Normal Dist'n Bell Curve

> Samples were drawn from Normal Dist'n

Numpy

# Numpy

a = np.random.randn(3,3)

print(a, a.shape, a.dtype)

print(a.mean(), a.std())

############################# OUTPUT ##############################

[[ 0.41406362 -1.51382214 0.55400531]

[-0.95226975 -0.50038461 1.29014057]

[ 0.90320426 -1.65923581 -1.03100388]] (3, 3) float64

-0.27725582657643905 1.0291132313341855

Tensorflow

# TensorFlow

b = tf.random.normal((3,3),mean=0, stddev=1)

print(b)

print(tf.reduce_mean(b), tf.math.reduce_std(b))

############################# OUTPUT ##############################

tf.Tensor([[0.04017716 -2.2840774 -0.3615016 ]

[-1.9259684 1.2054121 0.02211744]

[0.96204025 0.07906733 -2.2352242 ]], shape=(3, 3), dtype=float32)

tf.Tensor(-0.49977303, shape=(), dtype=float32)

tf.Tensor(1.2557517, shape=(), dtype=float32)

Torch

# Torch

c = torch.normal(mean=0, std=1, size=(3, 3))

print(c)

print(torch.mean(c), torch.std(c))

############################# OUTPUT ##############################

tensor([[-0.1682, 0.9610, 1.1005],

[-1.0462, 0.4431, 0.6005],

[-1.2714, -1.1894, 0.7221]])

tensor(0.0169) tensor(0.9595)
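With only nine samples, the measured mean and standard deviation above are noticeably off from 0 and 1, and they change on every run. A hedged sketch using NumPy's `default_rng` shows both effects; the seed value 0 is an arbitrary choice for this illustration:

```python
import numpy as np

rng = np.random.default_rng(seed=0)          # fixed seed -> reproducible draws
small = rng.standard_normal((3, 3))          # 9 samples: noisy statistics
large = rng.standard_normal(100_000)         # many samples: close to mean 0, std 1

print(small.mean(), small.std())
print(large.mean(), large.std())

# Re-creating the generator with the same seed reproduces the first draw.
again = np.random.default_rng(seed=0).standard_normal((3, 3))
print(np.array_equal(small, again))
```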

Drawing samples from a uniform distribution

> Uniform Dist'n Curve

> Samples were drawn from Uniform Dist'n

Numpy

# Numpy

a = np.random.uniform(low=0, high=1, size=(3,3))

print(a, a.shape, a.dtype)

print(a.mean(), a.std())

############################# OUTPUT ##############################

[[0.00738313 0.56734768 0.92893306]

[0.36580515 0.91986967 0.70643149]

[0.72854485 0.73961837 0.88049091]] (3, 3) float64

0.6493804787345917 0.28317558657389263

Tensorflow

# TensorFlow

b = tf.random.uniform((3,3),minval=0,maxval=1) # Values lie in [0, 1)

print(b)

print(tf.reduce_mean(b), tf.math.reduce_std(b))

############################# OUTPUT ##############################

tf.Tensor( [[0.11186028 0.04624796 0.59104955]

[0.5344571 0.1144793 0.8468257 ]

[0.5247066 0.61488223 0.7592212 ]], shape=(3, 3), dtype=float32)

tf.Tensor(0.4604144, shape=(), dtype=float32)

tf.Tensor(0.2792521, shape=(), dtype=float32)

Torch

# Torch

num_samples = 3

Dim = 3

c = torch.distributions.Uniform(0, +1).sample((num_samples, Dim))

print(c)

print(torch.mean(c), torch.std(c))

############################# OUTPUT ##############################

tensor([[0.5842, 0.5787, 0.3526],

[0.2647, 0.6233, 0.4482],

[0.3495, 0.0562, 0.0495]])

tensor(0.3674) tensor(0.2158)
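For a uniform distribution on [0, 1), the theoretical mean is 0.5 and the standard deviation is 1/sqrt(12), roughly 0.2887. The 3x3 samples above are too small to show this, so here is a NumPy sketch with a larger sample (the seed and sample size are arbitrary choices):

```python
import numpy as np

rng = np.random.default_rng(seed=0)
samples = rng.uniform(low=0.0, high=1.0, size=100_000)

expected_std = 1 / np.sqrt(12)     # standard deviation of U(0, 1)

print(samples.min() >= 0.0, samples.max() < 1.0)   # interval is half-open: [0, 1)
print(samples.mean(), samples.std(), expected_std)
```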

Evenly spaced vectors (arange)

Numpy

# Numpy

a = np.arange(0,9)

print(a)

a = np.arange(start=1, stop=20, step=2, dtype=np.float32)

print(a, a.dtype)

############################# OUTPUT ##############################

[0 1 2 3 4 5 6 7 8]

[ 1. 3. 5. 7. 9. 11. 13. 15. 17. 19.] float32

Tensorflow

# TensorFlow

b = tf.range(9)

print(b)

b = tf.range(start=1, limit=20, delta=2, dtype=tf.float64)

print(b)

############################# OUTPUT ##############################

tf.Tensor([0 1 2 3 4 5 6 7 8], shape=(9,), dtype=int32)

tf.Tensor([ 1. 3. 5. 7. 9. 11. 13. 15. 17. 19.], shape=(10,), dtype=float64)

Torch

# Torch

c = torch.arange(start=0, end=9)

print(c)

c = torch.arange(start=1, end=20, step=2, dtype=torch.float64)

print(c)

############################# OUTPUT ##############################

tensor([0, 1, 2, 3, 4, 5, 6, 7, 8])

tensor([ 1., 3., 5., 7., 9., 11., 13., 15., 17., 19.], dtype=torch.float64)
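In all three frameworks the stop value is excluded, so the number of elements is ceil((stop - start) / step). A small NumPy sketch that checks this for the example above, and shows `np.linspace` as an alternative when the element count is what you know up front:

```python
import math

import numpy as np

start, stop, step = 1, 20, 2
values = np.arange(start, stop, step)
expected_len = math.ceil((stop - start) / step)

print(values, len(values), expected_len)

# linspace takes the number of points and includes the end point.
evenly = np.linspace(1, 19, num=10)
print(evenly)
```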

7. Data types and conversion

uint8/16/32/64 ↔ float16/32/64

Numpy

# Numpy

a = a.astype(np.uint8)

print(a, a.dtype)

############################# OUTPUT ##############################

[ 1 3 5 7 9 11 13 15 17 19] uint8

Tensorflow

# TensorFlow

b = tf.cast(b, dtype=tf.uint8)

print(b)

############################# OUTPUT ##############################

tf.Tensor([ 1 3 5 7 9 11 13 15 17 19], shape=(10,), dtype=uint8)

Torch

# Torch

c = c.type(torch.int64) # c is already a tensor, so wrapping it in torch.tensor() again is unnecessary

print(c)

############################# OUTPUT ##############################

tensor([ 1, 3, 5, 7, 9, 11, 13, 15, 17, 19])
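Casting is lossless in the example above because every value fits in the target type. Two pitfalls are worth knowing about, sketched here with NumPy only (the input values are made up for illustration):

```python
import numpy as np

# Float -> int drops the fractional part (truncation toward zero, no rounding).
floats = np.array([1.9, -1.9, 2.5])
as_int = floats.astype(np.int64)
print(as_int)

# Values outside the uint8 range 0..255 wrap around modulo 256.
big = np.array([255, 256, 300])
wrapped = big.astype(np.uint8)
print(wrapped)
```

If rounding is wanted, apply `np.round` before the cast; if out-of-range values are possible, clip them first with `np.clip`.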

8. Math operations

> Sum and Subtract operations

> multiply and divide operations

Numpy

# Numpy

a = np.array([1,2,3,4,5])

b = np.array([6,7,8,9,10])

c = np.add(a, b)

print(c, c.dtype)

c = np.subtract(b,a)

print(c, c.dtype)

c = np.divide(b,a)

print(c, c.dtype)

c = np.multiply(b,a)

print(c, c.dtype)

c = (a**2)

print(c)

############################# OUTPUT ##############################

[ 7 9 11 13 15] int64

[5 5 5 5 5] int64

[6. 3.5 2.66666667 2.25 2. ] float64

[ 6 14 24 36 50] int64

[ 1 4 9 16 25]

Tensorflow

# TensorFlow

x = tf.constant([1,2,3,4,5])

y = tf.constant([6,7,8,9,10])

z = tf.add(x,y)

print(z)

z = tf.subtract(y,x)

print(z)

z = tf.divide(y,x)

print(z)

z = tf.multiply(y,x)

print(z)

z = (x **2)

print(z)

############################# OUTPUT ##############################

tf.Tensor([ 7 9 11 13 15], shape=(5,), dtype=int32)

tf.Tensor([5 5 5 5 5], shape=(5,), dtype=int32)

tf.Tensor([6. 3.5 2.66666667 2.25 2. ], shape=(5,), dtype=float64)

tf.Tensor([ 6 14 24 36 50], shape=(5,), dtype=int32)

tf.Tensor([ 1 4 9 16 25], shape=(5,), dtype=int32)

Torch

# Torch

t = torch.tensor([1,2,3,4,5])

u = torch.tensor([6,7,8,9,10])

v = torch.add(t, u)

print(v)

v = torch.sub(u,t)

print(v)

v = torch.true_divide(u, t)

print(v)

v = torch.mul(u,t)

print(v)

v = (t **2)

print(v)

############################# OUTPUT ##############################

tensor([ 7, 9, 11, 13, 15])

tensor([5, 5, 5, 5, 5])

tensor([6.0000, 3.5000, 2.6667, 2.2500, 2.0000])

tensor([ 6, 14, 24, 36, 50])

tensor([ 1, 4, 9, 16, 25])
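The named functions above have operator equivalents, and division comes in two flavours: true division always returns floats, floor division keeps integers. A NumPy sketch using the same two arrays:

```python
import numpy as np

a = np.array([1, 2, 3, 4, 5])
b = np.array([6, 7, 8, 9, 10])

print(np.array_equal(a + b, np.add(a, b)))        # operators mirror the functions
print(np.array_equal(b * a, np.multiply(b, a)))

true_div = b / a       # float64 result, same as np.divide
floor_div = b // a     # integer result, fractional part discarded

print(true_div, true_div.dtype)
print(floor_div, floor_div.dtype)
```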

9. Dot product

> Dot Product

Numpy

# Numpy

a = np.array([1,2,3,4,5])

b = np.array([6,7,8,9,10])

c = np.dot(a, b)

print(c, c.dtype)

############################# OUTPUT ##############################

130 int64

Tensorflow

# TensorFlow

x = tf.constant([1,2,3,4,5])

y = tf.constant([6,7,8,9,10])

z = tf.tensordot(x,y, axes=1)

print(z)

############################# OUTPUT ##############################

tf.Tensor(130, shape=(), dtype=int32)

Torch

# Torch

t = torch.tensor([1,2,3,4,5])

u = torch.tensor([6,7,8,9,10])

v = torch.dot(t,u)

print(v)

############################# OUTPUT ##############################

tensor(130)
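The result 130 is simply the sum of the element-wise products: 1*6 + 2*7 + 3*8 + 4*9 + 5*10. A short sketch that computes it three ways to make the definition concrete:

```python
import numpy as np

a = np.array([1, 2, 3, 4, 5])
b = np.array([6, 7, 8, 9, 10])

manual = sum(int(x) * int(y) for x, y in zip(a, b))   # plain Python loop
via_sum = int(np.sum(a * b))                          # multiply, then reduce
via_dot = int(np.dot(a, b))                           # library call

print(manual, via_sum, via_dot)
```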

10. Matrix multiplication

> Matrix Multiplication

Numpy

# Numpy

a = np.array([[1,2,3], [4,5,6]])

b = np.array([[1,2,3], [4,5,6], [7,8,9]])

c = np.matmul(a,b) # (2,3) @ (3,3) --> (2,3) output shape

print(c)

############################# OUTPUT ##############################

[[30 36 42]

[66 81 96]]

Tensorflow

# TensorFlow

x = tf.constant([[1,2,3], [4,5,6]])

y = tf.constant([[1,2,3], [4,5,6], [7,8,9]])

z = tf.matmul(x,y) # (2,3) @ (3,3) --> (2,3) output shape

print(z)

############################# OUTPUT ##############################

tf.Tensor([[30 36 42]

[66 81 96]], shape=(2, 3), dtype=int32)

Torch

# Torch

t = torch.tensor([[1,2,3], [4,5,6]])

u = torch.tensor([[1,2,3], [4,5,6], [7,8,9]])

v = torch.matmul(t,u) # (2,3) @ (3,3) --> (2,3) output shape

print(v)

############################# OUTPUT ##############################

tensor([[30, 36, 42],

[66, 81, 96]])
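The shape rule in the comments, (m, k) @ (k, n) --> (m, n), follows from the definition: entry (i, j) is the dot product of row i of the first matrix with column j of the second. A deliberately slow sketch that spells this out (`matmul_loops` is a hypothetical helper written for this illustration, not a library function):

```python
import numpy as np


def matmul_loops(a, b):
    """Multiply two 2-D arrays with explicit loops, for illustration only."""
    m, k = a.shape
    k2, n = b.shape
    if k != k2:
        raise ValueError(f"inner dimensions differ: {k} vs {k2}")
    out = np.zeros((m, n), dtype=np.result_type(a, b))
    for i in range(m):
        for j in range(n):
            out[i, j] = np.dot(a[i, :], b[:, j])   # row i times column j
    return out


a = np.array([[1, 2, 3], [4, 5, 6]])
b = np.array([[1, 2, 3], [4, 5, 6], [7, 8, 9]])

print(matmul_loops(a, b))
print(np.array_equal(matmul_loops(a, b), a @ b))
```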

11. Indexing and slicing (1-D)

> Indexing and Slicing

Numpy

# Numpy

a = np.array([1,2,3,4,5,6,7,8])

print(a[:])

print(a[2:-3])

print(a[3:-1])

print(a[::2])

indices = np.array([0,3,5])

x_indices = a[indices]

print(x_indices)

############################# OUTPUT ##############################

[1 2 3 4 5 6 7 8]

[3 4 5]

[4 5 6 7]

[1 3 5 7]

[1 4 6]

Tensorflow

# TensorFlow

b = tf.constant([1,2,3,4,5,6,7,8])

print(b[:])

print(b[2:-3])

print(b[3:-1])

print(b[::2])

indices = tf.constant([0,3,5])

x_indices = tf.gather(b, indices)

print(x_indices)

############################# OUTPUT ##############################

tf.Tensor([1 2 3 4 5 6 7 8], shape=(8,), dtype=int32)

tf.Tensor([3 4 5], shape=(3,), dtype=int32)

tf.Tensor([4 5 6 7], shape=(4,), dtype=int32)

tf.Tensor([1 3 5 7], shape=(4,), dtype=int32)

tf.Tensor([1 4 6], shape=(3,), dtype=int32)

Torch

# Torch

c = torch.tensor([1,2,3,4,5,6,7,8])

print(c[:])

print(c[2:-3])

print(c[3:-1])

print(c[::2])

indices = torch.tensor([0,3,5])

x_indices = c[indices]

print(x_indices)

############################# OUTPUT ##############################

tensor([1, 2, 3, 4, 5, 6, 7, 8])

tensor([3, 4, 5])

tensor([4, 5, 6, 7])

tensor([1, 3, 5, 7])

tensor([1, 4, 6])
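One detail the outputs do not show: in NumPy, a basic slice is a view onto the original data, while indexing with an array of indices returns a copy. A small sketch of the consequence (NumPy only; TensorFlow tensors are immutable, so the question does not arise there in the same way):

```python
import numpy as np

a = np.array([1, 2, 3, 4, 5, 6, 7, 8])

view = a[2:-3]                     # basic slice: shares memory with a
view[0] = 99                       # also changes a[2]

copy = a[np.array([0, 3, 5])]      # index array: independent copy
copy[0] = -1                       # a[0] is untouched

print(a)
print(np.shares_memory(a, view), np.shares_memory(a, copy))
```

Call `.copy()` on a slice when the original array must stay unchanged.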

12. Indexing and slicing (2-D matrices)

> Matrix Slicing

Numpy

# Numpy

a = np.array([[1,2,3],[4,5,6],[7,8,9]])

# Matrix Indexing

# Print all individual Rows and Columns

print("Row-1",a[0, :])

print("Row-2",a[1, :])

print("Row-3",a[2, :])

print("Col-1",a[:, 0])

print("Col-2",a[:, 1])

print("Col-3",a[:, 2])

# Print the four 2x2 corner sub-matrices

print("Upper-Left",a[0:2,0:2])

print("Upper-Right",a[0:2,1:3])

print("Bottom-Left",a[1:3,0:2])

print("Bottom-Right",a[1:3,1:3])

############################# OUTPUT ##############################

Row-1 [1 2 3]

Row-2 [4 5 6]

Row-3 [7 8 9]

Col-1 [1 4 7]

Col-2 [2 5 8]

Col-3 [3 6 9]

Upper-Left [[1 2]

[4 5]]

Upper-Right [[2 3]

[5 6]]

Bottom-Left [[4 5]

[7 8]]

Bottom-Right [[5 6]

[8 9]]

Tensorflow

# TensorFlow

b = tf.constant([[1,2,3],[4,5,6],[7,8,9]])

# Matrix Indexing

# Print all individual Rows and Columns

print("Row-1",b[0, :])

print("Row-2",b[1, :])

print("Row-3",b[2, :])

print("Col-1",b[:, 0])

print("Col-2",b[:, 1])

print("Col-3",b[:, 2])

# Print the sub-diagonal matrix

print("Upper-Left",b[0:2,0:2])

print("Upper-Right",b[0:2,1:3])

print("Bottom-Left",b[1:3,0:2])

print("Bottom-Right",b[1:3,1:3])

############################# OUTPUT ##############################

Row-1 tf.Tensor([1 2 3], shape=(3,), dtype=int32)

Row-2 tf.Tensor([4 5 6], shape=(3,), dtype=int32)

Row-3 tf.Tensor([7 8 9], shape=(3,), dtype=int32)

Col-1 tf.Tensor([1 4 7], shape=(3,), dtype=int32)

Col-2 tf.Tensor([2 5 8], shape=(3,), dtype=int32)

Col-3 tf.Tensor([3 6 9], shape=(3,), dtype=int32)

Upper-Left tf.Tensor( [[1 2] [4 5]], shape=(2, 2), dtype=int32)

Upper-Right tf.Tensor( [[2 3] [5 6]], shape=(2, 2), dtype=int32)

Bottom-Left tf.Tensor( [[4 5] [7 8]], shape=(2, 2), dtype=int32)

Bottom-Right tf.Tensor( [[5 6] [8 9]], shape=(2, 2), dtype=int32)

Torch

# Torch

c = torch.tensor([[1,2,3],[4,5,6],[7,8,9]])

# Matrix Indexing

# Print all individual Rows and Columns

print("Row-1",c[0, :])

print("Row-2",c[1, :])

print("Row-3",c[2, :])

print("Col-1",c[:, 0])

print("Col-2",c[:, 1])

print("Col-3",c[:, 2])

# Print the sub-diagonal matrix

print("Upper-Left",c[0:2,0:2])

print("Upper-Right",c[0:2,1:3])

print("Bottom-Left",c[1:3,0:2])

print("Bottom-Right",c[1:3,1:3])

############################# OUTPUT ##############################

Row-1 tensor([1, 2, 3])

Row-2 tensor([4, 5, 6])

Row-3 tensor([7, 8, 9])

Col-1 tensor([1, 4, 7])

Col-2 tensor([2, 5, 8])

Col-3 tensor([3, 6, 9])

Upper-Left tensor([[1, 2],[4, 5]])

Upper-Right tensor([[2, 3],[5, 6]])

Bottom-Left tensor([[4, 5],[7, 8]])

Bottom-Right tensor([[5, 6],[8, 9]])
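Indexing with a single integer drops that axis, while a slice of length one keeps it. This matters when the result is later fed into a matrix operation. A NumPy sketch on the same 3x3 matrix:

```python
import numpy as np

a = np.array([[1, 2, 3], [4, 5, 6], [7, 8, 9]])

row_1d = a[0, :]      # integer index: axis removed, shape (3,)
row_2d = a[0:1, :]    # length-1 slice: axis kept, shape (1, 3)
col_2d = a[:, [0]]    # list index: axis kept, shape (3, 1)

print(row_1d.shape, row_2d.shape, col_2d.shape)
```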

13. Reshaping and transposing axes

> Reshape & Transpose

Numpy

# Numpy

a = np.arange(9)

print(a)

a = np.reshape(a, (3,3))

print(a)

a = np.transpose(a, (1,0)) # Swap axes (1,0)

print(a)

############################# OUTPUT ##############################

[0 1 2 3 4 5 6 7 8]

[[0 1 2]

[3 4 5]

[6 7 8]]

[[0 3 6]

[1 4 7]

[2 5 8]]

Tensorflow

# TensorFlow

b = tf.range(9)

print(b)

b = tf.reshape(b, (3,3))

print(b)

b = tf.transpose(b, perm=[1,0]) # Swap axes in perm (1,0)

print(b)

############################# OUTPUT ##############################

tf.Tensor([0 1 2 3 4 5 6 7 8], shape=(9,), dtype=int32)

tf.Tensor( [[0 1 2] [3 4 5] [6 7 8]], shape=(3, 3), dtype=int32)

tf.Tensor( [[0 3 6] [1 4 7] [2 5 8]], shape=(3, 3), dtype=int32)

Torch

# Torch

c = torch.arange(9)

print(c)

c = torch.reshape(c, (3,3))

print(c)

c = c.permute(1,0) # Swap axes in perm (1,0)

print(c)

############################# OUTPUT ##############################

tensor([0, 1, 2, 3, 4, 5, 6, 7, 8])

tensor([[0, 1, 2], [3, 4, 5], [6, 7, 8]])

tensor([[0, 3, 6], [1, 4, 7], [2, 5, 8]])
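Reshape and transpose are easy to confuse on a square matrix because both return a 3x3 result. On a non-square array the difference is visible: reshape keeps the element order and only regroups it, transpose swaps rows and columns. A NumPy sketch:

```python
import numpy as np

a = np.arange(6).reshape(2, 3)     # [[0 1 2], [3 4 5]]

transposed = a.T                   # rows become columns
reshaped = a.reshape(3, 2)         # same order, regrouped in pairs

print(transposed)
print(reshaped)

# -1 lets NumPy infer one dimension from the total size.
inferred = np.arange(6).reshape(-1, 3)
print(inferred.shape)
```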

14. Concatenation

> Matrix Concatenation

Numpy

# Numpy

a = np.array([[1, 2], [3, 4]])

print("a",a)

b = np.array([[5, 6]])

print("b",b)

d = np.concatenate((a, b), axis=0)

print("Concat (axis=0 - Row)")

print(d)

e = np.concatenate((a, b.T), axis=1)

print("Concat (axis=1 - Column)")

print(e)

############################# OUTPUT ##############################

a [[1 2] [3 4]]

b [[5 6]]

Concat (axis=0 - Row)

[[1 2]

[3 4]

[5 6]]

Concat (axis=1 - Column)

[[1 2 5]

[3 4 6]]

Tensorflow

# TensorFlow

x = tf.constant([[1, 2], [3, 4]])

print("x",x)

y = tf.constant([[5, 6]])

print("y",y)

z = tf.concat((x, y), axis=0)

print("Concat (axis=0 - Row)")

print(z)

z = tf.concat((x, tf.transpose(y)), axis=1)

print("Concat (axis=1 - Column)")

print(z)

############################# OUTPUT ##############################

x tf.Tensor( [[1 2] [3 4]], shape=(2, 2), dtype=int32)

y tf.Tensor([[5 6]], shape=(1, 2), dtype=int32)

Concat (axis=0 - Row)

tf.Tensor( [[1 2] [3 4] [5 6]], shape=(3, 2), dtype=int32)

Concat (axis=1 - Column)

tf.Tensor( [[1 2 5] [3 4 6]], shape=(2, 3), dtype=int32)

Torch

# Torch

t = torch.tensor([[1, 2], [3, 4]])

print("x",t)

u = torch.tensor([[5, 6]])

print("y",u)

v = torch.cat((t , u), axis=0)

print("Concat (axis=0 - Row)")

print(v)

v = torch.cat((t , u.T), axis=1)

print("Concat (axis=1 - Column)")

print(v)

############################# OUTPUT ##############################

x tensor([[1, 2], [3, 4]])

y tensor([[5, 6]])

Concat (axis=0 - Row)

tensor([[1, 2],

[3, 4],

[5, 6]])

Concat (axis=1 - Column)

tensor([[1, 2, 5],

[3, 4, 6]])
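Concatenation joins arrays along an axis that already exists, so the number of dimensions stays the same. Stacking, by contrast, creates a new axis. A NumPy sketch of the difference (the second matrix is made up for this illustration):

```python
import numpy as np

a = np.array([[1, 2], [3, 4]])
b = np.array([[5, 6], [7, 8]])

joined = np.concatenate((a, b), axis=0)   # still 2-D: shape (4, 2)
stacked = np.stack((a, b), axis=0)        # new leading axis: shape (2, 2, 2)

print(joined.shape, stacked.shape)
```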

15. Sum across axes

> Axes — Sum

Numpy

# Numpy

a = np.array([[1,2,3,4,5], [10,10,10,10,10]])

print(a)

print("Overall flattened Sum", a.sum())

print("Sum across Columns",a.sum(axis=0))

print("Sum across Rows",a.sum(axis=1))

############################# OUTPUT ##############################

[[ 1 2 3 4 5] [10 10 10 10 10]]

Overall flattened Sum 65

Sum across Columns [11 12 13 14 15]

Sum across Rows [15 50]

Tensorflow

# TensorFlow

b = tf.constant([[1,2,3,4,5], [10,10,10,10,10]])

print(b)

print("Overall flattened Sum",tf.math.reduce_sum(b))

print("Sum across Columns",tf.math.reduce_sum(b, axis=0))

print("Sum across Rows",tf.math.reduce_sum(b, axis=1))

############################# OUTPUT ##############################

tf.Tensor([[ 1 2 3 4 5] [10 10 10 10 10]], shape=(2, 5), dtype=int32)

Overall flattened Sum tf.Tensor(65, shape=(), dtype=int32)

Sum across Columns tf.Tensor([11 12 13 14 15], shape=(5,), dtype=int32)

Sum across Rows tf.Tensor([15 50], shape=(2,), dtype=int32)

Torch

# Torch

c = torch.tensor([[1,2,3,4,5], [10,10,10,10,10]])

#print(c)

print("Overall flattened Sum",torch.sum(c))

print("Sum across Columns",torch.sum(c, axis=0))

print("Sum across Rows",torch.sum(c, axis=1))

############################# OUTPUT ##############################

Overall flattened Sum tensor(65)

Sum across Columns tensor([11, 12, 13, 14, 15])

Sum across Rows tensor([15, 50])
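The axis argument names the axis that is collapsed: `axis=0` adds the rows together and leaves one value per column, `axis=1` leaves one value per row. With `keepdims=True` the collapsed axis stays as length 1, which makes the result broadcast cleanly against the input. A NumPy sketch:

```python
import numpy as np

a = np.array([[1, 2, 3, 4, 5], [10, 10, 10, 10, 10]])

col_sums = a.sum(axis=0)                       # shape (5,)
row_sums = a.sum(axis=1)                       # shape (2,)
row_sums_kept = a.sum(axis=1, keepdims=True)   # shape (2, 1)

fractions = a / row_sums_kept                  # each row now sums to 1

print(col_sums, row_sums, row_sums_kept.shape)
print(fractions.sum(axis=1))
```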

16. Mean across axes

Numpy

# Numpy

a = np.array([[1,2,3,4,5],

[10,10,10,10,10]])

print(a)

print("Overall flattened mean", a.mean())

print("Mean across Columns",a.mean(axis=0))

print("Mean across Rows",a.mean(axis=1))

############################# OUTPUT ##############################

[[ 1 2 3 4 5] [10 10 10 10 10]]

Overall flattened mean 6.5

Mean across Columns [5.5 6. 6.5 7. 7.5]

Mean across Rows [ 3. 10.]

Tensorflow

# TensorFlow

b = tf.constant([[1,2,3,4,5],

[10,10,10,10,10]])

print(b)

# b is int32, so reduce_mean returns truncated int32 results (6 rather than 6.5)

print("Overall flattened mean",tf.math.reduce_mean(b))

print("Mean across Columns",tf.math.reduce_mean(b, axis=0))

print("Mean across Rows",tf.math.reduce_mean(b, axis=1))

############################# OUTPUT ##############################

tf.Tensor( [[ 1 2 3 4 5] [10 10 10 10 10]], shape=(2, 5), dtype=int32)

Overall flattened mean tf.Tensor(6, shape=(), dtype=int32)

Mean across Columns tf.Tensor([5 6 6 7 7], shape=(5,), dtype=int32)

Mean across Rows tf.Tensor([ 3 10], shape=(2,), dtype=int32)

Torch

# Torch

c = torch.tensor([[1,2,3,4,5], [10,10,10,10,10]], dtype=torch.float32)

print(c)

print("Overall flattened mean",torch.mean(c))

print("Mean across Columns",torch.mean(c, axis=0))

print("Mean across Rows",torch.mean(c, axis=1))

############################# OUTPUT ##############################

tensor([[ 1., 2., 3., 4., 5.],

[10., 10., 10., 10., 10.]])

Overall flattened mean tensor(6.5000)

Mean across Columns tensor([5.5000, 6.0000, 6.5000, 7.0000, 7.5000])

Mean across Rows tensor([ 3., 10.])
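The three outputs above differ: NumPy and Torch report 6.5, while the TensorFlow result on an int32 tensor is 6 with column means [5 6 6 7 7]. Those values are what integer division produces. The sketch below reproduces them with NumPy only, as an illustration of the arithmetic rather than of TensorFlow's internals:

```python
import numpy as np

a = np.array([[1, 2, 3, 4, 5], [10, 10, 10, 10, 10]])

float_mean = a.mean()                         # NumPy promotes to float64
int_mean = a.sum() // a.size                  # integer division drops the .5
col_int_mean = a.sum(axis=0) // a.shape[0]    # per-column integer means

print(float_mean, int_mean, col_int_mean)
```

Casting the input to a float type before taking the mean avoids the truncation, which is why the Torch example creates its tensor with `dtype=torch.float32`.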

17. Expanding dimensions and moving axes

> Concat and move axes

Numpy

# Numpy

a = np.full((3,3),10.0)

print(a)

print(a.shape)

a = np.expand_dims(a, axis=0)

print(a)

print(a.shape)

b = np.full((3,3),20.0)

print(b)

b = np.expand_dims(b, axis=0)

print(b.shape)

c = np.concatenate((a,b), axis=0)

c = np.moveaxis(c,2,0) # Move axis 2 (the last axis) to position 0

print(c)

print(c.shape)

############################# OUTPUT ##############################

[[10. 10. 10.]

[10. 10. 10.]

[10. 10. 10.]]

(3, 3)

[[[10. 10. 10.]

[10. 10. 10.]

[10. 10. 10.]]]

(1, 3, 3)

[[20. 20. 20.]

[20. 20. 20.]

[20. 20. 20.]]

(1, 3, 3)

[[[10. 10. 10.] [20. 20. 20.]]

[[10. 10. 10.] [20. 20. 20.]]

[[10. 10. 10.] [20. 20. 20.]]]

(3, 2, 3)

Tensorflow

# TensorFlow

x = tf.fill((3,3),10.0)

print(x)

print(x.shape)

x = tf.expand_dims(x, axis=0)

print(x.shape)

y = tf.fill((3,3),20.0)

print(y)

print(y.shape)

y = tf.expand_dims(y, axis=0)

print(y.shape)

z = tf.concat((x,y), axis=0)

z = tf.transpose(z, [1, 0, 2])

print(z.shape)

############################# OUTPUT ##############################

tf.Tensor( [[10. 10. 10.]

[10. 10. 10.]

[10. 10. 10.]], shape=(3, 3), dtype=float32)

(3, 3)

(1, 3, 3)

tf.Tensor( [[20. 20. 20.]

[20. 20. 20.]

[20. 20. 20.]], shape=(3, 3), dtype=float32)

(3, 3)

(1, 3, 3)

(3, 2, 3)

Torch

# Torch

m1 = torch.ones((2,), dtype=torch.int32)

m1 = m1.new_full((3, 3), 10)

m1 = torch.unsqueeze(m1, axis=0)

print(m1)

print(m1.shape)

m2 = torch.ones((2,), dtype=torch.int32)

m2 = m2.new_full((3, 3), 20)

print(m2)

m2 = torch.unsqueeze(m2, axis=0)

print(m2.shape)

m = torch.cat((m1,m2), axis=0)

m = m.permute([1,0,2])

print(m)

print(m.shape)

############################# OUTPUT ##############################

tensor([[[10, 10, 10],

[10, 10, 10],

[10, 10, 10]]], dtype=torch.int32)

torch.Size([1, 3, 3])

tensor([[20, 20, 20],

[20, 20, 20],

[20, 20, 20]], dtype=torch.int32)

torch.Size([1, 3, 3])

tensor([[[10, 10, 10],

[20, 20, 20]],

[[10, 10, 10],

[20, 20, 20]],

[[10, 10, 10],

[20, 20, 20]]], dtype=torch.int32)

torch.Size([3, 2, 3])
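The NumPy example uses `moveaxis(c, 2, 0)` while the TensorFlow and Torch examples use the permutation [1, 0, 2]. Both give shape (3, 2, 3) here only because two of the axes have the same length. With three different axis lengths the two operations are clearly distinct, as this NumPy sketch shows (the (2, 3, 4) shape is chosen just for illustration):

```python
import numpy as np

c = np.arange(24).reshape(2, 3, 4)

moved = np.moveaxis(c, 2, 0)             # last axis to the front: (4, 2, 3)
same = np.transpose(c, (2, 0, 1))        # the equivalent full permutation
swapped = np.transpose(c, (1, 0, 2))     # swap the first two axes: (3, 2, 4)

print(moved.shape, same.shape, swapped.shape)
print(np.array_equal(moved, same))
```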

18. Maximum and minimum values

> Max for axis=0

> Max for axis=1

Numpy

# Numpy

a = np.array([[5,10,15],

[20,25,30]])

b = np.array([[6,69,35],

[70,10,82]])

c = np.array([[25,45,48],

[4,100,89]])

print(a)

final = np.zeros((3,2,3))

print(final.shape)

final[0, :, :] = a

final[1, :, :] = b

final[2, :, :] = c

print(final)

print("Overall flattened max", final.max())

print("max across Columns",final.max(axis=0))

print("max across Rows",final.max(axis=1))

print("Index of max value across the flattened max", final.argmax())

print("Index of max value across Columns",final.argmax(axis=0))

print("Index of max value across Rows",final.argmax(axis=1))

############################# OUTPUT ##############################

[[ 5 10 15]

[20 25 30]]

(3, 2, 3)

[[[ 5. 10. 15.]

[ 20. 25. 30.]]

[[ 6. 69. 35.]

[ 70. 10. 82.]]

[[ 25. 45. 48.]

[ 4. 100. 89.]]]

Overall flattened max 100.0

max across Columns

[[ 25. 69. 48.]

[ 70. 100. 89.]]

max across Rows

[[ 20. 25. 30.]

[ 70. 69. 82.]

[ 25. 100. 89.]]

Index of max value across the flattened max 16

Index of max value across Columns

[[2 1 2]

[1 2 2]]

Index of max value across Rows

[[1 1 1]

[1 0 1]

[0 1 1]]

Tensorflow

# Tensorflow

final = tf.constant([[[5,10,15],

[20,25,30]],

[[6,69,35],

[70,10,82]],

[[25,45,48],

[4,100,89]]])

print(final)

print("Overall flattened max", tf.math.reduce_max(final))

print("max across Columns",tf.math.reduce_max(final,axis=0))

print("max across Rows",tf.math.reduce_max(final, axis=1))

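# Note: tf.math.argmax defaults to axis=0, so this call does not flatten the tensor; use tf.math.argmax(tf.reshape(final, [-1])) for the flattened index

print("Index of max value across the flattened max", tf.math.argmax(final))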

print("Index of max value across Columns",tf.math.argmax(final, axis=0))

print("Index of max value across Rows",tf.math.argmax(final, axis=1))

############################# OUTPUT ##############################

tf.Tensor( [[[ 5 10 15]

[ 20 25 30]]

[[ 6 69 35]

[ 70 10 82]]

[[ 25 45 48]

[ 4 100 89]]], shape=(3, 2, 3), dtype=int32)

Overall flattened max tf.Tensor(100, shape=(), dtype=int32)

max across Columns

tf.Tensor( [[ 25 69 48]

[ 70 100 89]], shape=(2, 3), dtype=int32)

max across Rows

tf.Tensor( [[ 20 25 30]

[ 70 69 82]

[ 25 100 89]], shape=(3, 3), dtype=int32)

Index of max value across the flattened max

tf.Tensor( [[2 1 2]

[1 2 2]], shape=(2, 3), dtype=int64)

Index of max value across Columns

tf.Tensor( [[2 1 2]

[1 2 2]], shape=(2, 3), dtype=int64)

Index of max value across Rows

tf.Tensor( [[1 1 1]

[1 0 1]

[0 1 1]], shape=(3, 3), dtype=int64)

Torch

# Torch

final = torch.tensor([[[5,10,15],

[20,25,30]],

[[6,69,35],

[70,10,82]],

[[25,45,48],

[4,100,89]]])

print(final)

print("Overall flattened max", torch.max(final))

print("max across Columns",torch.max(final,axis=0))

print("max across Rows",torch.max(final, axis=1))

print("Index of max value across the flattened max", torch.argmax(final))

print("Index of max value across Columns",torch.argmax(final, axis=0))

print("Index of max value across Rows",torch.argmax(final, axis=1))

############################# OUTPUT ##############################

tensor([[[ 5, 10, 15],

[ 20, 25, 30]],

[[ 6, 69, 35],

[ 70, 10, 82]],

[[ 25, 45, 48],

[ 4, 100, 89]]])

Overall flattened max tensor(100)

max across Columns torch.return_types.max( values=

tensor([[ 25, 69, 48],

[ 70, 100, 89]]),

indices=tensor([[2, 1, 2],

[1, 2, 2]]))

max across Rows torch.return_types.max( values=

tensor([[ 20, 25, 30],

[ 70, 69, 82],

[ 25, 100, 89]]),

indices=tensor([[1, 1, 1],

[1, 0, 1],

[0, 1, 1]]))

Index of max value across the flattened max tensor(16)

Index of max value across Columns

tensor([[2, 1, 2],

[1, 2, 2]])

Index of max value across Rows

tensor([[1, 1, 1],

[1, 0, 1],

[0, 1, 1]])
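The flattened index 16 reported by NumPy and Torch is not very readable on its own. `np.unravel_index` converts it back into a position along each axis; a short sketch on the same data:

```python
import numpy as np

final = np.array([[[5, 10, 15], [20, 25, 30]],
                  [[6, 69, 35], [70, 10, 82]],
                  [[25, 45, 48], [4, 100, 89]]])

flat_index = final.argmax()                              # index into the flattened array
position = np.unravel_index(flat_index, final.shape)     # (block, row, column)

print(flat_index, position, final[position])
```

For a (3, 2, 3) array the flat index is block*6 + row*3 + column, so position (2, 1, 1) maps to 2*6 + 1*3 + 1 = 16.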

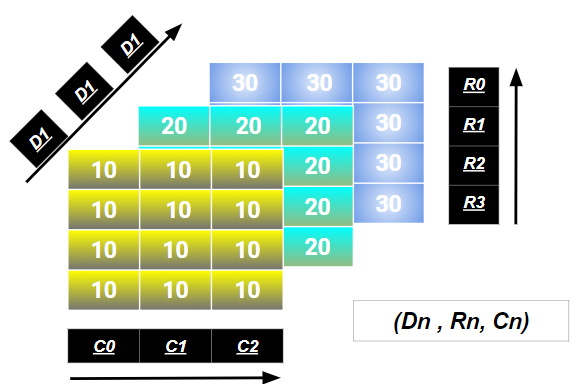

19. Slicing and indexing (3-D matrices)

> 3x3 Matrix and its indices

> Upper-Left & Lower-Right

> Middle Elements & Inverse Middle Element

Numpy

# Numpy

a = np.array([[10,10,10],[10,10,10],

[10,10,10],[10,10,10]])

b = np.array([[20,20,20],[20,20,20],

[20,20,20],[20,20,20]])

c = np.array([[30,30,30],[30,30,30],

[30,30,30],[30,30,30]])

final = np.zeros((3,4,3))

final[0, :, :] = a

final[1, :, :] = b

final[2, :, :] = c

print("Upper-Left",final[:, 0:2, 0:2])

print("Lower-Right",final[:, 2:, 1:])

print("Middle Elements",final[:,1:3, 1])

# Ignore Middle Elements

a = np.array([[1,2,3],[4,5,6],[7,8,9],[10,11,12]])

b = np.array([[13,14,15],[16,17,18],[19,20,21],[22,23,24]])

c = np.array([[25,26,27],[28,29,30],[31,32,33],[34,35,36]])

final = np.zeros((3,4,3))

print(final.shape)

final[0, :, :] = a

final[1, :, :] = b

final[2, :, :] = c

# This works, but listing every index by hand is not efficient

print("Ignore Middle",final[:,[0,0,0,1,1,2,2,3,3,3], [0,1,2,0,2,0,2,0,1,2]])

############################# OUTPUT ##############################

Upper-Left [[[10. 10.]

[10. 10.]]

[[20. 20.]

[20. 20.]]

[[30. 30.]

[30. 30.]]]

Lower-Right [[[10. 10.]

[10. 10.]]

[[20. 20.]

[20. 20.]]

[[30. 30.]

[30. 30.]]]

Middle Elements [[10. 10.]

[20. 20.]

[30. 30.]]

(3, 4, 3)

Ignore Middle [[ 1. 2. 3. 4. 6. 7. 9. 10. 11. 12.]

[13. 14. 15. 16. 18. 19. 21. 22. 23. 24.]

[25. 26. 27. 28. 30. 31. 33. 34. 35. 36.]]

Tensorflow

# Tensorflow

final = tf.constant([[[10,10,10],[10,10,10],

[10,10,10],[10,10,10]],

[[20,20,20],[20,20,20],

[20,20,20],[20,20,20]],

[[30,30,30],[30,30,30],

[30,30,30],[30,30,30]]])

print("Upper-Left",final[:, 0:2, 0:2])

print("Lower-Right",final[:, 2:, 1:])

print("Middle Elements",final[:,1:3, 1])

############################# OUTPUT ##############################

Upper-Left tf.Tensor(

[[[10 10]

[10 10]]

[[20 20]

[20 20]]

[[30 30]

[30 30]]], shape=(3, 2, 2), dtype=int32)

Lower-Right tf.Tensor(

[[[10 10]

[10 10]]

[[20 20]

[20 20]]

[[30 30]

[30 30]]], shape=(3, 2, 2), dtype=int32)

Middle Elements tf.Tensor(

[[10 10]

[20 20]

[30 30]], shape=(3, 2), dtype=int32)

Torch

# Torch

a = torch.Tensor([[10,10,10],[10,10,10],

[10,10,10],[10,10,10]])

b = torch.Tensor([[20,20,20],[20,20,20],

[20,20,20],[20,20,20]])

c = torch.Tensor([[30,30,30],[30,30,30],

[30,30,30],[30,30,30]])

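final = np.zeros((3,4,3)) # NumPy buffer, so the printed results are NumPy arrays; torch.zeros((3,4,3)) would keep everything in Torch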

final[0, :, :] = a

final[1, :, :] = b

final[2, :, :] = c

print("Upper-Left",final[:, 0:2, 0:2])

print("Lower-Right",final[:, 2:, 1:])

print("Middle Elements",final[:,1:3, 1])

# Ignore Middle Elements

a = torch.Tensor([[1,2,3],[4,5,6],[7,8,9],[10,11,12]])

b = torch.Tensor([[13,14,15],[16,17,18],[19,20,21],[22,23,24]])

c = torch.Tensor([[25,26,27],[28,29,30],[31,32,33],[34,35,36]])

final = np.zeros((3,4,3)) # NumPy buffer again; see the note on the first example

print(final.shape)

final[0, :, :] = a

final[1, :, :] = b

final[2, :, :] = c

# This works, but listing every index by hand is not efficient

print("Ignore Middle",final[:,[0,0,0,1,1,2,2,3,3,3], [0,1,2,0,2,0,2,0,1,2]])

############################# OUTPUT ##############################

Upper-Left [[[10. 10.]

[10. 10.]]

[[20. 20.]

[20. 20.]]

[[30. 30.]

[30. 30.]]]

Lower-Right [[[10. 10.]

[10. 10.]]

[[20. 20.]

[20. 20.]]

[[30. 30.]

[30. 30.]]]

Middle Elements [[10. 10.]

[20. 20.]

[30. 30.]]

(3, 4, 3)

Ignore Middle [[ 1. 2. 3. 4. 6. 7. 9. 10. 11. 12.]

[13. 14. 15. 16. 18. 19. 21. 22. 23. 24.]

[25. 26. 27. 28. 30. 31. 33. 34. 35. 36.]]
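Listing all ten index pairs by hand is error-prone. One possible alternative, sketched here with NumPy, is a boolean mask over the 4x3 grid that is True everywhere except at the two middle positions. The mask name `keep` and the use of `np.arange` to rebuild the same data are choices made for this illustration:

```python
import numpy as np

# Same values as the three 4x3 matrices in the example: 1..36.
final = np.arange(1, 37, dtype=np.float64).reshape(3, 4, 3)

keep = np.ones((4, 3), dtype=bool)
keep[1:3, 1] = False          # mark the two middle elements of each matrix

outer = final[:, keep]        # apply the mask to the last two axes

print(outer.shape)
print(outer)
```

The mask is applied to each of the three matrices in turn, so the result has one row of ten values per matrix, matching the "Ignore Middle" output above.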
