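Goal

Convert the .pkl files produced by Frankmocap into .bvh for Blender or .fbx for Maya.

Frankmocap project on GitHub

For 2D to 3D to .bvh, see VideoTo3dPoseAndBvh. Going from .bvh to 3D points is comparatively easy, because in a .bvh the vectors formed between joints carry 6 degrees of freedom. A 3D point has only 3 degrees of freedom, so converting points to .bvh means the degrees of freedom have to be constrained.

Problems

1. As of May 9, 2022, the original project authors had not yet added this feature, i.e. a Blender addon.

2. Third-party authors have hacked together many related plugins that provide Blender interfaces for EasyMocap, VIBE, Frankmocap and others. However, many of them may cover SMPL but not SMPL-X.

3. There are few tutorials on Blender's Python environment and on installing packages into it, and I did not feel like reading the official documentation.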

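Related 1

A third-party addon. Its author built it on top of VIBE (the part of VIBE that converts .pkl to .bvh), but the plugin leans toward EasyMocap and most of its features target it. When I imported Frankmocap .pkl files, Blender generated only 14 joints. The author has left many comments in the issues of the original Frankmocap project, so you can also contact him if you get stuck.

Edit -> Preferences -> install the addon (i.e. install the zip) -> press N to bring up the addon panel -> import the mocap directory (it contains the many .pkl files extracted from one video)

Related 2

I forget where I found the second one. It is a Python file, fbx_output_FRANKMOCAP.py. It does import bpy, so it is clearly meant to run inside Blender. Python in Blender is really not friendly: you have to read the official manual, and I do not know what the built-in Python console is good for. Different Blender versions also behave differently and throw many odd errors.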

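Environment

Ubuntu + Blender 3.1.2 (stable release).

Files to download

You need SMPL_unity_v.1.0.0.zip (downloadable from the SMPL authors' official website). Unzip it into the VIBE project, and put the fbx_output_FRANKMOCAP.py plugin under lib/utils in the VIBE project. You do not need to set up the original project's environment, only the environment the plugin needs, as follows.

Installing packages

Just put the required packages into the site-packages directory under the Blender installation. What I did: create a conda virtual environment with the same Python version as Blender's, activate it, and run pip install packages -t directory to install into the site-packages under the Blender directory.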
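As a rough sketch of the install step described above, the helper below only assembles the pip command that targets a given site-packages directory. The function name and the target path are hypothetical placeholders, not something taken from the original setup; substitute the site-packages path of your own Blender installation.

```python
import sys


def build_pip_target_command(packages, target_dir, python_exe=sys.executable):
    """Return the argv list for `pip install <packages> --target <target_dir>`."""
    if not packages:
        raise ValueError("at least one package name is required")
    # `--target` is the long form of pip's `-t` option.
    return [python_exe, "-m", "pip", "install", *packages, "--target", target_dir]


# Hypothetical placeholder path; replace it with your Blender site-packages.
blender_site_packages = "/path/to/blender/3.1/python/lib/python3.10/site-packages"
cmd = build_pip_target_command(["joblib", "pandas"], blender_site_packages)
print(" ".join(cmd))
```

The printed command can be pasted into a terminal inside the conda environment, or passed to subprocess.run(cmd, check=True). The interpreter that runs pip should have the same Python version as Blender's bundled one, otherwise compiled packages may fail to import.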

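Running

To run a script or .py file, use: ./blender --python ~/Desktop/VIBE_pkl2fbx/lib/utils/fbx_output_FRANKMOCAP.py. The original file takes argparse command-line arguments. I did not know how to pass them through Blender, so I edited the source and hard-coded every parameter. Anyone who wants to use the code has to change the paths and parameters in it. In the end you can export a .bvh or .fbx file from Blender.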
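One common way around the argparse problem: Blender stops interpreting its own options at a standalone -- on the command line and leaves everything after it for the script. The sketch below, with a hypothetical helper name and a reduced set of arguments, parses only that tail. It is an illustration under those assumptions, not what the original script does.

```python
import argparse


def parse_script_args(argv):
    """Parse only the arguments that follow a standalone '--' in argv."""
    # Everything before '--' belongs to Blender itself.
    script_argv = argv[argv.index("--") + 1:] if "--" in argv else []
    parser = argparse.ArgumentParser(description="Arguments for a script run by Blender")
    parser.add_argument("--input", dest="input_path", required=True)
    parser.add_argument("--output", dest="output_path", required=True)
    parser.add_argument("--gender", default="male")
    return parser.parse_args(script_argv)


# Simulated sys.argv as the script would see it when launched by Blender.
example_argv = [
    "blender", "--python", "fbx_output_FRANKMOCAP.py",
    "--", "--input", "data/mocap", "--output", "data/output/output.fbx",
]
args = parse_script_args(example_argv)
print(args.input_path, args.output_path, args.gender)
```

Inside a real script you would call parse_script_args(sys.argv) and launch it as ./blender --python script.py -- --input ... --output ....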

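Introduction to the Blender Python API (in Chinese)

Using Blender (Ubuntu)

1. You can install it from the command line, or download the archive from the official site and unpack it. I unpacked the archive.

2. The timeline can be brought up at the bottom left of the Blender window. A .bvh or .fbx is usually a skeleton or skinned-mesh animation, and the timeline plays the motion.

3. Find the Python interpreter under bin in the Blender installation directory. Running ./python3.10 in an Ubuntu terminal drops you into Blender's interpreter, though that seems to be of little use.

4. The Python console inside Blender is already in Blender's Python environment, but you cannot really run programs there; apart from the functions under the bpy module I do not know what else it is for. There are two ways to run code. The first is to open the .py file in the Scripting workspace and run it. The second is to use an Ubuntu terminal: go to the Blender installation directory, run ./blender to start the application (double-clicking the icon also works), and run a script or .py file with ./blender --python directory.py.

5. Blender can certainly be driven from Python through an IDE or other APIs, but I only wanted to use a single tool and did not dig further.

6. Every result image is actually a moving clip whose motion matches the input video given to Frankmocap. The drawback is that this plugin does not output the hand joints.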
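The terminal launch from item 4 can also be scripted. The sketch below only builds the argument list; the helper name and the example paths are hypothetical. It relies on Blender's --python and --background options and on the -- separator that hands the remaining arguments to the script.

```python
def build_blender_command(blender_exe, script_path, script_args=(), background=False):
    """Return the argv list that runs script_path with Blender's Python."""
    cmd = [blender_exe]
    if background:
        # Run without the GUI; bpy.app.background is then True inside the script.
        cmd.append("--background")
    cmd += ["--python", script_path]
    if script_args:
        # Arguments after '--' are left for the script, not parsed by Blender.
        cmd += ["--", *script_args]
    return cmd


gui_cmd = build_blender_command("./blender", "lib/utils/fbx_output_FRANKMOCAP.py")
batch_cmd = build_blender_command(
    "./blender",
    "lib/utils/fbx_output_FRANKMOCAP.py",
    script_args=["--input", "data/mocap", "--output", "data/output/output.fbx"],
    background=True,
)
print(" ".join(gui_cmd))
print(" ".join(batch_cmd))
```

Either list can be handed to subprocess.run(cmd, check=True) from the Blender installation directory.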

Modified code

import os
import sys
import bpy
import time
import joblib
import argparse
import numpy as np
import addon_utils
from math import radians
from mathutils import Matrix, Vector, Quaternion, Euler
import pickle
import os.path
import pandas as pd

# Paths to the SMPL Unity models; change them for your machine.
male_model_path = '/home/dms/Desktop/VIBE_pkl2fbx/data/SMPL_unity_v.1.0.0/smpl/Models/SMPL_m_unityDoubleBlends_lbs_10_scale5_207_v1.0.0.fbx'
female_model_path = '/home/dms/Desktop/VIBE_pkl2fbx/data/SMPL_unity_v.1.0.0/smpl/Models/SMPL_f_unityDoubleBlends_lbs_10_scale5_207_v1.0.0.fbx'

fps_source = 30
fps_target = 30
gender = 'male'
start_origin = 1

# SMPL joint index -> bone name in the SMPL Unity armature (without gender prefix).
bone_name_from_index = {
    0: 'Pelvis',
    1: 'L_Hip',
    2: 'R_Hip',
    3: 'Spine1',
    4: 'L_Knee',
    5: 'R_Knee',
    6: 'Spine2',
    7: 'L_Ankle',
    8: 'R_Ankle',
    9: 'Spine3',
    10: 'L_Foot',
    11: 'R_Foot',
    12: 'Neck',
    13: 'L_Collar',
    14: 'R_Collar',
    15: 'Head',
    16: 'L_Shoulder',
    17: 'R_Shoulder',
    18: 'L_Elbow',
    19: 'R_Elbow',
    20: 'L_Wrist',
    21: 'R_Wrist',
    22: 'L_Hand',
    23: 'R_Hand'
}

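def Rodrigues(rotvec):
    # Convert an axis-angle vector of shape (3,) into a 3x3 rotation matrix.
    theta = np.linalg.norm(rotvec)
    # Unit rotation axis as a flat vector, so the matrix below is built from scalars.
    r = (rotvec / theta).reshape(3) if theta > 0. else np.asarray(rotvec, dtype=float).reshape(3)
    cost = np.cos(theta)
    # Skew-symmetric cross-product matrix of the axis.
    mat = np.asarray([[0, -r[2], r[1]],
                      [r[2], 0, -r[0]],
                      [-r[1], r[0], 0]])
    return cost*np.eye(3) + (1-cost)*np.outer(r, r) + np.sin(theta)*mat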

def setup_scene(model_path, fps_target):
    scene = bpy.data.scenes['Scene']
    # Set the scene frame rate.
    scene.render.fps = fps_target
    # Remove the default cube.
    if 'Cube' in bpy.data.objects:
        bpy.data.objects['Cube'].select_set(True)
        bpy.ops.object.delete()
    # Import the gender-specific SMPL .fbx template.
    bpy.ops.import_scene.fbx(filepath=model_path)

def process_pose(current_frame, pose, trans, pelvis_position):
    # 72 values = 24 joints x 3 axis-angle components.
    if pose.shape[0] == 72:
        rod_rots = pose.reshape(24, 3)
    else:
        rod_rots = pose.reshape(26, 3)
    mat_rots = [Rodrigues(rod_rot) for rod_rot in rod_rots]

    armature = bpy.data.objects['Armature']
    bones = armature.pose.bones

    # Set the pelvis location relative to the pelvis bone head and keyframe it.
    bones[bone_name_from_index[0]].location = Vector((100*trans[1], 100*trans[2], 100*trans[0])) - pelvis_position
    bones[bone_name_from_index[0]].keyframe_insert('location', frame=current_frame)

    for index, mat_rot in enumerate(mat_rots, 0):
        if index >= 24:
            continue
        bone = bones[bone_name_from_index[index]]
        bone_rotation = Matrix(mat_rot).to_quaternion()
        quat_x_90_cw = Quaternion((1.0, 0.0, 0.0), radians(-90))
        quat_z_90_cw = Quaternion((0.0, 0.0, 1.0), radians(-90))
        if index == 0:
            # Extra root rotation applied to the pelvis only.
            bone.rotation_quaternion = (quat_x_90_cw @ quat_z_90_cw) @ bone_rotation
        else:
            bone.rotation_quaternion = bone_rotation
        bone.keyframe_insert('rotation_quaternion', frame=current_frame)
    return

def process_poses(
        input_path,
        gender,
        fps_source,
        fps_target,
        start_origin,
        person_id=1,
):
    print('Processing: ' + input_path)

    # Directory of per-frame Frankmocap .pkl files (hard-coded; change it for your machine).
    pkl_path = '/home/dms/Desktop/VIBE_pkl2fbx/data/mocap/'
    pose_list = []
    for name in sorted(os.listdir(pkl_path)):
        print(name)
        with open(os.path.join(pkl_path, name), 'rb') as f:
            frank_data = pickle.load(f)
        pred_output_list = frank_data.get('pred_output_list') or []
        # Skip frames that carry no body pose for the first detected person.
        if not pred_output_list or not pred_output_list[0] or 'pred_body_pose' not in pred_output_list[0]:
            continue
        pose_list.append(np.asarray(pred_output_list[0]['pred_body_pose']).reshape(1, -1))

    if not pose_list:
        print('ERROR: No pred_body_pose found in ' + pkl_path)
        sys.exit(1)

    # One row of axis-angle pose parameters per frame.
    poses = np.concatenate(pose_list, axis=0)
    # No root translation is read from the .pkl files, so the root stays at the origin.
    trans = np.zeros((poses.shape[0], 3))

    if gender == 'female':
        model_path = female_model_path
        for k, v in bone_name_from_index.items():
            bone_name_from_index[k] = 'f_avg_' + v
    elif gender == 'male':
        model_path = male_model_path
        for k, v in bone_name_from_index.items():
            bone_name_from_index[k] = 'm_avg_' + v
    else:
        print('ERROR: Unsupported gender: ' + gender)
        sys.exit(1)

    # Limit the target fps to the source fps.
    if fps_target > fps_source:
        fps_target = fps_source

    print(f'Gender: {gender}')
    print(f'Number of source poses: {str(poses.shape[0])}')
    print(f'Source frames-per-second: {str(fps_source)}')
    print(f'Target frames-per-second: {str(fps_target)}')
    print('--------------------------------------------------')

    setup_scene(model_path, fps_target)

    scene = bpy.data.scenes['Scene']
    sample_rate = int(fps_source/fps_target)
    scene.frame_end = int(poses.shape[0]/sample_rate)

    # Read the pelvis bone head position in edit mode.
    bpy.ops.object.mode_set(mode='EDIT')
    pelvis_position = Vector(bpy.data.armatures[0].edit_bones[bone_name_from_index[0]].head)
    bpy.ops.object.mode_set(mode='OBJECT')

    source_index = 0
    frame = 1
    offset = np.array([0.0, 0.0, 0.0])

    while source_index < poses.shape[0]:
        print('Adding pose: ' + str(source_index))
        if start_origin:
            if source_index == 0:
                offset = np.array([trans[source_index][0], trans[source_index][1], 0])
        # Go to the new frame and keyframe the pose for it.
        scene.frame_set(frame)
        process_pose(frame, poses[source_index], (trans[source_index] - offset), pelvis_position)
        source_index += sample_rate
        frame += 1

    return frame

def export_animated_mesh(output_path):
    # Create the output directory if needed.
    output_dir = os.path.dirname(output_path)
    if not os.path.isdir(output_dir):
        os.makedirs(output_dir, exist_ok=True)

    # Select only the skinned mesh and its rig.
    bpy.ops.object.select_all(action='DESELECT')
    bpy.data.objects['Armature'].select_set(True)
    bpy.data.objects['Armature'].children[0].select_set(True)

    if output_path.endswith('.glb'):
        print('Exporting to glTF binary (.glb)')
        bpy.ops.export_scene.gltf(filepath=output_path, export_format='GLB', export_selected=True, export_morph=False)
    elif output_path.endswith('.fbx'):
        print('Exporting to FBX binary (.fbx)')
        bpy.ops.export_scene.fbx(filepath=output_path, use_selection=True, add_leaf_bones=False)
    else:
        print('ERROR: Unsupported export format: ' + output_path)
        sys.exit(1)
    return

if __name__ == '__main__':
    try:
        if bpy.app.background:
            parser = argparse.ArgumentParser(description='Create keyframed animated skinned SMPL mesh from VIBE output')
            parser.add_argument('--input', dest='input_path', type=str, required=True,
                                help='Input file or directory')
            parser.add_argument('--output', dest='output_path', type=str, required=True,
                                help='Output file or directory')
            parser.add_argument('--fps_source', type=int, default=fps_source,
                                help='Source framerate')
            parser.add_argument('--fps_target', type=int, default=fps_target,
                                help='Target framerate')
            parser.add_argument('--gender', type=str, default=gender,
                                help='Always use specified gender')
            parser.add_argument('--start_origin', type=int, default=start_origin,
                                help='Start animation centered above origin')
            parser.add_argument('--person_id', type=int, default=1,
                                help='Detected person ID to use for fbx animation')
            # sys.argv also holds Blender's own options; only what follows '--' is for this script.
            script_argv = sys.argv[sys.argv.index('--') + 1:] if '--' in sys.argv else []
            args = parser.parse_args(script_argv)
            input_path = args.input_path
            output_path = args.output_path
            if not os.path.exists(input_path):
                print('ERROR: Invalid input path')
                sys.exit(1)

        # Hard-coded parameters (they override anything parsed above); edit them for your machine.
        fps_source = 30
        fps_target = 30
        gender = 'male'
        start_origin = 1
        # Python does not expand '~' by itself, so expand it explicitly.
        input_path = os.path.expanduser('~/Desktop/VIBE_pkl2fbx/data/mocap')
        output_path = os.path.expanduser('~/Desktop/VIBE_pkl2fbx/data/output/output.fbx')

        startTime = time.perf_counter()
        print('Input path: ' + input_path)
        print('Output path: ' + output_path)

        if not (output_path.endswith('.fbx') or output_path.endswith('.glb')):
            print('ERROR: Invalid output format (must be .fbx or .glb)')
            sys.exit(1)

        poses_processed = process_poses(
            input_path=input_path,
            gender=gender,
            fps_source=fps_source,
            fps_target=fps_target,
            start_origin=start_origin,
            person_id=0
        )
        export_animated_mesh(output_path)

        print('--------------------------------------------------')
        print('Animation export finished.')
        print(f'Poses processed: {str(poses_processed)}')
        print(f'Processing time : {time.perf_counter() - startTime:.2f} s')
        print('--------------------------------------------------')
        sys.exit(0)

    except SystemExit as ex:
        if ex.code is None:
            exit_status = 0
        else:
            exit_status = ex.code
        print('Exiting. Exit status: ' + str(exit_status))
        # Only exit to the OS when not running in the Blender GUI.
        if bpy.app.background:
            sys.exit(exit_status)

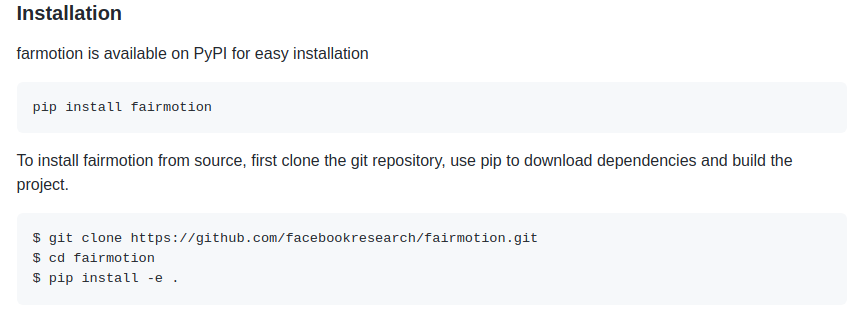
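The Rodrigues helper in the script above turns each 3-component axis-angle vector of the pose into a rotation matrix before Blender converts it to a quaternion. A stand-alone NumPy sketch of the same formula, which can be checked outside Blender, might look like this. The function name and the test vector are illustrative, not taken from the script.

```python
import numpy as np


def rodrigues(rotvec):
    """Axis-angle vector (3,) -> 3x3 rotation matrix via Rodrigues' formula."""
    rotvec = np.asarray(rotvec, dtype=float).reshape(3)
    theta = np.linalg.norm(rotvec)
    if theta < 1e-12:
        # A zero rotation vector is the identity rotation.
        return np.eye(3)
    r = rotvec / theta
    # Skew-symmetric cross-product matrix of the unit axis.
    K = np.array([[0.0, -r[2], r[1]],
                  [r[2], 0.0, -r[0]],
                  [-r[1], r[0], 0.0]])
    return np.cos(theta) * np.eye(3) + (1 - np.cos(theta)) * np.outer(r, r) + np.sin(theta) * K


# A 72-value SMPL body pose is 24 joints x 3 axis-angle components.
pose = np.zeros(72)
pose[0:3] = [0.0, 0.0, np.pi / 2]  # rotate the root 90 degrees about z
rod_rots = pose.reshape(24, 3)
mat_rots = np.stack([rodrigues(v) for v in rod_rots])

# The x axis should map onto the y axis under the root rotation.
rotated_x = mat_rots[0] @ np.array([1.0, 0.0, 0.0])
print(mat_rots.shape, np.round(rotated_x, 6))
```

Checking that each matrix is orthonormal (R.T @ R close to the identity) is a cheap sanity test before keyframing hundreds of frames.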

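Related 3

The fairmotion project, also from Facebook Research, mainly provides conversion between SMPL and bvh formats along with many other tasks. Its documentation is sparse and consists almost entirely of interface functions, so to fit it to your own needs you have to read the function definitions yourself.

Environment

This environment is very easy to set up, and either of the following two methods works. Because the human_body_prior library has changed, a few small problems will come up; just fix them as they appear.

Running

The original code at fairmotion/fairmotion/data/frankmocap.py fails with ValueError: could not broadcast input array from shape (8,3,3) into shape (1,3,3). I did not solve this problem and used a different piece of code instead, which also requires first merging the many .pkl files produced by Frankmocap into a single .pkl. Once that is done, you can run frankmocap.py to convert from the SMPL data format to bvh.

Data preprocessing code: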

import os
import pickle
import os.path as op

processed_data = []
root = r'./frankmocap_output/mocap'

# Merge the per-frame .pkl files, in file-name order, into one list.
for name in sorted(os.listdir(root)):
    file_path = op.join(root, name)
    with open(file_path, 'rb') as f:
        preprocess_data = pickle.load(f)
    # The 7th key holds the per-person predictions (assumed to be 'pred_output_list',
    # the key the Blender script above reads).
    motion_key = list(preprocess_data.keys())[6]
    motion_data = preprocess_data[motion_key]
    processed_data.append(motion_data)

with open(r'./frankmocap_output/processed_data.pkl', 'wb') as f:
    pickle.dump(processed_data, f)

frankmocap2bvh code:

import numpy as np
import pickle
import torch
from fairmotion.data import amass, bvh
from fairmotion.core import motion as motion_classes
from fairmotion.utils import constants, utils
from fairmotion.ops import conversions, motion as motion_ops


def get_smpl_base_position(bm, betas):
    # Joint positions of the body model in the zero pose.
    pose_body_zeros = torch.zeros((1, 3 * (22 - 1)))
    body = bm(pose_body=pose_body_zeros, betas=betas)
    base_position = body.Jtr.detach().numpy()[0, 0:22]
    return base_position


def compute_im2sim_scale(
    joints_img,
    base_position,
):
    # Ratio between lower-leg length in the body model and in the image.
    left_leg_sim = np.linalg.norm(
        base_position[amass.joint_names.index("lknee")] - base_position[amass.joint_names.index("lankle")])
    left_leg_img = np.linalg.norm(joints_img[29][:2] - joints_img[30][:2])
    right_leg_sim = np.linalg.norm(
        base_position[amass.joint_names.index("rknee")] - base_position[amass.joint_names.index("rankle")])
    right_leg_img = np.linalg.norm(joints_img[25][:2] - joints_img[26][:2])
    return (left_leg_sim + right_leg_sim) / (left_leg_img + right_leg_img)


def load(
    file,
    motion=None,
    bm_path=None,
    motion_key=None,
    estimate_root=False,
    scale=1.0,
    load_skel=True,
    load_motion=True,
    v_up_skel=np.array([0.0, 1.0, 0.0]),
    v_face_skel=np.array([0.0, 0.0, 1.0]),
    v_up_env=np.array([0.0, 1.0, 0.0]),
):
    # The merged .pkl is a list with one entry per frame; entry[0] is the first person.
    with open(file, "rb") as f:
        processed_data = pickle.load(f)
    motion_data = processed_data

    bm = amass.load_body_model(bm_path)
    betas = torch.Tensor(np.array(motion_data[0][0]["pred_betas"])[:]).to("cpu")
    num_joints = len(amass.joint_names)
    skel = amass.create_skeleton_from_amass_bodymodel(bm, betas, len(amass.joint_names), amass.joint_names)
    joint_names = [j.name for j in skel.joints]
    num_frames = len(motion_data)
    print(num_frames)

    T = np.random.rand(num_frames, num_joints, 4, 4)
    T[:] = constants.EYE_T
    ref_root_y = np.min((
        motion_data[0][0]["pred_joints_img"][25][1],
        motion_data[0][0]["pred_joints_img"][30][1]
    ))

    for i in range(num_frames):
        for j in range(num_joints):
            T[i][joint_names.index(amass.joint_names[j])] = conversions.R2T(
                np.array(motion_data[i][0]["pred_rotmat"][0])[j]
            )
        if estimate_root:
            R_root = conversions.T2R(T[i][0])
            p_root = np.zeros(3)
            base_position = get_smpl_base_position(bm, betas)
            im2sim_scale = compute_im2sim_scale(
                motion_data[i][0]["pred_joints_img"],
                base_position,
            )
            p_root[0] = np.mean((
                motion_data[i][0]["pred_joints_img"][27][0],
                motion_data[i][0]["pred_joints_img"][28][0]
            )) * im2sim_scale
            root_y = np.mean((
                motion_data[i][0]["pred_joints_img"][27][1],
                motion_data[i][0]["pred_joints_img"][28][1]
            ))
            p_root[2] = (ref_root_y - root_y) * im2sim_scale
            T[i][0] = conversions.Rp2T(R_root, p_root)

    motion = motion_classes.Motion.from_matrix(T, skel)
    motion.set_fps(60)
    motion = motion_ops.rotate(
        motion,
        conversions.Ax2R(conversions.deg2rad(-90)),
    )

    # Post-processing: lift the root whenever the lower toe is below 0.05.
    positions = motion.positions(local=False)
    for i in range(motion.num_frames()):
        ltoe = positions[i][amass.joint_names.index("ltoe")][2]
        rtoe = positions[i][amass.joint_names.index("rtoe")][2]
        offset = min(ltoe, rtoe)
        if offset < 0.05:
            R, p = conversions.T2Rp(T[i][0])
            p[2] += 0.05 - offset
            T[i][0] = conversions.Rp2T(R, p)

    motion = motion_classes.Motion.from_matrix(T, skel)
    motion = motion_ops.rotate(
        motion,
        conversions.Ax2R(conversions.deg2rad(-90)),
    )
    return motion


if __name__ == '__main__':
    file = r'../../daoboke/frankmocap_output/processed_data.pkl'
    motion = load(file=file, bm_path=r'../../body_models/smplh/neutral/model.npz')
    bvh.save(motion, filename=r'../../daoboke/frankmocap_output/output.bvh')
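The post-processing loop in load() raises the root whenever the lower of the two toes drops under 0.05. The arithmetic of that correction can be sketched on its own with NumPy. The function name and the sample heights below are made up for illustration and do not come from fairmotion.

```python
import numpy as np


def ground_clearance_lift(ltoe_height, rtoe_height, min_clearance=0.05):
    """Per-frame amount to raise the root so the lower toe sits at min_clearance."""
    lowest = np.minimum(np.asarray(ltoe_height, dtype=float),
                        np.asarray(rtoe_height, dtype=float))
    # Frames already above the clearance need no correction.
    return np.where(lowest < min_clearance, min_clearance - lowest, 0.0)


# Made-up toe heights for three frames.
ltoe = [0.10, -0.02, 0.03]
rtoe = [0.20, 0.01, 0.04]
lift = ground_clearance_lift(ltoe, rtoe)
print(lift)
```

Each value is what the loop adds to p[2] of the root transform for that frame, so frames whose toes are already high enough are left untouched.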

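If you just want to convert ordinary SMPL-format data to bvh, you can run the following code inside the fairmotion project. However, I did not manage to get a bvh2smpl conversion working in fairmotion: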

import torch
from human_body_prior.body_model.body_model import BodyModel
from fairmotion.data import amass, bvh


def load_body_model(bm_path, num_betas=10, model_type="smplh"):
    comp_device = torch.device("cpu")
    bm = BodyModel(
        bm_path=bm_path,
        num_betas=num_betas,
        model_type=model_type
    ).to(comp_device)
    return bm


file = './smplData/DanceDB/20120731_StefanosTheodorou/Stefanos_1os_antrikos_karsilamas_C3D_poses.npz'
bm_path = '../body_models/smplh/{}/model.npz'.format('male')
num_betas = 10
model_type = 'smplh'

bm = load_body_model(bm_path, num_betas, model_type)
motion = amass.load(file, bm, bm_path)
bvh.save(motion, filename='./smplData/output.bvh')
